In this post, we will learn how to train a style transfer network with Paperspace's Gradient° and use the model in ml5.js to create an interactive style transfer mirror. This post is the second in a series of blog posts dedicated to training machine learning models in Paperspace and then using them in ml5.js. You can read the first post in this series, on how to train an LSTM network to generate text, here.

Style Transfer

Style Transfer is the technique of recomposing images in the style of other images.1

It first appeared in September 2015, when Gatys et al. published the paper A Neural Algorithm of Artistic Style. In this paper, the researchers demonstrated how deep neural networks, specifically convolutional neural networks, can develop and extract a representation of the style of an image and store this representation inside feature maps. The idea is to then use that learned style representation and apply it to another image. More specifically:

The system uses neural representations to separate and recombine content and style of arbitrary images, providing a neural algorithm for the creation of artistic images. [...] our work offers a path forward to an algorithmic understanding of how humans create and perceive artistic imagery 2

Basically, you train a deep neural network to extract a representation of an image's style. You can then apply this style (S) to a content image (C) and create a new image (CS) that has the content of C but the style of S. After Gatys et al.'s publication, other similar methods and optimizations were published. Perceptual Losses for Real-Time Style Transfer and Super-Resolution, by Johnson et al., introduced new methods for optimizing the process that are three orders of magnitude faster3 and work with high-resolution images. (You can learn more about the technical details of what the network is doing when transferring styles here, here, and in this previous Paperspace post.)
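To make the idea of a "style representation" a little more concrete: in Gatys et al.'s paper, the style of an image is summarized by the correlations between the feature maps of a layer, collected in a Gram matrix. The following is a minimal pure-Python sketch of that single computation on invented numbers. It is not the training code used in this tutorial, and the names gram_matrix and style_distance are only illustrative.

```python
def gram_matrix(feature_maps):
    """Return the Gram matrix of a list of flattened feature maps.

    feature_maps: a list of C lists, each holding the N activations of one
    channel (a feature map flattened to one dimension).
    Entry [i][j] is the dot product between channel i and channel j.
    """
    channels = len(feature_maps)
    gram = [[0.0] * channels for _ in range(channels)]
    for i in range(channels):
        for j in range(channels):
            gram[i][j] = sum(
                a * b for a, b in zip(feature_maps[i], feature_maps[j])
            )
    return gram


def style_distance(gram_a, gram_b):
    """Sum of squared differences between two Gram matrices."""
    return sum(
        (x - y) ** 2
        for row_a, row_b in zip(gram_a, gram_b)
        for x, y in zip(row_a, row_b)
    )


# Toy data: 2 channels with 4 "pixels" each (values are made up).
style_features = [[1.0, 2.0, 3.0, 4.0], [4.0, 3.0, 2.0, 1.0]]
# The same activations with the pixel order reversed in every channel.
rearranged_features = [channel[::-1] for channel in style_features]

style_gram = gram_matrix(style_features)
rearranged_gram = gram_matrix(rearranged_features)
distance = style_distance(style_gram, rearranged_gram)
```

Because the Gram matrix only records how strongly channels fire together, moving the same activations to different positions leaves it unchanged, so distance is 0.0 here. That is one way to see why this representation captures texture and color relationships rather than the layout of the content.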

"Pablo Picasso painting on glass in 1937, restyled by works from his Blue, African, and Cubist periods respectively". By Gene Kogan.

Style Transfer mirror in the browser

In this tutorial we will train a model to capture and learn the style of any image you want. We will then use this model inside the browser, with ml5.js, to create an interactive mirror that uses the webcam and applies a real-time style transfer to the captured image. Here is a demo of the final result using Chungungo Pate Factory in Tunquén, 1993 by the Chilean artist Bororo (please allow and enable your webcam):

We are running this model entirely in the browser thanks to ml5.js. If you haven't read the previous post, ml5.js is a new JavaScript library that aims to make machine learning approachable for a broad audience of artists, creative coders, and students. The library provides access to machine learning algorithms and models in the browser, building on top of TensorFlow.js with no other external dependencies.

So, we will train a model in Python using GPU acceleration thanks to Gradient°, export the model to JavaScript, and run everything in the browser with the ml5.styleTransfer() method.

Setting up

You can find the code for this project in this repository. This code is based on github.com/lengstrom/fast-style-transfer, which is a combination of Gatys' A Neural Algorithm of Artistic Style, Johnson's Perceptual Losses for Real-Time Style Transfer and Super-Resolution, and Ulyanov's Instance Normalization.

Training this algorithm requires access to the COCO Dataset. COCO is a large-scale object detection, segmentation, and captioning dataset. The version of the dataset we will be using is about 15GB in total. Fortunately, Paperspace has public datasets which you can access from your jobs so there's no need to download it. Public datasets are automatically mounted to your jobs and notebooks under the read-only /datasets directory.

Install the Paperspace Node API

We will use the Paperspace Node API or the Python API. If you don't have it installed, you can easily install it with npm:

npm install -g paperspace-node

or with Python:

pip install paperspace

(you can also install binaries from the GitHub releases page if you prefer).

Once you have created a Paperspace account you will be able to log in with your credentials from your command line:

paperspace login

Add your Paperspace email and password when prompted.

If you don't have an account with Paperspace yet, you can use this link to get $5 for free! Link: https://www.paperspace.com/&R=VZTQGMT

Training Instructions

1) Clone the repository

Start by cloning or downloading the project repository:

git clone https://github.com/Paperspace/training_styletransfer.git

cd training_styletransfer

This will be the root of our project.

2) Select a style image

Put the image you want to train the style on inside the /images folder.

3) Run your code on Gradient°

In the repository root you will find a file called run.sh. This is a script that contains the instructions we will run to train our model.

Open run.sh and modify the --style argument to point to your image:

python style.py --style images/YOURIMAGE.jpg \
  --checkpoint-dir checkpoints/ \
  --vgg-path /styletransfer/data/imagenet-vgg-verydeep-19.mat \
  --train-path /datasets/coco/ \
  --model-dir /artifacts \
  --test images/violetaparra.jpg \
  --test-dir tests/ \
  --content-weight 1.5e1 \
  --checkpoint-iterations 1000 \
  --batch-size 20

--style should point to the image you want to use. --model-dir will be the folder where the ml5.js model will be saved. --test is an image that will be used to test the process during each epoch. You can learn more about how to use all the training parameters in the original repository for this code, here and here.
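Since a job only discovers a mistyped image path after it has been uploaded and started, it can be handy to check the --style value locally first. The sketch below is a hypothetical helper (style_argument is not part of the repository) that pulls the --style value out of a script's text. It assumes the command is written in run.sh with backslash line continuations, as shown above.

```python
import shlex


def style_argument(script_text):
    """Return the value passed to --style in a shell script, or None."""
    # Join backslash-continued lines so each command sits on one line.
    joined = script_text.replace("\\\n", " ")
    for line in joined.splitlines():
        # comments=True makes shlex skip '#...' text such as a shebang line.
        tokens = shlex.split(line, comments=True)
        if "--style" in tokens:
            position = tokens.index("--style")
            if position + 1 < len(tokens):
                return tokens[position + 1]
    return None


# Example with a shortened version of the command shown above.
example_script = (
    "python style.py --style images/YOURIMAGE.jpg \\\n"
    "  --checkpoint-dir checkpoints/ \\\n"
    "  --batch-size 20\n"
)
style_path = style_argument(example_script)
print(style_path)
```

In practice you would pass in the contents of run.sh instead of the example string and confirm that the returned path exists inside the project folder before creating the job.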

Now we can start the training process! Type:

paperspace jobs create --container cvalenzuelab/styletransfer --machineType P5000 --command './run.sh' --project 'Style Transfer training'

This means we want to create a new Paperspace job using, as a base container, a Docker image that comes pre-installed with all the dependencies we will need. We also want to use a P5000 machine type, and we want to run the command ./run.sh to start the training process. The project will be called Style Transfer training.

When the training process starts you should see the following:

Uploading styletransfer.zip [========================================] 1555381/bps 100% 0.0s

New jobId: jstj01ojrollcf

Cluster: PS Jobs

Job Pending

Waiting for job to run...

Job Running

Storage Region: East Coast (NY2)

Awaiting logs...

ml5.js Style Transfer Training!

Note: This traning will take a couple of hours.

Training is starting!...

Training this model takes 2 to 3 hours on a P5000 machine. You can choose a faster GPU if you wish to speed up the process. You can also close the terminal if you wish; the training process will continue and will not be interrupted. If you log in to Paperspace.com, you can check the status of the model under the Gradient tab. You can also check it by typing:

paperspace jobs logs --tail --jobId YOUR_JOB_ID

4) Download the model

Once it finishes you should see the following in the log:

Converting model to ml5js

Writing manifest to artifacts/manifest.json

Done! Checkpoint saved. Visit https://ml5js.org/docs/StyleTransfer for more information

This means the final model is ready in the /artifacts folder of your job!

If you go to Paperspace.com, check your job under the Gradient tab, and click 'Artifacts', you will see a folder called /model. This folder contains the architecture and all the learned weights from our model. They are ported into a JSON-friendly format that web browsers can use and that ml5.js uses to load the model. Click the icon on the right to download the model or type:

paperspace jobs artifactsGet --jobId YOUR_JOB_ID

This will download the folder with our trained model. Be sure to place the downloaded model inside /ml5js_example/models.
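Before wiring the model into the example, you may want to confirm that the download is complete. The sketch below is a hypothetical check (summarize_model_dir is not part of the repository or of ml5.js). It only assumes what the training log suggests, namely that the model folder contains a manifest.json file alongside the weight files, and it makes no assumption about what the manifest entries look like.

```python
import json
from pathlib import Path


def summarize_model_dir(model_dir):
    """Check that model_dir holds a readable manifest.json and list its files."""
    model_path = Path(model_dir)
    manifest_path = model_path / "manifest.json"
    if not manifest_path.is_file():
        raise FileNotFoundError(f"No manifest.json found in {model_path}")
    with manifest_path.open(encoding="utf-8") as handle:
        # json.load raises ValueError if the file is not valid JSON.
        manifest = json.load(handle)
    other_files = sorted(
        entry.name
        for entry in model_path.iterdir()
        if entry.is_file() and entry.name != "manifest.json"
    )
    return {"manifest_entries": len(manifest), "other_files": other_files}
```

For example, calling summarize_model_dir("ml5js_example/models/YOUR_NEW_MODEL") from the project root should return a summary instead of raising an error. An empty other_files list would suggest the weights did not download.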

Now we are ready to try our model in ml5.js!

5) Using the model

In the root of our project, you will find a folder called /ml5js_example. This folder contains a very simple example of how to load a style transfer model in ml5 and use the camera as input (it uses p5.js to make this process easier). You can look at the original example and code here. For now, the only line you need to change is this one, at the top of /ml5js_example/sketch.js:

const style = new ml5.styleTransfer('./models/YOUR_NEW_MODEL');

YOUR_NEW_MODEL should be the name of the model you just downloaded.

We are almost ready to test the model. The only thing left is to start a server to view our files. If you are using Python 2:

python -m SimpleHTTPServer

If you are using Python 3:

python -m http.server
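If you would rather serve only the example folder no matter which directory you start from, the same thing can be done from a short Python 3 script. This is a minimal sketch assuming Python 3.7 or newer (needed for the directory argument and ThreadingHTTPServer). The function name make_server and the choice of serving the ml5js_example folder are assumptions made for illustration.

```python
import functools
import http.server


def make_server(directory, port=8000):
    """Create an HTTP server that serves static files from `directory`."""
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=directory
    )
    # Bind to localhost only, so the files are not exposed on the network.
    return http.server.ThreadingHTTPServer(("localhost", port), handler)
```

Calling make_server("ml5js_example").serve_forever() from the project root then serves the example on port 8000 until you stop it with Ctrl+C.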

Visit http://localhost:8000 and if everything went well you should see the demo:

A note on training and images

Try different images and hyperparameters and discover different results. Avoid geometric images or images with a lot of patterns, because those images do not hold enough recognizable features that the network can learn. This, for example, is the result of using 'Winter', a serigraph by the Chilean kinetic artist Matilde Pérez.

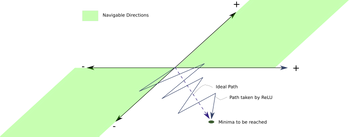

You can see that the results are not as good as in the previous example because the input image is mostly composed of a regular, repeating geometric pattern with few independent features.