
HuggingFace.co is one of the greatest resources for AI developers at every level, from hobbyists to researchers at FAANG companies, to learn about and experiment with the hottest open source AI technologies. HuggingFace offers a Git-like environment for hosting large files and datasets, through its Model and Dataset products. To support this, the site also provides infrastructure that lets users run inference on these models with its Diffusers and Transformers libraries. Most interesting to the AI education community, however, is probably the Spaces product, and here at Paperspace we are huge fans of HuggingFace's partnership with Gradio and other web application libraries to bring this awesome, free-to-use tool about.

In short, Spaces allow users to upload applications, typically written with Gradio, and run them on HuggingFace's GPU resources as publicly accessible web applications that serve a wide variety of Deep Learning models. Spaces have proven to be one of the most reliable ways to share novel AI projects with the wider community, and they have been critical to spreading open source technologies to the general public. Since most of them are built with Gradio or similar libraries, their low-code web interfaces allow users with little to no coding knowledge to use AI for whatever purpose they have in mind.

One of the few problems with this setup is popularity: each Space running on HuggingFace occupies at least one seat in their limited pool of GPUs. While they have a large set of T4 and A10 GPUs, this can leave users waiting a long time to use the most popular applications. Furthermore, these GPUs are notably weaker than some of the newer, more powerful GPUs available on cloud platforms, such as the A100-80G or H100.

In this short tutorial, we will show how to set up nearly any Gradio-based HuggingFace Space on a Paperspace Notebook. This process automates away most of the setup hassle, and lets Paperspace users take full advantage of our more powerful GPUs to run the myriad HuggingFace Spaces available. Afterwards, we will show off two of our current favorite Spaces, Seamless M4T and MagicAnimate, running on Paperspace.

Setup


The first thing we need to do is look at our HuggingFace Space of interest. In our experience, most Spaces are built with Gradio, but many are not. For example, this popular application using Nomic AI's GPT4ALL was written in JavaScript, and is not suitable for this tutorial's method.
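One quick way to check the framework before cloning: a Space's README.md starts with a YAML front matter block whose `sdk:` field names the framework (for example `gradio`, `streamlit`, `docker`, or `static`). The sketch below extracts that field. `space_sdk` is a hypothetical helper name, and the `raw/main/README.md` URL pattern used in the optional lookup is an assumption about how HuggingFace serves raw Space files.

```bash
#!/usr/bin/env bash
# Sketch: print the "sdk:" value from a Space README's YAML front matter.
# Assumes the front matter is the block between the first two "---" lines.
space_sdk() {
  # Reads the file named in $1, or stdin when no argument is given.
  awk '
    /^---[ \t]*$/ { fences++; if (fences == 2) exit; next }
    fences == 1 && /^sdk:/ { sub(/^sdk:[ \t]*/, ""); print; exit }
  ' ${1:+"$1"}
}

# Optional lookup, only when a Space id such as "owner/name" is passed in.
# The raw-file URL pattern below is an assumption, not a documented contract.
if [ $# -gt 0 ]; then
  curl -fsSL "https://huggingface.co/spaces/$1/raw/main/README.md" | space_sdk
fi
```

Saved as, say, `space_sdk.sh`, running `bash space_sdk.sh facebook/seamless_m4t` should print `gradio` for a Gradio Space; anything else suggests HF_to_PS.sh is the wrong tool for that Space.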

Let's use Seamless M4T as our example, as its setup is probably the quickest. Click the Run on Paperspace link to open a Paperspace Notebook. Keep in mind that this defaults to the Free-GPU (M4000) machine, so Growth and Pro plan users may want to switch to a more powerful machine type. Once the web page has loaded, click "Start Machine" to begin launching the Notebook.

After the setup is complete, we can begin launching the web application.
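Since the main reason to move a Space to Paperspace is GPU choice, it can be worth confirming which GPU the Notebook actually received before launching anything. A minimal sketch, assuming the machine has NVIDIA drivers and therefore `nvidia-smi` installed; `gpu_summary` is just an illustrative name:

```bash
#!/usr/bin/env bash
# Sketch: print the GPU(s) visible to this Notebook before launching a Space.
gpu_summary() {
  if command -v nvidia-smi >/dev/null 2>&1; then
    # One CSV line per GPU: model name and total memory.
    nvidia-smi --query-gpu=name,memory.total --format=csv,noheader
  else
    echo "nvidia-smi not found: no NVIDIA GPU is visible to this machine"
  fi
}

gpu_summary
```

In a notebook cell, the equivalent one-liner is `!nvidia-smi --query-gpu=name,memory.total --format=csv,noheader`.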

The HuggingFace to Paperspace Shell Script

To simplify the setup process, we have provided a useful shell script, shown below:

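```bash
#!/usr/bin/env bash
# HF_to_PS.sh: clone a Gradio HuggingFace Space and launch it with a public link.
# Usage: bash HF_to_PS.sh --f <space_url>
# (getopts expects "-f"; "--f" also works because the stray "-" is skipped
# as an unknown option before "f" is read.)

apt-get update && apt-get install git-lfs -y

while getopts ":f:" option; do
  case $option in
    f)
      url="$OPTARG"
      git-lfs clone "$url"             # clone the Space, including large LFS files
      cd "${url##*/}" || exit 1        # folder is named after the last URL segment
      pip install -U gradio transformers
      pip install -r requirements.txt  # the Space's own dependencies
      # Rewrite a bare .launch() so Gradio creates a public share link
      sed -i 's/\.launch()/.launch(share=True)/' app.py
      # --share is ignored by apps that do not parse command-line arguments
      python app.py --share
      ;;
  esac
done
```

This handles the vast majority of the launching process for us. We simply need to pass the Space's URL to the script with the --f flag. The script then clones the Space into our Notebook, updates Gradio and Transformers, installs the application's own requirements, edits the Gradio code to produce a public share link, and finally launches the application.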
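The script relies on two small string edits, which this standalone illustration reproduces on sample input: `${url##*/}` picks the folder name that the clone creates, and the sed substitution rewrites a bare `.launch()` call. The URL is the Seamless M4T Space used later; the two lines of Gradio code are made up for illustration. The second sample line also shows a limitation: a launch call that already has arguments is left unchanged, so such a Space may not get a public link from this step without a manual edit to app.py.

```bash
#!/usr/bin/env bash
# Illustration of the two string edits HF_to_PS.sh relies on.

url="https://huggingface.co/spaces/facebook/seamless_m4t"

# ${url##*/} deletes the longest prefix ending in "/", leaving the last path
# segment, which is also the directory name that the clone creates.
space_dir="${url##*/}"
echo "$space_dir"        # seamless_m4t

# The sed expression only rewrites a bare ".launch()" call.
printf '%s\n' 'demo.queue().launch()' 'demo.launch(server_port=7860)' \
  | sed 's/\.launch()/.launch(share=True)/'
# demo.queue().launch(share=True)
# demo.launch(server_port=7860)
```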

Put together in the notebook file run_any_space.ipynb, this gives low-code users a very quick way to launch HuggingFace Spaces in a Paperspace Notebook. Executing the shell script looks like this:

```
!bash HF_to_PS.sh --f <paste_name_of_repo_here>
```

Here, `<paste_name_of_repo_here>` should be replaced with the full URL of the Space. If everything goes correctly, the script will automate all of the preparation and launch the application for us. Before we look at those examples, let's discuss the times we may run into complications with the script, and try to identify quick solutions.
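A simple complication to rule out first is the argument itself. Because the script clones whatever follows --f and then changes into its last path segment, passing only a repo name (such as `facebook/seamless_m4t`) makes the clone fail, and a URL with a trailing slash leaves the folder name empty. A small pre-flight check could look like the sketch below; `is_space_url` is a hypothetical helper, and the accepted pattern is an assumption based on URLs of the form `https://huggingface.co/spaces/<owner>/<space>`.

```bash
#!/usr/bin/env bash
# Sketch: check that an argument looks like a full Space URL before passing
# it to HF_to_PS.sh. The accepted pattern is an assumption, not a spec.
is_space_url() {
  printf '%s\n' "$1" |
    grep -Eq '^https://huggingface\.co/spaces/[^/[:space:]]+/[^/[:space:]]+$'
}

for candidate in \
  "https://huggingface.co/spaces/facebook/seamless_m4t" \
  "facebook/seamless_m4t" \
  "https://huggingface.co/spaces/facebook/seamless_m4t/"; do
  if is_space_url "$candidate"; then
    echo "ok:     $candidate"
  else
    echo "reject: $candidate"
  fi
done
```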

Edge cases for HF_to_PS.sh

While we have emphasized easy setup throughout this article, there are situations where setup is not so simple. This isn't limited to non-Gradio applications: the install process can also create dependency conflicts that the application cannot resolve.

The first example of this is actually one of the Spaces we chose for this tutorial: MagicAnimate. If we run the application with the unaltered shell script, we get a conflict with the ProtoBuf version: the application requires version 3.19.0 or lower to work, but its requirements.txt file lists 3.24.0. To remedy this, we need to manually edit the ProtoBuf entry in requirements.txt after the initial run of the bash script. That initial run will fail, but the clone will succeed, so the requirements file will be available to edit. We recommend stopping the run with the Stop button at the top left corner of the cell once the clone completes and the installs begin. If this is done correctly, subsequent runs will work completely.
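The manual edit can also be scripted, which helps if the Space is set up more than once. The sketch below is a hypothetical helper, not part of HF_to_PS.sh: it replaces an existing line for a package in requirements.txt, or appends one if the package is missing. It assumes GNU sed (for `-i -E`) and a plain `name==version` style file; pinning ProtoBuf to `==3.19.0` is simply one choice within the "3.19.0 or lower" constraint above, and the `magicanimate` folder name follows from the script's `${url##*/}` step.

```bash
#!/usr/bin/env bash
# Sketch: pin one package in a requirements.txt, replacing any existing line
# for that package or appending one if it is missing. Assumes GNU sed.
pin_requirement() {
  local req_file="$1" pkg="$2" spec="$3"   # e.g. magicanimate/requirements.txt protobuf "==3.19.0"
  # A line "belongs" to pkg if the name is followed by a non-name character or EOL.
  if grep -qE "^${pkg}([^A-Za-z0-9_.-]|\$)" "$req_file"; then
    sed -i -E "s/^${pkg}([^A-Za-z0-9_.-].*)?\$/${pkg}${spec}/" "$req_file"
  else
    # Make sure the file ends with a newline before appending a new line.
    if [ -s "$req_file" ] && [ -n "$(tail -c 1 "$req_file")" ]; then
      echo >> "$req_file"
    fi
    echo "${pkg}${spec}" >> "$req_file"
  fi
}

# Run only when called with exactly three arguments.
if [ $# -eq 3 ]; then
  pin_requirement "$@"
fi
```

After the first, stopped run has cloned MagicAnimate, a cell such as `!bash pin_requirement.sh magicanimate/requirements.txt protobuf "==3.19.0"` followed by rerunning HF_to_PS.sh should apply the fix. On that rerun the clone step reports that the folder already exists, but the script carries on with the installs.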

Other times, packages like Diffusers, Gradio, or Transformers may require different versions. The script automatically updates Gradio and Transformers to try to ameliorate this, but a conflict can still occur. The conflict is usually displayed prominently in red in the error output, which makes it simple to correct: once we identify the missing or incorrect package, we can add it, with the version it needs, to the requirements.txt file. This works for packages other than those listed, too.
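Spotting that error text is easier when the install output is saved and filtered. A minimal sketch, assuming pip's usual convention of starting error lines with `ERROR:`; `pip_errors` is a hypothetical helper name, and showing three lines of trailing context is an arbitrary choice, made because pip often names the conflicting packages on the lines after the error itself.

```bash
#!/usr/bin/env bash
# Sketch: show only pip's error lines (plus a little context) from a saved log.
pip_errors() {
  grep -E -A 3 '^ERROR:' "$1"
}

# Hypothetical usage from inside the cloned Space directory:
#   pip install -r requirements.txt 2>&1 | tee install.log
#   pip_errors install.log
#   pip check   # lists installed packages whose requirements are not satisfied
```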

Demos: Seamless M4T

Seamless M4T is a foundational ASR model from Meta for rapid speech translation. What makes Seamless so exciting? Speech to speech translation, the holy grail of ASR technologies, makes a huge leap forward with Seamless M4T. We will definitely cover this incredible model in more detail in the future, but for now let's look at how we can use HF_to_PS.sh to launch the Seamless M4T Gradio demo from HuggingFace Spaces.

To run Seamless M4T in a Paperspace Notebook, click the link at the top of this article to spin up a Notebook with our GitHub repo as its working directory. We first need to install gradio==3.50.2 to make sure our app works, as this application is inexplicably unable to work with the 4.x versions of Gradio. Paste the following into a code cell:

```
!pip install gradio==3.50.2
```

After that's done, open the run_any_space.ipynb file and paste the Space's URL where indicated, to get a code cell like the one below. Run it to start Seamless M4T, then click the public link to open it.

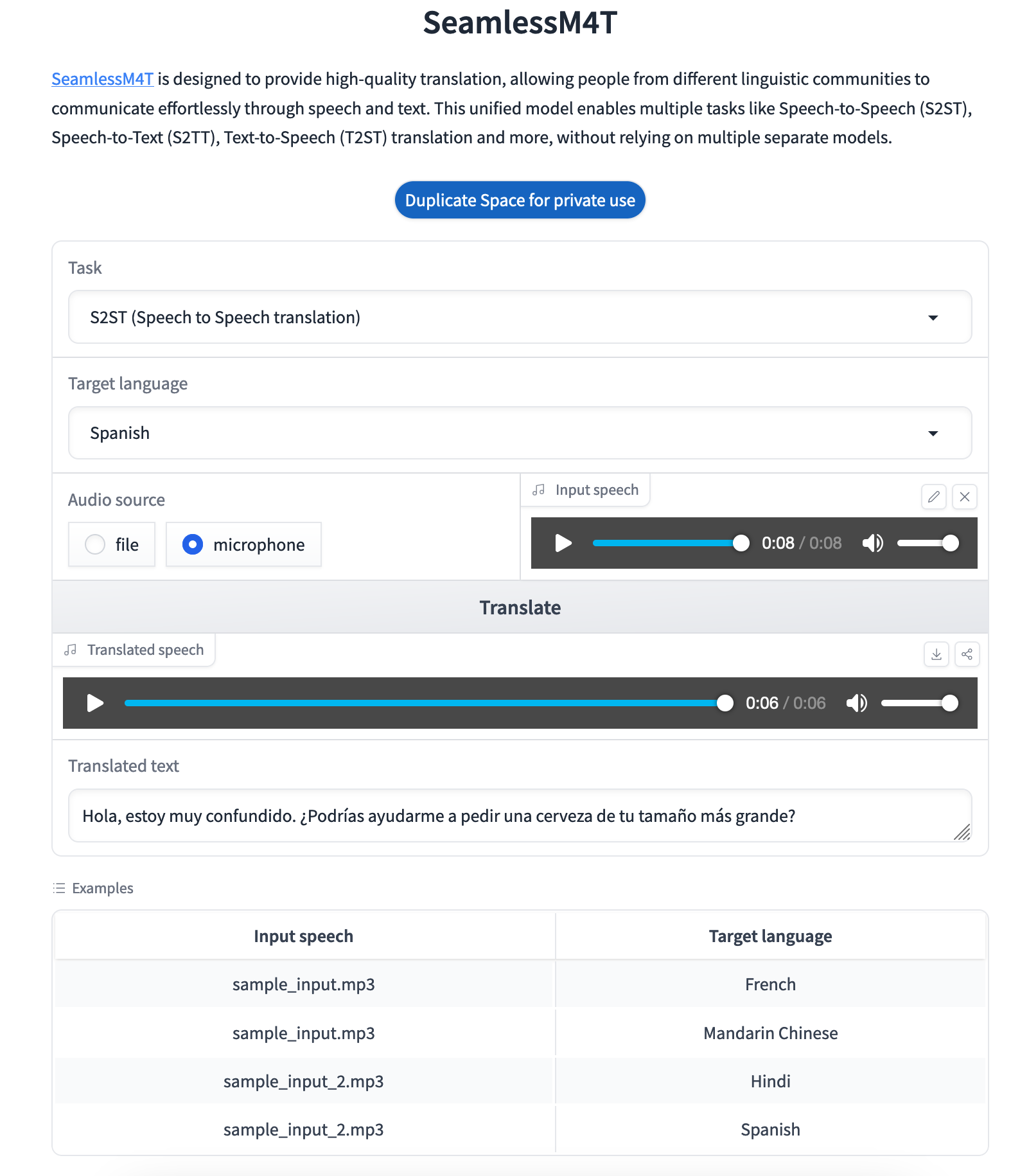

```
!bash HF_to_PS.sh --f https://huggingface.co/spaces/facebook/seamless_m4t
```

Once the demo is running, we are met with the demo homepage. Here we can select our task (speech to speech translation is the default) and the target language for our output, and then upload our own audio files or record live with a microphone. Below is an example we made using our own voice.

English to Spanish speech to speech.

As we can hear and see, the translation was very successful. As Spanish speakers ourselves, we can confirm that the translation was correct, and the generated speech sounded very realistic. Given how quickly this was generated from the sample, we are very much looking forward to the near future when Star Trek-like handheld translators are available to the masses.
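If the Seamless M4T demo errors at launch instead, one thing worth checking is which Gradio version actually ended up installed. HF_to_PS.sh runs `pip install -U gradio transformers`, which can upgrade a Gradio 3.50.2 installed beforehand to the newest release. The sketch below tests whether a version string is below 4.0.0; `gradio_below_4` is a hypothetical helper, and the fallback of adding the pin to the Space's requirements.txt (which the script installs after the upgrade step) assumes that file does not already pin Gradio.

```bash
#!/usr/bin/env bash
# Sketch: succeed only if a version string has a major version below 4.
gradio_below_4() {
  major="${1%%.*}"                 # "3.50.2" -> "3"
  case "$major" in
    ''|*[!0-9]*) return 1 ;;       # empty or non-numeric: treat as a failure
  esac
  [ "$major" -lt 4 ]
}

# Hypothetical usage in the Notebook after HF_to_PS.sh has installed packages:
#   v=$(pip show gradio | awk '/^Version:/ {print $2}')
#   gradio_below_4 "$v" || echo "gradio==3.50.2" >> seamless_m4t/requirements.txt
# then rerun the HF_to_PS.sh cell.
```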

Demos: MagicAnimate

MagicAnimate is our favorite of the new video generation models out there. While it requires a more rigid skeleton than projects like MoonValley, MagicAnimate produces the smoothest results available to open source users, and gives a far greater degree of control over generation than its competitors.

To run MagicAnimate, we just need to run the code cell below. Remember to edit the requirements file following the instructions in the second paragraph of the "Edge cases for HF_to_PS.sh" section of this article.

```
!bash HF_to_PS.sh --f https://huggingface.co/spaces/zcxu-eric/magicanimate
```

We can then input any square image of a person, along with a DensePose sequence extracted using Detectron2, to generate an animation that alters only the initial figure's positioning to create the appearance of movement. Here is an example we made using a photo of the author of this article and a sample from the demo's DensePose examples.

As we can see, it did a decent job of maintaining the background objects and the subject's clothing. It struggled with the bright yellow lighting and got the hair color wrong, making it appear somewhat blonde in the video, and the facial structure is completely different. However, the movement is as crisp as any Stable Diffusion based video generator we have seen yet.

Check back in the new year for a complete demo on this awesome model.

Closing Thoughts

HuggingFace is an incredible resource for AI coders. Its only real limitations are its popularity and its relatively small selection of GPU options. HF_to_PS.sh offers a very tangible way to resolve these problems, using Paperspace's wider variety of GPUs to better meet the needs of these applications. Try out lots of Gradio HuggingFace apps on Paperspace!