Introduction

In this ever-changing era of technology, artificial intelligence (AI) is driving innovation and transforming industries. Among the many advances within AI, AI agents are reshaping how businesses operate, improving user experiences, and automating complex tasks.

AI agents are software entities capable of performing specific tasks autonomously. They now appear in a wide range of applications, from customer service chatbots to advanced data analysis tools and finance agents.

In this article, we will build a basic AI agent. Along the way, we will explore what AI agents are, how they work, and the frameworks that support their creation and deployment. Specifically, we will look at LangGraph and Ollama, two tools that simplify building AI agents that run locally. By the end of this guide, you should understand how to combine these technologies to build agents tailored to your own needs.

Understanding AI Agents

AI agents are entities or systems that perceive their environment and take actions to achieve specific goals or objectives. These agents can range from simple algorithms to sophisticated systems capable of complex decision-making. Here are some key points about AI agents:

- Perception: AI agents use sensors or input mechanisms to perceive their environment. This could involve gathering data from various sources such as cameras, microphones, or other sensors.

- Reasoning: AI agents receive information and use algorithms and models to process and interpret data. This step involves understanding patterns, making predictions, or generating responses.

- Decision-making: Based on their perception and reasoning, AI agents decide on actions or outputs. These decisions aim to achieve goals that are either defined by their programming or learned during training. In many applications, AI agents act as assistants to humans rather than replacing them.

- Actuation: AI agents execute actions based on their decisions. This could involve physical actions in the real world (like moving a robot arm) or virtual actions in a digital environment (like making recommendations in an app).
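The four stages above can be sketched as a simple control loop. The example below is a toy, hypothetical thermostat agent that is not taken from any library. On each step it:

- perceives a temperature reading,
- reasons about the gap to its goal,
- decides on an action,
- actuates by changing a simulated environment.

```python
from dataclasses import dataclass


@dataclass
class Room:
    """Hypothetical simulated environment: a room with a temperature."""
    temperature: float


class ThermostatAgent:
    """Toy agent illustrating perceive -> reason -> decide -> act."""

    def __init__(self, goal: float, tolerance: float = 0.5) -> None:
        self.goal = goal
        self.tolerance = tolerance

    def perceive(self, room: Room) -> float:
        # Perception: read a "sensor" value from the environment.
        return room.temperature

    def reason(self, reading: float) -> float:
        # Reasoning: interpret the reading as an error relative to the goal.
        return self.goal - reading

    def decide(self, error: float) -> str:
        # Decision-making: choose an action that moves toward the goal.
        if error > self.tolerance:
            return "heat"
        if error < -self.tolerance:
            return "cool"
        return "idle"

    def act(self, action: str, room: Room) -> None:
        # Actuation: change the environment according to the decision.
        if action == "heat":
            room.temperature += 1.0
        elif action == "cool":
            room.temperature -= 1.0


def run(agent: ThermostatAgent, room: Room, max_steps: int = 20) -> list[str]:
    """Run the loop until the agent idles or the step budget runs out."""
    actions = []
    for _ in range(max_steps):
        action = agent.decide(agent.reason(agent.perceive(room)))
        actions.append(action)
        if action == "idle":
            break
        agent.act(action, room)
    return actions


room = Room(temperature=18.0)
print(run(ThermostatAgent(goal=21.0), room), room.temperature)
# ['heat', 'heat', 'heat', 'idle'] 21.0
```

Real agents replace each method with something richer, such as sensors, ML models, or an LLM, but the loop structure stays the same.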

Healthcare offers an example of AI agents in action. Such agents analyze patient data from sources such as medical records, test results, and real-time monitoring devices. They then use this data to support decisions, such as estimating the likelihood that a patient will develop a specific condition. They can also recommend personalized treatment plans based on the patient's medical history and current health status. For instance, AI agents might help doctors diagnose diseases earlier by detecting subtle patterns in medical imaging data. They might also suggest adjustments to medication dosages based on real-time physiological data.

Difference Between AI Agents and RAG Applications

RAG (Retrieval-Augmented Generation) applications and AI agents refer to different concepts within artificial intelligence.

RAG improves the output of large language models (LLMs) by incorporating information retrieval. A retrieval system first searches a large corpus for documents or passages relevant to the input query. A generative model (e.g., a transformer-based language model) then uses the retrieved information to produce more accurate and contextually relevant responses. Because new knowledge is supplied at query time, RAG reduces the need to fine-tune or retrain an LLM on new data.
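The retrieve-then-generate flow can be sketched in a few lines. This is a minimal illustration under two simplifying assumptions:

- Retrieval is plain word-overlap scoring over an in-memory list. Real systems typically use vector embeddings and a vector store.
- Instead of calling an LLM, the sketch stops at building the augmented prompt that would be sent to one.

```python
import re

# Tiny illustrative corpus; a real system would index many documents.
CORPUS = [
    "Ollama runs large language models on your local machine.",
    "LangGraph builds stateful, multi-actor applications with LLMs.",
    "Tavily is a search API optimized for LLM agents.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for an embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: list[str], k: int = 1) -> list[str]:
    """Retrieval step: return the k documents sharing the most words with the query."""
    q = tokenize(query)
    ranked = sorted(corpus, key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, corpus: list[str], k: int = 1) -> str:
    """Augmentation step: prepend retrieved context to the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, corpus, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# The resulting string is what the generative model would receive.
print(build_prompt("How do I run models locally?", CORPUS))
```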

AI agents, on the other hand, are autonomous software entities designed to perform a specific task or a series of tasks. They operate based on predefined rules, machine learning models, or both. They often interact with users or other systems to gather inputs, provide responses, or execute actions. Some agents improve over time by learning from new data and experience. Because agents can handle multiple tasks in parallel, they also offer scalability for businesses.

| Aspect | RAG | AI Agent |
|---|---|---|
| Definition | A technique that improves the performance of generative models by incorporating information retrieval methods | An autonomous entity (e.g., an AI personal assistant) that can perform tasks and make decisions |
| Components | Retrieval system + generative model | Rule-based systems, machine learning models, or a combination of AI techniques |
| Benefits | Improved accuracy and relevance; leverages external data | Improved versatility and adaptability |
| Applications | Question answering, customer support, content generation | Virtual assistants, autonomous vehicles, recommendation systems |
| Key strength | Leverages large external datasets to enhance generated responses without training the generative model on all that data | Interacts with users and adapts to changing requirements or environments |
| Example | A chatbot that retrieves relevant FAQs or knowledge base articles to answer user queries more effectively | A recommendation engine that suggests products or content based on user preferences and behavior |

In summary, RAG applications are specifically designed to enhance the capabilities of generative models by incorporating retrieval mechanisms; AI agents are broader entities intended to perform a wide array of tasks autonomously.

Brief Overview of LangGraph

LangGraph is a powerful library for building stateful, multi-actor applications using large language models (LLMs). It helps create complex workflows involving single or multiple agents, offering critical advantages like cycles, controllability, and persistence.

Key Benefits:

- Cycles and Branching: Unlike other frameworks that use simple directed acyclic graphs (DAGs), LangGraph supports loops and conditionals, essential for creating sophisticated agent behaviors.

- Fine-grained control: As a low-level framework, LangGraph gives you detailed control over both the flow and the state of your application. This makes it well suited to building reliable agents.

- Persistence: It includes built-in persistence, allowing you to save the state after each step, pause and resume execution, and support advanced features like error recovery and human-in-the-loop workflows.

Features:

- Cycles and Branching: Implement loops and conditionals in your apps.

- Persistence: Automatically save state after each step, supporting error recovery.

- Human-in-the-Loop: Interrupt execution for human approval or edits.

- Streaming Support: Stream outputs as each node produces them.

- Integration with LangChain: Seamlessly integrates with LangChain and LangSmith but can also be used independently.

LangGraph is inspired by technologies like Pregel and Apache Beam, with a user-friendly interface similar to NetworkX. Developed by LangChain Inc., it offers a robust tool for building reliable, advanced AI-driven applications.

Quick Introduction to Ollama

Ollama is an open-source project that makes running LLMs on your local machine easy. It hides much of the complexity of LLM tooling. This makes models accessible and customizable for users who want to use AI without extensive technical expertise.

It is easy to install, offers a selection of models, and provides a broad set of features designed to improve the user experience.

Key Features:

- Local Deployment: Run sophisticated LLMs directly on your local machine, ensuring data privacy and reducing dependency on external servers.

- User-Friendly Interface: Designed to be intuitive and easy to use, making it accessible for users with varying levels of technical knowledge.

- Customizability: Customize models to meet your specific needs (for example, with a Modelfile that sets a system prompt and generation parameters), whether for research, development, or personal projects.

- Open Source: Being open-source, Ollama encourages community contributions and continuous improvement, fostering innovation and collaboration.

- Effortless Installation: Ollama offers a simple, hassle-free installation process on Windows, macOS, and Linux. We have written an article on downloading and using Ollama; see the blog linked in the resources section.

- Ollama Community: Ollama has a vibrant, active open-source community that fosters collaboration and innovation and contributes to its development, tools, and integrations.
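Once Ollama is running, it serves a local REST API (by default at `http://localhost:11434`) that any language can call. The sketch below uses only the Python standard library to send one non-streaming request to Ollama's `/api/chat` endpoint. It assumes the server is running on the default address and that the `mistral` model has already been pulled.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # default local address


def build_chat_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming chat request for Ollama's /api/chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for a single JSON object instead of a stream
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(model, prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["message"]["content"]


# Example (requires a running Ollama server):
# print(chat("mistral", "Explain artificial intelligence in one sentence."))
```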

A Step-by-Step Guide to Creating an AI Agent Using LangGraph and Ollama


In this demo, we will create a simple example of an agent using the Mistral model. This agent can search the web using the Tavily Search API and generate responses.

We will start by installing LangGraph, a library for building stateful, multi-actor applications with LLMs that is well suited to agent and multi-agent workflows. Inspired by Pregel, Apache Beam, and NetworkX, LangGraph is developed by LangChain Inc. and can be used independently of LangChain.

We will use Mistral as our LLM, served locally through Ollama, together with Tavily's Search API. Tavily's API is optimized for LLMs and aims to provide a factual, efficient, and persistent search experience.

In a previous article, we showed how to run Qwen2 with Ollama. Please follow that linked article to install Ollama and learn how to run LLMs with it.

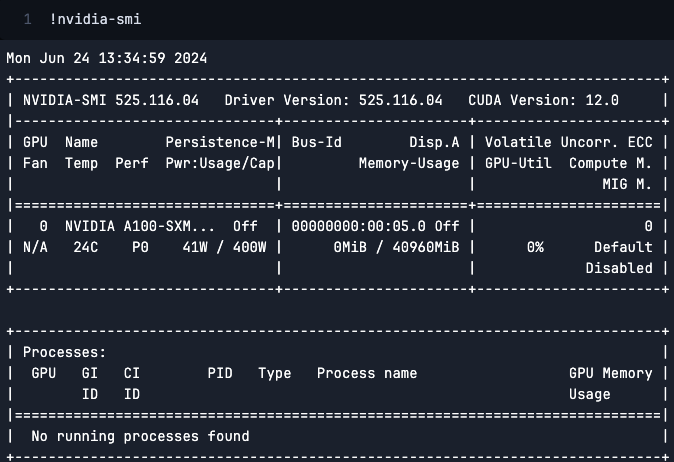

Before we begin the installation, let us check our GPU. For this tutorial, we use an NVIDIA A100 GPU provided by Paperspace.

The NVIDIA A100 GPU is a powerhouse designed for AI, data analytics, and high-performance computing. Built on the NVIDIA Ampere architecture, it offers unprecedented acceleration and scalability. Key features include multi-instance GPU (MIG) technology for optimal resource utilization, tensor cores for AI and machine learning workloads, and support for large-scale deployments. The A100 excels in training and inference, making it ideal for various applications, from scientific research to enterprise AI solutions.

Open a terminal and run the command below to check your GPU configuration.

```bash
nvidia-smi
```
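If you prefer to check the GPU from Python (for example, at the top of a notebook), `nvidia-smi` also has a machine-readable query mode. The sketch below calls `nvidia-smi --query-gpu=name,memory.total --format=csv,noheader` and parses each CSV line. It returns an empty list when `nvidia-smi` is not on the `PATH`, for example on a CPU-only machine.

```python
import shutil
import subprocess


def parse_gpu_csv(text: str) -> list[dict[str, str]]:
    """Parse 'name, memory.total' CSV lines produced by nvidia-smi."""
    gpus = []
    for line in text.strip().splitlines():
        name, memory = (field.strip() for field in line.split(",", 1))
        gpus.append({"name": name, "memory_total": memory})
    return gpus


def query_gpus() -> list[dict[str, str]]:
    """Return one dict per visible NVIDIA GPU, or [] if nvidia-smi is missing."""
    if shutil.which("nvidia-smi") is None:
        return []
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
    ).stdout
    return parse_gpu_csv(out)


print(query_gpus())
```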

Now, we will start with our installations.

```bash
pip install -U langgraph
pip install -U langchain-nomic langchain_community tiktoken langchainhub chromadb langchain langgraph tavily-python
pip install langchain-openai
```
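LangChain and LangGraph APIs change between releases. To confirm that the installations succeeded, and to record which versions you are running, you can query the installed distributions with the standard library's `importlib.metadata`. The package list below mirrors the main packages installed above.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional

# Distribution names as used with pip (the main packages installed above).
PACKAGES = ["langgraph", "langchain", "langchain-community", "langchain-openai", "tavily-python"]


def installed_versions(packages: list[str]) -> dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if missing."""
    versions: dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = version(name)
        except PackageNotFoundError:
            versions[name] = None
    return versions


for name, ver in installed_versions(PACKAGES).items():
    print(f"{name:22} {ver or 'NOT INSTALLED'}")
```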

After completing the installations, the next step is to provide the Tavily API key. Sign up for Tavily, generate an API key, and export it as an environment variable:

```bash
export TAVILY_API_KEY="apikeygoeshere"
```
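`export` only sets the variable for the current shell session, so a notebook or script started elsewhere may not see it. A small guard like the one below fails early with a clear message instead of surfacing an authentication error from deep inside the agent. `require_env` is a hypothetical helper, not part of any library.

```python
import os


def require_env(name: str) -> str:
    """Return a non-empty environment variable or raise an explanatory error."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(
            f"{name} is not set. Export it in the shell that launches Python, "
            f"or assign os.environ[{name!r}] before creating the Tavily tool."
        )
    return value


# Example: call this before building the tools.
# tavily_key = require_env("TAVILY_API_KEY")
```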

Next, run the command below to download the model. You can also try this with Llama or another Mistral variant.

```bash
ollama pull mistral
```
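After pulling, you can confirm the model is available, either with `ollama list` or programmatically. Ollama's `/api/tags` endpoint lists the locally installed models. The sketch below assumes the server is on the default `localhost:11434`. Model names come back with a tag such as `mistral:latest`, so the check also accepts a match on the part before the colon.

```python
import json
import urllib.request

TAGS_URL = "http://localhost:11434/api/tags"


def has_model(tags: dict, model: str) -> bool:
    """True if `model` (with or without a tag) appears in an /api/tags response."""
    for entry in tags.get("models", []):
        name = entry.get("name", "")
        if name == model or name.split(":", 1)[0] == model:
            return True
    return False


def fetch_tags(url: str = TAGS_URL) -> dict:
    """Fetch the list of locally available models from a running Ollama server."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


# Example (requires a running Ollama server):
# print(has_model(fetch_tags(), "mistral"))
```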

Import all the necessary libraries required to build the agent.

```python
from langchain import hub
from langchain_community.tools.tavily_search import TavilySearchResults
from langchain_openai import ChatOpenAI
from langgraph.prebuilt import create_react_agent
```

We will start by defining the tools we want the agent to use. For this simple example, we use a built-in search tool via Tavily.

There is no need to call `llm.bind_tools(tools)` ourselves, and `llm` is not defined yet at this point. `create_react_agent` binds the tools to the model when we build the agent.

```python
# Web search tool; reads TAVILY_API_KEY from the environment.
tools = [TavilySearchResults(max_results=3)]
```

The snippet below pulls a prompt template from the LangChain Hub and prints it in a readable format. You can use this template as is or modify it for your application.

```python
prompt = hub.pull("wfh/react-agent-executor")
prompt.pretty_print()
```

Next, we configure Mistral, served locally by Ollama, as our LLM.

```python
llm = ChatOpenAI(
    model="mistral",
    api_key="ollama",  # required by the client, ignored by Ollama
    base_url="http://localhost:11434/v1",  # Ollama's OpenAI-compatible endpoint
)
```
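Here `ChatOpenAI` is not talking to OpenAI at all. Ollama exposes an OpenAI-compatible API under `/v1`, so pointing `base_url` at `http://localhost:11434/v1` routes requests to the local Mistral model.

To show what that means on the wire, the standard-library sketch below builds the same kind of `/v1/chat/completions` request directly. It assumes a running Ollama server on the default port. The `Authorization` header only mirrors what an OpenAI client sends; Ollama ignores the key.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434/v1"


def build_completion_request(model: str, prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for Ollama's /v1 API."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer ollama",  # required by OpenAI clients, ignored by Ollama
        },
        method="POST",
    )


def complete(model: str, prompt: str) -> str:
    """Send the request and return the first choice's message content."""
    with urllib.request.urlopen(build_completion_request(model, prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Example (requires a running Ollama server with mistral pulled):
# print(complete("mistral", "What is LangGraph?"))
```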

Finally, we create an agent executor from our language model (`llm`), the tools (`tools`), and the prompt template (`prompt`). The resulting agent decides when to call the tools and generates responses guided by the prompt.

```python
# `messages_modifier` is the parameter name used when this article was written.
# Newer LangGraph releases rename it (to `state_modifier`, and later `prompt`),
# so check the signature of create_react_agent in the version you installed.
agent_executor = create_react_agent(llm, tools, messages_modifier=prompt)
```
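`create_react_agent` builds a loop in the ReAct style. On each turn, the model either requests a tool call or returns a final answer. Tool results are appended to the message history, and the loop repeats.

The dependency-free sketch below illustrates that control flow only. The `fake_model` policy and `search` tool are hypothetical stand-ins for Mistral and Tavily. The real prebuilt agent is implemented as a LangGraph graph with message objects rather than plain dicts.

```python
from typing import Callable

Message = dict  # {"role": ..., "content": ...} or a tool request with "tool"/"args"


def search(query: str) -> str:
    """Hypothetical tool standing in for a web search."""
    return f"Top result for '{query}': AI is the study of intelligent agents."


TOOLS: dict[str, Callable[[str], str]] = {"search": search}


def fake_model(messages: list[Message]) -> Message:
    """Hypothetical LLM policy: search once, then answer from the tool output."""
    if messages[-1]["role"] == "user":
        return {"role": "assistant", "tool": "search", "args": messages[-1]["content"]}
    return {"role": "assistant", "content": f"Based on my search: {messages[-1]['content']}"}


def react_loop(question: str, max_steps: int = 5) -> list[Message]:
    """Alternate model calls and tool calls until the model answers."""
    messages: list[Message] = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = fake_model(messages)  # reason: decide on a tool call or an answer
        messages.append(reply)
        if "tool" not in reply:  # no tool requested: this is the final answer
            return messages
        result = TOOLS[reply["tool"]](reply["args"])  # act: run the requested tool
        messages.append({"role": "tool", "content": result})  # observe
    return messages


for m in react_loop("explain artificial intelligence"):
    print(m)
```

The `max_steps` budget plays the same role as LangGraph's recursion limit: it stops a model that keeps requesting tools from looping forever.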

Running `prompt.pretty_print()` earlier prints the template. It consists of a system message and a placeholder for the conversation messages:

```text
================================ System Message ================================

You are a helpful assistant.

============================= Messages Placeholder =============================

{{messages}}
```

The code below sends a query to the agent executor and receives a response. The agent uses its configured language model (Mistral in this case), tools, and prompt to process the message and generate an appropriate reply.

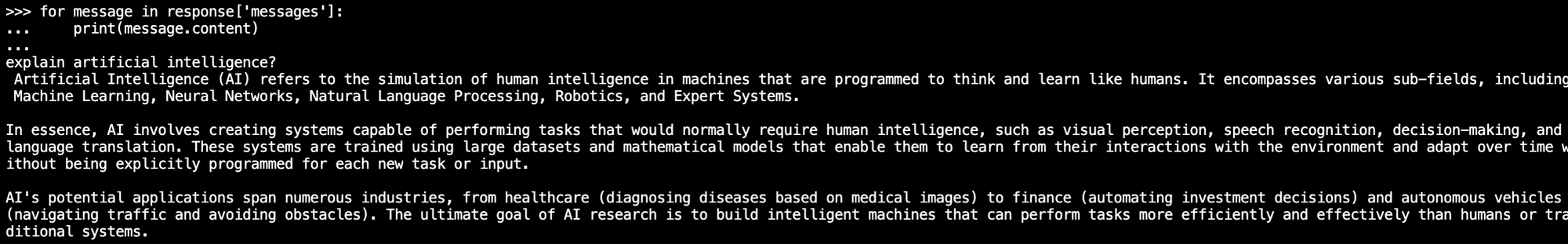

response = agent_executor.invoke({"messages": [("user", "explain artificial intelligence")]})

for message in response['messages']:

print(message.content)and this will generate the below response.

Conclusion

LangGraph and Ollama represent a significant step forward in developing and deploying local AI agents. LangGraph's modular, graph-based architecture orchestrates the various AI components. With it, developers can create versatile, scalable solutions that are efficient and adapt to changing needs.

As this article describes, AI agents offer a flexible approach to automating tasks and improving productivity. They can be customized to handle functions ranging from simple task automation to complex decision-making, which makes them valuable tools for modern businesses.

Ollama complements LangGraph by serving open models such as Mistral locally, so the agent's LLM calls run on your own hardware.

In summary, combining LangGraph and Ollama provides a robust framework for building effective, efficient AI agents. We hope this guide helps you put these technologies to work in your own projects as the field of artificial intelligence continues to evolve.

We hope you enjoyed reading this article!