Decision Transformers are a new type of machine learning model that combines transformers with reinforcement learning, opening up new avenues of research and application.

Recently, Hugging Face posted about these on their blog, and in this article we will show how to get started working with them on Paperspace Gradient.

You can try them out for yourself by running a Gradient Notebook with https://github.com/gradient-ai/Decision-Transformers as your Workspace URL in the advanced options.

What are Decision Transformers?

Decision transformers combine the ability of transformers to work with sequential data (as in natural language processing) with the ability of reinforcement learning (RL) to iteratively improve the behavior of an intelligent agent within an environment.

The problem to be solved by RL is cast as a sequence of steps, which are then used to train the transformer. This means that the agent does not have to be in the environment to learn, which can save much of the compute power that RL otherwise needs.

Because the agent is not in the environment while being trained, this is called offline reinforcement learning.
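To make "cast as a sequence of steps" more concrete, here is a minimal sketch. It assumes the return-to-go formulation described in the original paper, where each timestep contributes the reward still to be collected, the state, and the action. The function names and the toy episode are hypothetical and are not taken from the notebook.

```python
from typing import List, Sequence, Tuple


def returns_to_go(rewards: Sequence[float]) -> List[float]:
    """For each timestep, sum the rewards from that step to the end of the episode."""
    rtg: List[float] = []
    running = 0.0
    for reward in reversed(rewards):
        running += reward
        rtg.append(running)
    rtg.reverse()
    return rtg


def to_sequence(
    states: Sequence[object],
    actions: Sequence[object],
    rewards: Sequence[float],
) -> List[Tuple[str, object]]:
    """Interleave (return-to-go, state, action) for every timestep of one episode."""
    if not (len(states) == len(actions) == len(rewards)):
        raise ValueError("states, actions and rewards must have the same length")
    tokens: List[Tuple[str, object]] = []
    for rtg, state, action in zip(returns_to_go(rewards), states, actions):
        tokens.extend([("return_to_go", rtg), ("state", state), ("action", action)])
    return tokens


# A toy logged episode with made-up values; real data would be recorded
# trajectories with numeric state and action vectors.
states = ["s0", "s1", "s2"]
actions = ["a0", "a1", "a2"]
rewards = [1.0, 0.0, 2.0]

sequence = to_sequence(states, actions, rewards)
for kind, value in sequence:
    print(kind, value)
```

Because the sequence is built entirely from logged data, nothing here needs a live environment, which is the point of the offline setting.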

In regular RL, the agent learns the actions to take within its environment to maximize its future reward. With a decision transformer, the model looks at the information cast as a sequence, and uses this to generate the future sequence of actions it should take.

In other words, we are using what a transformer is good at (generating future sequences) to get what we want from RL: an agent that gains reward from its environment.
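The generation step can be sketched as a loop in which the model is asked for the next action given a target return, and the target is reduced by each reward received. This is an illustrative sketch only: `stub_policy` is a hypothetical stand-in for a trained transformer, and `toy_env_step` is a made-up one-dimensional environment, neither of which comes from the notebook.

```python
from typing import Callable, List, Tuple

# One history entry per timestep: (return_to_go, state, action)
History = List[Tuple[float, float, float]]


def stub_policy(history: History, rtg: float, state: float) -> float:
    """Stand-in for the trained model: move forward while more return is requested."""
    return 1.0 if rtg > 0 else 0.0


def toy_env_step(state: float, action: float) -> Tuple[float, float]:
    """Hypothetical environment: the reward equals the distance moved."""
    next_state = state + action
    reward = action
    return next_state, reward


def rollout(
    policy: Callable[[History, float, float], float],
    target_return: float,
    max_steps: int = 10,
) -> Tuple[float, History]:
    """Generate actions one at a time, conditioning on the remaining return-to-go."""
    state = 0.0
    rtg = target_return
    history: History = []
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(history, rtg, state)
        history.append((rtg, state, action))
        state, reward = toy_env_step(state, action)
        total_reward += reward
        rtg -= reward  # the reward still to be collected shrinks as we go
    return total_reward, history


total, history = rollout(stub_policy, target_return=3.0)
print(total)
```

In this toy setup the stub stops moving once the requested return has been collected. A real decision transformer would replace `stub_policy` with a forward pass over the recent history of returns-to-go, states, and actions.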

Decision transformers thus represent a convergence between transformers and RL, an exciting prospect that could combine the powers of both.

For more about decision transformers, see the Hugging Face blog entry, or the original paper.

How do you run them on Paperspace?

We recently released a major update of our Notebooks, and it is now even easier to run machine learning content with GPUs, either at a small scale for a demo, or much larger scales for solving real problems.

Running on Paperspace takes a few more steps than the Hugging Face demo example, which is essentially a single step, but the process here is much the same as the one you would follow for your own real projects.

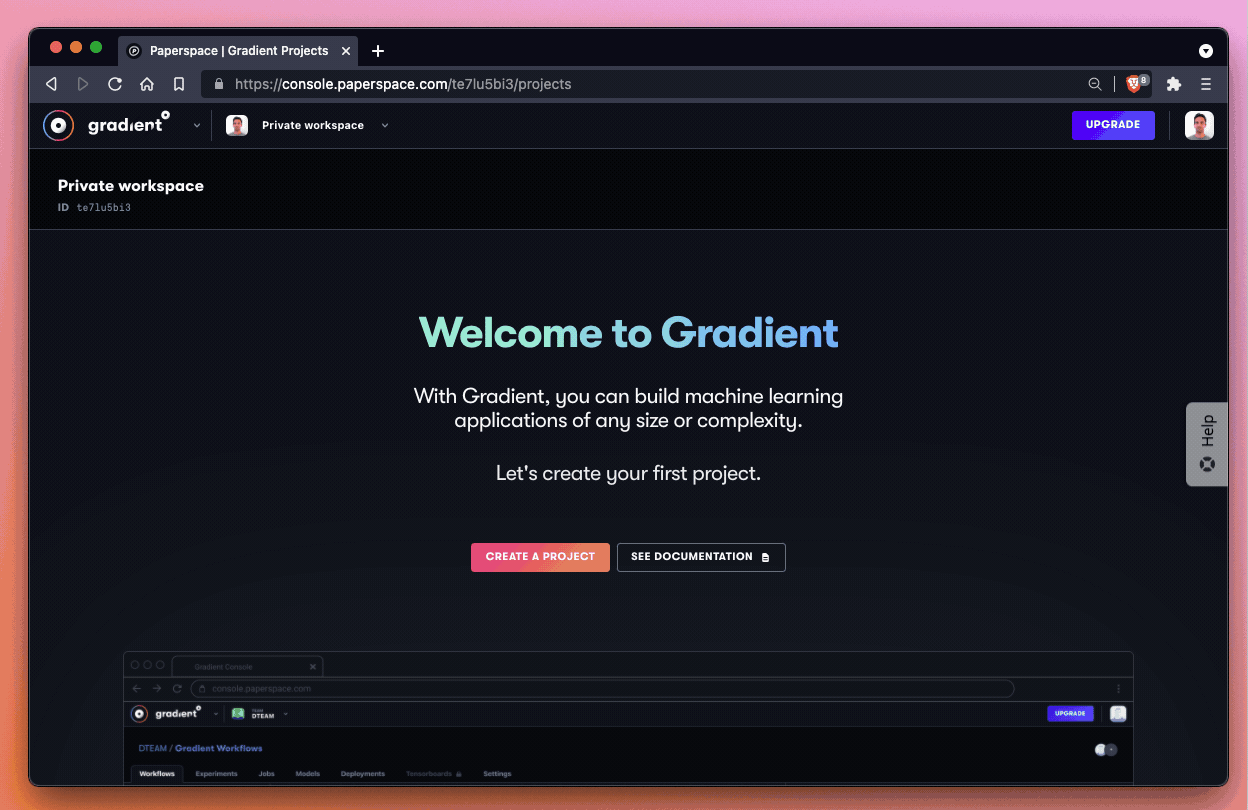

To get started, log into the Paperspace Gradient console by going to console.paperspace.com. If you do not have an account already, you can sign up to get access to our free remote GPUs, with the option to upgrade to a paid account that offers more powerful GPU machines. After signing in to Paperspace, proceed through the following steps.

1. Create a Project

Make sure you are on Paperspace Gradient and not Core by selecting it from the dropdown list at the top left. You will then see either a screen inviting you to create a project, or an existing list of projects if you are in a private workspace or team that already has some.

Either way, click Create a Project and give it a name, such as Decision Transformers.

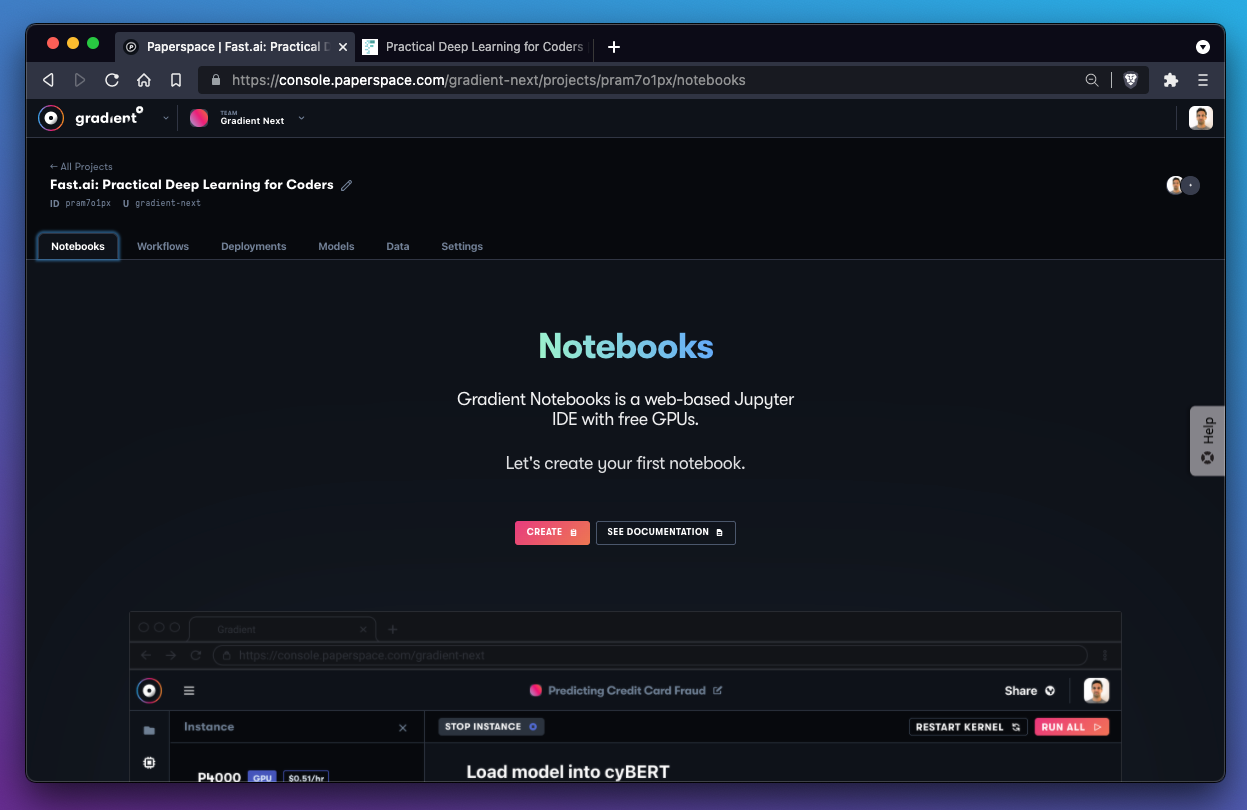

2. Create a Notebook

In your new project, you will see the Notebooks tab, which guides you to create a new Notebook instance. The Notebook instance then allows you to manage and run individual .ipynb Jupyter notebooks.

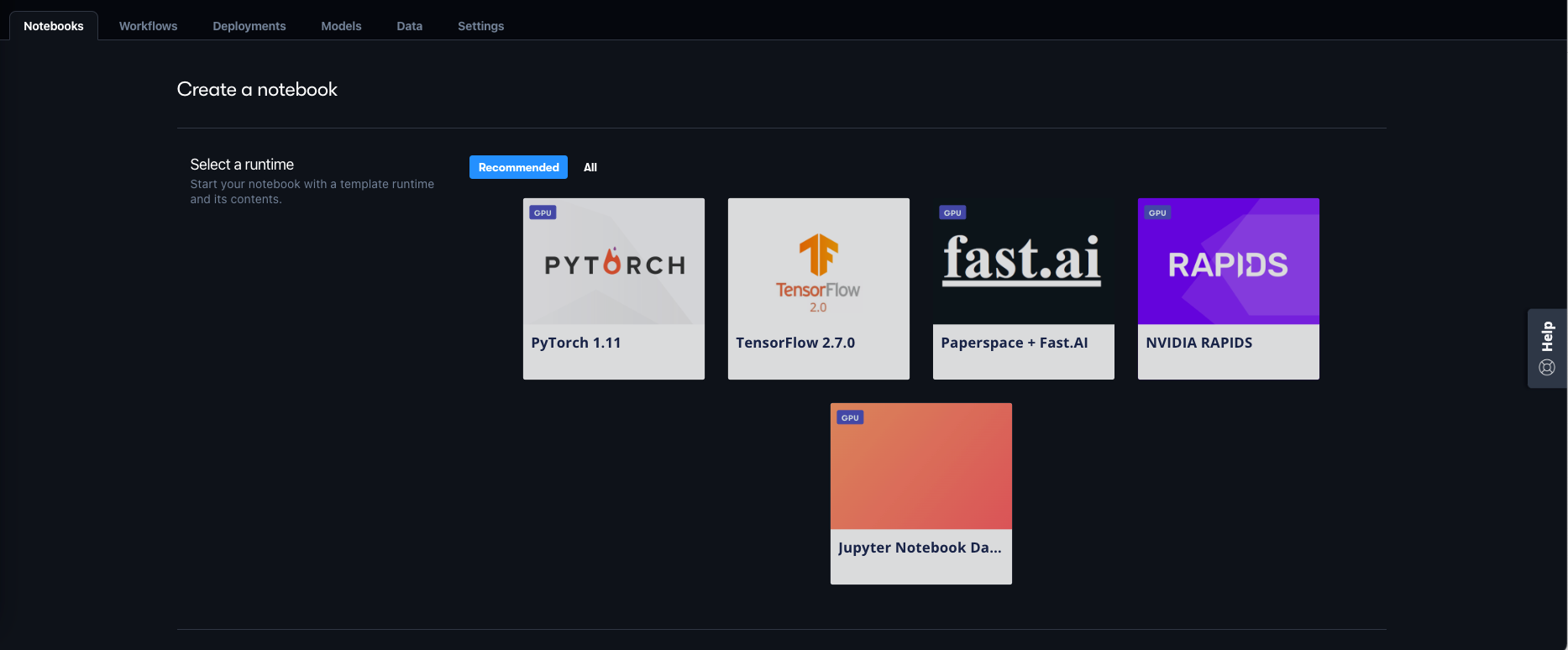

Notebook creation goes through a short series of steps to choose which runtime (PyTorch, TensorFlow, etc.) and machine type (CPU, which GPU, etc.) you would like, plus a few other details.

For the runtime, choose PyTorch:

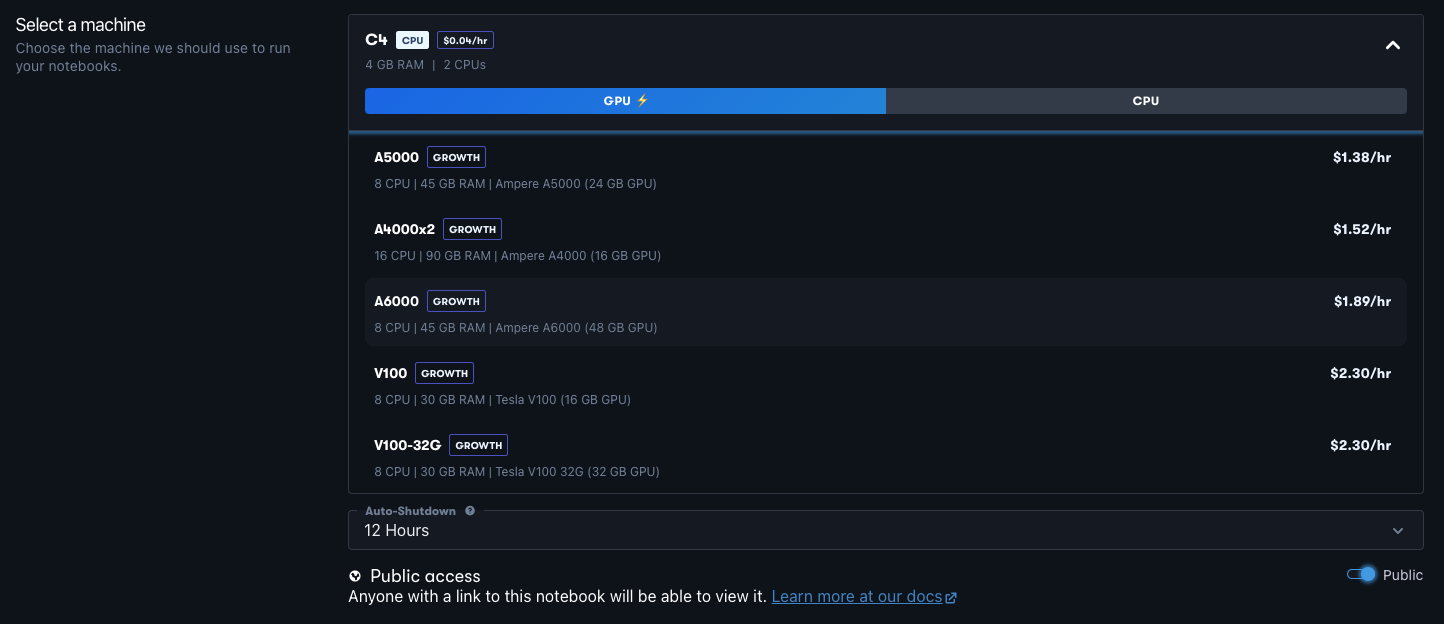

3. Choose a GPU

Choose your GPU from the list available. The options range from the NVIDIA Maxwell M4000 through to the Ampere A100, in single or multi-GPU setups. We recently benchmarked these if you would like more details.

For this demo, the M4000 or P4000 are in fact sufficient.

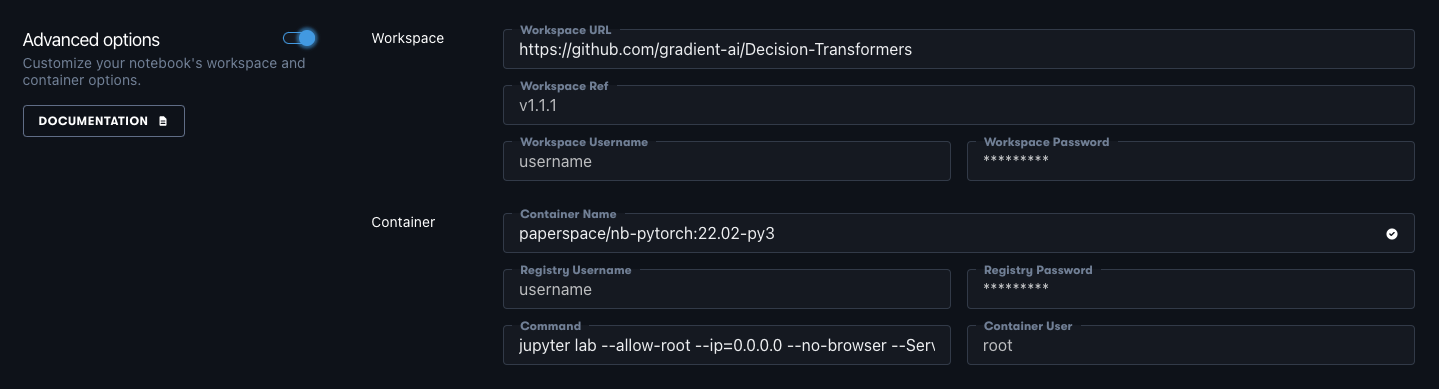

4. Point to the Decision Transformers GitHub repository

By default, our PyTorch runtime points to our quick-start PyTorch repo, so here we instead want to point to the decision transformers repo.

To do this, open Advanced Options and under Workspace, change the Workspace URL from https://github.com/gradient-ai/PyTorch to https://github.com/gradient-ai/Decision-Transformers. The other options can be left as they are.

By the same method, you can point to any accessible repo when creating a Notebook and use its contents as your working directory.

5. Start the Notebook

Your Notebook is now ready to go! Go ahead and click Start Notebook.

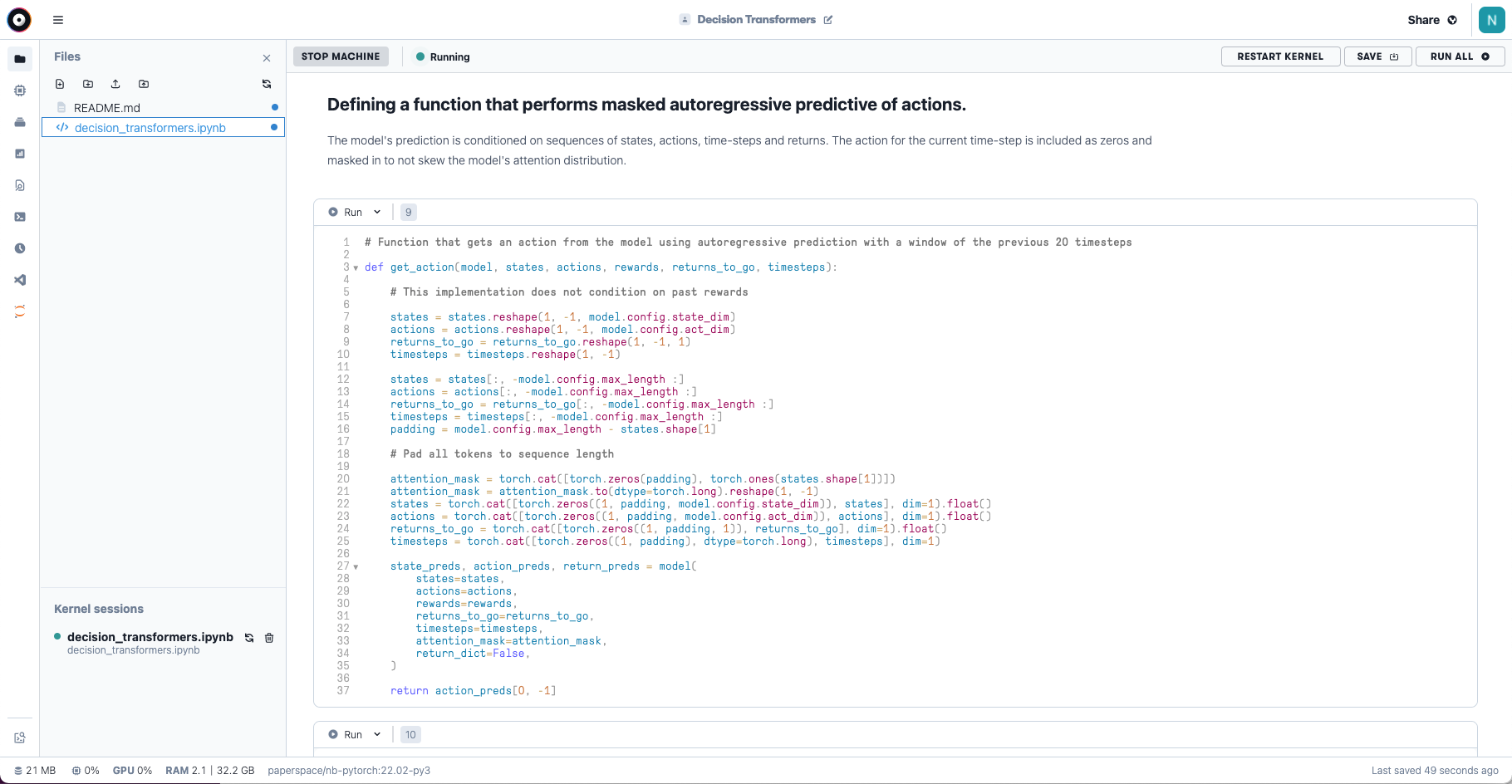

This will take a few seconds to start up, then you will see the Notebook screen, with a list of files in the left-hand navigation bar. You will know the Notebook is ready when it says "Running" in the top left of the task bar.
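Once the Notebook shows "Running", an optional sanity check is to confirm that PyTorch can see the GPU you selected. The snippet below is a small sketch that is not part of the provided notebook. The helper name `describe_device` is hypothetical, and the snippet falls back gracefully if PyTorch is not installed.

```python
def describe_device() -> str:
    """Report whether PyTorch is installed and whether it can see a CUDA GPU."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed in this environment"
    if torch.cuda.is_available():
        # Index 0 is the first (and on single-GPU machines, the only) device.
        return f"GPU available: {torch.cuda.get_device_name(0)}"
    return "No GPU visible to PyTorch; running on CPU"


print(describe_device())
```

If the message reports CPU only, it is worth checking the machine type chosen when the Notebook was created.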

6. Run Decision Transformers

Double-click decision_transformers.ipynb to open the notebook file, and then click Run All in the top right to execute the cells.

NOTE - If the video fails to generate the first time through, click Restart Kernel and then Run All again.

This notebook is a slightly modified version of the original one from the Hugging Face decision transformers repo that runs on Colab. There are some minor changes in library setup, and some lines to ensure that the resulting cell set still works under Run All.

What do they look like?

If the Notebook has run through correctly, you should get a video of the trained agent traveling through its environment, like this:

You can see that the model is performing quite well, traveling for some distance without falling down.

To view the code in more detail, run the Notebook, or see the Hugging Face blog entry for some snippets with explanations.

Next steps

Now that you have a working decision transformer setup on Paperspace, this opens up a range of possibilities.

- For more details about decision transformers, see the Hugging Face blog entry. This includes some more of the theory on decision transformers, a link to some pre-trained model checkpoints representing different forms of locomotion, details of the auto-regressive prediction function by which the model learns, and some model evaluation.

- To run your own Hugging Face models, you can start a Notebook as above and point it to their repos, e.g., https://github.com/huggingface/transformers .

- To run at scale, for example for longer pre-training or fine-tuning runs, choose from our selection of Pascal, Volta, and Ampere GPUs or multi-GPUs as the compute power for your Notebook.

- To run in production, check out Paperspace's Workflows and Deployments.

Decision transformers are part of some exciting convergences we are seeing in deep learning now, where models in previously separate fields, such as text and computer vision, are becoming more general and capable across several fields.

It may even be the case that a generic architecture that works across text, images, etc., such as Perceiver IO, will become usable for solving most problems.

Whatever happens, combinations of capabilities such as Hugging Face's machine learning models and Paperspace's end-to-end data science + MLOps + GPU infrastructure will continue to open these advances up to more users.