Streamlit enables data scientists and machine learning practitioners to build data and machine learning applications quickly. In this piece, we will look at how to use Streamlit to build a face verification application. Before we can verify faces, however, we have to detect them. In computer vision, face detection is the task of finding the faces in an image and localizing each one with a bounding box. Face verification is the task of comparing two face images to decide whether they belong to the same person.

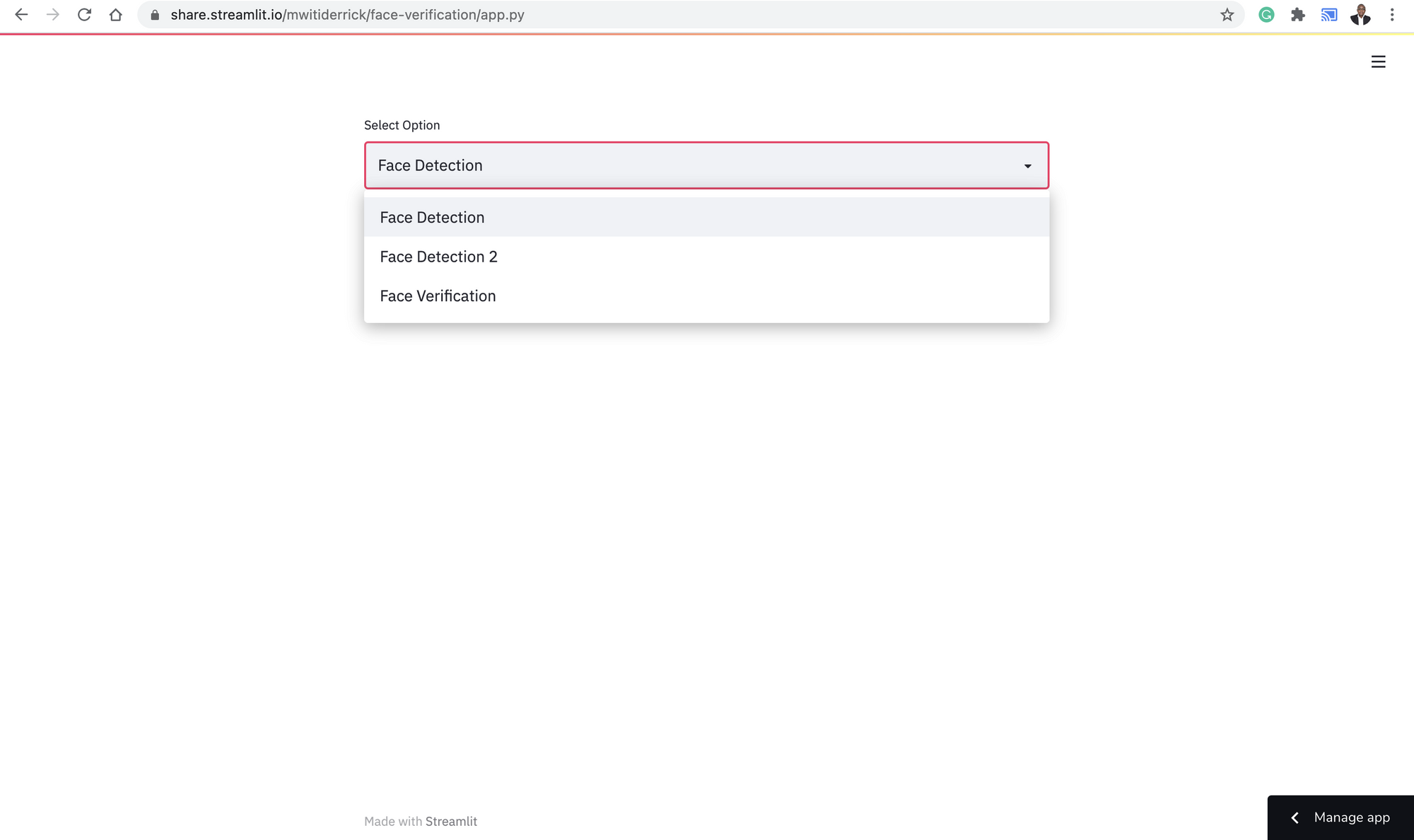

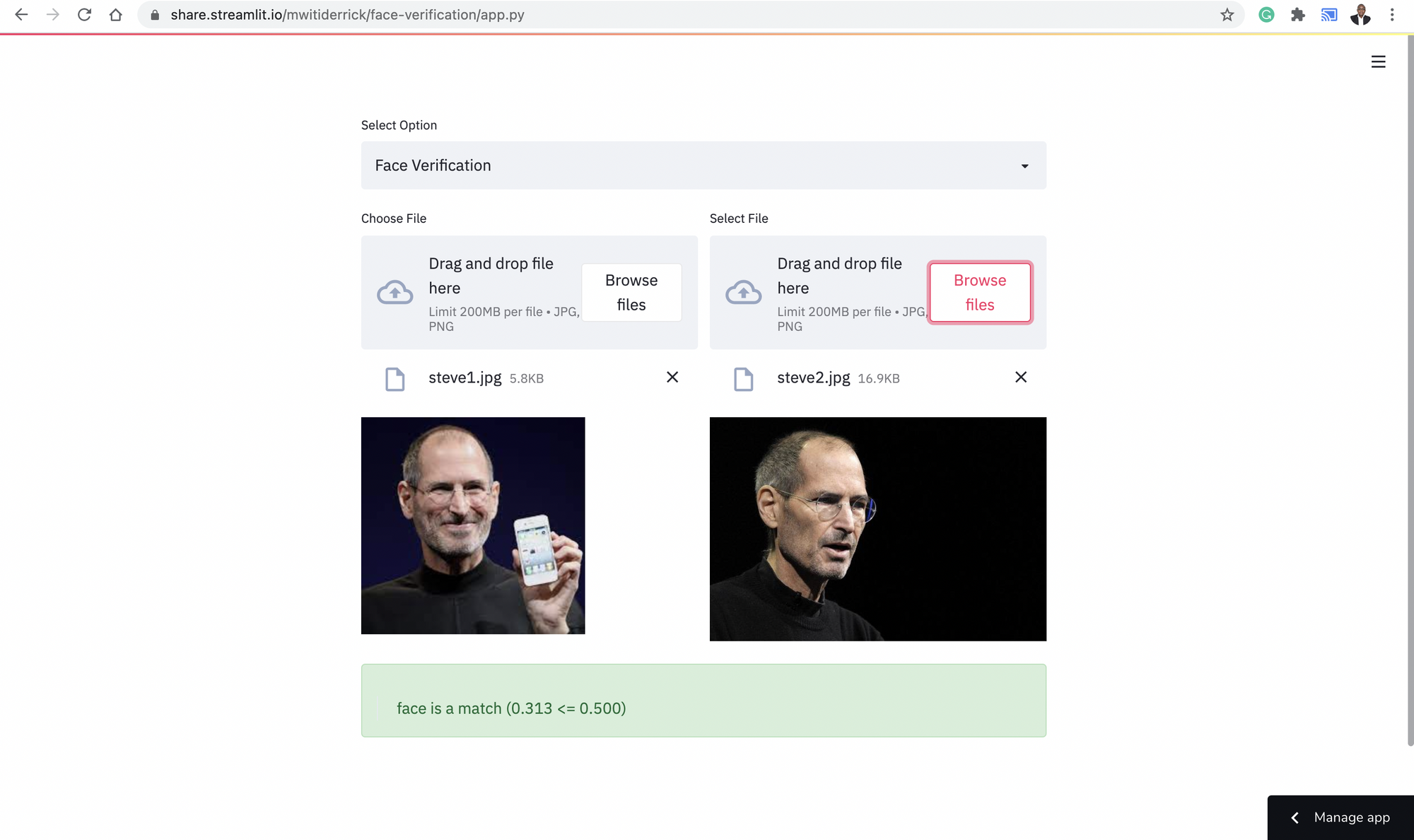

The image below shows the application that we shall be working on in this article. The live demo is located here.


Detecting faces with MTCNN

MTCNN stands for Multi-Task Cascaded Convolutional Neural Network and was first proposed in this paper. The framework is made up of three stages:

- In the first stage, a shallow CNN known as the Proposal Network (P-Net) produces candidate windows and their bounding box regression vectors.

- In the second stage, a more complex CNN, which the paper calls the Refine Network (R-Net), rejects non-face windows.

- In the third stage, an even more powerful CNN, the Output Network (O-Net), refines the result and outputs the positions of five facial landmarks.

The input to the above three-stage cascaded framework is an image that has been resized to different scales in order to build an image pyramid.
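To make the image-pyramid idea concrete, here is a minimal sketch of how the list of scales could be computed. It assumes that P-Net looks at 12x12 windows, as described in the paper, and uses a minimum face size of 20 pixels and a scale factor of 0.709, which are, as far as we know, the defaults of the mtcnn package; treat those two values as assumptions. The function name `pyramid_scales` is hypothetical and not part of the package's API.

```python
def pyramid_scales(height, width, min_face_size=20, scale_factor=0.709):
    """Return the scales an MTCNN-style detector would resize the input to.

    P-Net works on 12x12 windows, so the first scale maps a face of
    `min_face_size` pixels onto 12 pixels. Each following scale shrinks the
    image by `scale_factor` until the shorter side drops below 12 pixels.
    (min_face_size and scale_factor defaults are assumptions.)
    """
    scales = []
    first_scale = 12.0 / min_face_size
    shorter_side = min(height, width) * first_scale
    level = 0
    while shorter_side >= 12:
        scales.append(first_scale * scale_factor ** level)
        shorter_side *= scale_factor
        level += 1
    return scales


if __name__ == "__main__":
    # Print the size of each pyramid level for a 480x640 image
    for s in pyramid_scales(480, 640):
        print(f"scale={s:.4f} -> {round(480 * s)}x{round(640 * s)}")
```

At the first level a 20-pixel face shrinks to about 12 pixels; at the smaller levels, progressively larger faces shrink into P-Net's fixed window, which is why the pyramid lets one small network detect faces of many sizes.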

In this article, we'll use Iván de Paz Centeno's implementation of the paper. It is available as a pip-installable package.

```bash
pip install mtcnn
```

Face recognition and verification models

VGGFace refers to a series of face recognition models developed by the Visual Geometry Group (VGG) at the University of Oxford and trained on the group's face datasets. The main models in this series are VGGFace and VGGFace2. The Deep Face Recognition paper proposes a large-scale dataset for face recognition and verification. It describes how to verify faces by comparing their embeddings in Euclidean space, where the embeddings are learned using a triplet loss. As a result, the Euclidean distance between embeddings of the same person is small, while it is larger for different people.
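A minimal NumPy sketch of a triplet loss shows why same-identity distances end up small. This is the generic hinge formulation over squared Euclidean distances; the margin of 0.2 and the function name are placeholders, not values taken from the Deep Face Recognition paper.

```python
import numpy as np


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge-style triplet loss on squared Euclidean distances.

    The loss is zero once the anchor is closer to the positive (same identity)
    than to the negative (different identity) by at least `margin`.
    Works on single vectors or on batches along the last axis.
    """
    d_pos = np.sum((anchor - positive) ** 2, axis=-1)
    d_neg = np.sum((anchor - negative) ** 2, axis=-1)
    return np.maximum(0.0, d_pos - d_neg + margin)


# Toy 2-D "embeddings" for illustration only
anchor = np.array([1.0, 0.0])
positive = np.array([0.9, 0.1])
negative = np.array([0.0, 1.0])
print(triplet_loss(anchor, positive, negative))  # 0.0: already well separated
print(triplet_loss(anchor, negative, positive))  # approximately 2.18: roles swapped
```

Minimizing this loss over many triplets pulls embeddings of the same person together and pushes different people apart, which is what makes a simple distance threshold usable for verification.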

VGGFace2 is described in this paper. It proposes a new large-scale face dataset containing 3.31 million images of 9,131 subjects, with an average of 362.6 images per subject. Models trained on this dataset are referred to as VGGFace2 models, and they can be found in this GitHub repo. We shall use the Keras implementation of these models to verify faces. First, we detect the faces and then obtain their embeddings, which the paper refers to as face descriptors. We then compare the descriptors using the cosine distance (one minus the cosine similarity).
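The Euclidean view from the Deep Face Recognition paper and the cosine distance used here are closely related: once both embeddings are L2-normalized, the squared Euclidean distance is exactly twice the cosine distance, because ||a - b||^2 = 2 - 2cos(theta) for unit vectors. The NumPy sketch below checks this on two toy vectors; the helper names are hypothetical.

```python
import numpy as np


def cosine_distance(a, b):
    """1 - cosine similarity; the quantity scipy.spatial.distance.cosine returns."""
    return 1.0 - np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))


def squared_euclidean_normalized(a, b):
    """Squared Euclidean distance after L2-normalizing both vectors."""
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b)
    return float(np.sum((a - b) ** 2))


# Toy vectors standing in for face descriptors
a = np.array([3.0, 4.0])
b = np.array([4.0, 3.0])
print(cosine_distance(a, b))               # approximately 0.04 (1 - 24/25)
print(squared_euclidean_normalized(a, b))  # approximately 0.08 (twice the above)
```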

In Keras, we can use VGGFace models through the keras-vggface package, which you'll have to install from GitHub.

```bash
pip install git+https://github.com/rcmalli/keras-vggface.git
```

Setting up the Streamlit application

Before we start working on the application, you'll need to install all the requirements. The requirements file is available here. After downloading it, install the requirements via pip:

```bash
pip install -r requirements.txt
```

Import all the requirements

Now open a new Python file (preferably named app.py) and import all the requirements at the top of the file:

- `MTCNN` for face detection

- `streamlit` for setting up the web application

- Matplotlib for displaying the faces

- `Circle` for marking landmarks on the face

- `Rectangle` for drawing a box around detected faces

- `Image` for opening an image file

- `asarray` for converting images into NumPy arrays

- `cosine` for computing the cosine distance between face embeddings

- `VGGFace` for obtaining the face embeddings

- `preprocess_input` for converting the image into the format the model expects

```python
from mtcnn.mtcnn import MTCNN
import streamlit as st
import matplotlib.pyplot as plt
from matplotlib.patches import Rectangle
from matplotlib.patches import Circle
from PIL import Image
from numpy import asarray
from scipy.spatial.distance import cosine
from keras_vggface.vggface import VGGFace
from keras_vggface.utils import preprocess_input
```

Setting up the drop-down menu

The next step is to set up the drop-down options. Luckily, Streamlit provides a variety of widgets for building interactive web pages.

In this case, we need a select box, which we create with `st.selectbox`. The function expects a label and the list of options to display. Assigning its return value to a variable lets us capture the option the user selects.

```python
choice = st.selectbox("Select Option", [
    "Face Detection",
    "Face Detection 2",
    "Face Verification",
])
```

Defining the main function

Next, we create a `main` function that handles each of the user's selections. In this function, we'll build up the code for detecting faces as well as verifying them.

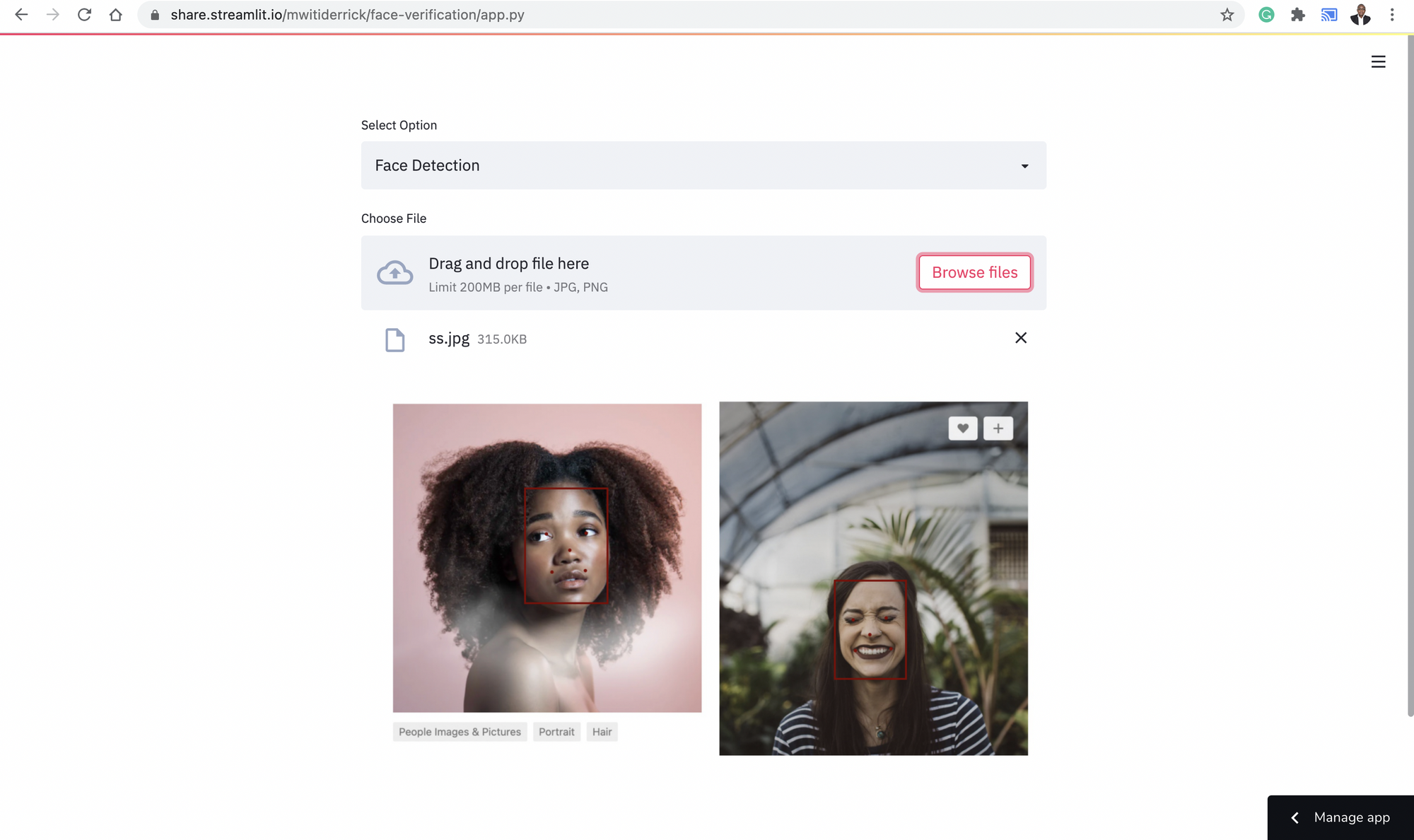

Let's start by implementing the face detection feature. The first step is to check that the user has selected the face detection option. After that, here is what happens next:

- Upload the file using Streamlit's `file_uploader`

- Open the image using Pillow and convert it into a NumPy array

- Visualize the image after turning off the Matplotlib axes

- Create an `MTCNN` detector for detecting faces

- Obtain the coordinates of the faces and the facial landmarks

- Use `Circle` and `Rectangle` to display the face with the bounding box and facial landmarks

```python
def main():
    fig = plt.figure()
    if choice == "Face Detection":
        uploaded_file = st.file_uploader("Choose File", type=["jpg", "png"])
        if uploaded_file is not None:
            # Convert to RGB so PNGs with an alpha channel still give 3 channels
            data = asarray(Image.open(uploaded_file).convert("RGB"))
            plt.axis("off")
            plt.imshow(data)
            ax = plt.gca()
            detector = MTCNN()
            faces = detector.detect_faces(data)
            for face in faces:
                # Draw a bounding box around each detected face
                x, y, width, height = face["box"]
                rect = Rectangle((x, y), width, height, fill=False, color="maroon")
                ax.add_patch(rect)
                # Mark the five facial landmarks (eyes, nose, mouth corners)
                for _, value in face["keypoints"].items():
                    dot = Circle(value, radius=2, color="maroon")
                    ax.add_patch(dot)
            st.pyplot(fig)
```
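The drawing loop relies on the structure of what `detect_faces` returns. A small sketch, assuming each detection is a dict with `"box"`, `"confidence"` and `"keypoints"` keys as in the mtcnn package, shows how low-confidence detections could be dropped before drawing. The helper name `confident_faces`, the 0.9 cut-off, and the sample detections below are all hypothetical.

```python
def confident_faces(faces, min_confidence=0.9):
    """Keep only detections whose confidence reaches `min_confidence`.

    `faces` is assumed to be the list returned by MTCNN.detect_faces: each entry
    is a dict with "box" ([x, y, width, height]), "confidence" and "keypoints"
    (a dict of five named (x, y) points).
    """
    return [face for face in faces if face["confidence"] >= min_confidence]


# Hand-written stand-in for detector.detect_faces(data); values are made up
faces = [
    {"box": [40, 30, 120, 150], "confidence": 0.99,
     "keypoints": {"left_eye": (75, 90), "right_eye": (125, 88),
                   "nose": (100, 115), "mouth_left": (80, 145),
                   "mouth_right": (120, 143)}},
    {"box": [300, 200, 15, 18], "confidence": 0.72,
     "keypoints": {"left_eye": (304, 206), "right_eye": (311, 206),
                   "nose": (307, 210), "mouth_left": (304, 214),
                   "mouth_right": (310, 214)}},
]
kept = confident_faces(faces)
print(len(kept), kept[0]["box"])
```

In the app, you could pass `confident_faces(detector.detect_faces(data))` to the drawing loop so that small, uncertain detections are not outlined.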

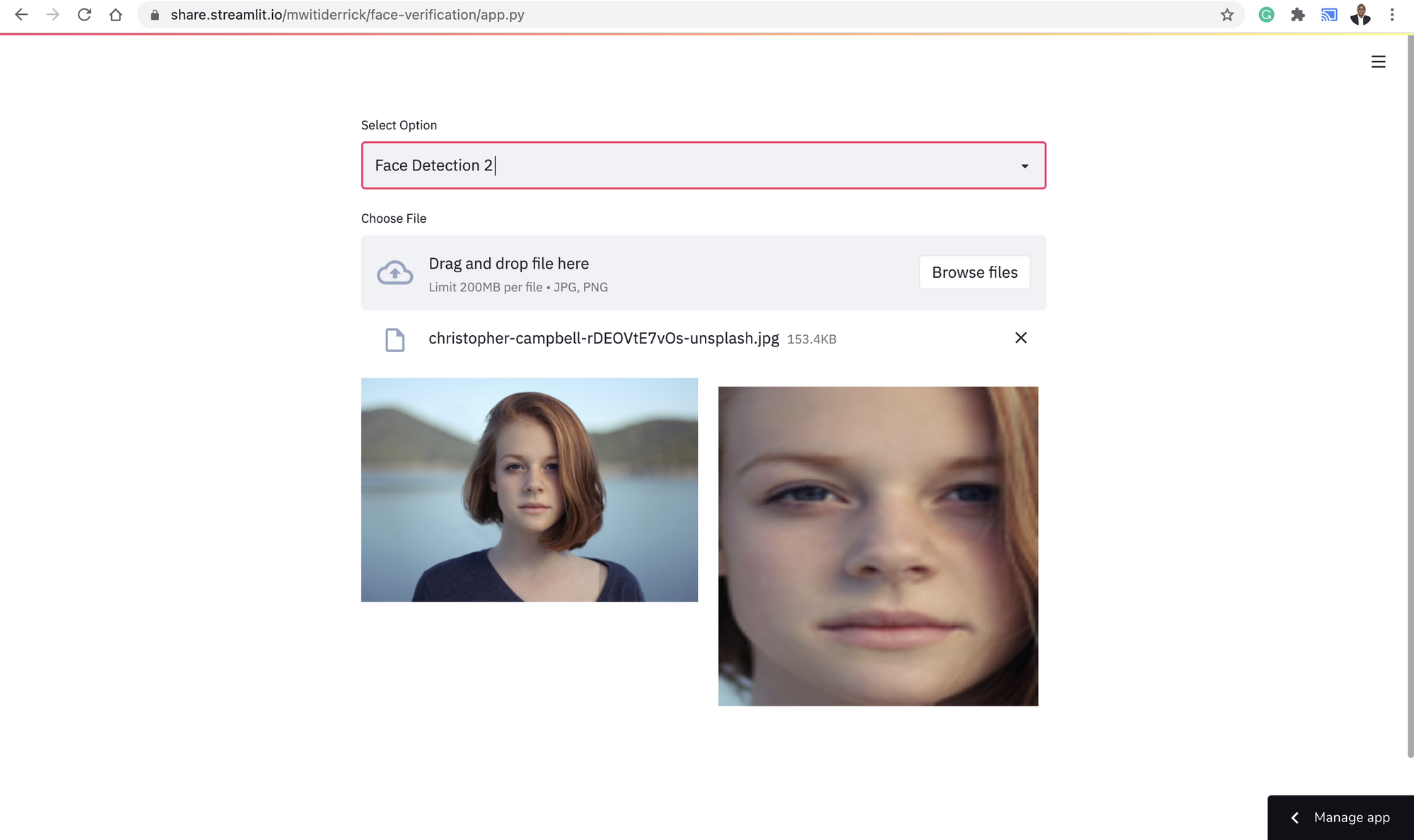

Apart from drawing dots and bounding boxes on an image, we can also return the detected faces. The process is similar to what we did above. The only difference is that we use the coordinates of the detected face to crop it out of the image array. After that, we use Pillow to convert the cropped array back into an image.

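```python
results = detector.detect_faces(pixels)
x1, y1, width, height = results[0]["box"]
x2, y2 = x1 + width, y1 + height
# MTCNN can return slightly negative coordinates for faces near the border;
# clamp them so the slice below does not wrap around
x1, y1 = max(0, x1), max(0, y1)
face = pixels[y1:y2, x1:x2]
```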

We use Streamlit's `st.columns` (named `st.beta_columns` in older Streamlit releases) to show the image on the left and the detected face on the right. Here is the complete code for that part.

```python
    elif choice == "Face Detection 2":
        uploaded_file = st.file_uploader("Choose File", type=["jpg", "png"])
        if uploaded_file is not None:
            column1, column2 = st.columns(2)
            image = Image.open(uploaded_file).convert("RGB")
            with column1:
                # thumbnail() resizes in place (keeping the aspect ratio) and returns None
                image.thumbnail((450, 450))
                st.image(image)
            pixels = asarray(image)
            detector = MTCNN()
            results = detector.detect_faces(pixels)
            if not results:
                st.warning("No face detected.")
                return
            x1, y1, width, height = results[0]["box"]
            x2, y2 = x1 + width, y1 + height
            # Clamp possibly negative coordinates before slicing
            x1, y1 = max(0, x1), max(0, y1)
            face = pixels[y1:y2, x1:x2]
            # Resize the crop to 224x224 and show it next to the original
            image = Image.fromarray(face)
            image = image.resize((224, 224))
            face_array = asarray(image)
            with column2:
                st.image(face_array)
```

Face verification

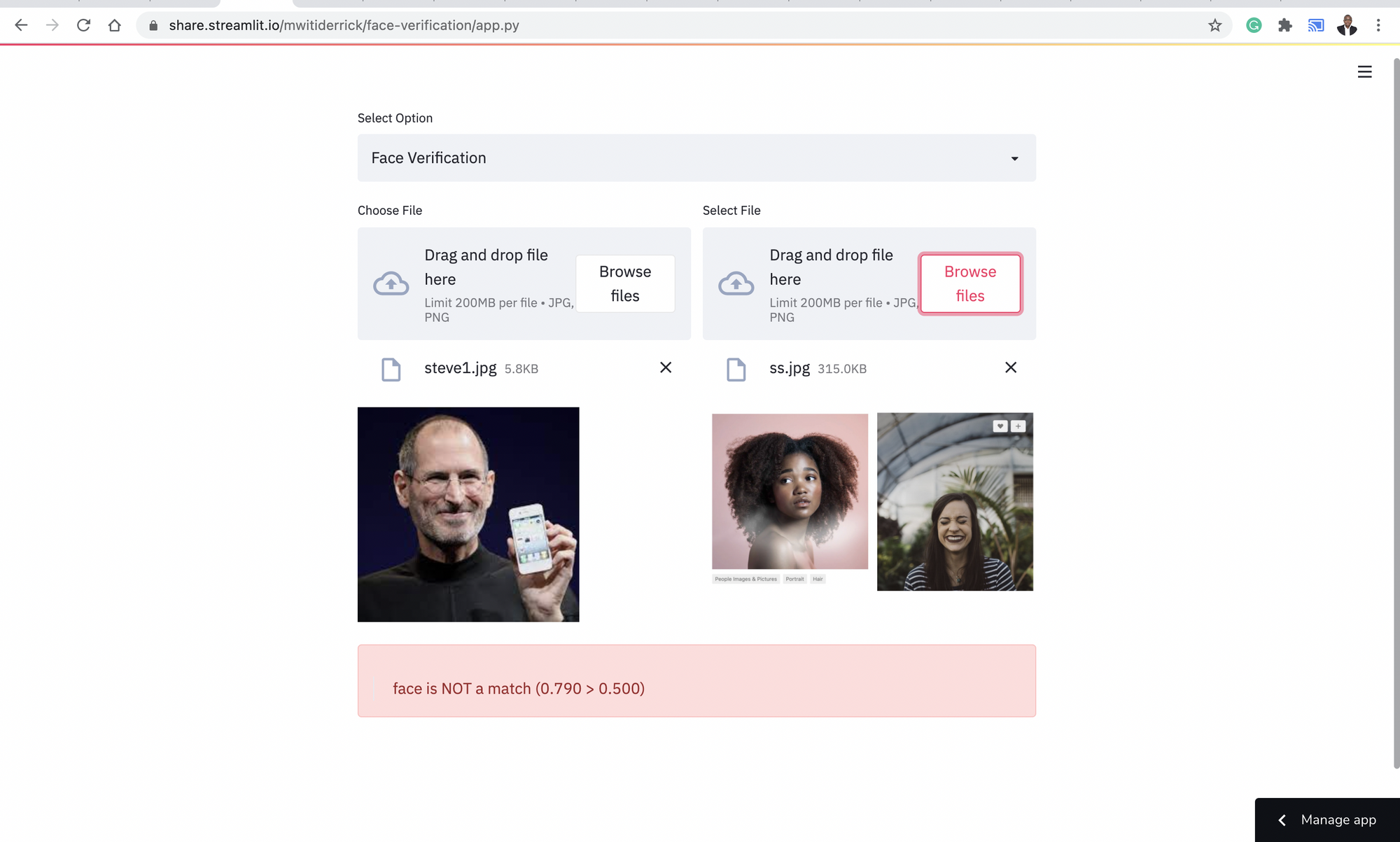

To verify faces, we first get the face embeddings from a VGGFace model. Passing `include_top=False` drops the model's final classification layer. This is what we want, because we only need the face embeddings, not predictions over the identities the model was trained on. With `pooling="avg"`, the output of the last convolutional block is average-pooled into a single embedding vector per face. We then compute the cosine distance between the two embeddings and treat the faces as a match if the distance is at most 0.5. We use Streamlit columns to show the two faces side by side.
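The match decision itself is a simple comparison once the two embeddings exist. This sketch reproduces it with NumPy on toy vectors standing in for real VGGFace embeddings, computing the cosine distance the same way `scipy.spatial.distance.cosine` does for non-zero 1-D vectors. The `verify` helper is hypothetical.

```python
import numpy as np


def verify(embedding1, embedding2, thresh=0.5):
    """Return (score, is_match) using the cosine-distance rule from the app.

    score = 1 - cosine similarity; a smaller score means more similar faces,
    so the pair counts as a match when score <= thresh.
    """
    e1 = np.asarray(embedding1, dtype="float64")
    e2 = np.asarray(embedding2, dtype="float64")
    score = 1.0 - np.dot(e1, e2) / (np.linalg.norm(e1) * np.linalg.norm(e2))
    return float(score), bool(score <= thresh)


# Toy vectors standing in for two face embeddings
score, is_match = verify([0.2, 0.9, 0.4], [0.25, 0.8, 0.5])
print(f"{score:.3f}", is_match)
```

Raising the threshold accepts more pairs as matches (fewer false rejections, more false acceptances); 0.5 is simply the value this article uses.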

```python
    elif choice == "Face Verification":
        column1, column2 = st.columns(2)
        with column1:
            image1 = st.file_uploader("Choose File", type=["jpg", "png"])
        with column2:
            image2 = st.file_uploader("Select File", type=["jpg", "png"])
        if image1 is not None and image2 is not None:
            col1, col2 = st.columns(2)
            image1 = Image.open(image1).convert("RGB")
            image2 = Image.open(image2).convert("RGB")
            with col1:
                st.image(image1)
            with col2:
                st.image(image2)
            # Detect and crop one 224x224 face from each uploaded image
            faces = [extract_face(img) for img in [image1, image2]]
            samples = asarray(faces, "float32")
            # version=2 is the preprocessing used with the resnet50/senet50 models
            samples = preprocess_input(samples, version=2)
            model = VGGFace(model="resnet50", include_top=False,
                            input_shape=(224, 224, 3), pooling="avg")
            # One embedding vector per face
            embeddings = model.predict(samples)
            thresh = 0.5
            # scipy's cosine() returns the cosine distance (1 - similarity)
            score = cosine(embeddings[0], embeddings[1])
            if score <= thresh:
                st.success("Face is a match (%.3f <= %.3f)" % (score, thresh))
            else:
                st.error("Face is NOT a match (%.3f > %.3f)" % (score, thresh))
```

We also create a function that detects and returns the face from each uploaded image. The function:

- Uses MTCNN to detect faces

- Extracts the faces from the images

- Converts the cropped array back into an image using Pillow

- Resizes the image to 224x224 pixels, the input size the model expects

- Returns the image as a NumPy array

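```python
def extract_face(file):
    # `file` is a PIL image; MTCNN works on NumPy arrays
    pixels = asarray(file)
    detector = MTCNN()
    results = detector.detect_faces(pixels)
    if not results:
        raise ValueError("No face detected in the uploaded image.")
    # Use the first detected face
    x1, y1, width, height = results[0]["box"]
    x2, y2 = x1 + width, y1 + height
    # Clamp possibly negative coordinates before slicing
    x1, y1 = max(0, x1), max(0, y1)
    face = pixels[y1:y2, x1:x2]
    # Resize the crop to the 224x224 input expected by VGGFace
    image = Image.fromarray(face)
    image = image.resize((224, 224))
    face_array = asarray(image)
    return face_array
```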

We can also demonstrate this using two faces that are not similar.

The final thing in this implementation is to run the main function.

```python
if __name__ == "__main__":
    main()
```

The code and requirements for this project can be found here.

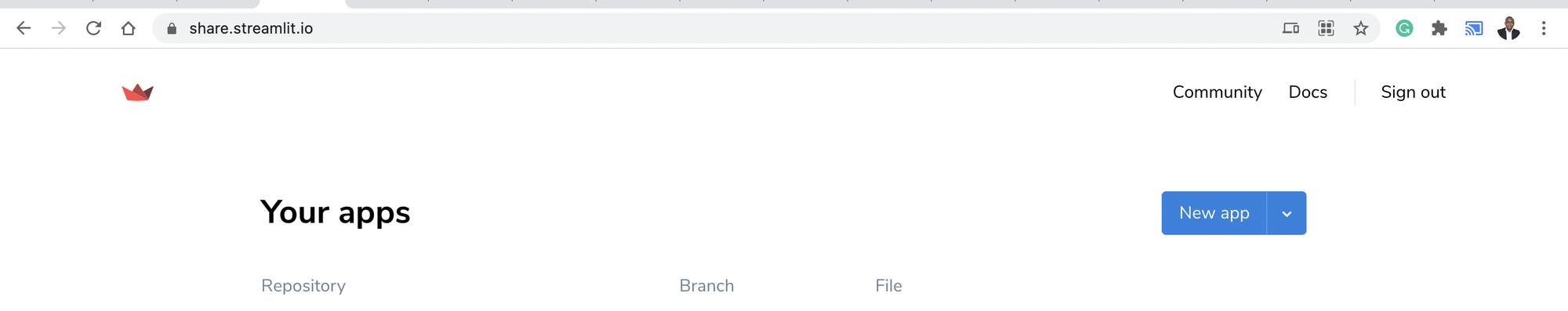

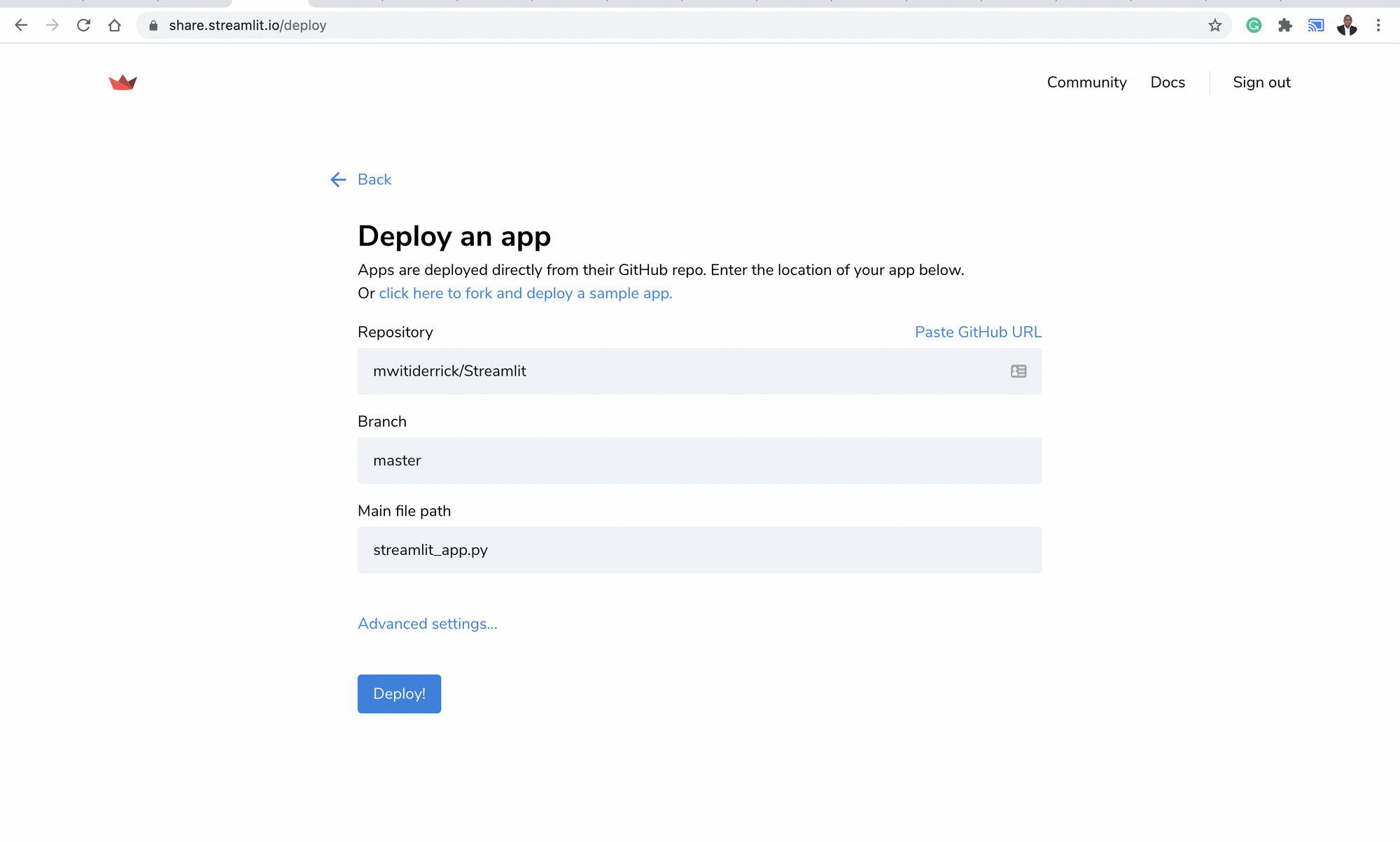

Deploying the application using Streamlit Sharing

Streamlit lets us deploy machine learning applications in just a few clicks. Head over to share.streamlit.com and set up a free account. Click the New app button to start the deployment process. On the free tier, the application's code needs to be in a public GitHub repository.

After that, you will select the repository you would like to deploy and click the deploy button. The repo needs to have a requirements.txt file with all the libraries needed by your application.

Final thoughts

In this article, you have seen how to build and deploy a face verification application using Streamlit and Keras. Streamlit can be used to build and deploy all sorts of data science and machine learning applications, and its syntax is fairly easy to grasp. For the VGGFace model, we used the ResNet50 architecture; you can try the other available models, `vgg16` and `senet50`, and compare the results. Note that `vgg16` expects `preprocess_input(..., version=1)`, while `resnet50` and `senet50` use `version=2`. If you are feeling ambitious, you can also fine-tune the model further.
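If you do try the other backbones, the preprocessing version has to follow the model. Here is a small sketch of one way to keep the two in sync; the `model_settings` helper and the `PREPROCESS_VERSION` table are hypothetical, while the version numbers follow the keras-vggface README.

```python
# Hypothetical table: preprocessing version per keras-vggface backbone
# (version=1 for vgg16, version=2 for resnet50 and senet50).
PREPROCESS_VERSION = {"vgg16": 1, "resnet50": 2, "senet50": 2}


def model_settings(model_name):
    """Return keyword arguments for VGGFace(...) and preprocess_input(...)."""
    if model_name not in PREPROCESS_VERSION:
        raise ValueError(f"unsupported model: {model_name!r}")
    vggface_kwargs = {
        "model": model_name,
        "include_top": False,
        "input_shape": (224, 224, 3),
        "pooling": "avg",
    }
    preprocess_kwargs = {"version": PREPROCESS_VERSION[model_name]}
    return vggface_kwargs, preprocess_kwargs


vggface_kwargs, preprocess_kwargs = model_settings("senet50")
print(vggface_kwargs["model"], preprocess_kwargs)
```

In the verification branch, you could then call `preprocess_input(samples, **preprocess_kwargs)` and build the model with `VGGFace(**vggface_kwargs)` instead of hard-coding `version=2` and `"resnet50"`.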

That's it for this one, folks!