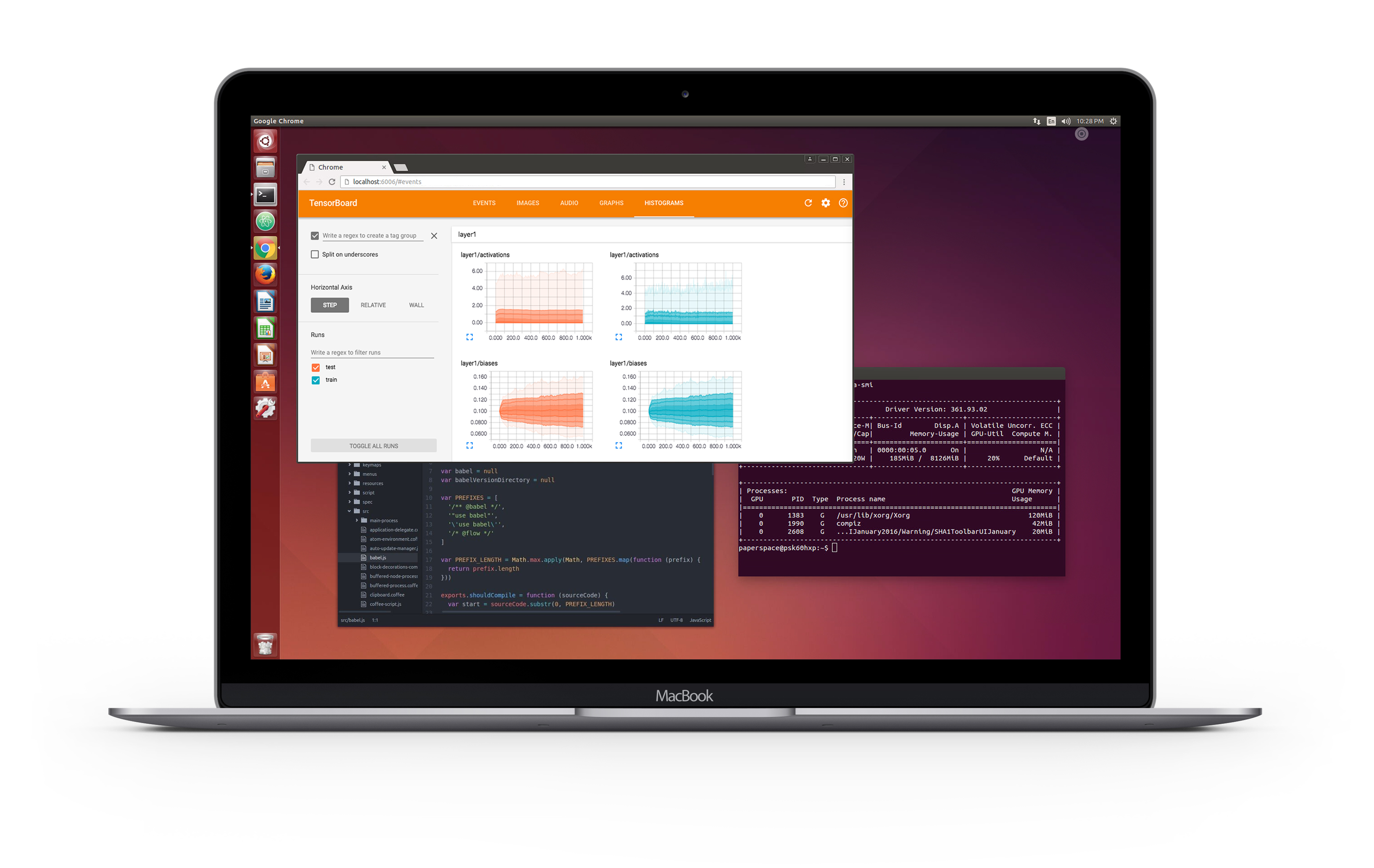

Ubuntu Desktop running in the cloud

Update: This offering was recently featured in TechCrunch

We spend most of our time here at Paperspace making software that runs on GPUs. Given our familiarity with the hardware, we thought it would be easy to get started with the latest and greatest in machine learning and deep learning software. What we quickly realized is that getting a new machine up and running is a surprisingly complicated dance of driver versions, out-of-date tutorials, CUDA versioning, OpenBLAS compiling, Lua configuring, and so on.

At the same time, the few options that exist for running a GPU in the cloud are very costly, out of date, and not optimized for this type of application.

It became clear that there was a need for an effortless VM in the cloud, backed by a powerful GPU, and pre-loaded with all of the latest ML frameworks.

We started by thinking about how people would interact with their Paperspace machine, looking for a solution that was simple yet still powerful and familiar.

Rethinking the interface

During the beta, we quickly learned that while many people are proficient in a terminal, they tend to prefer working in a desktop environment. To that end, we built a full desktop experience that runs directly in a web browser.

Because the browser is so powerful, we also took a stab at building our own terminal, directly inside Chrome.

Web-based terminal

Of course, you can always connect with SSH, but we hope you'll check out these two novel ways to interact with your machine learning instance.

Preconfigured template: ML-in-a-box

We use templates internally at Paperspace for everything from provisioning web servers to running CAD design rigs, so it made sense to work on a gold-standard machine learning template. Templates are one of the most powerful features of running virtual machines -- you only have to get everything installed once, and then you can clone it and share it as much as you need.

We followed a lot of guides for getting a really great machine learning setup including some of these:

https://github.com/saiprashanths/dl-setup

The version that we are shipping today includes (among other things):

- CUDA

- cuDNN

- TensorFlow

- Python (numpy, ipython, matplotlib, etc.)

- OpenBLAS

- Caffe

- Theano

- Torch

- Keras

- Latest GPU drivers

When you fire up a Paperspace Linux machine today, you get a machine that just works. We are constantly improving the base image, so if we forgot anything, let us know!
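If you want to see for yourself what is on a machine, here is a minimal sketch of a sanity check in Python. It is not part of the template. The module and command names below are our assumptions about how the listed tools are usually exposed (for example, `th` as the Torch launcher and `nvcc` as the CUDA compiler), so adjust them to match your image.

```python
import importlib.util
import shutil

# Assumed names: the list above names frameworks, not module or binary names.
PYTHON_MODULES = ["numpy", "IPython", "matplotlib", "tensorflow",
                  "theano", "keras", "caffe"]
COMMANDS = ["nvidia-smi", "nvcc", "th"]


def module_available(name):
    """Return True if Python can locate the module, without importing it."""
    try:
        return importlib.util.find_spec(name) is not None
    except (ImportError, ValueError):
        return False


def check_environment(modules=PYTHON_MODULES, commands=COMMANDS):
    """Map each module or command name to True (found) or False (missing)."""
    report = {}
    for name in modules:
        report[name] = module_available(name)
    for cmd in commands:
        # shutil.which looks the command up on PATH, like the shell would.
        report[cmd] = shutil.which(cmd) is not None
    return report


if __name__ == "__main__":
    for name, found in check_environment().items():
        print(f"{name:12s} {'found' if found else 'MISSING'}")
```

Note that this only checks that each piece can be located. It does not prove that a framework actually runs on the GPU, so treat it as a first pass rather than a full test.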

More GPU Options

The majority of our infrastructure was built around the NVIDIA GRID architecture of "virtual" GPUs. For more intensive tasks such as model training, visual effects, and data processing we needed to find a more powerful GPU.

We began a search for a card that, unlike the GRID cards, is capable of running in "passthrough" mode. In this scenario, the virtual machine gets complete access to the card, without any virtualization overhead.
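From inside the virtual machine, a passed-through card should show up like any other NVIDIA GPU. As a rough sketch (assuming the NVIDIA driver and its `nvidia-smi` tool are installed, and with helper names that are ours, not part of any Paperspace API), you could list the visible cards like this:

```python
import shutil
import subprocess


def parse_gpu_list(text):
    """Parse 'name, memory' CSV lines as printed by nvidia-smi."""
    gpus = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split on the first comma only: name on the left, memory on the right.
        name, _, memory = line.partition(",")
        gpus.append({"name": name.strip(), "memory": memory.strip()})
    return gpus


def list_gpus():
    """Return the GPUs the driver reports, or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_list(result.stdout)


if __name__ == "__main__":
    for gpu in list_gpus():
        print(gpu["name"], gpu["memory"])
```

An empty result means either the tool is missing or the driver could not talk to a card, which is usually the first thing worth checking before debugging a framework.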

Today, we have a few different GPU options. The NVIDIA M4000 is a cost-effective but powerful card, while the NVIDIA P5000 is built on the new Pascal architecture and is heavily optimized for machine learning and ultra-high-end simulation work. We will be adding several more options over the coming months. We also hope to include some of the latest ML-optimized cards coming out of AMD, and possibly even hardware designed explicitly for intelligence applications.

What's Next

We are very excited about the possibilities this will unlock. It's still early days and we definitely have our work cut out for us, but early feedback has been extremely positive and we can't wait for you to try it.

We will be pushing out more and more features for this offering, as well as building a community of data scientists and applied AI professionals who contribute technical content and tutorials. For example, Felipe Ducau (a student of deep-learning pioneer Yann LeCun) recently wrote the widely read and reposted “Autovariational Autoencoders in Pytorch”. Lily Hu, during her time in the Insight AI Fellowship, created an algorithm to separate overlapping chromosomes in medical images, solving an outstanding problem from the Artificial Intelligence Open Network (AI-ON).

To get started with your own ML-in-a-box setup, sign up here.

Subscribe to our blog to get all the latest announcements.

<3 from Paperspace