TensorFlow is one of the most popular frameworks used for deep learning projects and is approaching a major new release: TensorFlow 2.0. Luckily, we don't have to wait for the official release. A beta version is available for experimentation on the official site, and you can also use the preconfigured template on Paperspace Gradient. In this tutorial, we will go over a few of the major new features in TensorFlow 2.0 and how to use them in deep learning projects: eager execution, the tf.function decorator, and the new distribution interface. This tutorial assumes familiarity with TensorFlow, the Keras API, and generative models.

To demonstrate what we can do with TensorFlow 2.0, we will implement a GAN model. The GAN paper we will be implementing here is MSG-GAN: Multi-Scale Gradient GAN for Stable Image Synthesis. In MSG-GAN, the generator produces images at several resolutions, and the discriminator receives all of these resolutions when deciding whether its input is real or fake. By having the generator produce images at multiple resolutions, we ensure that the intermediate features throughout the network are relevant to the output images.

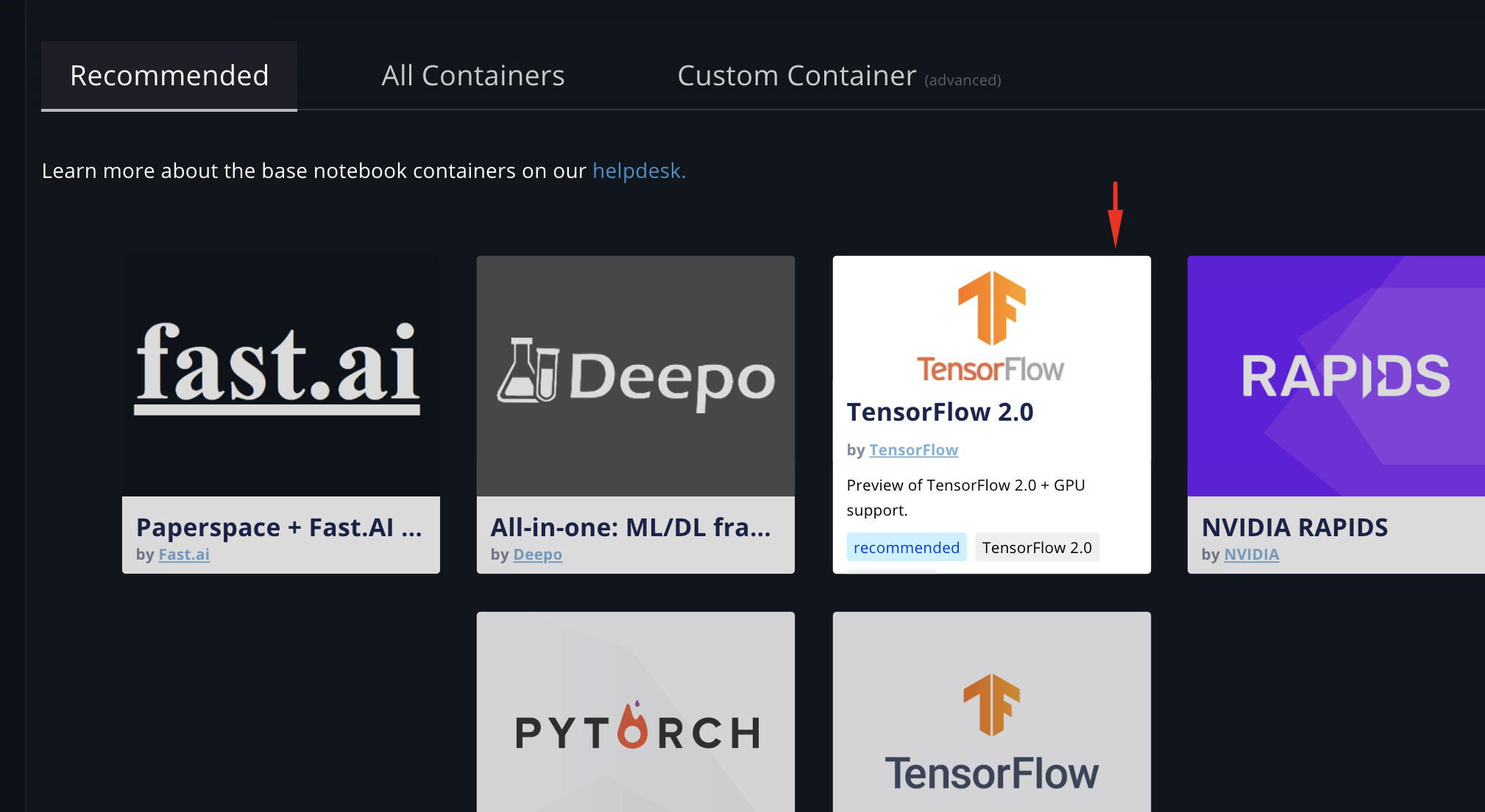


Dataset Setup

The first step in training a network is to get the data pipeline started. Here we will use the Fashion-MNIST dataset and the tf.data Dataset API to create a TensorFlow dataset.
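A minimal sketch of such a pipeline is below. It assumes the generator's three outputs are 7x7, 14x14 and 28x28 (the exact sizes are an assumption for Fashion-MNIST's 28x28 images), so each real image is also downsampled to those scales for the multi-scale discriminator. The function name `make_dataset`, the [-1, 1] pixel scaling, the area resizing and the shuffle buffer size are illustrative choices, not the original code.

```python
import numpy as np
import tensorflow as tf

def make_dataset(images, batch_size=64):
    """Turn (N, 28, 28) uint8 images into batches of (7x7, 14x14, 28x28) tuples."""
    # Add a channel axis and scale pixel values from [0, 255] to [-1, 1].
    images = images.astype("float32")[..., np.newaxis]
    images = (images - 127.5) / 127.5

    def to_multiscale(img):
        # Assumed scales: downsample the 28x28 image to 7x7 and 14x14.
        small = tf.image.resize(img, (7, 7), method="area")
        medium = tf.image.resize(img, (14, 14), method="area")
        return small, medium, img  # smallest to largest, like the generator outputs

    ds = tf.data.Dataset.from_tensor_slices(images)
    ds = ds.shuffle(buffer_size=10000)
    ds = ds.map(to_multiscale, num_parallel_calls=tf.data.experimental.AUTOTUNE)
    ds = ds.batch(batch_size, drop_remainder=True)
    return ds.prefetch(tf.data.experimental.AUTOTUNE)
```

With the real data, `(train_images, _), _ = tf.keras.datasets.fashion_mnist.load_data()` followed by `make_dataset(train_images)` would produce the training pipeline.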

Eager execution in TensorFlow 2.0 removes the need to explicitly initialize variables and create sessions. With eager execution we can now use TensorFlow in a more Pythonic way and debug as we go. This extends to the tf.data API: we can interact with the data pipeline directly by iterating over it.
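Because eager execution is on by default, the pipeline can be inspected with an ordinary Python loop, with no session or variable initializer involved. The random array below is a hypothetical stand-in for the Fashion-MNIST images, used only to keep the sketch self-contained.

```python
import numpy as np
import tensorflow as tf

# Eager execution is enabled by default in TensorFlow 2.x; no tf.Session is needed.
assert tf.executing_eagerly()

# Hypothetical stand-in data; swap in the real Fashion-MNIST arrays in practice.
images = np.random.rand(100, 28, 28, 1).astype("float32")
dataset = tf.data.Dataset.from_tensor_slices(images).batch(16)

# Iterate the pipeline like any Python iterable and inspect tensors directly.
for batch in dataset.take(1):
    print(batch.shape)          # (16, 28, 28, 1)
    print(batch.numpy().dtype)  # float32
    first_batch = batch
```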

Model Setup

Now that the dataset is built and verified, we can move on to creating the generator and discriminator models. In 2.0, Keras is the primary high-level API for building models, so the generator and discriminator are made like any other Keras model. Here we will make a standard generator model with a noise vector input and three output images, ordered from smallest to largest.
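A minimal sketch of such a generator with the Keras functional API follows. The noise size (128), the filter counts, the upsampling scheme and the 7x7/14x14/28x28 output sizes are all assumptions chosen to match Fashion-MNIST, not the original architecture. Each scale gets its own 1x1 convolution that turns the feature map into a single-channel image, so all three images are read from the same trunk of features.

```python
import tensorflow as tf
from tensorflow.keras import layers

def build_generator(noise_dim=128):
    noise = tf.keras.Input(shape=(noise_dim,))
    x = layers.Dense(7 * 7 * 128)(noise)
    x = layers.LeakyReLU(0.2)(x)
    x = layers.Reshape((7, 7, 128))(x)

    outputs = []
    for i, filters in enumerate((128, 64, 32)):
        if i > 0:
            x = layers.UpSampling2D()(x)  # double the resolution: 7 -> 14 -> 28
        x = layers.Conv2D(filters, 3, padding="same")(x)
        x = layers.LeakyReLU(0.2)(x)
        # 1x1 conv maps the features at this scale to a single-channel image.
        outputs.append(layers.Conv2D(1, 1, activation="tanh")(x))
    # Outputs are ordered smallest to largest: [7x7, 14x14, 28x28].
    return tf.keras.Model(noise, outputs, name="generator")
```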

Next we make the discriminator model.
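The discriminator has to accept all three images. A minimal sketch is below; it assumes the same 7x7/14x14/28x28 sizes and, as one simple way of combining scales (an assumption here, not necessarily the original code), concatenates each lower-resolution image with the intermediate features at the matching resolution. Its inputs are ordered smallest to largest so the generator's output list can be passed straight in.

```python
import tensorflow as tf
from tensorflow.keras import layers

def build_discriminator():
    small = tf.keras.Input(shape=(7, 7, 1))
    medium = tf.keras.Input(shape=(14, 14, 1))
    large = tf.keras.Input(shape=(28, 28, 1))

    x = layers.Conv2D(32, 3, padding="same")(large)
    x = layers.LeakyReLU(0.2)(x)
    x = layers.AveragePooling2D()(x)          # 28 -> 14

    x = layers.Concatenate()([x, medium])     # inject the 14x14 image
    x = layers.Conv2D(64, 3, padding="same")(x)
    x = layers.LeakyReLU(0.2)(x)
    x = layers.AveragePooling2D()(x)          # 14 -> 7

    x = layers.Concatenate()([x, small])      # inject the 7x7 image
    x = layers.Conv2D(128, 3, padding="same")(x)
    x = layers.LeakyReLU(0.2)(x)
    logit = layers.Dense(1)(layers.Flatten()(x))  # one real/fake logit per sample
    return tf.keras.Model([small, medium, large], logit, name="discriminator")
```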

Training with tf.functions

With the generator and discriminator models created, the last step before training is to build our training loop. We won't be using the Keras fit method here, so that we can show how custom training loops work with tf.function and distributed training. The tf.function decorator is one of the most interesting tools to come in TensorFlow 2.0. tf.function takes a native Python function and uses AutoGraph to trace it into a TensorFlow graph. This gives a performance boost over running the same function eagerly, since the graph avoids per-operation Python overhead and can take advantage of graph optimizations. There are a number of caveats for getting this performance boost. The largest drop in performance comes from passing Python objects as arguments instead of tensors: tf.function retraces the graph for every new Python value it sees. With this in mind, we can create our custom training loop and loss functions using the tf.function decorator.
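A minimal sketch of a tf.function training step is below. To stay self-contained it uses tiny hypothetical stand-in models with the same signatures as the generator and discriminator sketched earlier; the standard non-saturating binary cross-entropy GAN loss, the Adam settings and the noise size are assumptions, not the original loss functions.

```python
import tensorflow as tf
from tensorflow.keras import layers

NOISE_DIM = 128  # assumed latent size

# Hypothetical stand-in models: noise -> [7x7, 14x14, 28x28] images, images -> logit.
def tiny_generator():
    z = tf.keras.Input(shape=(NOISE_DIM,))
    x = layers.Reshape((7, 7, 8))(layers.Dense(7 * 7 * 8)(z))
    outs = []
    for i in range(3):
        if i > 0:
            x = layers.UpSampling2D()(x)
        outs.append(layers.Conv2D(1, 1, activation="tanh")(x))
    return tf.keras.Model(z, outs)

def tiny_discriminator():
    ins = [tf.keras.Input(shape=(s, s, 1)) for s in (7, 14, 28)]
    flat = [layers.Flatten()(t) for t in ins]
    return tf.keras.Model(ins, layers.Dense(1)(layers.Concatenate()(flat)))

generator, discriminator = tiny_generator(), tiny_discriminator()
g_opt = tf.keras.optimizers.Adam(1e-4)
d_opt = tf.keras.optimizers.Adam(1e-4)
bce = tf.keras.losses.BinaryCrossentropy(from_logits=True)

def discriminator_loss(real_logits, fake_logits):
    # Real samples should score 1, generated samples 0.
    return (bce(tf.ones_like(real_logits), real_logits)
            + bce(tf.zeros_like(fake_logits), fake_logits))

def generator_loss(fake_logits):
    # The generator wants its samples to be scored as real.
    return bce(tf.ones_like(fake_logits), fake_logits)

@tf.function  # traced into a graph on the first call, then reused
def train_step(real_images):
    # real_images: tuple of three tensors, smallest resolution first.
    noise = tf.random.normal([tf.shape(real_images[0])[0], NOISE_DIM])
    with tf.GradientTape() as g_tape, tf.GradientTape() as d_tape:
        fake_images = generator(noise, training=True)
        real_logits = discriminator(list(real_images), training=True)
        fake_logits = discriminator(fake_images, training=True)
        g_loss = generator_loss(fake_logits)
        d_loss = discriminator_loss(real_logits, fake_logits)
    g_grads = g_tape.gradient(g_loss, generator.trainable_variables)
    d_grads = d_tape.gradient(d_loss, discriminator.trainable_variables)
    g_opt.apply_gradients(zip(g_grads, generator.trainable_variables))
    d_opt.apply_gradients(zip(d_grads, discriminator.trainable_variables))
    return g_loss, d_loss
```

Passing each batch as tensors keeps the traced graph reusable across steps; passing something like a Python int step counter instead would trigger a new trace for every new value.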

Distribution in 2.0

After our custom training loop is established, it's time to distribute it over multiple GPUs. In my opinion, the new strategy-focused distribution API is the most exciting feature coming in 2.0. It is also the most experimental: not all distribution features are currently supported in every scenario. Using the distribution API is simple and requires only a handful of modifications to the current code. To begin, we have to pick the strategy we want to use for distributed training. Here we will use MirroredStrategy, which replicates the model onto every available GPU on a single machine and keeps the copies in sync during training. There are other strategies in the works, but at the time of writing this is the only strategy supported for custom training loops. Using a strategy is simple: pick the strategy, then create the models and optimizers inside its scope.
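A minimal sketch of picking a strategy and building inside its scope follows. The small Dense model is a hypothetical placeholder for the generator and discriminator; the point is that variables created inside `strategy.scope()` (model weights and optimizer state) are mirrored across the replicas. On a machine without GPUs, MirroredStrategy falls back to a single CPU replica, which is handy for testing.

```python
import tensorflow as tf
from tensorflow.keras import layers

# Uses all visible GPUs on this machine (a single CPU replica if there are none).
strategy = tf.distribute.MirroredStrategy()
print("Number of replicas:", strategy.num_replicas_in_sync)

with strategy.scope():
    # Variables created here are mirrored on every replica.
    model = tf.keras.Sequential([tf.keras.Input(shape=(128,)), layers.Dense(1)])
    optimizer = tf.keras.optimizers.Adam(1e-4)
```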

This interface is easy to use, and if we were training with the Keras fit method, this would be the only change needed. Because we are using a custom training loop, we still have a few modifications to make. The dataset needs to be adapted first. Each batch the dataset produces is split across the GPUs: a dataset with a batch size of 256 distributed over 4 GPUs will place batches of 64 onto each GPU. We therefore set the dataset's batch size to the global batch size (the per-GPU batch size times the number of GPUs) instead of the batch size we want per GPU. An experimental function also needs to be used to prepare the dataset for distribution.
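A minimal sketch of the dataset changes, assuming a per-GPU batch size of 64 and using `strategy.experimental_distribute_dataset` as the experimental preparation function. The random array is a hypothetical stand-in for the training images.

```python
import numpy as np
import tensorflow as tf

strategy = tf.distribute.MirroredStrategy()
PER_REPLICA_BATCH_SIZE = 64  # assumed per-GPU batch size
# Batch with the global size, e.g. 64 per GPU x 4 GPUs -> 256 per step.
GLOBAL_BATCH_SIZE = PER_REPLICA_BATCH_SIZE * strategy.num_replicas_in_sync

images = np.random.rand(1024, 28, 28, 1).astype("float32")  # hypothetical stand-in data
dataset = (tf.data.Dataset.from_tensor_slices(images)
           .batch(GLOBAL_BATCH_SIZE, drop_remainder=True))

# Each global batch is split into one per-replica batch per GPU.
dist_dataset = strategy.experimental_distribute_dataset(dataset)
```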

The last step is to wrap the train step function in a distributed train function. Here we have to use another experimental function, which runs the step on every GPU. This one requires us to pass it a plain (non-tf.function) step function to distribute.
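A minimal end-to-end sketch of the distributed step is below, under several assumptions: tiny hypothetical stand-in models, a per-GPU batch of 32, random multi-scale data, and a binary cross-entropy GAN loss. The per-replica function is a plain Python function; only the outer `distributed_train_step` carries `@tf.function`. The sketch uses `strategy.run`, the current name of TensorFlow 2.0's `strategy.experimental_run_v2`. Because each replica sees only part of the batch, per-example losses are averaged over the global batch with `tf.nn.compute_average_loss`, and the per-replica results are summed.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

strategy = tf.distribute.MirroredStrategy()
NOISE_DIM = 128                                          # assumed latent size
GLOBAL_BATCH_SIZE = 32 * strategy.num_replicas_in_sync   # assumed 32 per GPU

with strategy.scope():
    # Hypothetical stand-in models with the MSG-GAN-style signatures.
    z = tf.keras.Input(shape=(NOISE_DIM,))
    x = layers.Reshape((7, 7, 8))(layers.Dense(7 * 7 * 8)(z))
    fakes = [layers.Conv2D(1, 1, activation="tanh")(x)]
    for _ in range(2):
        x = layers.UpSampling2D()(x)
        fakes.append(layers.Conv2D(1, 1, activation="tanh")(x))
    generator = tf.keras.Model(z, fakes)
    ins = [tf.keras.Input(shape=(s, s, 1)) for s in (7, 14, 28)]
    discriminator = tf.keras.Model(
        ins, layers.Dense(1)(layers.Concatenate()([layers.Flatten()(t) for t in ins])))
    g_opt = tf.keras.optimizers.Adam(1e-4)
    d_opt = tf.keras.optimizers.Adam(1e-4)

def per_example_bce(labels, logits):
    # Unreduced loss: one value per example, shape (batch,).
    return tf.reduce_mean(
        tf.nn.sigmoid_cross_entropy_with_logits(labels=labels, logits=logits), axis=-1)

def step_fn(real_images):
    # Plain Python function: strategy.run executes it once per replica.
    noise = tf.random.normal([tf.shape(real_images[0])[0], NOISE_DIM])
    with tf.GradientTape() as g_tape, tf.GradientTape() as d_tape:
        fake_images = generator(noise, training=True)
        real_logits = discriminator(list(real_images), training=True)
        fake_logits = discriminator(fake_images, training=True)
        # Divide by the *global* batch size so summed replica gradients are correct.
        d_loss = tf.nn.compute_average_loss(
            per_example_bce(tf.ones_like(real_logits), real_logits)
            + per_example_bce(tf.zeros_like(fake_logits), fake_logits),
            global_batch_size=GLOBAL_BATCH_SIZE)
        g_loss = tf.nn.compute_average_loss(
            per_example_bce(tf.ones_like(fake_logits), fake_logits),
            global_batch_size=GLOBAL_BATCH_SIZE)
    g_grads = g_tape.gradient(g_loss, generator.trainable_variables)
    d_grads = d_tape.gradient(d_loss, discriminator.trainable_variables)
    g_opt.apply_gradients(zip(g_grads, generator.trainable_variables))
    d_opt.apply_gradients(zip(d_grads, discriminator.trainable_variables))
    return g_loss, d_loss

@tf.function
def distributed_train_step(dist_inputs):
    g_loss, d_loss = strategy.run(step_fn, args=(dist_inputs,))
    # Sum per-replica losses to get the loss over the global batch.
    return (strategy.reduce(tf.distribute.ReduceOp.SUM, g_loss, axis=None),
            strategy.reduce(tf.distribute.ReduceOp.SUM, d_loss, axis=None))

# Hypothetical multi-scale data, batched with the global batch size.
real = np.random.uniform(-1, 1, size=(GLOBAL_BATCH_SIZE * 4, 28, 28, 1)).astype("float32")
dataset = (tf.data.Dataset.from_tensor_slices(real)
           .map(lambda img: (tf.image.resize(img, (7, 7)), tf.image.resize(img, (14, 14)), img))
           .batch(GLOBAL_BATCH_SIZE, drop_remainder=True))
for batch in strategy.experimental_distribute_dataset(dataset):
    g_loss, d_loss = distributed_train_step(batch)
```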

Now when I run with distribution, I get the following numbers on GeForce GTX 1070 GPUs:

- 1 GPU: average 200.26 ms/image

- 3 GPU: average 39.27 ms/image

With 3 GPUs we would expect roughly a 3x speedup, not the roughly 5x (200.26 / 39.27 ≈ 5.1) measured here, but hey, it is in beta.

We have now used eager execution to inspect the data pipeline, tf.function to speed up training, and the new distribution API with a custom training loop and loss function.

Have fun exploring and working with TensorFlow 2.0. As a reminder, you can launch a GPU-enabled instance with TensorFlow 2.0 and all the necessary libraries and drivers (CUDA, cuDNN, etc.) on Gradient in just a few clicks.