Advanced deep learning methods are achieving exceptional results on specific ML problems, such as describing images and translating text from one language to another. What’s most interesting is that a single deep learning model can learn word meaning and perform language tasks end to end, reducing the need for a pipeline of specialized, hand-crafted language processing steps.

In recent years, a variety of deep learning models have been applied to natural language processing (NLP) to improve, accelerate, and automate the text analytics functions and NLP features. Moreover, these models and methods are offering superior solutions to convert unstructured text into valuable data and insights.

Read on to discover how deep learning methods are being applied in the field of natural language processing, achieving state-of-the-art results for most language problems.

1. Tokenization and Text Classification

Tokenization involves chopping text into pieces (or tokens) that machines can process. English-language documents are relatively easy to tokenize because words are separated by spaces. However, many other languages present additional challenges. For instance, languages written with logographic characters, such as Chinese (Mandarin and Cantonese) and Japanese text written in Kanji, can be challenging because they do not put spaces between words.

But all languages follow certain rules and patterns, and through deep learning we can train models to learn those patterns and perform tokenization. For this reason, many AI and deep learning courses encourage aspiring DL professionals to experiment with training DL models to identify and understand these patterns in text.
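One common way to turn tokenization into a learning problem is to tag every character with its position inside a word, so that a model only has to predict one label per character. The sketch below assumes the B/M/E/S scheme (begin, middle, end, single) often used for Chinese word segmentation; the article does not name a specific scheme, and the helper names `words_to_tags` and `tags_to_words` are made up for illustration. It shows only how training labels are built and decoded, not the model itself.

```python
def words_to_tags(words):
    """Turn a list of segmented words into one B/M/E/S tag per character."""
    tags = []
    for word in words:
        if len(word) == 1:
            tags.append("S")  # single-character word
        else:
            # B = begin, M = middle (zero or more), E = end
            tags.extend(["B"] + ["M"] * (len(word) - 2) + ["E"])
    return tags


def tags_to_words(text, tags):
    """Rebuild words from raw text plus per-character tags."""
    words, current = [], ""
    for char, tag in zip(text, tags):
        current += char
        if tag in ("E", "S"):  # a word ends at this character
            words.append(current)
            current = ""
    if current:  # tolerate a tag sequence that stops mid-word
        words.append(current)
    return words


# English: whitespace already marks the word boundaries.
english_tokens = "deep learning helps".split()

# Chinese: no spaces, so the boundaries have to be predicted.
segmented = ["我", "喜欢", "自然语言"]  # hand-segmented toy example
text = "".join(segmented)
tags = words_to_tags(segmented)
```

A sequence model such as an RNN would be trained to predict `tags` from `text`; `tags_to_words` then converts its predictions back into tokens.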

DL models can also classify documents and predict their theme. For instance, deep convolutional neural networks (CNNs) and recurrent neural networks (RNNs) can automatically classify the tone and sentiment of the source text using word embeddings, which represent each word as a vector of numbers. Many social media platforms deploy CNN- and RNN-based analysis systems to identify and flag spam content. Text classification is also applied in web search, language identification, and readability assessment.
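To make the idea of a CNN over word embeddings concrete, here is a minimal sketch in plain Python. Everything numeric in it is an assumption: the two-dimensional embeddings, the two filters, and the output weights are hand-picked toy values rather than learned parameters, and the names `embed`, `conv1d_max`, and `classify` are hypothetical. It shows the three usual steps: look up a vector per word, slide a filter over neighbouring words, and keep the strongest response (max pooling) as a feature.

```python
import math

# Toy 2-dimensional embeddings (hypothetical values, not from a trained model).
EMBEDDINGS = {"great": [1.0, 0.0], "awful": [-1.0, 0.0], "movie": [0.0, 1.0]}
UNKNOWN = [0.0, 0.0]

# Hand-picked filters of width 2: one row of weights per word in the window.
POSITIVE_FILTER = [[1.0, 0.0], [0.0, 1.0]]   # responds to "great movie"
NEGATIVE_FILTER = [[-1.0, 0.0], [0.0, 1.0]]  # responds to "awful movie"
OUTPUT_WEIGHTS = [1.0, -1.0]                 # hand-picked linear layer


def embed(tokens):
    """Look up one vector per token; unknown words map to zeros."""
    return [EMBEDDINGS.get(token, UNKNOWN) for token in tokens]


def conv1d_max(vectors, kernel):
    """Slide the filter over consecutive word vectors and keep the max response."""
    width = len(kernel)
    responses = []
    for start in range(len(vectors) - width + 1):
        window = vectors[start:start + width]
        response = sum(
            weight * value
            for kernel_row, vector in zip(kernel, window)
            for weight, value in zip(kernel_row, vector)
        )
        responses.append(response)
    return max(responses, default=0.0)  # 0.0 if the text is shorter than the filter


def classify(tokens):
    """Combine the pooled filter responses and squash them into a probability."""
    vectors = embed(tokens)
    features = [conv1d_max(vectors, POSITIVE_FILTER),
                conv1d_max(vectors, NEGATIVE_FILTER)]
    score = sum(w * f for w, f in zip(OUTPUT_WEIGHTS, features))
    probability = 1.0 / (1.0 + math.exp(-score))  # sigmoid
    return "positive" if probability > 0.5 else "negative"
```

In a real system the embeddings, filters, and output weights would all be learned from labeled text instead of being set by hand.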

2. Generating Captions for Images

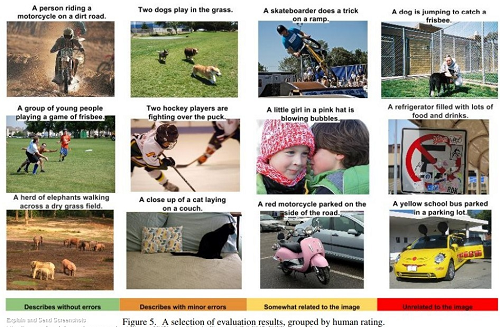

Automatically describing the content of an image in natural sentences is a challenging task. A captioning system must not only recognize the objects in the image but also capture their attributes and how they relate to each other, which requires a visual recognition model. This semantic knowledge then has to be expressed in natural language, which requires a language model too.

Aligning the visual and semantic elements is core to generating accurate image captions. DL models can help automatically describe the content of an image in well-formed English sentences, which can make online content more accessible to visually impaired people.

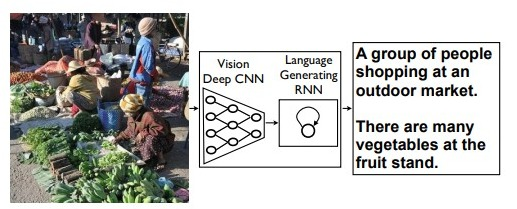

Google’s Neural Image Caption Generator (NIC) is based on a network consisting of a vision CNN followed by a language-generating RNN. The model automatically views images and generates descriptions in plain English.
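The control flow of such a vision-plus-language pipeline can be sketched as a greedy decoding loop. This is a heavily simplified illustration and not Google's implementation: the image vector is assumed to come from some CNN, a plain tanh RNN stands in for the recurrent language model, the image vector simply seeds the initial state, and all weights are random, so the words it emits are meaningless. The vocabulary and every function name are hypothetical.

```python
import math
import random

VOCAB = ["<start>", "<end>", "a", "dog", "on", "grass"]  # toy vocabulary
HIDDEN = 4
rng = random.Random(0)  # fixed seed so the untrained toy weights are reproducible


def random_matrix(rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


# Untrained stand-ins for parameters that would normally be learned.
W_embed = random_matrix(len(VOCAB), HIDDEN)   # one embedding row per word
W_hidden = random_matrix(HIDDEN, HIDDEN)
W_input = random_matrix(HIDDEN, HIDDEN)
W_output = random_matrix(len(VOCAB), HIDDEN)


def rnn_step(state, word_index):
    """One RNN step: tanh(W_hidden @ state + W_input @ embedding of previous word)."""
    recurrent = matvec(W_hidden, state)
    inputs = matvec(W_input, W_embed[word_index])
    return [math.tanh(r + i) for r, i in zip(recurrent, inputs)]


def generate_caption(image_features, max_len=10):
    """Greedy decoding: start from the image, emit the best-scoring word each step."""
    state = [math.tanh(x) for x in image_features]  # image vector seeds the state
    word = VOCAB.index("<start>")
    caption = []
    for _ in range(max_len):
        state = rnn_step(state, word)
        scores = matvec(W_output, state)
        word = max(range(len(VOCAB)), key=lambda i: scores[i])  # greedy choice
        if VOCAB[word] == "<end>":
            break
        caption.append(VOCAB[word])
    return caption


# A made-up 4-dimensional vector standing in for the CNN's image features.
caption = generate_caption([0.5, -0.2, 0.1, 0.9])
```

With trained weights, the same loop would produce a sentence describing the image; production systems typically also replace the greedy choice with beam search.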

3. Speech Recognition

DL is increasingly used to build and train neural networks that transcribe audio input and perform large-vocabulary speech recognition and speech separation tasks. These models and methods are used in signal processing, phonetics, and word recognition, the core areas of speech recognition.

For instance, DL models can be trained to attribute each voice to the corresponding speaker and respond to each speaker separately. Further, CNN-based speech recognition systems can transcribe raw speech into text, which can then be analyzed for insights about the speaker.

4. Machine Translation

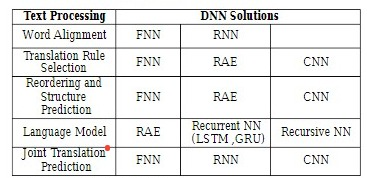

Machine translation (MT) is a core task in natural language processing that investigates the use of computers to translate between languages without human intervention. Deep learning models have only recently been applied to it, an approach known as neural machine translation. Compared with traditional MT, deep neural networks (DNNs) offer more accurate translation and better performance. RNNs, feed-forward neural networks (FNNs), recursive auto-encoders (RAEs), and long short-term memory (LSTM) networks are used to train the machine to convert a sentence from the source language to the target language accurately.

DNN-based solutions are used for sub-tasks such as word alignment, reordering rules, language modeling, and joint translation prediction, so that sentences can be translated without a large database of hand-written rules.

5. Question Answering (QA)

Question answering systems try to answer a query posed in the form of a question. Such systems handle definition questions, biographical questions, and multilingual questions, among other types of questions asked in natural language.

Creating a fully functional question answering system has been one of the popular challenges for researchers in the DL field. Although deep learning algorithms made decent progress in text and image classification, for a long time they were unable to solve tasks that involve logical reasoning, such as question answering. In recent times, however, deep learning models have been improving the performance and accuracy of QA systems.

Recurrent neural network models, for instance, are able to correctly answer paragraph-length questions where traditional approaches fail. More importantly, the DL model is trained in such a way that there’s no need to build the system using linguistic knowledge like creating a semantic parser.

6. Document Summarization

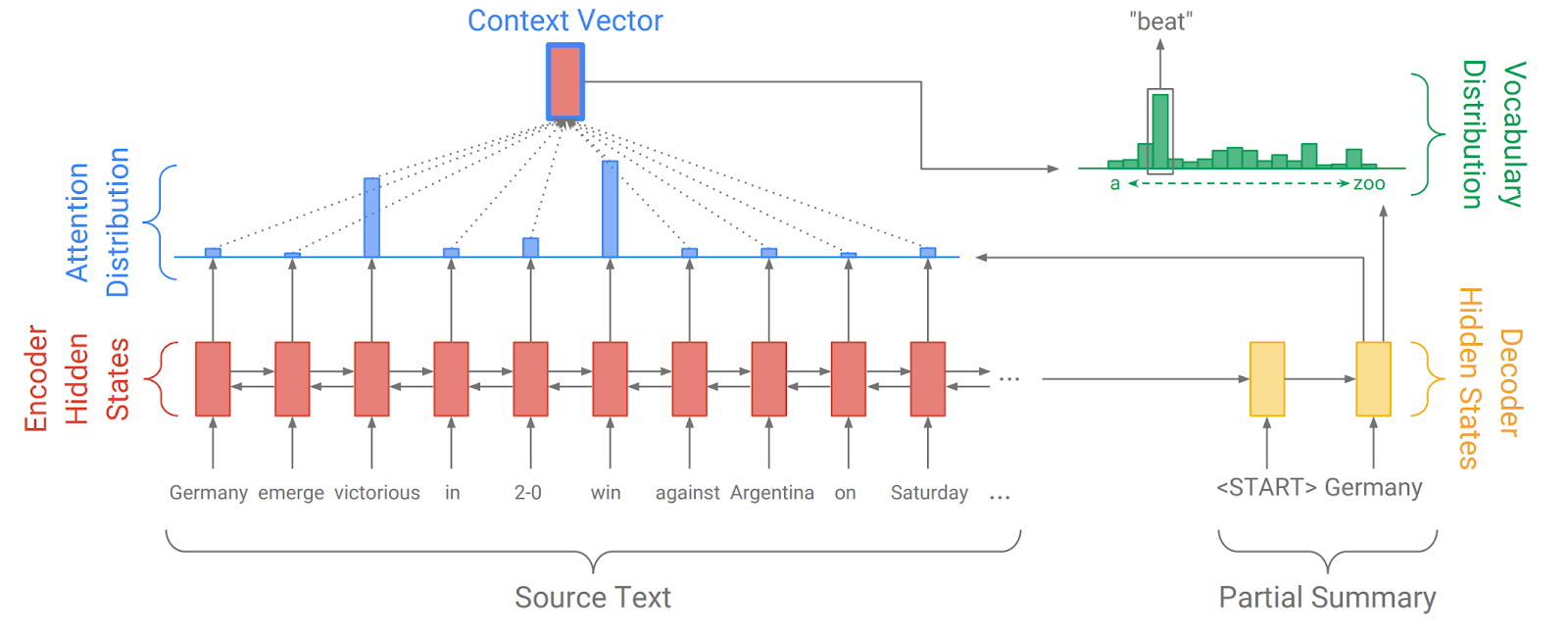

The increasing volume of data available today is making document summarization critical. Recent advances in sequence-to-sequence models have made it easier for DL practitioners to develop good text summarization models. Both types of document summarization, extractive and abstractive, can be achieved with a sequence-to-sequence model with attention. Refer to the diagram from the Pointer Generator blog post by Abigail See.

Here, the encoder RNN reads the source text, producing a sequence of encoder hidden states. Next, at each step the decoder RNN receives the previous word of the summary as input and uses it to update the decoder hidden state. The decoder hidden state is used to compute an attention distribution over the source words, and the attention-weighted sum of the encoder hidden states gives the context vector. Finally, the context vector and the decoder hidden state together produce the output word distribution. Because the decoder is able to freely generate words in any order, this sequence-to-sequence model is a powerful solution to abstractive summarization.
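The attention step in a sequence-to-sequence model can be sketched in a few lines. In such models the context vector is an attention-weighted sum of the encoder hidden states, computed fresh at every decoder step. The version below is a simplification under stated assumptions: it scores each source position with a plain dot product, whereas the pointer-generator model uses a learned additive scoring function, and the hidden states are toy numbers chosen by hand. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into a probability distribution."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(encoder_states, decoder_state):
    """Return (attention distribution, context vector) for one decoder step."""
    # Score each source position against the current decoder hidden state.
    scores = [sum(h * s for h, s in zip(enc, decoder_state))
              for enc in encoder_states]
    attention = softmax(scores)
    # Context vector: attention-weighted sum of the encoder hidden states.
    dim = len(encoder_states[0])
    context = [sum(a * enc[d] for a, enc in zip(attention, encoder_states))
               for d in range(dim)]
    return attention, context


# Toy values: one 2-dimensional encoder hidden state per source word.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [2.0, 0.0]
attention, context = attend(encoder_states, decoder_state)
```

Here the first and third source words receive equal, higher attention because their hidden states align best with the decoder state, so the context vector leans toward them. In a full model, the context vector and the decoder hidden state are then fed through an output layer to score every word in the vocabulary.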

Summing Up

The field of language modeling is rapidly shifting from statistical language modeling to deep learning methods and neural networks. This is because DL models and methods have delivered superior performance on complex NLP tasks. Thus, deep learning models seem like a good approach for NLP tasks that require a deep understanding of the text, such as text classification, machine translation, question answering, summarization, and natural language inference.

This post will help you appreciate the growing role of DL models and methods in natural language processing.

(Feature Image Source: Pixabay)