Today we're introducing a new ML Showcase project for aitextgen, a Python library for training and generating text with GPT-2 and with GPT Neo, a GPT-3-style architecture.

In this ML Showcase entry, we'll train a model to generate text based on a sample of Shakespeare that we feed it as input. The entry is a collection of notebooks forked from the original aitextgen repo and adapted to run in Paperspace Gradient notebooks.

In general, generated text starts out relatively incoherent and gains clarity with each epoch of training. Results are already quite good after only ~15 minutes of training!
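For readers who want a feel for the library before opening the notebooks, here is a minimal sketch of fine-tuning on a Shakespeare text file and then sampling from the result. It assumes aitextgen is installed, a GPU is available, and the sample text is saved as `input.txt`. The wrapper function `fine_tune_and_generate`, the filename, the `ROMEO:` prompt, and the step counts are hypothetical illustration choices, not values taken from the showcase notebooks; `aitextgen`, `train`, and `generate` are the library's own calls.

```python
def fine_tune_and_generate(file_name: str = "input.txt",
                           num_steps: int = 3000,
                           prompt: str = "ROMEO:") -> list:
    """Hypothetical helper: fine-tune the default GPT-2 model on a text file, then sample."""
    # Imported inside the function so this file can be loaded without aitextgen installed.
    from aitextgen import aitextgen

    # With no model argument, aitextgen loads its default 124M GPT-2 model;
    # to_gpu=True moves it onto the GPU.
    ai = aitextgen(to_gpu=True)

    # Fine-tune on the raw text file, printing sample text and saving a
    # checkpoint every 1,000 steps so you can watch coherence improve.
    ai.train(file_name,
             num_steps=num_steps,
             generate_every=1000,
             save_every=1000,
             batch_size=1)

    # Return three continuations of the prompt as a list of strings.
    return ai.generate(n=3, prompt=prompt, max_length=100, return_as_list=True)
```

Calling `fine_tune_and_generate(num_steps=500)` would run a shorter session. The periodic samples printed by `generate_every` are where you would see the text become more coherent as training goes on.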

aitextgen is compatible with every GPU that Paperspace provides, including Free GPU instances! While you're running this project, you can use the instance selector in the Gradient IDE to switch to a different CPU or GPU instance at any time.

Now let's dive in!


Forking aitextgen to run in a Gradient notebook

The first thing we need to do is copy the aitextgen notebooks over to our Gradient console.

Before you do anything, though, you'll need to create a free Paperspace account if you don't already have one. A free account entitles you to run a single free CPU or free GPU instance at a time. (Full limitations are listed here.)

Next, we'll need to fork the aitextgen notebook from the ML Showcase entry which is linked here:


After you select Launch Project, you should be taken to a read-only copy of the notebook, which you can then fork by selecting Run.

Note: If you are not signed in or have yet to sign up for Paperspace, you will be required to make an account.

Select Run to fork a new version into your Paperspace account.

Alternatively, if you'd like to explore the repo before running it, you can take a look at the notebooks you'll be running here:

Start training with aitextgen

Once you've forked the notebook, you'll see six separate .ipynb files included in the ML Showcase entry:

- Train a Custom GPT-2 Model + Tokenizer w/ GPU

- Train a GPT-2 (or GPT Neo) Text-Generating Model w/ GPU

- Generation Hello World

- Training Hello World

- Hacker News Demo

- Reddit Demo

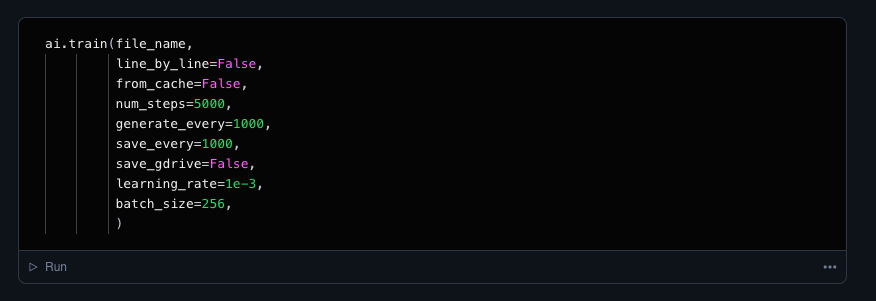

Each notebook is self-contained and largely self-explanatory, with configuration options given inline. At first, you may want to set options like num_steps to smaller values to get a sense of how long a process takes on your instance. You can always scale up later!
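Because training runs for a fixed num_steps, a short pilot run gives a rough estimate of how long a longer run will take. Below is a minimal sketch, assuming the time per step stays roughly constant. If the pilot timing includes one-off costs such as downloading the model or GPU warm-up, the estimate will lean high. The function name and the pilot numbers are hypothetical.

```python
def estimate_full_run_seconds(pilot_seconds: float,
                              pilot_steps: int,
                              target_steps: int) -> float:
    """Linearly extrapolate the runtime of a target_steps run from a shorter pilot run."""
    if pilot_steps <= 0:
        raise ValueError("pilot_steps must be a positive number of steps")
    seconds_per_step = pilot_seconds / pilot_steps
    return seconds_per_step * target_steps


# Hypothetical pilot: 100 steps took 50 seconds on the current instance.
estimate = estimate_full_run_seconds(50.0, 100, 3000)
print(f"~{estimate / 60:.0f} minutes for 3000 steps")  # ~25 minutes for 3000 steps
```

One way to get `pilot_seconds` is to wrap a small training run in `time.perf_counter()` calls. Rerun the pilot after switching instances, since per-step time depends on the GPU.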

A note on storage

By default, when you run !wget to download a dataset into your notebook, or when you kick off a training run that generates artifacts, files are saved to the top level of the notebook file directory, located at /notebooks.

If you'd like to save data to another location, you'll need to specify that in the notebooks by changing your working directory.
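Inside a notebook, one way to do this is to create the target folder and switch into it with Python's `os.chdir` before running !wget or starting training. Shell commands and relative paths then resolve inside that folder. This is a minimal sketch; the helper name `use_data_dir` and the `shakespeare` subfolder are hypothetical, and `/notebooks` is the default location described above.

```python
import os
from pathlib import Path


def use_data_dir(subdir: str, root: str = "/notebooks") -> Path:
    """Hypothetical helper: create root/subdir if needed and make it the working directory."""
    target = Path(root) / subdir
    target.mkdir(parents=True, exist_ok=True)  # no error if the folder already exists
    os.chdir(target)  # later !wget calls and relative paths resolve here
    return target
```

For example, running `use_data_dir("shakespeare")` in a cell means a following `!wget` saves into `/notebooks/shakespeare`. To return to the default location, call `os.chdir("/notebooks")`.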

Terminals and other capabilities

If you ever need terminal access or access to JupyterLab while exploring this project, press the Jupyter button in the left sidebar. From there you can open a terminal, upload folders of data, and so forth!

Next steps

So now that you're up and running with aitextgen on Gradient, what's next?

- Explore more ML Showcase projects

- Submit a pull request to our public fork of the aitextgen library

- Write an even better tutorial to share with the Paperspace community

- Let us know what tutorials you'd like to see next!