Introduction

A language model can be thought of as a probability distribution over word sequences. Its main purpose is to estimate the likelihood of a word sequence or to predict the next word in a sequence. Many natural language processing (NLP) tasks rely on language models, including text generation, information retrieval, and speech recognition. N-gram models, RNNs, and Transformer-based models such as GPT-3 are all examples of language models. In this tutorial, we will build a language model using PyTorch with the WikiText-2 dataset and a Transformer. WikiText-2 contains roughly 2 million training tokens taken from Good and Featured articles on Wikipedia (its larger sibling, WikiText-103, contains over 100 million).
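
To make the idea of "a probability distribution over word sequences" concrete, here is a minimal sketch of a bigram model built from raw counts. The two-sentence corpus and the helper names (next_word_probs, sequence_prob) are made up for illustration and are not part of the tutorial's code.

```python
from collections import Counter, defaultdict

# Toy corpus, invented for illustration only.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
]

# Count how often each word follows each context word.
bigram_counts = defaultdict(Counter)
for sentence in corpus:
    tokens = ["<s>"] + sentence.split()
    for prev, nxt in zip(tokens, tokens[1:]):
        bigram_counts[prev][nxt] += 1


def next_word_probs(prev):
    """Return P(next | prev) estimated from raw counts."""
    counts = bigram_counts[prev]
    total = sum(counts.values())
    return {word: c / total for word, c in counts.items()}


def sequence_prob(sentence):
    """Chain-rule probability of a sentence under the bigram model."""
    tokens = ["<s>"] + sentence.split()
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        prob *= next_word_probs(prev).get(nxt, 0.0)
    return prob


print(next_word_probs("the"))                   # four continuations, 0.25 each
print(sequence_prob("the cat sat on the mat"))  # 0.0625
```

A Transformer language model plays the same role as next_word_probs, except that it conditions on the whole preceding context rather than on a single previous word.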

Import necessary libraries

import math
import torch
import torch.nn as nn
import torch.optim as optim
from torchtext.datasets import WikiText2
from torchtext.data.utils import get_tokenizer
from torchtext.vocab import build_vocab_from_iterator
from torch.utils.data import DataLoader

The snippet above imports the libraries and modules needed to work with text data in PyTorch: the get_tokenizer function from torchtext.data.utils, the build_vocab_from_iterator function from torchtext.vocab, and the WikiText2 dataset from torchtext.datasets. The DataLoader class from torch.utils.data is also imported, although the batching in this tutorial is done by hand.

Install portalocker and torchdata

- Portalocker: A cross-platform file-locking library that works on Windows as well as Linux and macOS. The torchdata pipelines that load the dataset rely on it, so it must be installed first.

- torchdata: Included in the wider PyTorch ecosystem, this package contains useful tools for manipulating data in PyTorch.

pip install portalocker
pip install torchdata

Load and preprocess the dataset

This code prepares the WikiText2 dataset for use in a language model's training, validation, and testing phases.

# Initialize the tokenizer
tokenizer = get_tokenizer("basic_english")

# Load the WikiText2 dataset
train_iter, val_iter, test_iter = WikiText2()

# Define a function to yield tokens from the dataset
def yield_tokens(data_iter):
    for text in data_iter:
        yield tokenizer(text)

# Build the vocabulary from the training dataset
vocab = build_vocab_from_iterator(yield_tokens(train_iter), specials=["<unk>"])
vocab.set_default_index(vocab["<unk>"])

# Define a function to process the raw text and convert it to tensors
def data_process(raw_text_iter):
    data = [torch.tensor([vocab[token] for token in tokenizer(item)], dtype=torch.long) for item in raw_text_iter]
    return torch.cat(tuple(filter(lambda t: t.numel() > 0, data)))

# Process the train, validation, and test datasets
train_data = data_process(train_iter)
val_data = data_process(val_iter)
test_data = data_process(test_iter)

# Define a function to batchify the data
def batchify(data, bsz):
    nbatch = data.size(0) // bsz
    data = data.narrow(0, 0, nbatch * bsz)
    data = data.view(bsz, -1).t().contiguous()
    return data

# Set the batch sizes and batchify the data
batch_size = 20
eval_batch_size = 10
train_data = batchify(train_data, batch_size)
val_data = batchify(val_data, eval_batch_size)
test_data = batchify(test_data, eval_batch_size)

- We initialize the tokenizer by using the "basic_english" tokenizer that can be found in torchtext.data.utils.

- We load the WikiText2 dataset as training, validation, and testing iterators.

- The yield_tokens function is a generator that accepts an iterator over raw text lines. It passes each line through the tokenizer and yields the resulting list of tokens. Because it tokenizes one item at a time rather than loading all of the text into memory at once, it stays memory-efficient, which is useful when dealing with large datasets.

- We construct the vocabulary from the training tokens, with a special <unk> token for unknown words, and set <unk> as the default index so that any out-of-vocabulary word maps to it.

- Define a function known as data_process that is responsible for processing the raw text data and converting it into tensors.

- Perform the necessary steps to process the data for training, validation, and testing.

- The batchify function arranges the data into batches. It takes two arguments: data, the tensor of preprocessed token indices, and bsz, the batch size. It trims any leftover tokens that do not divide evenly, then reshapes the token stream into a tensor of shape [len(data) // bsz, bsz], so that each of the bsz columns is a contiguous stretch of the original text. Batching speeds up training because the network processes the columns in parallel, which matters most on graphics processing units (GPUs).

- Build up your data in batches for use in training, validation, and testing
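
The layout that batchify produces is easier to see on a tiny input. The sketch below repeats the tutorial's batchify logic and applies it to a made-up stream of 26 integers standing in for token indices.

```python
import torch


def batchify(data, bsz):
    # Same logic as the tutorial's batchify: trim, then lay out in bsz columns.
    nbatch = data.size(0) // bsz
    data = data.narrow(0, 0, nbatch * bsz)
    return data.view(bsz, -1).t().contiguous()


# Stand-in for a stream of 26 token ids (invented data).
stream = torch.arange(26)
batched = batchify(stream, bsz=4)

print(batched.shape)           # torch.Size([6, 4])
print(batched[:, 0].tolist())  # [0, 1, 2, 3, 4, 5]
print(batched[0].tolist())     # [0, 6, 12, 18]
```

Each column is a contiguous run of the stream, and the last two values (24 and 25) are dropped because 26 is not divisible by 4.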

Define the model architecture

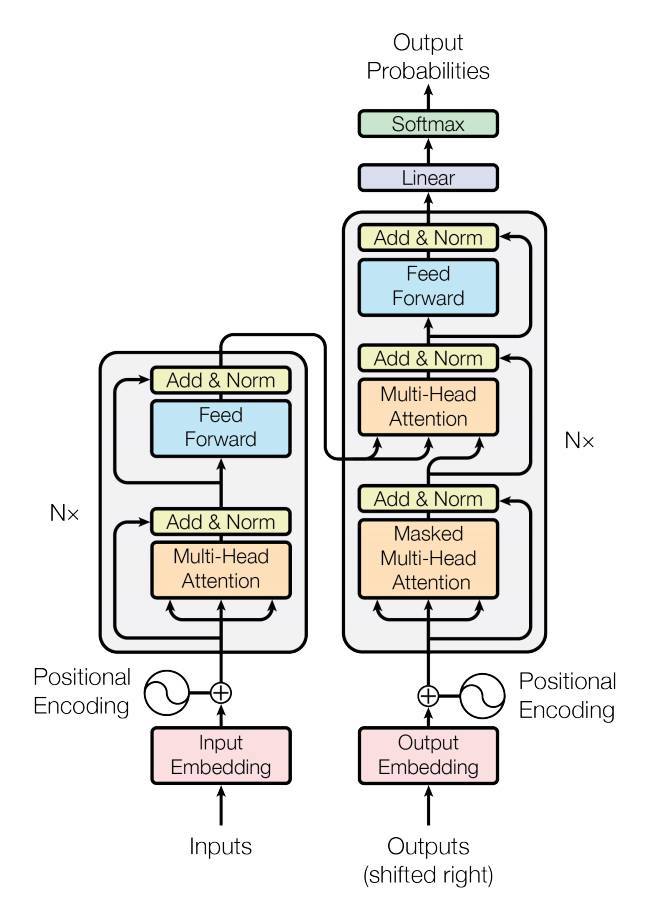

This code sample provides the TransformerModel class and the PositionalEncoding class, two fundamental building blocks of a Transformer architecture for NLP applications.

# Define the TransformerModel class
class TransformerModel(nn.Module):
    def __init__(self, ntoken, d_model, nhead, d_hid, nlayers, dropout=0.5):
        super(TransformerModel, self).__init__()
        self.model_type = 'Transformer'
        self.src_mask = None
        self.pos_encoder = PositionalEncoding(d_model, dropout)
        encoder_layers = nn.TransformerEncoderLayer(d_model, nhead, d_hid, dropout)
        self.transformer_encoder = nn.TransformerEncoder(encoder_layers, nlayers)
        self.encoder = nn.Embedding(ntoken, d_model)
        self.d_model = d_model
        self.decoder = nn.Linear(d_model, ntoken)

    # Generate a mask for the input sequence
    def _generate_square_subsequent_mask(self, sz):
        mask = (torch.triu(torch.ones(sz, sz)) == 1).transpose(0, 1)
        # Change all the zeros to negative infinity and all the ones to zeros
        mask = mask.float().masked_fill(mask == 0, float('-inf')).masked_fill(mask == 1, float(0.0))
        return mask

    # Define the forward pass
    def forward(self, src):
        if self.src_mask is None or self.src_mask.size(0) != len(src):
            device = src.device
            mask = self._generate_square_subsequent_mask(len(src)).to(device)
            self.src_mask = mask
        src = self.encoder(src) * math.sqrt(self.d_model)
        src = self.pos_encoder(src)
        output = self.transformer_encoder(src, self.src_mask)
        output = self.decoder(output)
        return output

# Define the PositionalEncoding class
class PositionalEncoding(nn.Module):
    def __init__(self, d_model, dropout=0.1, max_len=5000):
        super(PositionalEncoding, self).__init__()
        self.dropout = nn.Dropout(p=dropout)
        pe = torch.zeros(max_len, d_model)
        position = torch.arange(0, max_len, dtype=torch.float).unsqueeze(1)
        div_term = torch.exp(torch.arange(0, d_model, 2).float() * (-math.log(10000.0) / d_model))
        pe[:, 0::2] = torch.sin(position * div_term)
        pe[:, 1::2] = torch.cos(position * div_term)
        pe = pe.unsqueeze(0).transpose(0, 1)
        self.register_buffer('pe', pe)

    def forward(self, x):
        x = x + self.pe[:x.size(0), :]
        return self.dropout(x)

- The Transformer architecture for NLP tasks is implemented in the TransformerModel class, which is a PyTorch nn.Module. These are the parameters that can be sent to the constructor:

- ntoken: The vocabulary size.

- d_model: The size of the model's embeddings.

- nhead: The number of attention heads in each multi-head attention layer. d_model must be divisible by nhead.

- d_hid: The size of the feed-forward network's hidden layer.

- nlayers: The number of transformer layers.

- dropout: The dropout rate.

The class contains the following components:

- pos_encoder: PositionalEncoding module that encodes positional information onto input embeddings.

- encoder_layers: An nn.TransformerEncoderLayer module that defines a single encoder layer (self-attention followed by a feed-forward network).

- transformer_encoder: An nn.TransformerEncoder module that stacks nlayers copies of the encoder layer.

- encoder: An nn.Embedding module that maps token indices to embeddings.

- decoder: An nn.Linear module that projects the output of the transformer encoder to vocabulary-sized logits.

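- The TransformerModel class's _generate_square_subsequent_mask method creates a causal mask for the input sequence. Under this mask, position i can attend only to positions up to and including i, which prevents the model from paying attention to future tokens in the sequence. The mask is applied in every forward pass, during both training and evaluation.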

- In order to generate the output, the forward method of the TransformerModel class processes the input sequence src by first passing it through the encoder, the positional encoder, then the transformer encoder, and finally the decoder.

- Positional information can be added to the input embeddings with the help of the PositionalEncoding class, which is a PyTorch nn.Module. These are the arguments that can be sent to the constructor:

- d_model: It represents the size of the embeddings in the model.

- dropout: The dropout rate

- max_len: maximum length of input sequence

The following elements make up the class:

- dropout: An nn.Dropout component for regularizing input embeddings using the dropout regularization.

- pe: The positional encodings for each point in the input sequence are stored in a tensor called pe.

By supplementing the input embeddings with positional information, the PositionalEncoding module improves the model's understanding of word order inside sentences. This is significant since the Transformer design, which processes input tokens in parallel, has no innate concept of word order. This module uses variable-frequency sine and cosine functions to construct a fixed-size encoding for each position in the input sequence. This encoding is a vector that has d dimensions, where d is the embedding dimension of the model.

In the process of generating the positional encoding, the div_term variable defines the frequencies of the sine and cosine functions. It holds the values 10000^(-k/d_model) for k = 0, 2, 4, ..., computed through an exponential and a logarithm, so the scaling depends on the embedding dimension d_model.

Because the values in div_term decrease exponentially, the sine and cosine functions have a different frequency in each pair of embedding dimensions. Together they give every position a unique, easily recognizable encoding. This enables the model to account for where tokens occur in the sequence and how far apart they are.
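
The sine and cosine construction can be checked by hand for a very small embedding size. The following is a plain-Python sketch of the same formula for a single position; the function name positional_encoding is hypothetical, and d_model is assumed to be even.

```python
import math


def positional_encoding(position, d_model):
    """Sinusoidal encoding for one position (d_model assumed even)."""
    encoding = [0.0] * d_model
    for k in range(0, d_model, 2):
        # Same quantity as div_term: 10000 ** (-k / d_model), via exp and log.
        div_term = math.exp(k * (-math.log(10000.0) / d_model))
        encoding[k] = math.sin(position * div_term)      # even dimensions
        encoding[k + 1] = math.cos(position * div_term)  # odd dimensions
    return encoding


pe0 = positional_encoding(0, d_model=4)
pe1 = positional_encoding(1, d_model=4)
print(pe0)  # [0.0, 1.0, 0.0, 1.0]
print(pe1)  # first pair varies quickly, second pair varies slowly
```

With d_model=4 the two frequencies are 1 and 0.01, so the first pair of dimensions changes noticeably from one position to the next while the second pair changes only slightly.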

- The positional encodings are added to the input embeddings by the forward function of the PositionalEncoding class. This essentially combines the meaning of the word with its position in the sentence.
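
Returning to the causal mask produced by _generate_square_subsequent_mask, the sketch below builds an equivalent mask for a sequence of length 3 and shows its effect on attention weights. It uses torch.tril instead of the transposed torch.triu in the tutorial's code, and the function name causal_mask is an assumption made for this example.

```python
import torch


def causal_mask(sz):
    # Lower-triangular True pattern: row i may attend to columns 0..i.
    allowed = torch.tril(torch.ones(sz, sz)).bool()
    # Additive attention mask: 0.0 where allowed, -inf where blocked.
    return torch.zeros(sz, sz).masked_fill(~allowed, float("-inf"))


mask = causal_mask(3)
print(mask)

# Adding the mask to (here all-zero) attention scores before the softmax
# gives blocked positions a weight of exactly zero.
weights = torch.softmax(torch.zeros(3, 3) + mask, dim=-1)
print(weights)
```

Row 0 of weights puts all of its attention on position 0, row 1 splits it evenly between positions 0 and 1, and row 2 spreads it over all three positions.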

Instantiate the model, loss function, and optimizer

The following code instantiates the Transformer-based language model with the chosen dimensions and hyperparameters, and creates the loss function and optimizer.

ntokens = len(vocab) # size of vocabulary
emsize = 200 # embedding dimension
nhid = 200 # dimension of the feedforward network model in ``nn.TransformerEncoder``
nlayers = 2 # number of ``nn.TransformerEncoderLayer`` in ``nn.TransformerEncoder``
nhead = 2 # number of heads in ``nn.MultiheadAttention``
dropout = 0.2 # dropout probability

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = TransformerModel(ntokens, emsize, nhead, nhid, nlayers, dropout).to(device)
criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=0.001)

Define a function to get data batches

The get_batch function generates a mini-batch of inputs and targets for training and evaluating the language model. It takes the source data, a starting index i, and an optional bptt (backpropagation through time) parameter that caps the sequence length of each mini-batch.

def get_batch(source, i, bptt=35):
    seq_len = min(bptt, len(source) - 1 - i)
    data = source[i:i+seq_len]
    target = source[i+1:i+1+seq_len].view(-1)
    return data, target

- seq_len: The length of the current sequence, taken as the minimum of bptt and the number of rows remaining after index i (one row is held back so that every input has a target).

- data: Extract rows from the source data starting at index i and ending at index i + seq_len.

- target: Slice the source data from index i+1 to index i+1+seq_len and reshape it into a one-dimensional tensor. This is the target data (in this case, the next word in the sequence) for the mini-batch that is being processed.
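
The one-step shift between inputs and targets is easiest to see on a small example. The sketch below repeats the get_batch logic and runs it on a made-up source tensor with 6 time steps and a batch size of 2.

```python
import torch


def get_batch(source, i, bptt=35):
    seq_len = min(bptt, len(source) - 1 - i)
    data = source[i:i + seq_len]
    target = source[i + 1:i + 1 + seq_len].view(-1)
    return data, target


# Invented batchified data: 6 time steps (rows), batch size 2 (columns).
source = torch.tensor([[0, 10],
                       [1, 11],
                       [2, 12],
                       [3, 13],
                       [4, 14],
                       [5, 15]])

data_a, target_a = get_batch(source, i=0, bptt=3)
print(data_a.tolist())    # [[0, 10], [1, 11], [2, 12]]
print(target_a.tolist())  # [1, 11, 2, 12, 3, 13]

# Near the end of the data the sequence is shorter than bptt.
data_b, target_b = get_batch(source, i=3, bptt=3)
print(data_b.shape)       # torch.Size([2, 2])
print(target_b.tolist())  # [4, 14, 5, 15]
```

Each target is the token that follows the corresponding input in the same column, flattened row by row so that it lines up with the flattened model output.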

Define the training function

In the code sample, the train function is used to train the language model inside the PyTorch environment. The operation can be summarized as follows:

def train(model, train_data, criterion, optimizer, batch_size, bptt=35):
    model.train() # Set the model to training mode
    total_loss = 0. # Initialize the total loss to 0
    ntokens = len(vocab) # Get the number of tokens in the vocabulary

    # Iterate through the mini-batches of data
    for batch, i in enumerate(range(0, train_data.size(0) - 1, bptt)):
        data, targets = get_batch(train_data, i, bptt) # Get the input data and targets for the current mini-batch
        data, targets = data.to(device), targets.to(device) # Move the mini-batch to the same device as the model
        optimizer.zero_grad() # Reset the gradients to zero before the next backward pass
        output = model(data) # Forward pass: compute the output of the model given the input data
        loss = criterion(output.view(-1, ntokens), targets) # Calculate the loss between the model output and the targets
        loss.backward() # Backward pass: compute the gradients of the loss with respect to the model parameters
        optimizer.step() # Update the model parameters using the computed gradients
        total_loss += loss.item() # Accumulate the total loss

    return total_loss / (batch + 1) # Return the average loss per mini-batch

The function iteratively processes the mini-batches of data, generates model output, computes loss, and uses an optimizer to update model parameters. At the completion of training, the average loss per minibatch is given back.
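
The call output.view(-1, ntokens) is what lets nn.CrossEntropyLoss compare the model output with the flattened targets. Here is a small sketch of the shapes involved, using made-up sizes and all-zero logits in place of a real model output.

```python
import math

import torch
import torch.nn as nn

seq_len, batch_size, ntokens = 3, 2, 5  # invented sizes for illustration

# Stand-in for the model output: all-zero logits mean a uniform prediction.
output = torch.zeros(seq_len, batch_size, ntokens)
# Stand-in targets, already flattened the way get_batch returns them.
targets = torch.tensor([0, 1, 2, 3, 4, 0])

criterion = nn.CrossEntropyLoss()
flat_output = output.view(-1, ntokens)  # shape [seq_len * batch_size, ntokens]
loss = criterion(flat_output, targets)

print(flat_output.shape)                # torch.Size([6, 5])
print(loss.item(), math.log(ntokens))   # both are about 1.609
```

A model that guesses uniformly over the vocabulary has a loss of log(ntokens), which is a useful baseline when reading the first training losses.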

Define the evaluation function

To evaluate how well a language model performs on a certain dataset without modifying the model's parameters, we can use the evaluate function. Specifically, this is how it works:

def evaluate(model, data_source, criterion, batch_size, bptt=35):
    model.eval() # Set the model to evaluation mode
    total_loss = 0. # Initialize the total loss to 0
    ntokens = len(vocab) # Get the number of tokens in the vocabulary

    # Use torch.no_grad() to disable gradient calculation during evaluation
    with torch.no_grad():
        # Iterate through the mini-batches of data
        for batch, i in enumerate(range(0, data_source.size(0) - 1, bptt)):
            data, targets = get_batch(data_source, i, bptt) # Get the input data and targets for the current mini-batch
            data, targets = data.to(device), targets.to(device) # Move the mini-batch to the same device as the model
            output = model(data) # Forward pass: compute the output of the model given the input data
            loss = criterion(output.view(-1, ntokens), targets) # Calculate the loss between the model output and the targets
            total_loss += loss.item() # Accumulate the total loss

    return total_loss / (batch + 1) # Return the average loss per mini-batch (divide by the batch count, not the data index i)

Train the model

The following code is the training loop. It trains the model for a fixed number of epochs and, after each epoch, saves the model's state whenever the validation loss improves.

epochs = 10 # Set the number of epochs for training
best_val_loss = float("inf") # Initialize the best validation loss to infinity

# Iterate through the epochs
for epoch in range(1, epochs + 1):
    # Train the model on the training data and calculate the training loss
    train_loss = train(model, train_data, criterion, optimizer, batch_size)

    # Evaluate the model on the validation data and calculate the validation loss
    val_loss = evaluate(model, val_data, criterion, eval_batch_size)

    # Print the training and validation losses for the current epoch
    print(f"Epoch: {epoch}, Train loss: {train_loss:.2f}, Validation loss: {val_loss:.2f}")

    # If the validation loss has improved, save the model's state
    if val_loss < best_val_loss:
        best_val_loss = val_loss
        torch.save(model.state_dict(), "transformer_wikitext2.pth")
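
Saving model.state_dict() stores only the parameters, so restoring them requires a model with the same architecture. The round trip can be sketched with a tiny stand-in model; the nn.Linear layer and the file name checkpoint.pth are placeholders chosen for this example, not part of the tutorial.

```python
import os
import tempfile

import torch
import torch.nn as nn

# Tiny stand-in model; the tutorial would use TransformerModel instead.
model = nn.Linear(4, 2)

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "checkpoint.pth")  # hypothetical file name
    torch.save(model.state_dict(), path)            # save the parameters only

    # A fresh model starts from its own random weights...
    restored = nn.Linear(4, 2)
    # ...until the saved state is loaded into it.
    restored.load_state_dict(torch.load(path))

same = all(
    torch.equal(p, q)
    for p, q in zip(model.state_dict().values(), restored.state_dict().values())
)
print(same)  # True
```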

Evaluate the best model on the test dataset

To measure how the best model performs on the test dataset, load the model state that was saved during training and apply the evaluate function to the test data:

# Load the best model's state
best_m = TransformerModel(ntokens, emsize, nhead, nhid, nlayers, dropout).to(device)
best_m.load_state_dict(torch.load("transformer_wikitext2.pth"))

# Evaluate the best model on the test dataset
test_loss = evaluate(best_m, test_data, criterion, eval_batch_size)
print(f"Test loss: {test_loss:.2f}")
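
Language models are often reported in terms of perplexity rather than raw loss. Since nn.CrossEntropyLoss returns an average in natural-log units, perplexity is simply the exponential of the loss. The sketch below assumes the loss is a per-token average and uses hypothetical loss values, not results from this tutorial.

```python
import math


def perplexity(cross_entropy_loss):
    """Convert an average per-token cross-entropy (in nats) to perplexity."""
    return math.exp(cross_entropy_loss)


# Hypothetical loss values, chosen only to illustrate the conversion.
for loss in (math.log(10), 5.5):
    print(f"loss {loss:.2f} -> perplexity {perplexity(loss):.1f}")
```

A perplexity of 10 means the model is, on average, as uncertain as if it were choosing uniformly among 10 words. Because evaluate averages per-batch losses and the last batch can be shorter, the result is a close approximation of the per-token average rather than an exact one.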

Conclusion

The Transformer design has reshaped NLP and has become an indispensable resource for a wide range of machine learning projects, including language modeling, machine translation, and summarization. PyTorch's nn.TransformerEncoder and nn.TransformerEncoderLayer modules offer a straightforward implementation of the architecture, simplifying the process of creating and training custom Transformer-based language models. In this article, we went over the following steps of developing a Transformer-based language model with PyTorch:

- Bringing in the required library components and loading the dataset.

- Preprocessing the dataset, which includes tokenization, constructing the vocabulary, and arranging the data into batches.

- Defining the model architecture, including the TransformerModel and PositionalEncoding classes among other components.

- Creating an instance of the model, the loss function, and the optimizer.

- Training the model through the use of a specialized training loop and analyzing its performance based on validation data.

- Saving the state of the best model and evaluating it on the test dataset.

The provided code samples and explanations will guide you through the process of creating your own Transformer-based language model in PyTorch, which you can then tweak for use in a wide range of natural language processing applications.

Reference