This article will discuss Depth Anything V2, a practical solution for robust monocular depth estimation. Depth Anything model aims to create a simple yet powerful foundation model that works well with any image under any conditions. The dataset was significantly expanded using a data engine to collect and automatically annotate around 62 million unlabeled images to achieve this. This large-scale data helps reduce generalization errors.

This powerful model uses two key strategies to make the data scaling effective. First, a more challenging optimization target is set using data augmentation tools, which pushes the model to learn more robust representations. Second, auxiliary supervision is added to help the model inherit rich semantic knowledge from pre-trained encoders. The model's zero-shot capabilities were extensively tested on six public datasets and random photos, showing impressive generalization ability.

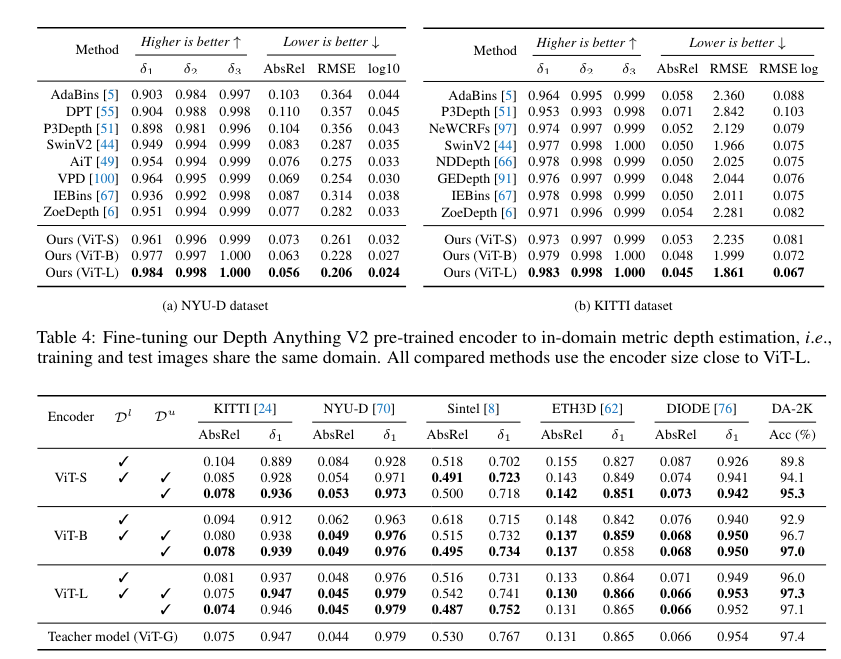

Fine-tuning with metric depth information from NYUv2 and KITTI has also set new state-of-the-art benchmarks. This improved depth model also enhances depth-conditioned ControlNet significantly.

Related Works on Monocular Depth Estimation (MDE)

Recent advancements in monocular depth estimation have shifted towards zero-shot relative depth estimation and improved modeling techniques like Stable Diffusion for denoising depth. Works such as MiDaS and Metric3D have collected millions of labeled images, addressing the challenge of dataset scaling. Depth Anything V1 enhanced robustness by leveraging 62 million unlabeled images and highlighted the limitations of labeled real data, advocating for synthetic data to improve depth precision. This approach integrates large-scale pseudo-labeled real images and scales up teacher models to tackle generalization issues from synthetic data. In semi-supervised learning, the focus has moved to real-world applications, aiming to enhance performance by incorporating large amounts of unlabeled data. Knowledge distillation in this context emphasizes transferring knowledge through prediction-level distillation using unlabeled real images, showcasing the importance of large-scale unlabeled data and larger teacher models for effective knowledge transfer across different model scales.

Strengths of the Model

The research aims to construct a versatile evaluation benchmark for relative monocular depth estimation that can:-

1) Provide precise depth relationship

2) Cover extensive scenes

3) Contains mostly high-resolution images for modern usage.

The research paper also aims to build a foundation model for MDE that has the following strengths:

- Deliver robust predictions for complex scenes, including intricate layouts, transparent objects like glass, and reflective surfaces such as mirrors and screens.

- Capture fine details in the predicted depth maps, comparable to the precision of Marigold, including thin objects like chair legs and small holes.

- Offer a range of model scales and efficient inference capabilities to support various applications.

- Be highly adaptable and suitable for transfer learning, allowing for fine-tuning downstream tasks. For instance, Depth Anything V1 has been the pre-trained model of choice for all leading teams in the 3rd MDEC1.

What is Monocular Depth Estimation (MDE)?

Monocular depth estimation is a way to determine how far away things are in a picture taken with just one camera.

Imagine looking at a photo and being able to tell which objects are close to you and which ones are far away. Monocular depth estimation uses computer algorithms to do this automatically. It looks at visual clues in the picture, like the size and overlap of objects, to estimate their distances.

This technology is useful in many areas, such as self-driving cars, virtual reality, and robots, where it's important to understand the depth of objects in the environment to navigate and interact safely.

The two main categories are:

- Absolute depth estimation: This task variant, or metric depth estimation, aims to provide exact depth measurements from the camera in meters or feet. Absolute depth estimation models produce depth maps with numerical values representing real-world distances.

- Relative depth estimation: Relative depth estimation predicts the relative order of objects or points in a scene without providing exact measurements. These models produce depth maps that show which parts of the scene are closer or farther from each other without specifying the distances in meters or feet.

Model Framework

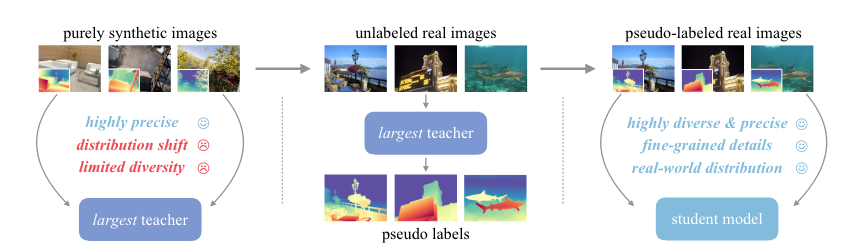

The model pipeline to train the Depth Anything V2, includes three major steps:

- Training a teacher model that is based on DINOv2-G encoder on high-quality synthetic images.

- Generating accurate pseudo-depth on large-scale unlabeled real images.

- Training a final student model on the pseudo-labeled real images for robust generalization.

Here’s a simpler explanation of the training process for Depth Anything V2:

- Model Architecture: Depth Anything V2 uses the Dense Prediction Transformer (DPT) as the depth decoder, which is built on top of DINOv2 encoders.

- Image Processing: All images are resized so their shortest side is 518 pixels, and then a random 518×518 crop is taken. In order to standardize the input size for training.

- Training the Teacher Model: The teacher model is first trained on synthetic images. In this stage:

- Batch Size: A batch size of 64 is used.

- Iterations: The model is trained for 160,000 iterations.

- Optimizer: The Adam optimizer is used.

- Learning Rates: The learning rate for the encoder is set to 5e-6, and for the decoder, it's 5e-5.

- Training on Pseudo-Labeled Real Images: In the third stage, the model is trained on pseudo-labeled real images generated by the teacher model. In this stage:

- Batch Size: A larger batch size of 192 is used.

- Iterations: The model is trained for 480,000 iterations.

- Optimizer: The same Adam optimizer is used.

- Learning Rates: The learning rates remain the same as in the previous stage.

- Dataset Handling: During both training stages, the datasets are not balanced but are simply concatenated, meaning they are combined without any adjustments to their proportions.

- Loss Function Weights: The weight ratio of the loss functions Lssi (self-supervised loss) and Lgm (ground truth matching loss) is set to 1:2. This means Lgm is given twice the importance compared to Lssi during training.

This approach helps ensure that the model is robust and performs well across different types of images.

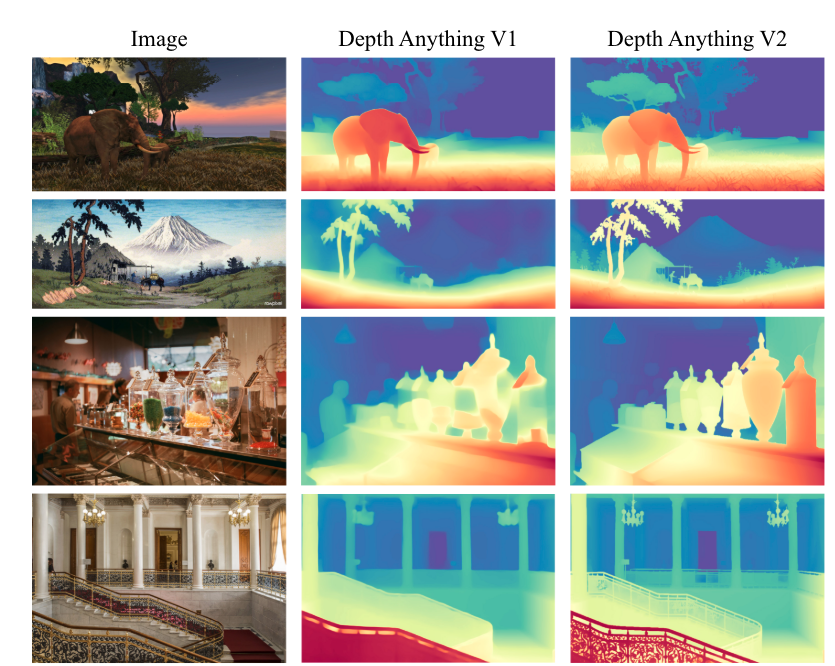

To verify the model performance the Depth Anything V2 model has been compared to Depth Anything V1 and MiDaS V3.1 using 5 test dataset. The model comes out superior to MiDaS. However, slightly inferior to V1.

Paperspace Demonstration

Depth Anything offers a practical solution for monocular depth estimation; the model has been trained on 1.5M labeled and over 62M unlabeled images.

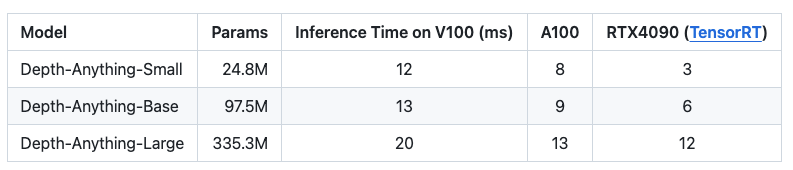

The list below contains model details for depth estimation and their respective inference times.

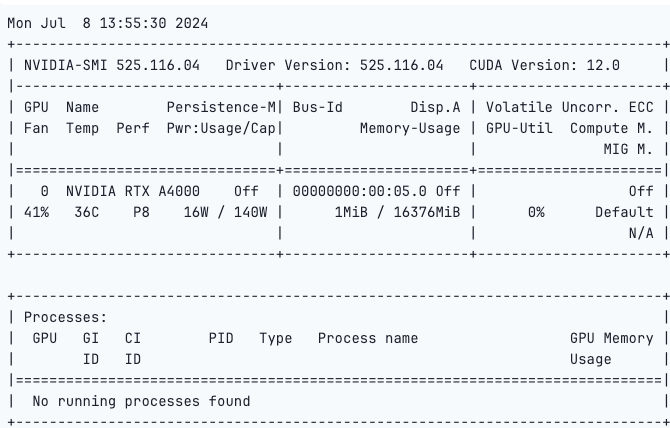

For this demonstration, we will recommend the use of an NVIDIA RTX A4000. The NVIDIA RTX A4000 is a high-performance professional graphics card designed for creators and developers. The NVIDIA Ampere architecture features 16GB of GDDR6 memory, 6144 CUDA cores, 192 third-generation tensor Cores, and 48 RT cores. The RTX A4000 delivers exceptional performance in demanding workflows, including 3D rendering, AI, and data visualization, making it an ideal choice for architecture, media, and scientific research professionals.

Paperspace also offers powerful H100 GPUs. To get the most out of Depth Anything and your Virtual Machine, we recommend recreating this on a Paperspace by DigitalOcean Core H100 Machine.

Bring this project to life

Let us run the code below to check the GPU

!nvidia-smi

Next, clone the repo and import the necessary libraries.

from PIL import Image

import requests!git clone https://github.com/LiheYoung/Depth-Anythingcd Depth-AnythingInstall the requirements.txt file.

!pip install -r requirements.txt!python run.py --encoder vitl --img-path /notebooks/Image/image.png --outdir depth_visArguments:

--img-path: 1) specify a directory containing all the desired images, 2) specify a single image, or 3) specify a text file that lists all the image paths.- Setting

--pred-onlysaves only the predicted depth map. Without this option, the default behavior is to visualize the image and depth map side by side. - Setting

--grayscalesaves the depth map in grayscale. Without this option, a color palette is applied to the depth map by default.

If you want to use Depth Anything on videos:

!python run_video.py --encoder vitl --video-path assets/examples_video --outdir video_depth_visRun the Gradio Demo:-

To run the gradio demo locally:-

!python app.pyNote: If you encounter KeyError: 'depth_anything', please install the latest transformers from source:

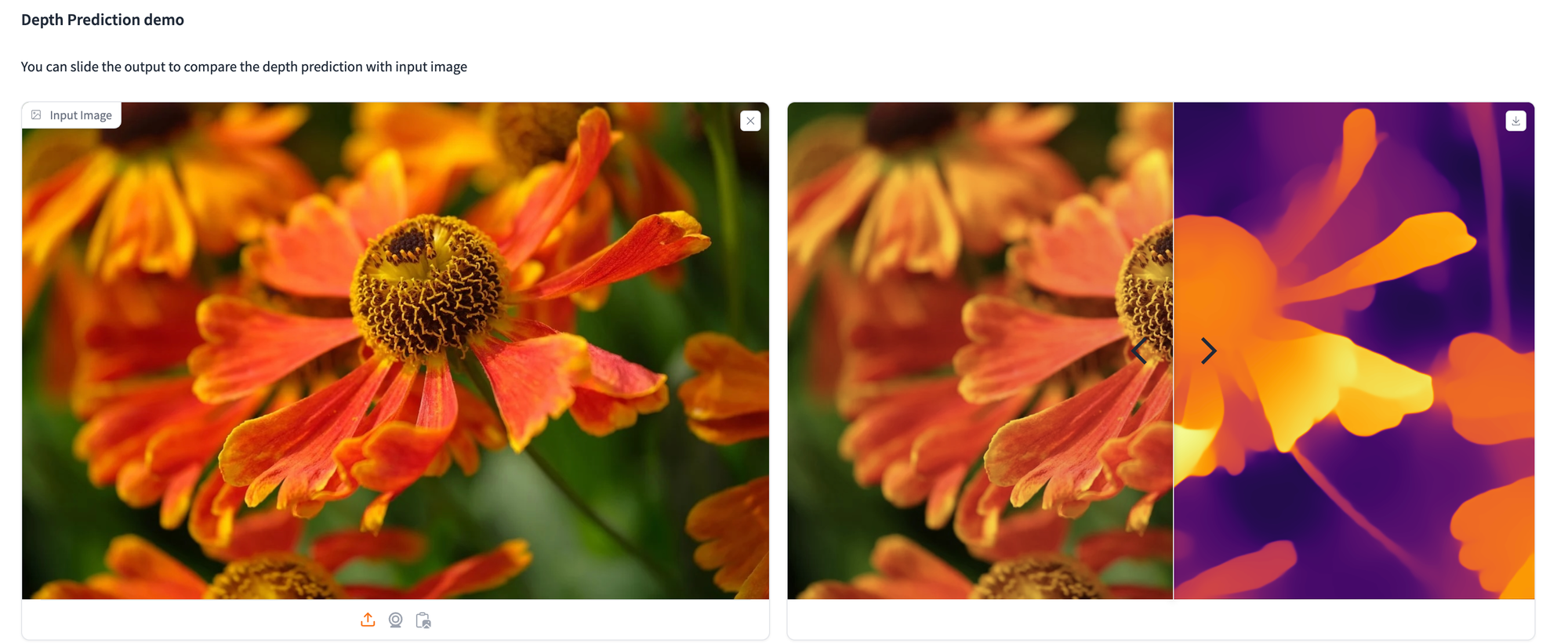

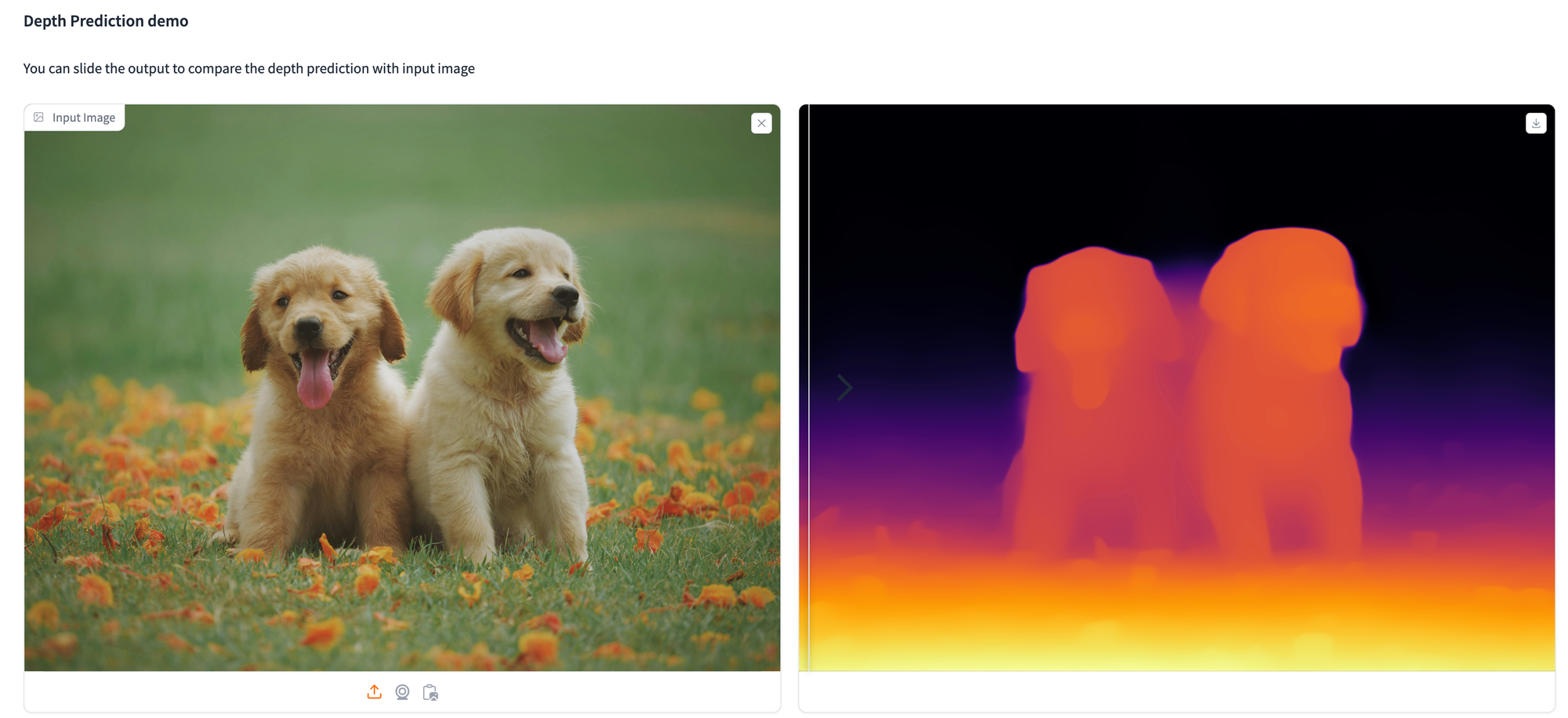

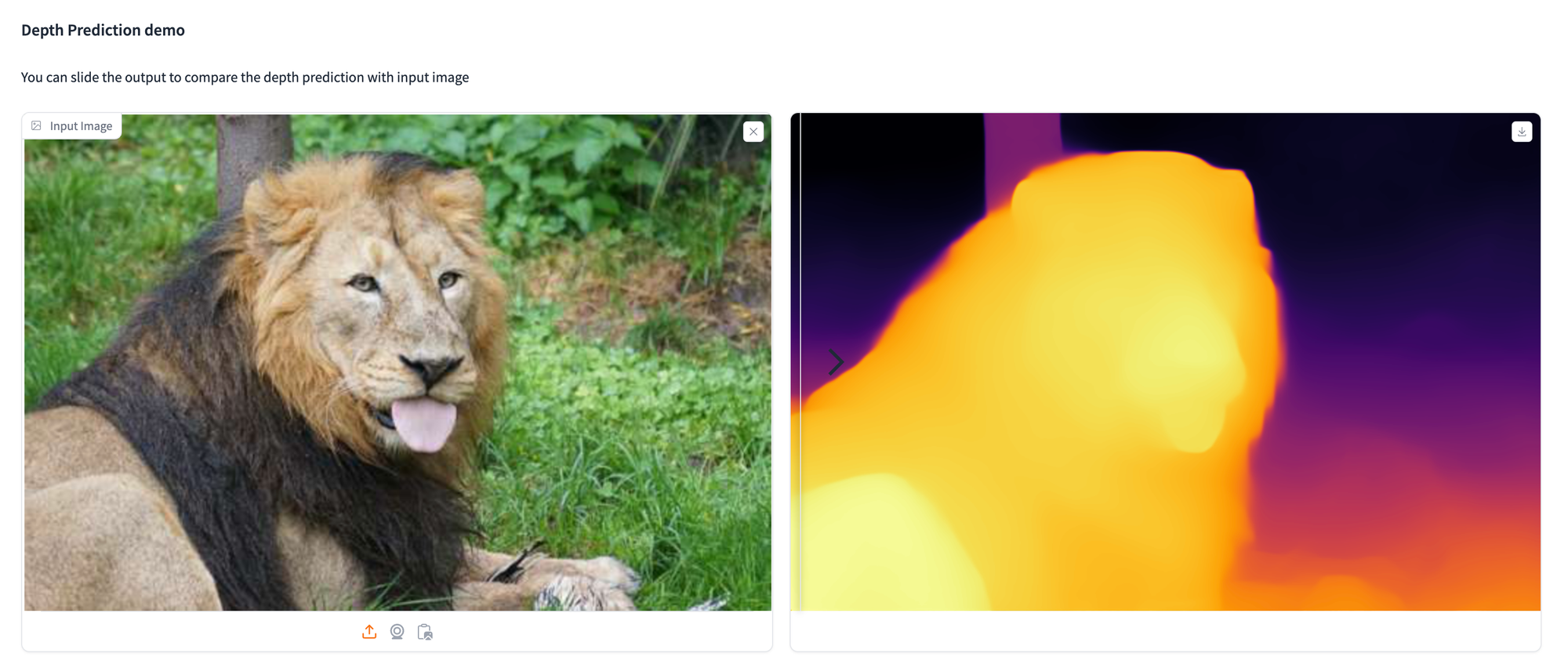

!pip install git+https://github.com/huggingface/transformers.gitHere are a few examples demonstrating how we utilized the depth estimation model to analyze various images.

Features of the Model

The models offer reliable relative depth estimation for any image, as indicated in the above images. For metric depth estimation, the Depth Anything model is finetuned using the metric depth data from NYUv2 or KITTI, enabling strong performance in both in-domain and zero-shot scenarios. Details can be found here.

Additionally, the depth-conditioned ControlNet is re-trained based on Depth Anything, offering a more precise synthesis than the previous MiDaS-based version. This new ControlNet can be used in ControlNet WebUI or ComfyUI's ControlNet. The Depth Anything encoder can also be fine-tuned for high-level perception tasks such as semantic segmentation, achieving 86.2 mIoU on Cityscapes and 59.4 mIoU on ADE20K. More information is available here.

Applications of Depth Anything Model

Monocular depth estimation has a range of applications, including 3D reconstruction, navigation, and autonomous driving. In addition to these traditional uses, modern applications are exploring AI-generated content such as images, videos, and 3D scenes. DepthAnything v2 aims to excel in key performance metrics, including capturing fine details, handling transparent objects, managing reflections, interpreting complex scenes, ensuring efficiency, and providing strong transferability across different domains.

Concluding Thoughts

Depth Anything V2 is introduced as a more advanced foundation model for monocular depth estimation. This model stands out due to its capabilities in providing powerful and fine-grained depth prediction, supporting various applications. The depth anything model sizes ranges from 25 million to 1.3 billion parameters, and serves as an excellent base for fine-tuning in downstream tasks.

Future Trends:

- Integration with Other AI Technologies: Combining MDE models with other AI technologies like GANs (Generative Adversarial Networks) and NLP (Natural Language Processing) for more advanced applications in AR/VR, robotics, and autonomous systems.

- Broader Application Spectrum: Expanding the use of monocular depth estimation in areas such as medical imaging, augmented reality, and advanced driver-assistance systems (ADAS).

- Real-Time Depth Estimation: Advancements towards achieving real-time depth estimation on edge devices, making it more accessible and practical for everyday applications.

- Cross-Domain Generalization: Developing models that can generalize better across different domains without requiring extensive retraining, enhancing their adaptability and robustness.

- User-Friendly Tools and Interfaces: Creating more user-friendly tools and interfaces that allow non-experts to leverage powerful MDE models for various applications.