Modern large language models (LLMs) are very powerful and becoming more so by the day. However, their power is generic: they are good at answering questions, following instructions, etc., on public content on the internet, but can rapidly fall away when dealing with specialized subjects outside of what they were trained on. They either do not know what to output, or worse, fabricate something incorrect that can appear legitimate.

For many businesses and applications, it is in precisely this realm of specialized information that an LLM can provide its greatest value-add, because it allows their users to get the detail information that they need from a product. An example is answering questions about a company's documentation that may not otherwise be public or part of a training set.

Retrieval-augmented generation (RAG) allows this value to be enabled on LLMs by supplementing their generic training data with extra or specialized information, including:

- Information about a particular topic in greater detail

- Data that was not otherwise public

- Information that is new or has changed since the base model was trained

RAG can be approached by doing things in a more low-code way, such as using a vendor product that abstracts away the LLM or other code being used, or in a more open but lower level way by directly using various emerging frameworks such as LangChain, LlamaIndex, and DSPy.

DigitalOcean's Sammy AI Assistant chatbot in our documentation exposes the former approach to the user. Here, we focus on the latter, showing how a few lines of code allow you to augment a generic LLM with your own webpages or documents.

Specifically, we instantiate:

- LangChain

- GPT-4o

- Webpages and PDFs

and we run them on a standard DigitalOcean+Paperspace Gradient Notebook. We show how a model with RAG gives significantly better answers on our new data than the same model without it. The chain formulation of LangChain makes this comparison of RAG versus base easy.

The resulting models could then be deployed to production on an endpoint in the usual way. Here our focus is on the improvement of RAG versus a base model, and the ease of getting your own data into the process.

Finally, it's worth noting that other approaches to improving model outputs besides RAG are available, such as model finetuning. We have other blogposts featuring this, such as here. Finetuning is more computationally intensive than RAG.

A couple of other recent RAG posts on the Paperspace blog are here and here.

Why this approach?

What makes the particular approach in this blogpost attractive is that it is very general. By starting a DigitalOcean+Paperspace (DO+PS) Gradient Notebook, you can:

- Use any LLM model accessible by an API from LangChain (there are a lot, free or paid)

- Use any one of a large number of supported vector databases for the model embeddings

- Augment with any webpage or PDF that it can read, either public or your own (billions)

- Put the result into production and extend the work in many ways: web application, other LLM models such as code, and so on

Because DO+PS is generic, the same idea would also work with LlamaIndex, DSPy, or other emerging frameworks.

Components

LangChain

LangChain is one of several emerging frameworks that enable working with LLMs more easily and putting them into production. While which framework will win out long-term is not yet established, this one works well for our purposes here of demonstrating working RAG on DO+PS.

GPT-4o

We want the best answers to our questions, and LangChain can call APIs, so we use the best model: OpenAI's GPT-4o.

This is a paid model, but other OpenAI models, paid, free or open source models from elsewhere, can be substituted.

For example, we looked at the answers from the older GPT-3.5 Turbo as well. These are often almost as good as GPT-4o for the questions here, although GPT-4o is better at spotting context such as when it is being asked a question that is not answered within our documents, but the prompt asked it to use the documents.

Chroma Vector Database

RAG works by finding the shortest "distance" between your query and your added documents. This means that the data needs to be stored in a form for which distance between arbitrary text can be measured. This form is embeddings, and works well in a vector database.

LangChain has support for many vector databases, and the example here instantiates one that uses Chroma.

DigitalOcean has existing support for integrations with databases, and so the use of a vector one is a natural extension within our infrastructure.

Setup

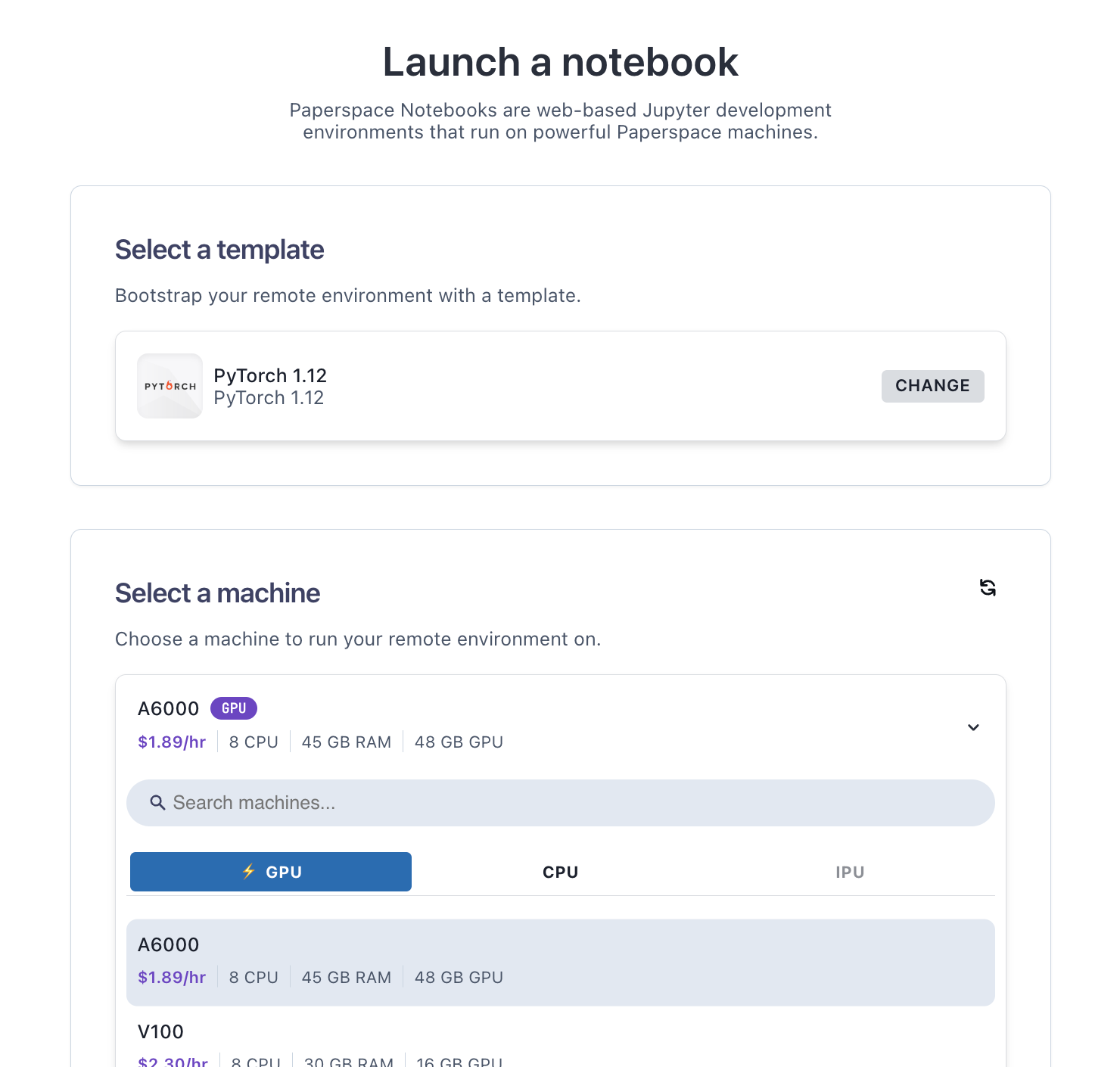

We use a standard Gradient Notebook setup, launching on a single A6000 GPU on the PyTorch runtime, but other similar GPUs will work.

We then add the software onto PS+DO's basic set that this use case needs, fixing the versions on a set that works (like a requirements.txt):

%pip install --upgrade \

langchain==0.2.8 \

langchain-community==0.2.7 \

langchainhub==0.1.20 \

langchain-openai==0.1.16 \

chromadb==0.5.3 \

bs4==0.0.2Some tweaks are needed to make it work correctly on the particular stack that results from our Gradient runtime plus these installs:

%pip install --upgrade transformers==4.42.4

%pip install pydantic==1.10.8(Python upgrade!)

!sudo apt update

!sudo apt install software-properties-common -y

!sudo add-apt-repository ppa:deadsnakes/ppa -y

!sudo apt install Python3.11 -y

!rm /usr/local/bin/python

!ln -s /usr/bin/python3.11 /usr/local/bin/python%pip install pysqlite3-binary==0.5.3

OpenAI API key

To access GPT-4o, we need an OpenAI API key. This can be obtained from your account after signing up and logging in, and setting the permissions to all-permissions and not read only.

Then set it as an environment variable using

import os

os.environ["OPENAI_API_KEY"] = "<Your API key>"or use, e.g., getpass to avoid a key being in the code.

We are now ready to see LangChain and RAG in action.

RAG results

The sequence of steps here is based on the LangChain question answering quickstart for webpages. We extend the example to PDFs.

Webpages

Webpages can be parsed using BeautifulSoup.

Import what we need:

import bs4

from langchain import hub

from langchain_community.document_loaders import WebBaseLoader

from langchain_community.vectorstores import Chroma

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnablePassthrough

from langchain_openai import ChatOpenAI, OpenAIEmbeddings

from langchain_text_splitters import RecursiveCharacterTextSplitterThen the rest is

loader = WebBaseLoader(

web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),

bs_kwargs=dict(

parse_only=bs4.SoupStrainer(

class_=("post-content", "post-title", "post-header")

)

),

)

docs = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)

splits = text_splitter.split_documents(docs)

vectorstore = Chroma.from_documents(documents=splits, embedding=OpenAIEmbeddings())

retriever = vectorstore.as_retriever()

prompt = hub.pull("rlm/rag-prompt")

llm = ChatOpenAI(model_name="gpt-4o", temperature=0)

def format_docs(docs):

return "\n\n".join(doc.page_content for doc in docs)

rag_chain = (

{"context": retriever | format_docs, "question": RunnablePassthrough()}

| prompt

| llm

| StrOutputParser()

)Here, the code starts by loading and parsing a webpage with loader = WebBaseLoader(...). This works for any page that can be parsed so you can substitute your own. We are using the same blogpost as the LangChain quickstart: https://lilianweng.github.io/posts/2023-06-23-agent/ .

text_splitter and splits split the text into tokens for the LLM. They are done in chunks to better fit in a model context window, but with overlap so that text context is not lost.

vectorstore puts the result into a Chroma vector database as embeddings.

retriever is the function to retrieve from the database.

prompt provides text that goes at the start of the prompt to the model to control its behavior, for example, the text of the prompt we use here is:

You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.llm is the model we want to use, here gpt-4o.

Then finally rag_chain is constructed that represents the RAG process we want, with a function format_docs to pass them to the chain correctly.

The chain is run on the webpage using, e.g.,

rag_chain.invoke("What is Task Decomposition?")and here we can ask it any question we want.

An example answer is:

rag_chain.invoke("What is self-reflection?")Self-reflection is a process that allows autonomous agents to iteratively improve by refining past action decisions and correcting previous mistakes. It involves analyzing failed trajectories and using ideal reflections to guide future changes in the plan, which are then stored in the agent’s working memory for future reference. This mechanism is crucial for enhancing reasoning skills and performance in real-world tasks where trial and error are inevitable.

We see that the answer is correct, and that it is summarizing the content from the paper, not just repeating back blocks of text.

We can also view the data that we have loaded from the webpage as a sanity check to check that the model is using the text that we think it is, e.g.,

len(docs[0].page_content)

43131print(docs[0].page_content[:500])

LLM Powered Autonomous Agents

Date: June 23, 2023 | Estimated Reading Time: 31 min | Author: Lilian Weng

Building agents with LLM (large language model) as its core controller is a cool concept. Several proof-of-concepts demos, such as AutoGPT, GPT-Engineer and BabyAGI, serve as inspiring examples. The potentiality of LLM extends beyond generating well-written copies, stories, essays and programs; it can be framed as a powerful general problem solver.

Agent System Overview#

Inwhich we see is the same text that the webpage starts with.

For more information, the LangChain RAG Q&A quickstart has further commentary and links to the parts of its documentation dealing with each section in full.

Two extensions to the above are to load multiple webpages into the augmentation at the same time, and compare the RAG answers to those from a base model. We show both of these below for PDFs.

PDFs

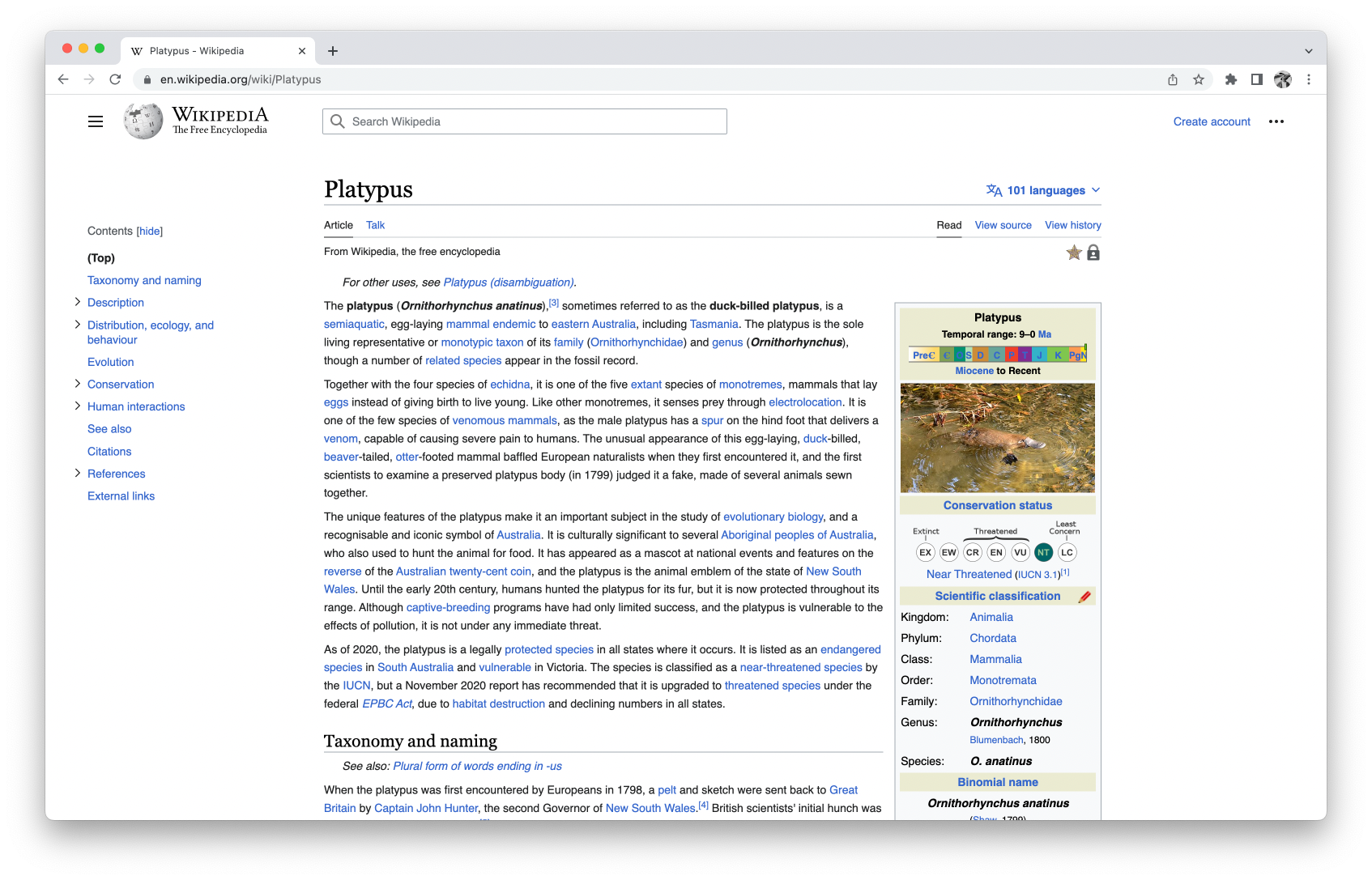

As with webpages, any set of PDFs that the LangChain parser can read can be used to RAG-augment the LLM. Here, we use some of the author's set of refereed astronomy publications from the arXiv preprint server. This is partly for interest, but also for the convenience that the correct answers are both quite obscure but largely known. This means they are unlikely to be answered well by a non-RAG model, and we see clearly how the process improves results on a specialized topic. Questions outside the scope of the publications can also be easily asked as a control.

To work with PDFs, the process is similar to above. For a single document, we can use PyPDFLoader and point to its location as a URL or local file.

We want to use multiple PDFs, so we use PyPDFDirectoryLoader and put the PDFs in a local directory /notebooks/PDFs first.

%pip install pypdf==4.3.0The code is similar to the webpage example:

from langchain_community.document_loaders import PyPDFDirectoryLoader

loader = PyPDFDirectoryLoader("/notebooks/PDFs/")

docs = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=150)

splits = text_splitter.split_documents(docs)

vectorstore = Chroma.from_documents(documents=splits, embedding=OpenAIEmbeddings(disallowed_special=()))

retriever = vectorstore.as_retriever()

prompt = hub.pull("rlm/rag-prompt")

llm = ChatOpenAI(model_name="gpt-4o", temperature=0)

def format_docs(docs):

return "\n\n".join(doc.page_content for doc in docs)

rag_chain = (

{"context": retriever | format_docs, "question": RunnablePassthrough()}

| prompt

| llm

| StrOutputParser()

)and like the webpages we can sanity check the data with commands like

len(docs)

97which is the correct total number of pages, and

print(docs[0].page_content)

...

We review the current state of data mining and machine learni ng in astronomy. Data

Miningcan have a somewhat mixed connotation from the point of view o f a researcher in

this field. If used correctly, it can be a powerful approach, h olding the potential to fully

exploit the exponentially increasing amount of available d ata, promising great scientific

advance. However, ifmisused, it can be little more than the b lack-box application of com-

...which is the beginning text of the first paper, although the parsing to ASCII is not perfect.

For a more advanced system and a larger number of documents, they could be stored in a NoSQL database.

Comparison to base model

Let's compare our RAG answers on the PDFs to those of a base non-RAG model. The LangChain formulation makes this easy because we can substitute the RAG chain:

rag_chain = (

{"context": retriever | format_docs, "question": RunnablePassthrough()}

| prompt

| llm

| StrOutputParser()

)with one that simply points to the base model:

base_chain = base_prompt | llm | StrOutputParser()We need to alter the prompt when using the base model so that we don't tell it to use a now non-existent RAG context. Prompts are passed using a template, and the RAG one is

template = """Use the following pieces of context to answer the question at the end.

If you don't know the answer, just say that you don't know, don't try to make up an answer.

Use three sentences maximum and keep the answer as concise as possible.

{context}

Question: {question}

Helpful Answer:"""

prompt = PromptTemplate.from_template(template)For the base, we change this to

template = """You are an assistant for question-answering tasks.

Use your existing knowledge to answer the question.

If you don't know the answer, just say that you don't know.

Use three sentences maximum and keep the answer concise.

Question: {question}

Helpful Answer:"""

base_prompt = PromptTemplate.from_template(template)Note that the {context} argument is removed, since there now isn't one to pass from.

Now we can ask questions and compare base GPT-4o to RAG-augmented GPT-4o with our PDFs.

A question to the base model:

base_chain.invoke("How many galaxies and stars were the classifications accurate for?")I don't have the specific numbers for how many galaxies and stars were accurately classified. The accuracy of classifications can vary depending on the methods and technologies used, such as those in projects like the Sloan Digital Sky Survey or the Hubble Space Telescope. For precise figures, you would need to refer to the latest data from these specific astronomical surveys.

The same question to the RAG model:

rag_chain.invoke("How many galaxies and stars were the classifications accurate for?")The classifications were accurate for 900,000 galaxies and 6.7 million stars.

which is the correct answer for these PDFs.

Using this and other questions, we can clearly see that the RAG-augmented model gives better answers about the specific new information in the PDFs. Like with the webpages, the answers again do not just duplicate text from the paper, but shorten and summarize it appropriately.

When asked control questions outside the scope of the PDFs, the answers are good for the base model.

For the RAG model, where in our particular prompt we are asking it to use the PDFs and to say that it doesn't know if there is no good answer from them, it says it does not know:

base_chain.invoke("What are some good places to visit in the San Francisco Bay Area?")Some good places to visit in the San Francisco Bay Area include the Golden Gate Bridge, Alcatraz Island, and Fisherman's Wharf. Other popular spots are Golden Gate Park, the San Francisco Museum of Modern Art (SFMOMA), and the tech hub of Silicon Valley. For natural beauty, consider Muir Woods National Monument and the scenic drive along Highway 1.

rag_chain.invoke("What are some good places to visit in the San Francisco Bay Area?")I don't know.

So we have now a useful system of RAG-augmentation of LLMs with our own webpages and PDFs.

Clearly there is more that can be done than this, and our blogpost here does not show the solution to everything. For example, optimizing your prompts, preventing hallucinations, proving RAG always helps, and best possible parsing of inputs.

But we do see clearly that better answers are given here by RAG than without it, and not a lot of code is needed. Analogous improvement is likely on your own data, and can be realized on DO+PS.

Conclusions and next steps

We have shown that we can add our own documents to an LLM model, and have it give useful answers to questions about them, for example, explaining technical terms or summarizing longer text into shorter form.

Specifically, we instantiated:

- LangChain

- GPT-4o

- Webpages and PDFs

Other models, such as GPT-3.5 Turbo, or free and open source models, can easily be substituted. The method works for any webpages and PDFs that the LangChain parser can read.

You can adapt the code to your own data, preferred LLM, and use case.

While RAG improves your model's results over a non-RAG model, it does not completely solve the generic LLM problem of differentiation between truth and fiction. This is subject to ongoing research, and various approaches are available. A good approach at present is to encourage LLM use in contexts where the information given either does not have to be "true", such as generation of ideas and drafts, or where it is easily verifiable, such as answering questions about documentation that a user can follow up upon.

There are a number of improvements to the basic processes shown in this blogpost:

- More detailed webpage or PDF parsing

- Other document types such as code

- More sophisticated retrieval such as multi-query

- Alteration of tunable parameters such as the prompt, chunking, overlap, and model temperature

- Add LangChain's LangSmith to see traces of more complex chains

- Show the source documents used in generating the answer to the user

- Add a deployed endpoint, user interface, chat history, etc.

The LangChain documentation shows many examples of these improvements, and other frameworks besides LangChain such as LlamaIndex and DSPy are available that would work similarly on our generic DO+PS infrastructure.

Provided users are aware that LLMs' outputs may sometimes be incorrect, and especially when working in regimes where the LLM output information can be quickly and easily verified, RAG adds a lot of value.

You can run your own by going to Paperspace + DigitalOcean, and our documentation.