With increased public interest in artificial image generation models, researchers in computer vision have developed several deep learning implementations of these models i.e. Stable Diffusion, Dall.E, Imagen, etc. These models have been used for various purposes such as generating personal avatars, generating website templates, and contributing to graphic design artifacts. The majority of the models used for image generation today are diffusion models. In a nutshell, diffusion models are probabilistic models that take in a matrix of 100% Gaussian noise and iteratively remove the noise from this matrix to result in a reasonable image that matches the training data.

In this blog post, I will review some recent diffusion models that have been used not only for generic image generation but also for image editing purposes. I will give an overview of each model and summarize the blog post with the pros and cons of each model.

Bring this project to life

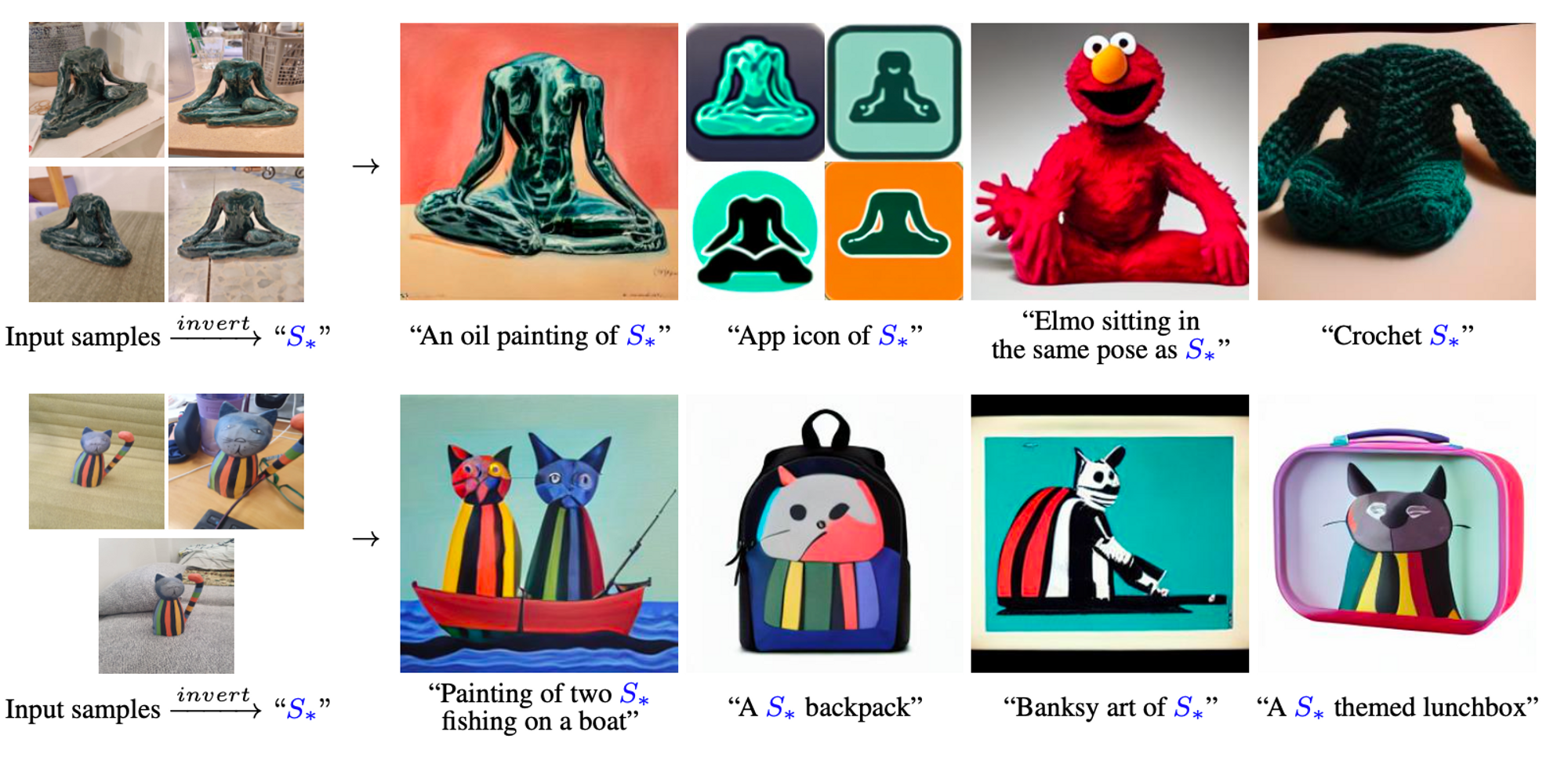

Textual Inversion

The authors of this project wanted to create an image generation model that could learn new subjects/objects, referred to in this model as ‘concepts’, to influence the image generation process. The main idea is that if the model can quickly pick up these new concepts without much effort (i.e. days worth of model training) then users can easily generate new images based on these concepts e.g An image of ‘concept’ riding a bike. The model can learn whatever ‘concept’ is and generate an image that semantically matches the prompt. How do they accomplish this task? Given a set of images and a text prompt (a.k.a string), they first convert the string into a set of tokens (numerical value). Thereafter, each token is replaced with its own embedding vector which is then fed into the pretrained model. The authors’ goal is to “find new embedding vectors that represent new, specific concepts.” (Gal, Rinon, et al.)

Applications of Textual Inversion

- Style Transfer → The model learns to represent the new concept in various visual artistic styles

- Dataset Debiasing → The model can be used to find new embeddings for biased concepts which allow researchers to curate fairer representation even from small datasets.

- Image Compositions → Once the model has learned various concepts, the model can combine various concepts in one image e.g. ‘A photo of concept A in the style of concept B’

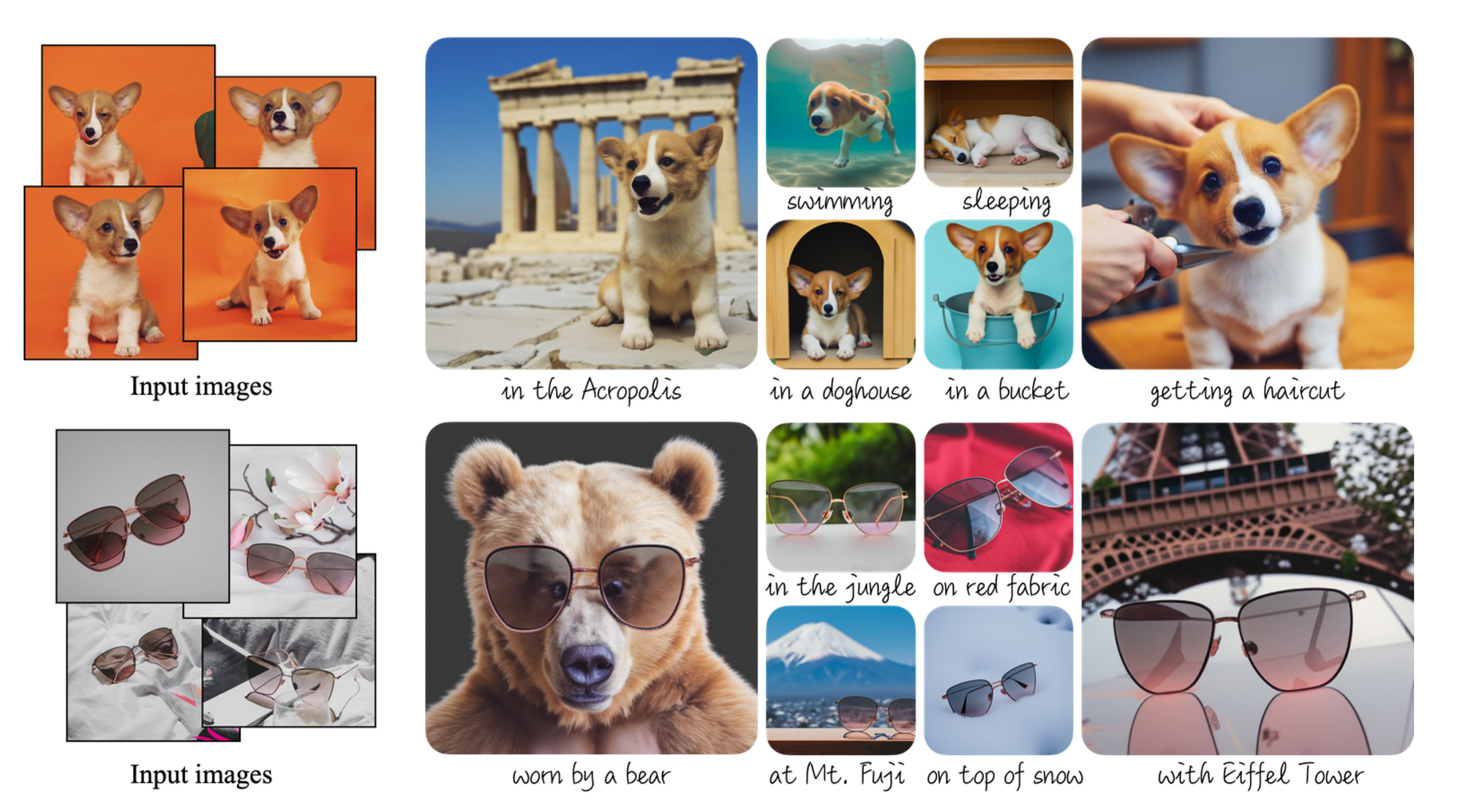

DreamBooth

Similar to Textual Inversion, DreamBooth aims to personalize text-to-image generation models. DreamBooth allows the user to provide a few samples of a subject image and an associated text prompt: “a [identifier] [class noun]”, which translates into a unique identifier. The goal is to use these few data points to fine-tune a large text-to-image model so that the output model can be used to generate images of the subject in different contexts. Like Textual Inversion, they use large-scale pretrained models however, in this project they work on ironing out 2 major problems in the transfer learning process: overfitting and language drift.

Overfitting → Because the model is fine-tuned on a few images, it is possible for the model to memorize the poses and contexts of the subject’s training images and fail to generalize. In order to curb this the authors propose regularization or selectively fine-tuning parts of the model.

Language Drift → Fine-tuning all layers leads to the high fidelity of the subject's appearance but it also edits the text conditioning layers of the model which causes language drift.

When a diffusion model is trained on a small set of images it slowly forgets how to generate subjects of the same class and progressively forgets the class-specific prior and cannot generate different instances of the class in question. In order to curb this problem, the authors introduced an autogenous class-specific prior-preserving loss to ensure that the model does not overfit on the few training samples and that the model does not forget the prior knowledge while generating new samples.

DreamBooth also incorporates super-resolution models in order to generate photorealistic content.

Applications of DreamBooth

- Custom Context Manipulation → changing the background and how the subject exists in the background. This is more than a background change because the background structure is modified to appropriately suit the subject

- Artistic Manipulation → It also includes style changes (artistic expressions). This is different from style transfer because it better maintains the semantics of the subject

- Accessorization → Adding Accessories i.e. hats, sunglasses, etc. to the subject

- Property Modification → This goes beyond color and texture but you can also combine different instances of the same class to get a new image (sorta). In the paper, they show that they are able to cross a ‘chow chow’ dog and a lion

Note: In their various experiments, the authors of this project show that DreamBooth outperforms Textual Inversion in terms of semantic correctness of the subject in instances of recontextualization

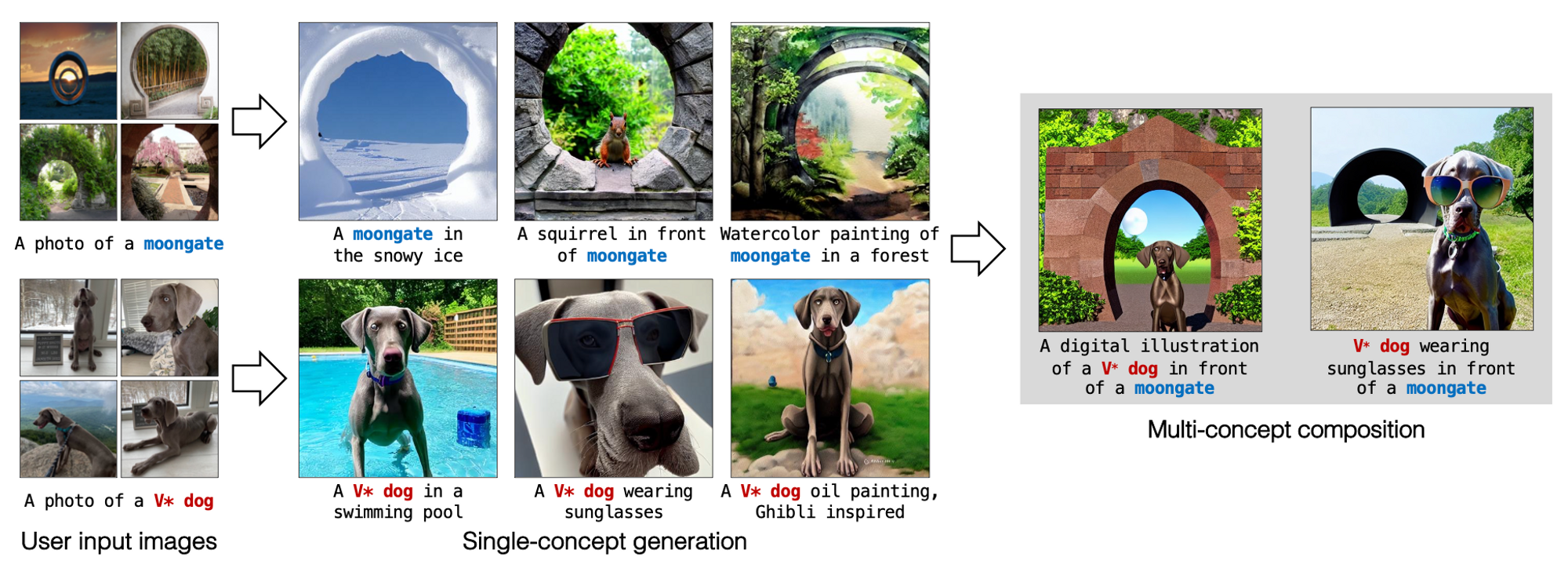

Multi-Concept Custom Stable Diffusion (a.k.a Custom Diffusion)

The goal of this project is to train a custom text-to-image model that can be tuned in as little as ~6 minutes. Wow! Not only can you train new concepts but you can train for multiple new concepts in the same fine-tuning instance. Similar to Dreambooth, using a few images, we can augment existing text-to-image diffusion models with the new concept. The fine-tuned model should be able to generalize the new concepts and generate images that combine the new concepts with existing concepts.

Some of the challenges associated with fine-tuning these large models include:

- The model tends to forget or change the meaning of existing concepts (Language Drift)

- The model overfits the few training examples (Overfitting)

- The challenge of compositional fine-tuning (The ability to tune for multiple concepts is a relatively new concept)

A) Single Concept Fine-tuning

Learning the Rate of Change of Models

The goal is to fine-tune the model given as few as 4 images. Using Stable Diffusion as their base model, they encode the input images into a latent representation using the hybrid objectives of VAE, PatchGAN, and LPIPS such that running an autoencoder recovers the input image.

Thereafter they train a diffusion model on the latent representation with text condition injected in the model using cross-attention. Naive fine-tuning would mean that they would need to fine-tune all the weights of the model which would be computationally expensive and lead to overfitting, they opt to fine-tune a small subset of the weights.

In order to identify the subset in question they analyze the change in weights for each layer in the fine-tuned model on the target dataset given a specific loss function. The weights come from 3 types of layers:

- cross-attention (between the text and image)

- self-attention (within the image itself)

- the rest of the parameters (convolutional blocks and normalization layers)

They average the change in parameters for the 3 categories and determine which layer(s) has the largest rate of change in its weights.

They identified the cross-attention block as having the largest variation because it “modifies the features of the network according to the condition features”(Kumari, Nupur, et al.). Because the task of fine-tuning aims to update the mapping from given text to image distribution, the authors identify the specific cross-attention blocks that require updating and those are the only ones that get altered during the fine-tuning process.

Text Encoding

When creating a new concept that does not exist in the dataset an accompanying caption will be tokenized and paired with the new images in the latent space. However, when simply personalizing a concept — introducing a unique instance of an existing general category — they introduce a modified token embedding i.e. V {general class name}.*

Dataset Regularization

Training on the new image & text pairs can lead to language drift → This is when the model forgets or changes the meaning of an existing concept. Having such few images connected to general classes could lead to overfitting and a lack of variation in generated instances belonging to the class. To manage these issues they select 200 regularization images from the LAION-400M dataset with corresponding captions that have a high similarity with the target text prompt (above a 0.85 threshold) in the CLIP encoding space.

B). Multiple Concept Compositional Fine-tuning

To do this they combine the training datasets of all the concepts and train them jointly. They use different modifier tokens, $V_i^*$, initialized with different rarely-occurring tokens and optimize them along with cross-attention key and value matrices for each layer. The method in this project only updates the key/value projection matrices corresponding to the text features. In order to merge concepts, they simply combine the text features to generate images that merge both concepts.

Applications

- Personalized text-to-image generation

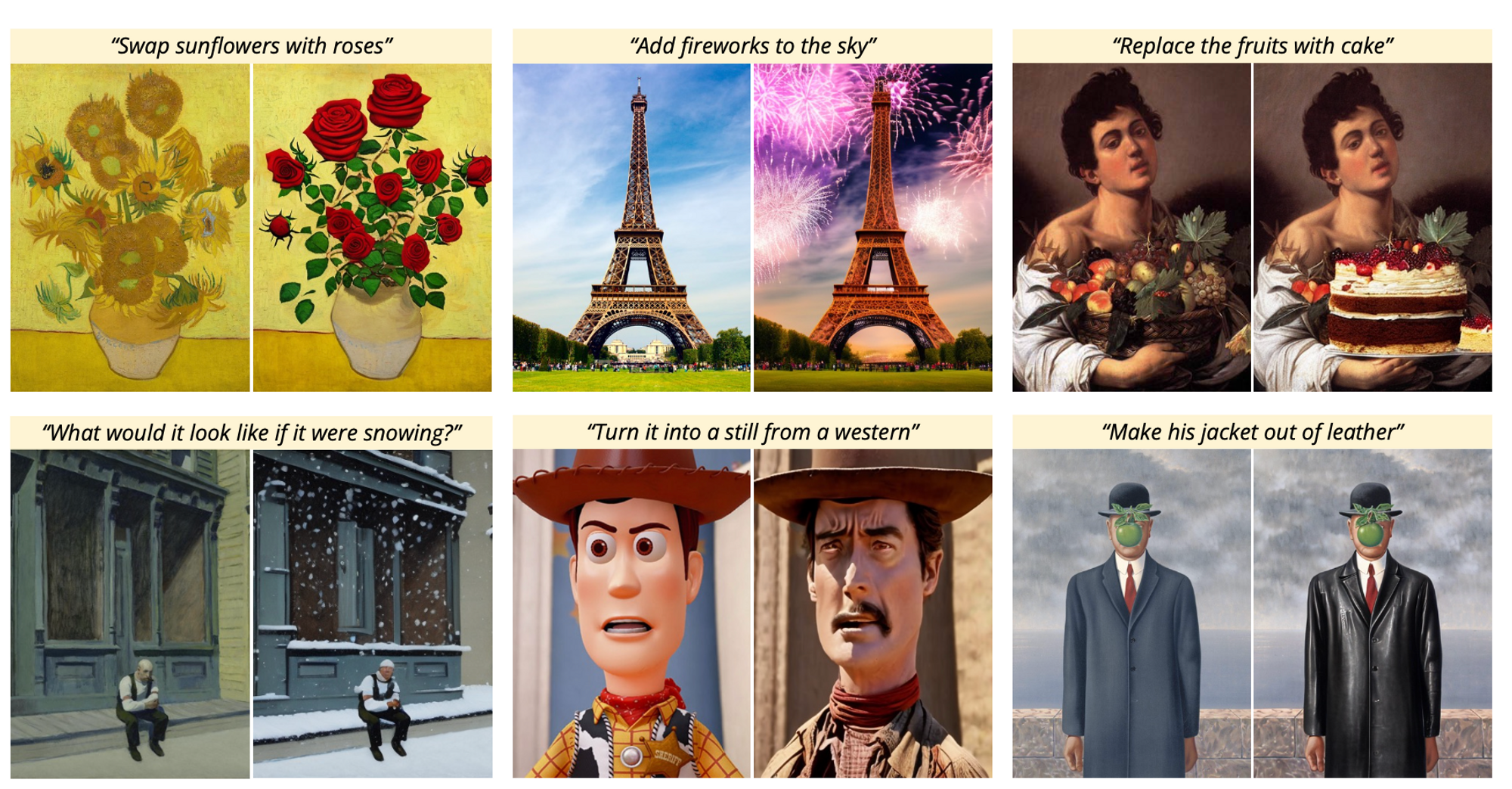

Instruct Pix2Pix

Bring this project to life

The authors decided to create a generative model that edits images based on written instructions from the user. They created their training data using GPT-3 and Stable Diffusion and show that their trained model generalizes well to new data points.

This model is an improvement from previous image editing generative models because:

- Their model only takes in a single image and instruction on how to edit an image and performs the edit in one forward pass without the need to have a user-drawn mask, additional images, or per-example inversion or finetuning

- This model enables editing from instructions that tell the model what action to perform. ‘A key benefit of following editing instructions is that the user can just tell the model exactly what to do in natural written text’. (Brooks, Tim, Aleksander Holynski, and Alexei A. Efros.)

They treat instruction-based editing as a supervised learning problem in the following ways:

- They generate pairs of text edit instructions and images before/after the edit

- They train an image editing diffusion model on this generated dataset

They use a pretrained stable diffusion checkpoint because of limited paired training data. Their diffusion model learns to predict the noise added to the latent code given image conditioning and text instruction conditioning.

“To support image conditioning, we add additional input channels to the first convolutional layer, concatenating the latent code and the image conditioning input.” (paper) They “reuse the same text conditioning mechanism that was originally intended for captions to instead take as input the text edit instruction”

They implement classifier-free guidance. This is a method of trading off the quality and diversity of samples generated by a diffusion model. It is commonly used in conditional text-to-image generation to “improve the visual quality of generated images and to make sampled images better correspond with their conditioning”

Pix2Pix can be used to create any text edits that you can think of. The applications are not as fragmented as in the previous 2 models.

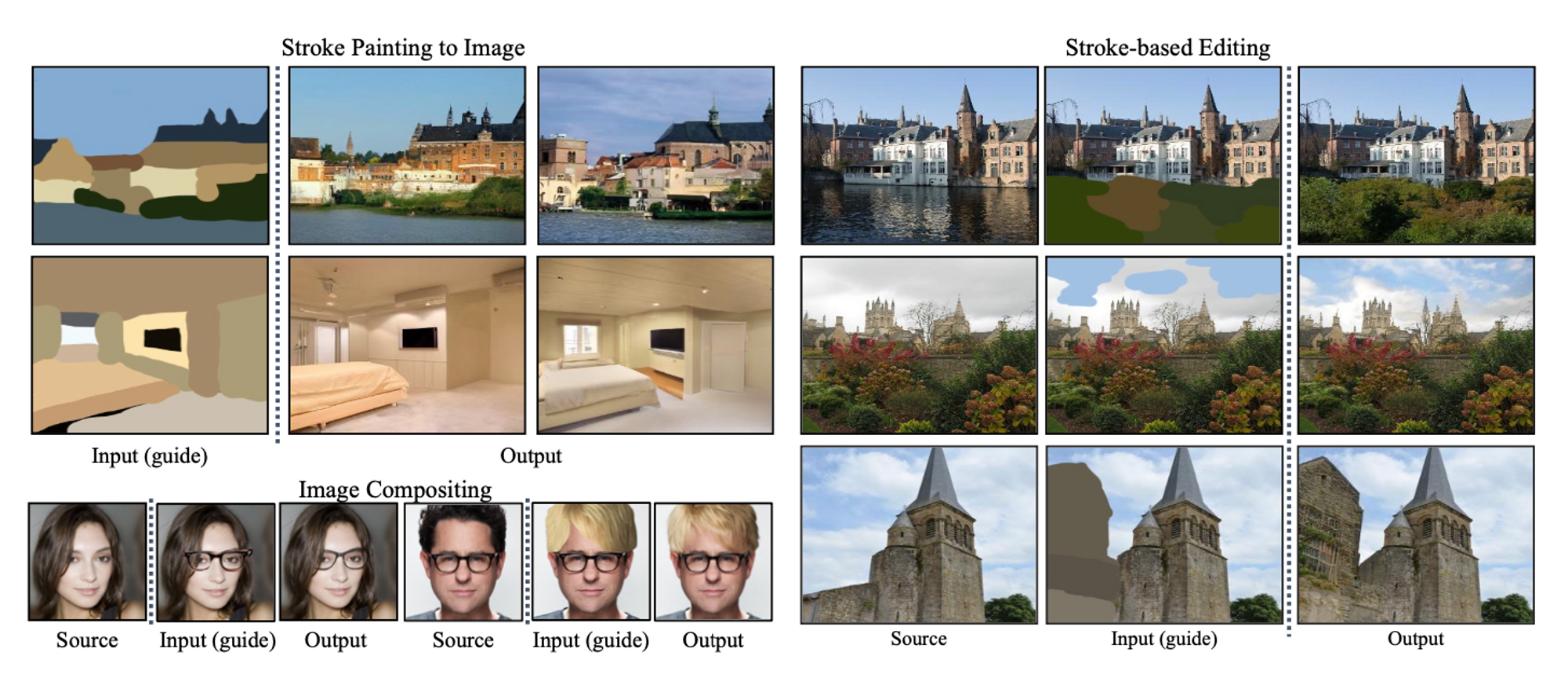

SDEdit

Bring this project to life

Unlike the previous methods, this method of image editing does not rely on text information. In this project, the authors aim to create an image editing diffusion model which relies on 3 different guidance techniques:

- stroke-based image synthesis → This is where the input guidance is an RGB image entirely created out of stroke paintings. This means that the entire image will have to be generated from scratch by the diffusion generator.

- stroke-based image editing → This is where the input guidance is an RGB image with realistic content and some portions of the content have been overlayed with paint strokes which will guide the edits in those portions of the image

- image compositing → This is where the input guidance image is an RGB image with coarse strokes indicating the need for artifacts to be included in the image i.e. sketching a pair of glasses over a person’s face.

This project uses a stochastic differential editing diffusion model to generate the images. What is interesting is that once the guidance image is given, the model doesn't have to add 100% noise to it through the noising process. The model can add 60% noise to the guidance image and then pass the picture through the denoising process to generate a realistic image. Therefore, they add a bit of noise to the guidance image and then denoise it to a reasonable image.

Throughout the paper, the authors discuss a tradeoff between faithfulness and realism. Faithfulness is a measure of how much the edited image matches the guidance description. Realism is a measure of how realistic the image is.

- The faithfulness is measured by $L_2$ distance (the intuition is the same thing behind LPIPS)

- The realism is measured by the kernel inception distance (KID)

Higher values of $t_0$ for the number of diffusion steps lead to more realism but less faithfulness and vice versa. The authors say that you can use a binary search to find the ideal $t_0$ such that there’s a balance between faithfulness and realism.

Applications → SDEdit can be used to create any visual edit that you can think of. Of course, the possible results are bound by the examples seen in the training data. 🙂

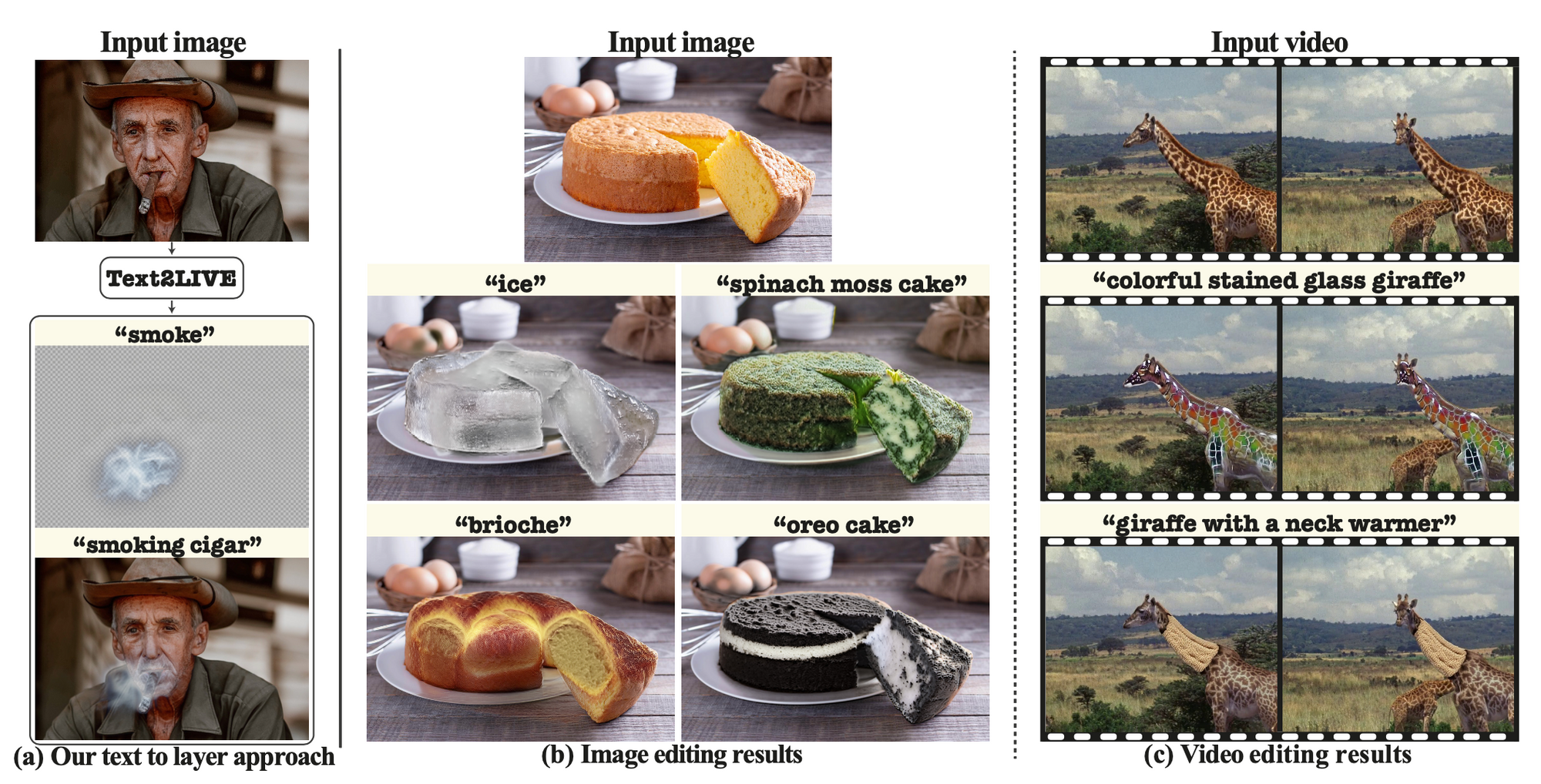

Text2Live

Bring this project to life

The goal of this project is to provide a method to do zero-shot text-driven manipulation in images and video.

- What’s interesting about this model is that because of the need for ‘zero-shot learning’, the training of the model does not require the collection of a new training set. The training set comprises image and text augmentations of the input image and text edit prompt.

- They train the generator using an internal dataset generated from a single input while leveraging an external pre-trained CLIP model to create loss functions.

- Rather than generating an edited image, the model outputs an edited RGBA layer (color & opacity) that is composited over the original image. The cool thing about this is that they can easily localize the edits to the regions of interest while leaving the rest of the image unedited. This ensures high fidelity to the original input.

- Previous methods of image generation, not limited to the few mentioned above, have various limitations:

- They often need to combine a pretrained generator in conjunction with CLIP to encode the input image/text data

- GANs, for instance, struggle with images outside their training domain and with converting images into their latent spaces

- Diffusion models overcome these barriers but face a tradeoff between satisfying the target edit and maintaining high fidelity but it is not straightforward to apply the edits to the video.

- In order to expand the edits to videos, they reduce the video data into 2D atlases, apply the edits onto the atlas as if it was a 2D image, and then use the atlases to map the entire video in a consistent manner. (More on neural layered atlases (NLA) in a future post 😊)

Applications of Text2Live

- Image and Video Editing → This model can edit both images and videos with text-based instructions.

Summary

While Textual Inversion, DreamBooth, and Custom Diffusion fine-tune pretrained models to learn new text-to-image pairs, Custom Diffusion edits a portion of the model while DreamBooth requires fine-tuning of the entire model to get results that maintain the fidelity of the subject. There is a tradeoff between time and image quality when choosing between these three models. Custom Diffusion, however, manages to maintain high subject fidelity while fine-tuning the model within minutes. Textual Inversion, Instruct pix2pix, and SDEdit both offer the ability to edit portions of an image. While choosing between the three models I would consider the user's ability to word their proposed edits. While it’s easy to propose the text prompt “change the city to Tokyo” it is difficult to propose the text prompt that edits a select portion of the image while retaining the other unaltered features. For text edits, Instruct pix2pix and Text2LIVE differ from DreamBooth, Custom Diffusion, and Textual Inversion because they do not require a fine-tuned model and are instead ready for use for editing. Once DreamBooth, Textual Inversion, and Custom Diffusion have been fine-tuned on your images and subject name, then you can go ahead and propose text edits. In this regard, I think that Custom Diffusion, SDEdit, Instruct pix2pix, and Text2Live give faster high-quality results. These are some factors to consider when using diffusion models for image editing.

$\text{Note}_1$: After reading the DreamBooth paper, it was apparent that Dall.E 2 and Imagen were insufficient at maintaining fidelity subject’s attributes and therefore I do not cover them in this article. They also do not present themselves as “image editing” generation models.

$\text{Note}_2$: I am aware of Low-Rank Adaptation (LoRA) which proposes an easier fine-tuning method by freezing the pretrained weights and injecting trainable matrices into the transformer network of the model. Although its implementation could affect the performance of the mentioned models, i.e. DreamBooth, I am choosing to cover that in a future article because it is not directly applicable to image editing.

Citations:

- Gal, Rinon, et al. "An image is worth one word: Personalizing text-to-image generation using textual inversion." arXiv preprint arXiv:2208.01618 (2022).

- Ruiz, Nataniel, et al. "Dreambooth: Fine tuning text-to-image diffusion models for subject-driven generation." arXiv preprint arXiv:2208.12242 (2022).

- Kumari, Nupur, et al. "Multi-Concept Customization of Text-to-Image Diffusion." arXiv preprint arXiv:2212.04488 (2022).

- Brooks, Tim, Aleksander Holynski, and Alexei A. Efros. "Instructpix2pix: Learning to follow image editing instructions." arXiv preprint arXiv:2211.09800 (2022).

- Meng, Chenlin, et al. "Sdedit: Image synthesis and editing with stochastic differential equations." arXiv preprint arXiv:2108.01073 (2021).APA

- Bar-Tal, Omer, et al. "Text2live: Text-driven layered image and video editing." Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XV. Cham: Springer Nature Switzerland, 2022.