
Welcome, fellow anime enthusiasts! Picture this: you're surrounded by posters of your favorite anime characters, and suddenly inspiration strikes! You want to create your own anime masterpiece right now, but how can you do it?

With LoRA and diffusion models we will learn how to create amazing anime characters!

In this article, we will learn how to generate anime images with the most talked-about duo: LoRA and Stable Diffusion (SD). We will cover the basics of LoRA and why it is used, and also get an overview of SD.

What makes Anime portraits so special

Anime characters are like old friends; we never get tired of them. They come in all shapes and sizes, from fierce warriors to quirky high school students to BTS characters. They are very special to us. But what makes them truly special is their ability to capture our hearts and whisk us away to fantastical worlds where anything is possible.

Previously, creating custom anime-style artwork required several things, most notably artistic talent. Now, with Stable Diffusion, it is possible to take advantage of the AI revolution and make our own artwork with little to no artistic training.

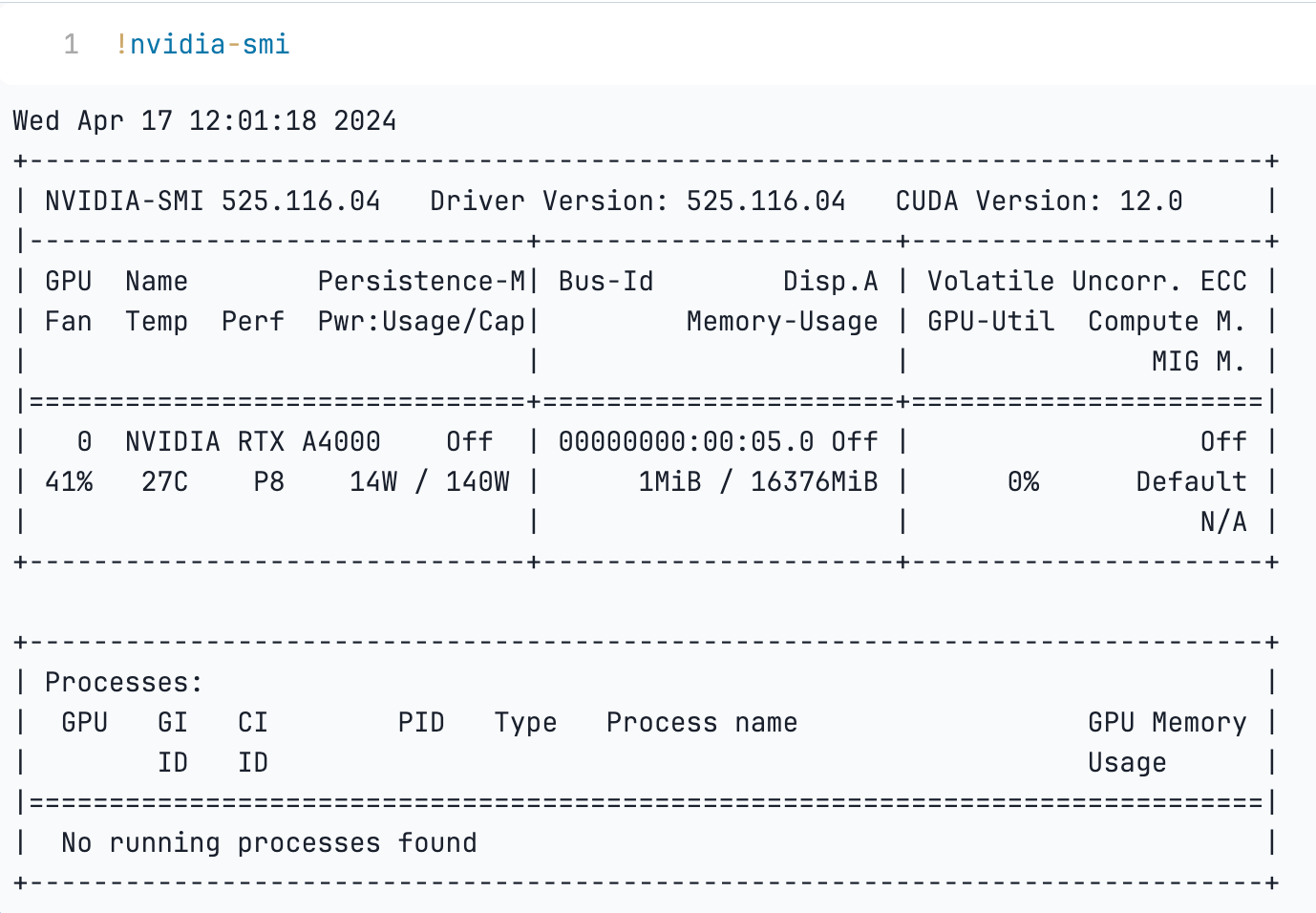

The best part is that we will do this using the powerful Paperspace GPUs. With cutting-edge technology at your fingertips, there's no telling what amazing characters you might create. For this tutorial we will use an A4000 GPU, which costs $0.76/hour. Powered by the NVIDIA Ampere architecture and the latest CUDA cores, it delivers a significant performance improvement over the previous generation.

The A4000 provides 16 GB of GDDR6 memory with ECC for graphics and compute-intensive tasks, double the memory capacity of the previous generation. Feel free to check the NVIDIA page to learn more.

What is LoRA

As models grow larger, full fine-tuning, which typically involves retraining all of the model's parameters, becomes tedious and costly, both in money and in compute. Low-Rank Adaptation, or LoRA, was developed to address this challenge.

LoRA works by freezing the pre-trained model weights and introducing trainable rank decomposition matrices into every layer of the Transformer architecture. This approach significantly reduces the number of trainable parameters required for downstream tasks.
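To make the idea concrete, here is a minimal pure-Python sketch of a LoRA-style layer. The layer sizes, the `scale` argument, and the helper names (`matvec`, `lora_forward`, `trainable_params`) are illustrative assumptions, not code from the paper or from any library. The frozen weight `W` is never modified; only the two small matrices `A` and `B` would be trained. Starting `B` at zero follows the initialization described in the LoRA paper, so the adapted layer initially behaves exactly like the frozen one.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, scale=1.0):
    """Compute y = W x + scale * B (A x). W stays frozen; A and B are trainable."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + scale * u for b, u in zip(base, update)]


def trainable_params(d_out, d_in, rank):
    """Return (parameters of the full matrix, parameters of its LoRA factors)."""
    return d_out * d_in, rank * (d_in + d_out)


rng = random.Random(0)
d_out, d_in, rank = 6, 8, 2  # toy sizes, chosen only for illustration
W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]
B = [[0.0] * rank for _ in range(d_out)]  # zero init: no change at the start
x = [rng.gauss(0, 1) for _ in range(d_in)]

y = lora_forward(W, A, B, x)
print(y == matvec(W, x))  # True: with B = 0 the output matches the frozen layer

# Hypothetical 4096 x 4096 projection adapted with rank 8
full, lora = trainable_params(4096, 4096, 8)
print(full, lora, full // lora)  # 16777216 65536 256
```

For this single hypothetical layer, the low-rank factors hold 256 times fewer trainable values than the full matrix. The overall saving for a real model depends on which layers are adapted and on the chosen rank.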

One of the examples in the original research paper highlights that, compared to fine-tuning GPT-3 175B with Adam, LoRA can reduce the number of trainable parameters by a factor of 10,000 and the GPU memory requirement by a factor of three.

Furthermore, despite having fewer trainable parameters, LoRA demonstrates comparable or superior model quality to full fine-tuning on architectures such as RoBERTa, DeBERTa, GPT-2, and GPT-3. Moreover, LoRA achieves higher training throughput and, unlike adapter-based approaches, does not incur additional inference latency.

We have a detailed article on "Training a LoRA model for Stable Diffusion XL with Paperspace," and we highly recommend it as a prerequisite for better understanding the model.

Overview of Stable Diffusion and the model used

Stable Diffusion is a generative artificial intelligence (generative AI) model that uses diffusion technology and a latent space to generate photorealistic images. The model can run on a CPU, but it performs much better on a GPU. Essentially, diffusion models use Gaussian noise to encode an image. They then use a noise predictor together with a reverse diffusion process to reconstruct the image.

The main components of Stable Diffusion include a variational autoencoder, forward and reverse diffusion, a noise predictor, and text conditioning.

In a variational autoencoder, there are two main components: an encoder and a decoder. The encoder compresses a large 512x512 pixel image into a smaller 64x64 representation in a latent space that's easier to handle. Later, the decoder reconstructs this compressed representation back into a full-size 512x512 pixel image.
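The size reduction can be checked with a few lines of arithmetic. This is only a sketch: the 3 colour channels and the 4 latent channels are assumptions that the article does not state, and `compression_ratio` is a hypothetical helper written for this example.

```python
def compression_ratio(image_hw=512, image_channels=3, latent_hw=64, latent_channels=4):
    """Ratio of values stored in pixel space to values stored in latent space."""
    pixel_values = image_hw * image_hw * image_channels
    latent_values = latent_hw * latent_hw * latent_channels
    return pixel_values / latent_values


downsampling = 512 // 64  # each spatial side shrinks by this factor
ratio = compression_ratio()
print(f"Each side shrinks {downsampling}x; the latent holds {ratio:.0f}x fewer values.")
```

Under these assumptions the diffusion process works on roughly 48 times fewer values than it would in pixel space, which is why the latent space is easier to handle.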

Forward diffusion involves gradually adding Gaussian noise to an image until only random noise remains. During training, all images undergo this process. At generation time, forward diffusion is typically used only for image-to-image conversion.
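The noising process can be sketched in plain Python. The example below assumes a linear noise schedule with 1000 steps and beta values from 1e-4 to 0.02, which are common defaults in the diffusion literature and are not taken from this article. The function names are hypothetical, and a four-value list stands in for an image.

```python
import math
import random


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances that grow linearly (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: how much signal survives."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar_t, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    signal, sigma = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [signal * v + sigma * rng.gauss(0.0, 1.0) for v in x0]


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule())
x0 = [1.0, -1.0, 0.5, 0.0]  # tiny stand-in for image values

for t in (0, 499, 999):
    x_t = add_noise(x0, alpha_bars[t], rng)
    print(t, alpha_bars[t], x_t)
```

As `t` grows, `alpha_bars[t]` approaches zero, so the sample carries less and less of the original values and more and more noise.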

Reverse diffusion is the opposite process, essentially undoing the forward diffusion step by step. For instance, if you train the model with images of cats and dogs, the reverse diffusion process would tend to reconstruct either a cat or a dog, with little in between. In practice, training involves vast amounts of images and uses prompts to create diverse and unique outputs.

A noise predictor, implemented as a U-Net model, plays a crucial role in denoising images. U-Net models, originally designed for biomedical image segmentation, are employed to estimate the noise in the latent space and subtract it from the image. This process is repeated for a specified number of steps, gradually reducing noise according to user-defined parameters. The noise predictor is influenced by conditioning prompts, which guide the final image generation.
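The repeated "estimate the noise, subtract it, step to a less noisy state" loop can be sketched as follows. This is a toy under stated assumptions: it uses a deterministic, DDIM-style update (one of several possible samplers), a made-up four-step schedule, and an `oracle` function that stands in for the trained U-Net by cheating and looking at the clean sample. All names here are hypothetical.

```python
import math
import random


def predict_x0(x_t, eps_hat, alpha_bar_t):
    """Subtract the predicted noise and rescale to estimate the clean sample."""
    s, n = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [(x - n * e) / s for x, e in zip(x_t, eps_hat)]


def denoise(x_t, alpha_bars, noise_predictor):
    """Walk from the noisiest step back to a clean sample.

    alpha_bars is ordered from least noisy (first) to most noisy (last).
    """
    for i in range(len(alpha_bars) - 1, -1, -1):
        eps_hat = noise_predictor(x_t, alpha_bars[i])
        x0_hat = predict_x0(x_t, eps_hat, alpha_bars[i])
        prev = alpha_bars[i - 1] if i > 0 else 1.0  # 1.0 means no noise left
        s, n = math.sqrt(prev), math.sqrt(1.0 - prev)
        x_t = [s * a + n * e for a, e in zip(x0_hat, eps_hat)]
    return x_t


x0 = [1.0, -1.0, 0.5, 0.0]          # tiny stand-in for a clean latent
alpha_bars = [0.9, 0.6, 0.3, 0.05]  # made-up schedule for illustration


def oracle(x_t, alpha_bar_t):
    """Stand-in for the U-Net: recovers the exact noise because it knows x0."""
    s, n = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [(x - s * c) / n for x, c in zip(x_t, x0)]


rng = random.Random(0)
s, n = math.sqrt(alpha_bars[-1]), math.sqrt(1.0 - alpha_bars[-1])
x_noisy = [s * v + n * rng.gauss(0.0, 1.0) for v in x0]

restored = denoise(x_noisy, alpha_bars, oracle)
print([round(v, 6) for v in restored])
```

A real noise predictor never sees the clean sample, so its estimates are imperfect and are steered by the conditioning prompt instead. The number of entries in the schedule plays the role of the user-defined number of steps.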

Text conditioning is a common form of conditioning, where textual prompts are used to guide the image generation process. A CLIP tokenizer splits the prompt into tokens, and each token is embedded into a 768-value vector. Up to 75 tokens can be used in a prompt. Stable Diffusion passes these embeddings from the text encoder to the U-Net noise predictor through a text transformer. By changing the seed of the random number generator, different images can be generated in the latent space.
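The effect of the seed can be illustrated without any model. The sketch below uses Python's `random` module as a stand-in for the framework's random number generator, and the 4x64x64 latent shape is an assumption. In the diffusers library, the analogous control is a seeded `torch.Generator` passed through the pipeline's `generator` argument.

```python
import random


def initial_latent(seed, channels=4, height=64, width=64):
    """Draw the starting Gaussian noise for one image from a seeded generator."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(channels * height * width)]


same_a = initial_latent(seed=42)
same_b = initial_latent(seed=42)
other = initial_latent(seed=43)

print(same_a == same_b)  # True: the same seed reproduces the same starting noise
print(same_a == other)   # False: a new seed gives a new starting point
```

Because denoising starts from this noise, keeping the seed fixed makes a result reproducible, while changing it yields a different image for the same prompt.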

In this demo, we use Pastel Anime LoRA for SDXL, a high-resolution Low-Rank Adaptation model for Stable Diffusion XL. The model was fine-tuned with a learning rate of 1e-5 over 1300 global steps and a batch size of 24, on a dataset of superior-quality anime-style images. Derived from Animagine XL, this model, like other anime-style Stable Diffusion models, supports image generation using Danbooru tags.

Paperspace Demo


Let us perform a quick demo using the Paperspace platform and an A4000 GPU.

Before we start, we will do a quick check to confirm that the GPU is available:

!nvidia-smi
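The same check can also be done from Python. This is an optional sketch: `gpu_status` is a hypothetical helper, and it only reports what PyTorch can see, falling back gracefully if `torch` is not installed yet.

```python
import importlib.util


def gpu_status():
    """Return a short description of whether PyTorch can see a CUDA GPU."""
    if importlib.util.find_spec("torch") is None:
        return "torch is not installed"
    import torch

    if torch.cuda.is_available():
        return f"CUDA GPU found: {torch.cuda.get_device_name(0)}"
    return "torch is installed, but no CUDA GPU is visible"


status = gpu_status()
print(status)
```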

- Install the necessary packages and modules to run the model

!pip install diffusers --upgrade

!pip install invisible_watermark transformers accelerate safetensors

!pip install -U peft

- Import the libraries

import torch

from torch import autocast

from diffusers import StableDiffusionXLPipeline, EulerAncestralDiscreteScheduler

- Specify the base model for generating images and the LoRA safetensors file, and store them in variables

base_model = "Linaqruf/animagine-xl"

lora_model_id = "Linaqruf/pastel-anime-xl-lora"

lora_filename = "pastel-anime-xl.safetensors"

- Next, we will initialize a pipeline for the Stable Diffusion XL model with specific configurations. We load the pre-trained model and specify the torch data type to use for the model's computations. Using float16 helps to reduce memory usage and speed up computation, especially on GPUs.

pipe = StableDiffusionXLPipeline.from_pretrained(

base_model,

torch_dtype=torch.float16,

use_safetensors=True,

variant="fp16"

)

- Update the scheduler of the Stable Diffusion XL pipeline, then move the pipeline object to the GPU for accelerated computation.

pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)

pipe.to('cuda')

- Load the LoRA weights

pipe.load_lora_weights(lora_model_id, weight_name=lora_filename)

- Use the model to produce captivating anime creations

prompt = "face focus, cute, masterpiece, best quality, 1girl, green hair, sweater, looking at viewer, upper body, beanie, outdoors, night, turtleneck"

negative_prompt = "lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry"

image = pipe(

prompt,

negative_prompt=negative_prompt,

width=1024,

height=1024,

guidance_scale=12,

target_size=(1024,1024),

original_size=(4096,4096),

num_inference_steps=50

).images[0]

image.save("anime_girl.png")

We highly encourage our readers to unleash their creativity when writing prompts for image generation.
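One way to experiment is to assemble tag-style prompts programmatically. The helper below is a hypothetical sketch, not part of diffusers: `build_prompt` and `QUALITY_TAGS` are names invented for this example, and the tags are taken from the prompt used in the demo. Each resulting string can be passed as `prompt` to the `pipe(...)` call shown above.

```python
QUALITY_TAGS = ["masterpiece", "best quality"]


def build_prompt(subject_tags, quality_tags=QUALITY_TAGS):
    """Join tags into one comma-separated prompt, dropping blanks and duplicates."""
    seen, ordered = set(), []
    for tag in list(subject_tags) + list(quality_tags):
        cleaned = tag.strip().lower()
        if cleaned and cleaned not in seen:
            seen.add(cleaned)
            ordered.append(cleaned)
    return ", ".join(ordered)


# Vary a single attribute while keeping the rest of the prompt fixed
variations = [
    build_prompt(["1girl", f"{color} hair", "sweater", "outdoors", "night"])
    for color in ("green", "pink", "silver")
]
for prompt_text in variations:
    print(prompt_text)
```

Changing one tag at a time makes it easier to see which part of the prompt is responsible for a change in the generated image.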

Conclusion

In this article we explored how to generate anime characters using LoRA and Stable Diffusion. Stable Diffusion's ability to generate images with fine details, varied styles, and controlled attributes makes it a valuable tool for numerous applications, including art, design, and entertainment.

As research and development in generative AI continues to progress, we anticipate further innovations and refinements in these models. Stable Diffusion, together with LoRA, is likely to reshape the landscape of image synthesis and push the boundaries of creativity and expression, changing how we perceive and interact with digital imagery in the years to come.

We hope you enjoyed reading the article!

References

- Pastel Anime XL LoRA

- What is Stable Diffusion

- Stable Diffusion with Paperspace

- Distil Stable Diffusion

- Training a LoRA model for Stable Diffusion XL with Paperspace