Data augmentation is a widely used practice across various verticals of machine learning to help increase data samples in the existing dataset. There could be multiple reasons to why you would want to have more samples in the training data. It could be because the data you’ve collected is too little to start training a good ML model or maybe you’re seeing evidence of some overfitting happening. In either of the cases, data augmentation can help in introducing variety/diversity in the already existing data using various techniques, and will eventually help our machine learning models in handling overfitting and generalizing well on unseen samples. It can also be thought of as a regularizer here. Augmentation methods are quite popular in the domain of computer vision and are getting there for NLP as well.

In this blog, we will majorly focus on some of the popular techniques and python libraries that can be used when trying to augment textual data.

Method 1

Talking about the work done in Easy-Data-Augmentation Techniques in NLP, the Authors propose various easy, intuitive and effective functions for transforming a given text sample to its augmented version, especially for the use-case of text classification. Text classification is the task of categorizing text pieces into pre-defined groups. The authors propose 4 functions, namely, SR (Synonym Replacement), RI (Random Insertion), RS (Random Substitution), and RD (Random Delete). Details of each of them are mentioned below:

Synonym Replacement (SR) - Randomly choose a non-stop word and replace it with its synonym.

Note: Stop words are those common words that have the same likeliness of occurring in all documents. These words as such don’t add any significant demarcating information to associated text pieces. Examples of some of them are “the”, “an”, “is”, etc. One can use language-specific single universal list of stop words for removing them from the given text, although a common practice is to extend the universal list by mining common words from domain-specific corpus as well. Natural Language ToolKit (NLTK) comes prepackaged with a list of stop users you can use as a starting point.

Random Insertion (RI) - Insert the synonym of a randomly selected word at random position in the text.

Random Substitution (RS) - Randomly swap any two words in the text.

Random Delete (RD) - Randomly remove a word at random from the text.

Each of the above-mentioned functions can be done more than 1 times but should be chosen considering the trade-off between information loss and the total number of samples. The intuition behind such transformations is to mimic the general writing behavior and mistakes any human would make while writing a piece of text. I would also recommend you go through the paper or watch this video to get insights around experiments performed. You can follow examples from this awesome python library to directly use these functions out-of-the-box. The library also allows you to perform these operations at character level and not just words.

One more thing that can be tried for doing surface level transformations apart from the above-mentioned ones is to expand contractions using regular expressions using a pre-defined map. For example - Don’t -> Do not, We’ll -> We will, etc.

Method 2

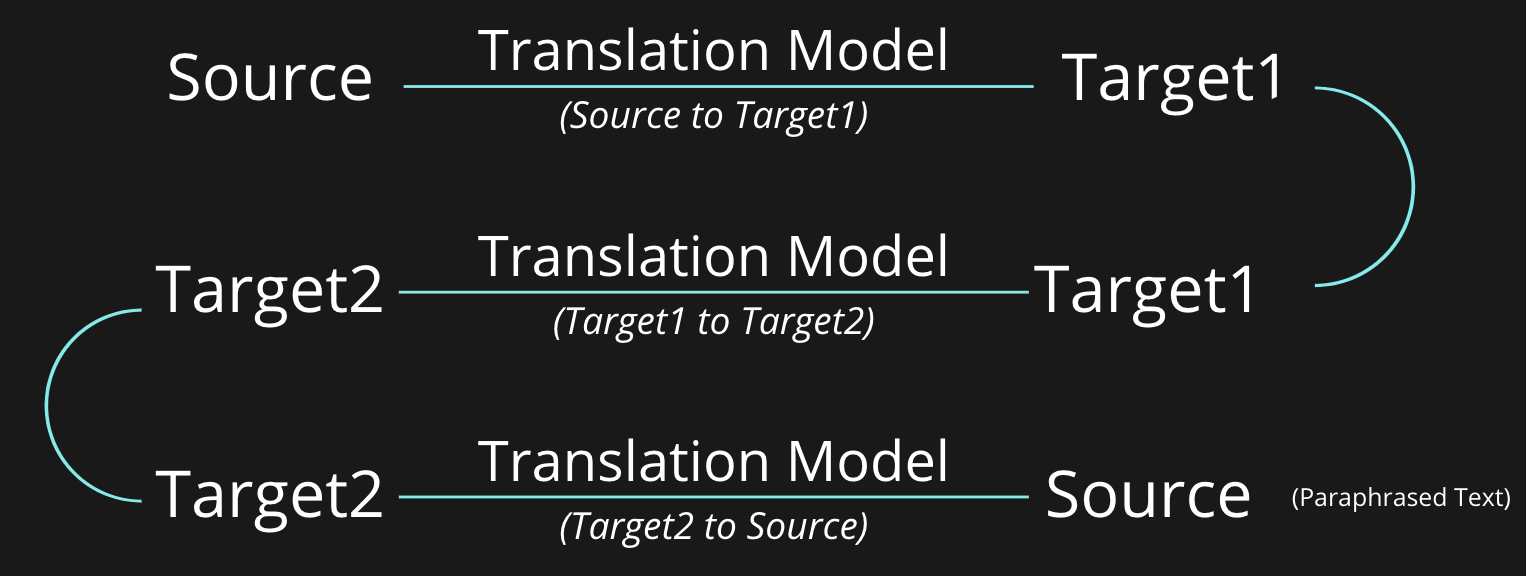

Back-translation is also a popular technique often used in practice when doing text augmentation. Given the probabilistic behavior of these days' translation systems, back-translation can be quite effective in generating paraphrased versions of the original text. Paraphrases are sentences that preserve the original intent of the original sentence while also allowing rewording of the content. For example - If the original sentence is "Everybody often goes to the movies" then one of the paraphrases for it could be "Everyone often goes to the movies".

The back-translation approach consists of 3 basic steps. Each of which are mentioned below:

Intermediate Translation - Translating the text from Source to Target language. For example - English to French.

Reverse Translation - Translating the text from step-1 back to its Source language.

Pruning - Removing back-translation if it's the same as the original text.

You can use any translation model that’s available out there for building this pipeline. Often as seen in practice, doing more than 1 level of translation can get pretty good paraphrases. For example, following the pipeline - English to French, French to Spanish, Spanish to English. The below figure illustrates the flow. But certainly, that depends on the computation bandwidth in hand. You can follow examples from this awesome python library and directly use this function out-of-the-box.

You can also check out this github, it fine-tunes a pre-trained T5 model on Quora duplicate questions dataset in a supervised fashion for generating paraphrased questions. You can always extend this further by fine-tuning it on Microsoft Research Paraphrase Corpus, PPDB: The Paraphrase Database and similar datasets for building a more general paraphrasing system.

Method 3

With recent advancements in Natural language processing with the Transformers model, this blog talks about using the GPT-2 model for text data augmentation (go here for a more general overview of GTP-2). At a very high level, the blog talks about fine-tuning existing GPT-2 models on sentences from training data by adding prefix text of class-labels to the input example sentences. It then trains the model to maximize the likelihood of the generated sequence in presence of the class labels. Adding the class labels helps the model to understand various clusters of classes available in the training data. During inference, by just giving the class name as a prompt to the GPT-2 model, it generates the sentence that aligns with the class name intent. You can find the code for this at TextAugmentation-GPT2 Github. Also, below are some of the examples generated by the above model (as stated in the official GitHub) on the SPAM/HAM email classification task.

SPAM: you have been awarded a £2000 cash prize. call 090663644177 or call 090530663647<|endoftext|>

SPAM: FREE Call Todays top players, the No1 players and their opponents and get their opinions on www.todaysplay.co.uk Todays Top Club players are in the draw for a chance to be awarded the £1000 prize. TodaysClub.com<|endoftext|>

HAM: I don't think so. You got anything else?<|endoftext|>

HAM: Ugh I don't want to go to school.. Cuz I can't go to exam..<|endoftext|>

You can use this method with a Gradient Notebook by creating the notebook with the repo as the Workspace URL, found in the advanced options toggled section.

Method 4

Some of the above-mentioned approaches if done blindly could result in the label and text intent mismatching. For example, if the sentence is “I don’t like this food” and the label is “negative”. And let’s say we are doing random word deletion as a result of which the word “don’t” gets deleted, so now the new sentence becomes “I like this food” with the label “negative”. This is wrong! So it’s always important to keep an eye on the transformed sentences and tweak them accordingly.

So the authors’ in Data Augmentation using Pre-trained Transformer Models point to the above-mentioned limitations and propose a technique to perform contextual augmentation to preserve label and sentence intent. They do this by fine-tuning various pre-trained transformer models like BERT, GPT, Seq2Seq BART, T5, and more over sentences along with the class label information. They try out two variations of it, both of them are mentioned below:

Expand - This involves prepending class labels to each sentence and also adding class labels as part of the model vocabulary and then fine-tuning it based on the pre-defined objective of the respective Transformer model.

Prepend - This involves prepending class labels to each sentence but not adding class labels as part of the model vocabulary and fine-tuning it based on the pre-defined objective of the respective Transformer model.

They found that the pre-trained Seq2Seq models performed well compared to other approaches that the authors tried. I would recommend you go through the paper to get insights into experiments. Also, feel free to access the code for the same.

Conclusion

As discussed earlier, data augmentation is one of the important practices that you should incorporate when building your machine learning models. In this blog, we saw various data augmentation techniques in the domain of natural language processing, ranging from context insensitive to sensitive transformations, python libraries that can help prototype these functions faster and things to keep in mind when playing around with various transformation options. Also, it's important to note that data augmentation might not always help to improve model performance(but again this is something you can't guarantee for any practice in machine learning), but definitely, something to try out and having it handy is a good practice. As a rule of thumb, it's always good to try out various augmentation approaches and see which one works better and use a validation set to tune the number of samples to augment for achieving the best results.

Hope you found this read useful. Thank you!