Generative Adversarial Networks (GANs) have evolved since their inception in 2015 when they were introduced by Ian Goodfellow. GANs consist of two models: a generator and a discriminator. The generator creates new artificial data points that resemble the training data, while the discriminator distinguishes between real data points from the training data and artificial data from the generator. The two models are trained in an adversarial manner. At the beginning of training, the discriminator has almost 100% accuracy because it is comparing real structure images with noisy images from the generator. However, as the generator gets better at producing sharper images, the accuracy of the discriminator decreases. The training is said to be complete when the discriminator can no longer improve its classification accuracy (also known as an adversarial loss). At this point, the generator has optimized its data generation capacity. In this blog post, I will review an unsupervised GAN, StyleGAN, which is arguably the most iconic GAN architecture that has ever been proposed. This review will highlight the various architectural changes that have contributed to the state-of-the-art performance of the model. There are various components/concepts to look out for when comparing different StyleGAN models: their approaches to generating high-resolution images, their methods of styling the image vectors in the latent space, and their different regularization techniques which encouraged smooth interpolations between generated samples.

About the latent space ….

In this article and the original research papers, you might read about a latent space. GAN models encode the training images into vectors that represent the most ‘descriptive’ attributes of the training data. The hyperspace that these vectors exist in is known as the latent space. To understand the distribution of the training data, you would need to understand the distribution of the vectors in the latent space. The rest of the article will explain how a latent vector is manipulated to encode a certain ‘style’ before being decoded and upsampled to create an artificial image that contains the encoded ‘style’.

StyleGAN (the original model)

The StyleGAN model was developed to create a more explainable architecture in order to understand various aspects of the image synthesis process. The authors chose the style transfer task because the image synthesis process is controllable and hence they could monitor the changes in the latent space. Normally, the input latent space follows the probability density of the training data there is some degree of entanglement. Entanglement, in this context, is when changing one attribute inadvertently changes another attribute e.g. changing someone’s hair color could also change their skin tone. Unlike the predecessor GANs, the StyleGAN generator embeds the input data into an intermediate latent space which has an impact on how factors of variation are represented in the network. The intermediate latent space in this model is free from the restriction of mimicking the training data therefore the vectors are disentangled.

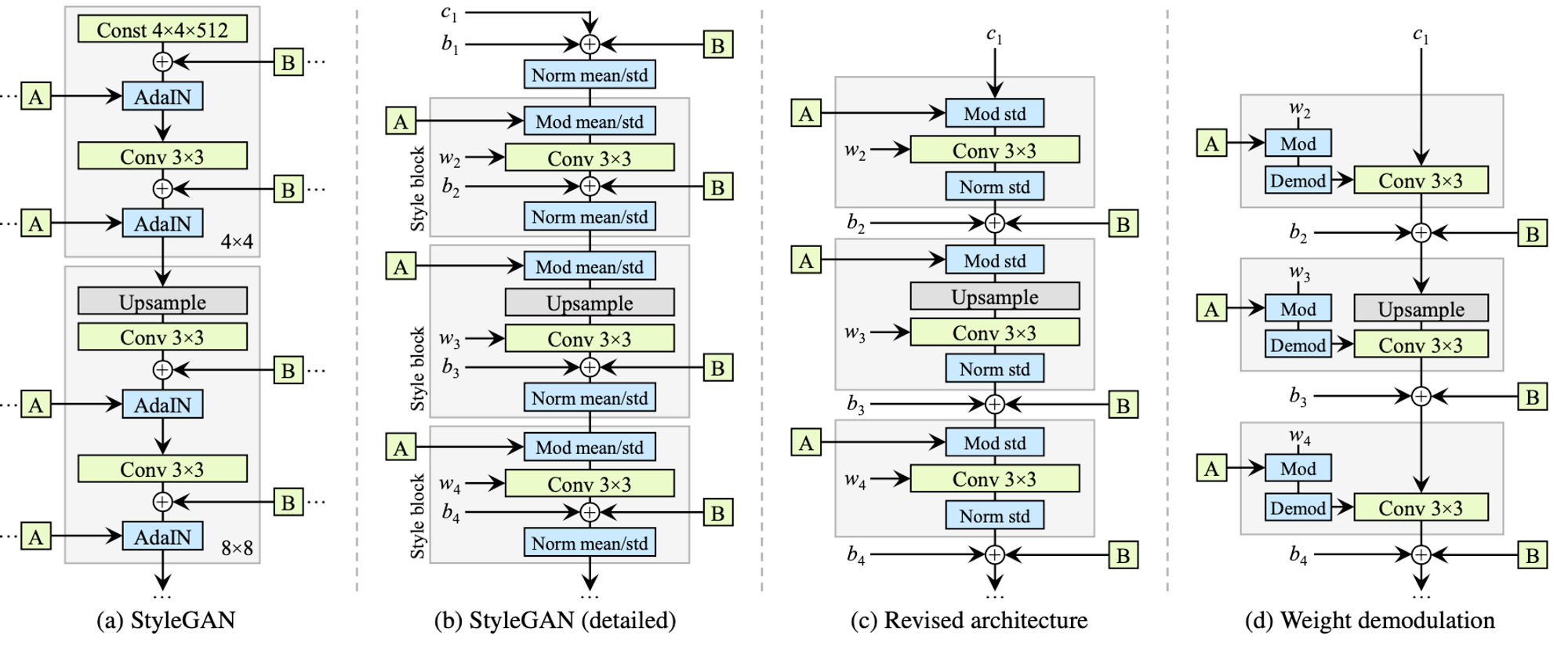

Instead of taking in a latent vector through its first layer, the generator begins from a learned constant. Given an input latent vector, $z$, derived from the input latent space $Z$, a non-linear mapping network $f: Z \rightarrow W$ produces $w \in W$. The mapping network is an 8-layer MLP that takes in and outputs 512-dimensional vectors. After we get the intermediate latent vector, $w$, learned affine transformations then specialize $w$ into styles that control adaptive instance normalization (AdaIN) operations after each convolution layer of the synthesis network $G$.

The AdaIN operations normalize each feature map of the input data separately, thereafter the normalized vectors are scaled and biased by the corresponding style vector that is injected into the operation. In this model, they edit the image by editing the feature maps which are output after every convolutional step.

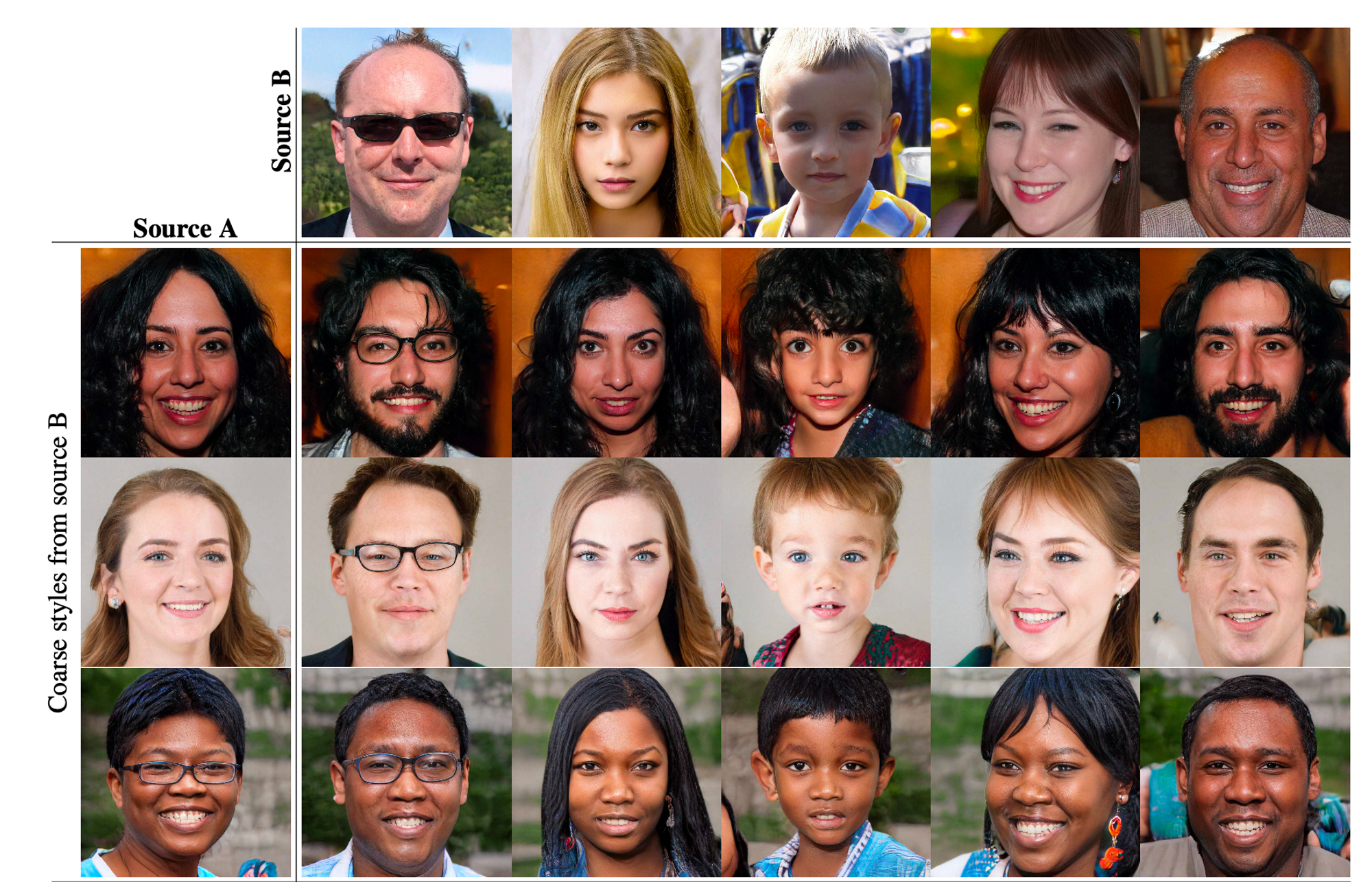

What is this style vector? StyleGAN can encode style through different techniques namely, style-mixing and by adding pixel noise during the generation process. In style-mixing, the model uses one input image to produce the style vector (encoded attributes) for a portion of the generation process before it is randomly switched out for another style vector from another input image. For per-pixel noise, random noise is injected into the model at various stages of the generation process.

To ensure that StyleGAN offered the user the ability to control the styling of the generated images, the authors designed two metrics to determine the degree of disentanglement of the latent space. Although I defined ‘entanglement’ above, I believe defining disentanglement further cements the concept. Disentanglement is when a single styling operation only affects one factor of variation (one attribute). The metrics they introduced include:

- Perceptual Path Length: This is the difference between generated images formed from vectored sampled along a linear interpolation. Given two points within a latent space, sample vectors at uniform intervals along the linear interpolation between the source and endpoints. Find the spherical interpolation within normalized input latent

- Linear Separability: They train auxiliary classification networks on all 40 of the CelebA attributes and then classify 200K generated images based on these attributes. They then fit a linear SVM to predict the label based on the latent-space point and classify the points by this place. They then compute the conditional entropy H(Y|X) where X are the classes predicted by the SVM and Y are the classes determined by the pre-trained classifier. → This tells us how much information is needed to determine the true class of the sample given that we know which side of the hyperplane it lies. A low value suggests consistent latent space directions for the corresponding factors of variation. A lower score shows more disentanglement of features.

StyleGAN 2

After the release of the first StyleGAN model to the public, its widespread use led to the discovery of some of the quirks that were causing random blob-like artifacts on the generated images. To fix this, the authors redesigned the feature map normalization techniques they were using in the generator, revised the ‘progressive growing’ technique they were using to generate high-resolution images, and employed new regularization to encourage good conditioning in the mapping from latent code to images.

- Reminder: StyleGAN is a special type of image generator because it takes a latent code $z$ and transforms it into an intermediate latent code $w$ using a mapping network. Thereafter affine transformations then produce styles that control the layers of the synthesis network via adaptive instance normalization (AdaIN). Stochastic variation is facilitated by providing additional noise to the synthesis network → This noise contributes to image variation/diversity

- Metrics Reminder:

- FID → A measure of the differences in density of two distributions in the high dimensional feature space of an InceptionV3 classifier

- Precision → A measure of the percentage of the generated images that are similar to training data

- Recall → Percentage of the training data that can be generated

The authors mention that the metrics above are based on classifier networks that have been shown to focus on texture rather than shapes and therefore some aspects of image quality are not captured. To fix this, they propose a perceptual path length (PPL) metric that correlates with the consistency and stability of shapes. They use PPL to regularize the synthesis network to favor smooth mappings and improve image quality.

By reviewing the previous StyleGAN model, the authors noticed blob-shaped artifacts that resemble water droplets. The anomaly starts around the $64^2$ resolution and is present in all feature maps and gets stronger at higher resolutions. They track the problem down to the AdaIN operation that normalizes the mean and variance of each feature map separately. They hypothesize that the generator intentionally sneaks signal strength information past the instance normalization operations by creating a strong, localized spike that dominates the statistics. By doing so, the generator can effectively scale the signal as it likes elsewhere. This leads to generating images that can fool the discriminator but ultimately fail the ‘human’ qualitative test. When they removed the normalization step, the artifacts disappeared completely.

The original StyleGAN applies bias and noise within the style block causing their relative impact to be inversely proportional to the current style’s magnitude. In StyleGAN2 the authors move these operations outside the style block where they operate on normalized data. After this change, the normalization and modulation operate on the standard deviation alone.

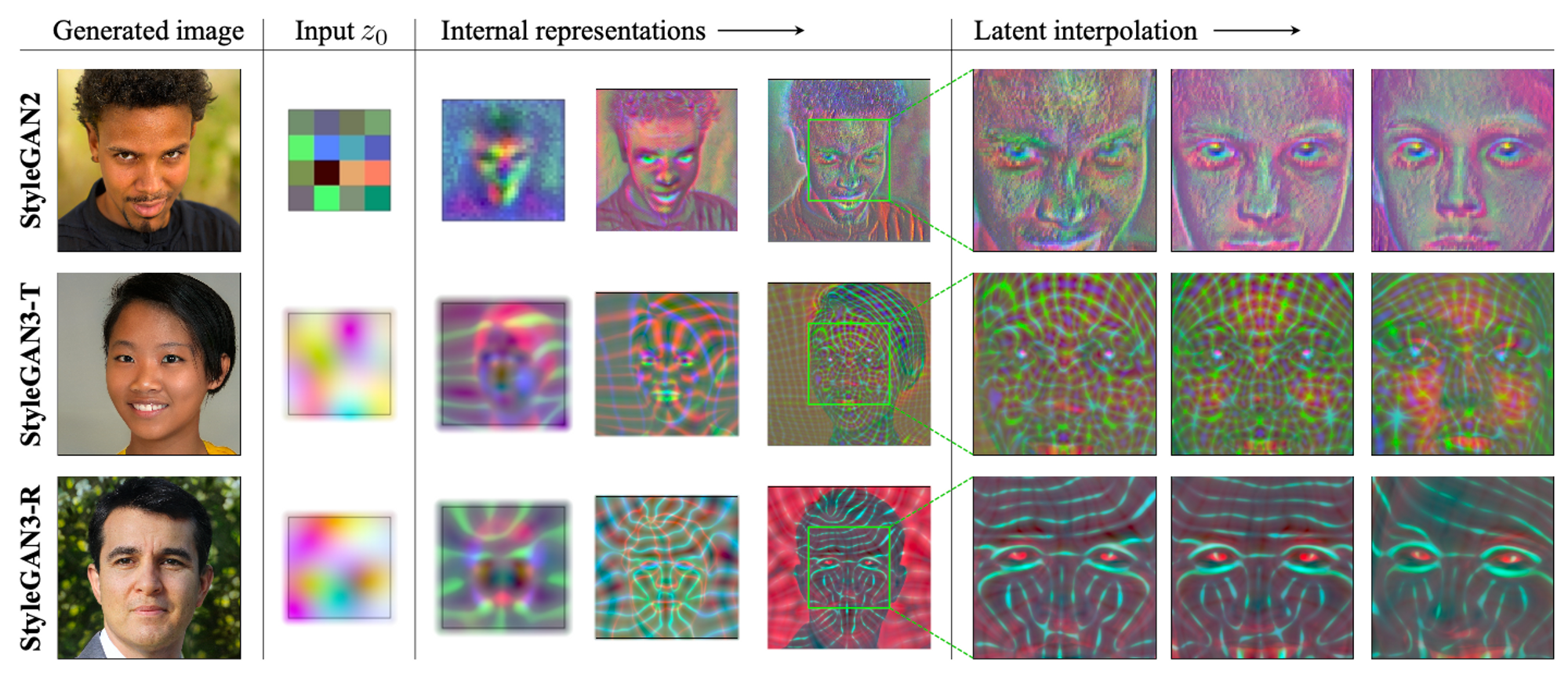

In addition to the droplet-like artifacts, the authors discovered the issue of ‘texture sticking’. Texture sticking occurs when progressively grown generators seem to have a strong location preference for details where certain attributes of an image seem to have a preference for certain regions of the image. This could be seen when generators always generate images with the person’s mouth at the center of the image (as seen in the image above). The hypothesis is that in progressive growth each intermediate resolution serves as a temporary output resolution. This, therefore, forces these intermediate layers to learn very high-frequency details if the input which compromises shift-invariance.

To fix this issue, they use a modified version of the MSG-GAN generator which connects the matching resolutions of the generator and the discriminator using multiple skip connections. In this new architecture, each block outputs a residual which is summed up and scaled, as opposed to a “potential output” for a given resolution of StyleGAN.

For the discriminator, they provide the downsampled image to each resolution block. They also use bilinear fitting in up and downsampling operations and modify the design to use residual connections. The skip connections in the generator drastically improve PPL and the residual discriminator is beneficial for FID.

StyleGAN 3

The authors note that although there are several ways of controlling the generative process, the foundations of the synthesis process are still only partially understood. In the real world, the details of different scales tend to transform hierarchically e.g. moving a head causes the nose to move which in turn moves the skin pores around it. Changing the details of the coarse features changes the details of the high-frequency features. This is the scenario in which texture sticking does not affect the spatial invariance of the generation process.

For a typical GAN generator the “coarse, low-resolution features are hierarchically refined by upsampling layers, locally mixed by convolutions, and new detail is introduced through nonlinearities” (Karras, Tero, et al.) Current GANs do not synthesize images in a natural hierarchical manner: The coarse features mainly control the presence of finer features but not their precise positions. Although they fixed the artifacts in StyleGAN2, they did not completely fix the spatial invariance of finer features like hair.

The goal of StyleGAN3 is to create an “architecture that exhibits a more natural transformation hierarchy, where the exact sub-pixel position of each feature is exclusively inherited from the underlying coarse features.” This simply means that coarse features learned in earlier layers of the generator will affect the presence of certain features but not their position in the image.

Current GAN architectures can partially encode spatial biases by drawing on unintentional positional references available to the intermediate layers through image borders, per-pixel noise inputs and positional encodings, and aliasing.

Despite aliasing receiving little attention in GAN literature, the authors identify two sources of it:

- faint after-images resulting from non-ideal upsampling filters

- point-wise application of non-linearities in the convolution process i.e. such as ReLU / swish

Before we get into some of the details of StyleGAN3, I need to define the terms aliasing and equivariance for the context of StyleGAN.

Aliasing → “Standard convolutional architectures consist of stacked layers of operations that progressively downscale the image. Aliasing is a well-known side-effect of downsampling that may take place: it causes high-frequency components of the original signal to become indistinguishable from its low-frequency components.”(source)

Equivariance → “Equivariance studies how transformations of the input image are encoded by the representation, invariance being a special case where a transformation has no effect.” (source)

One of StyleGAN3’s goals is to redesign the StyleGAN2 architecture to suppress aliasing. Recall that aliasing is one of the factors that ‘leak’ spatial information into the generation process thereby enforcing texture sticking of certain attributes. The spatial encoding of a feature would be useful information in the later stages of the generator, therefore, making it a high-frequency component. To ensure that it remains a high-frequency component, the model needs to implement a method to filter it from low-frequency components that are useful earlier in the generator. They note that previous upsampling techniques are insufficient to prevent aliasing and therefore they present the need to design high-quality filters. In addition to designing high-quality filters, they present a principled solution to aliasing caused by point-wise nonlinearities (ReLU, swish) by considering their effect in the continuous domain and appropriately low-pass filtering the results.

Recall that equivariance means that the changes to the input image are traceable through its vector representation. To enforce continuous equivariance of sub-pixel translation, they describe a comprehensive redesign of all signal-processing aspects of the StyleGAN2 generator. Once aliasing is suppressed, the internal representations now include coordinate systems that allow details to be correctly attached to the underlying surfaces.

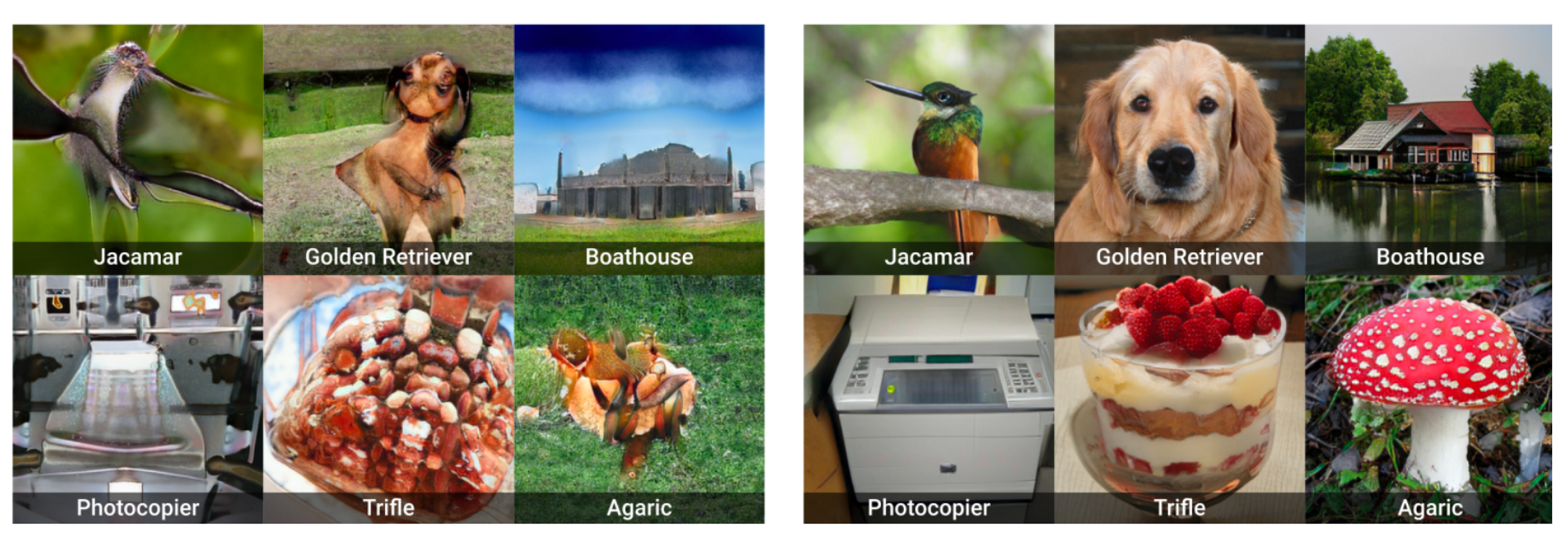

StyleGAN XL

StyleGAN 1,2, & 3 have shown tremendous success with regard to face-image generation but have lagged in terms of image generation for more diverse datasets. The goal of StyleGAN XL is to implement a new training strategy influenced by Projected GAN to train StyleGAN3 on a large, unstructured, and high-resolution dataset like ImageNet.

The 2 mains issues addressed with previous GAN models are:

- The need for structured datasets to guarantee semantically correct generated images

- The need for larger more expensive models to handle large datasets (a scale issue)

What is Projected GAN? Projected GAN works by projecting generated and real samples into a fixed, pretrained feature space. This revision improves the training stability, training time, and data efficiency of StyleGAN3. The goal is to train the StyleGAN3 generator on ImageNet and success is defined in terms of sample quality primarily measured by inception score (IS) and diversity measured by FID.

To train on a diverse class-conditional dataset, they implement layers of StyleGAN3-T the translation-equivariant configuration of StyleGAN3. The authors discovered that regularization improves results on uni-modal datasets like FFHQ or LSUN while it can be disadvantageous to multi-modal datasets therefore, they avoid regularization where possible in this project.

Unlike StyleGAN 1&2 they disable style mixing and path length regularization which leads to poor results and unstable training when used on complex datasets. Regularization is only beneficial when the model has been sufficiently trained. For the discriminator, they use spectral normalization without gradient penalties and they also apply a gaussian filter to the images because it prevents the discriminator from focusing on high frequencies early on. As we saw in previous StyleGAN models, focusing on high frequencies early on can lead to issues like spatial invariance or random unpleasant artifacts.

StyleGAN inherently works with a latent dimension of size 512. This dimension is pretty high for natural image datasets (ImageNet’s dimension is ~40). A latent size code of 512 is highly redundant and makes the mapping network’s task harder at the beginning of training. To fix this, they reduce StyleGAN’s latent code z to 64 to have stable training and lower FID values.

Conditioning the model on class information is essential to control the sample class and improve overall performance. In a class-conditional StyleGAN, a one-hot encoded label is embedded into a 512-dimensional vector and concatenated with z. For the discriminator, class information is projected onto the last layer. These edits to the model make the generator produce similar samples per class resulting in high IS but it leads to low recall. They hypothesize that the class embeddings collapse when training with Projected GAN. To fix this, they ease the optimization of the embeddings via pertaining. They extract and spatially pool the lowest resolution features of an Efficientnet-lite0 and calculate the mean per ImageNet class. Using this model keeps the output embedding dimension small enough to maintain stable training. Both the generator and discriminator are conditioned on the output embedding.

Progressive growth of GANs can lead to faster more stable training which leads to higher resolution output. However, the original method proposed in earlier GANs leads to artifacts. In this model, they start the progressive growth at a resolution of $16^2$ using 11 layers and every time the resolution increases, we cut off 2 layers and add 7 new ones. For the final stage of $1024^2$, they add only 5 layers as the last two are not disregarded. Each stage is trained until FID stops decreasing.

Previous studies show that “pretrained feature networks F perform similarly in terms of FID when used for Projected GAN training regardless of training data, pertaining objective, or network architecture.” (Sauer, Axel, Katja Schwarz, and Andreas Geiger.) In this paper, they explore the value of combining different feature networks. Starting from the standard configuration, an EfficientNet-lite0, they add a second feature network to inspect the influence of its pertaining objective and architecture. They mention that combining an EfficientNet with a ViT improves performance significantly because these two architectures learn different representations. Combining both architectures has complementary results.

The final contribution of this model is the use of classifier guidance mainly because it specializes on diverse datasets. Classifier guidance injects class information into diffusion models. In addition to the class embeddings given by EfficientNet, they also inject class information into the generator. How do they do this?

- They pass a generated image $x$ through a pretrained classifier (DeiT-small) to predict the class label $c_i$

- Add a cross-entropy loss as an additional term to the generator loss and scale this term by a constant $\lambda$

Doing this leads to an improvement in the inception score (IS) indicating an increase in sample quality. Classifier guidance only works well for higher resolutions ($>32^2$) otherwise it leads to mode collapse. In order to ensure that the models are not inadvertently optimizing for FID and IS when using classifier guidance, they propose random FID (rFID). They assess the spatial structure of the images using sFID. They report sample fidelity and diversity are evaluated via precision and recall metrics.

StyleGAN-XL substantially outperforms all other ImageNet generation models across all resolutions in FID, sFID, rFID, and IS. StyleGAN-XL also attains high diversity across all resolutions, because of the redefined progressive growth strategy.

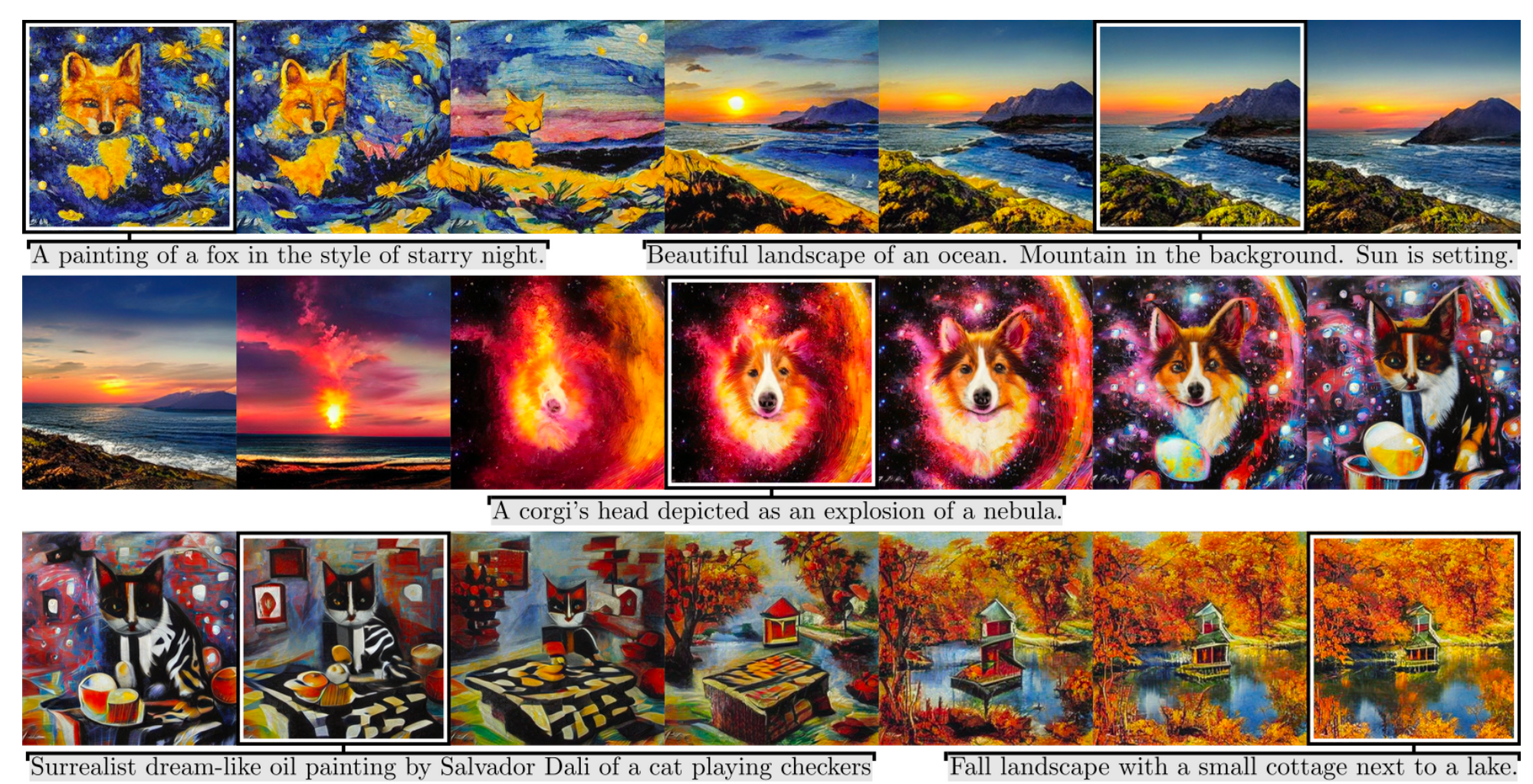

StyleGAN-T

Just when we thought GANs were about to be extinct with the dawn of diffusion models, StyleGAN-T said “hold my beer soda”. Current Text-to-Image (TI) synthesis is slow because generating a single sample requires several evaluation steps using diffusion models. GANs are much faster because they contain one evaluation step. However, previous GAN methods do not outperform diffusion models (SOTA) in terms of stable training on diverse datasets, strong text alignment, and controllable variation vs text alignment tradeoff. They propose StyleGAN-T performs better than previous SOTA-distilled diffusion models and previous GANs.

A couple of things make test-to-image synthesis possible:

- Text prompts are encoded using pretrained large language models that make it possible to condition synthesis based on general language understanding

- Largescale datasets containing image and caption pairs

Recent success in TI has been driven by diffusion models (DM) and autoregressive (ARM) models which have the large capacity to absorb the training data and the ability to deal with highly multi-modal data. GANs work best with smaller and less diverse datasets which make them less ideal than higher-capacity diffusion models

GANs have the advantage of faster inference speed & control of the synthesized image due to manipulation of the latent space. The benefits of StyleGAN-T include “fast inference speed and smooth latent space interpolation in the context of text-to-image synthesis”

The authors choose the StyleGAN-XL model as the baseline architecture because of its “strong performance in class-conditional ImageNet synthesis”. The image quality metrics they use are FID and CLIP Score using a ViT-g-14 model trained on LAION-2B. To convert the class conditioning into text conditioning, they embedded the text prompts using a pre-trained CLIP ViT-L/14 text encoder and use the embeddings in place of class embeddings.

For the generator, the authors drop the constraint for translation equivariance because successful DM & ARM do not require equivariance. They “drop the equivariance and switch to StyleGAN2 backbone for the synthesis layers, including output skip connections and spatial noise inputs that facilitate stochastic variation of low-level details” (Sauer, Axel, et al.)

The basic configuration of StyleGAN implies that a “significant increase in the generator’s depth leads to an early mode collapse in training”. To manage this they “make half the convolution layers residual and wrap them by GroupNorm” for normalization and LayerScale for scaling their contribution. This allows the model to gradually incorporate the contributions of the convolutional layer and stabilize the early training iterations.

In a style-based architecture, all of this variation has to be implemented by the per-layer styles. This leaves little room for the text embedding to influence the synthesis. Using the baseline architecture they noticed a tendency of the input latent $z$ to dominate over the text embedding $c_{text}$, leading to poor text alignment.

To fix this they let the $c_{text}$ bypass the mapping network assuming that the CLIP text encoder defines an appropriate intermediate latent space for the text conditioning. They concatenate $c_{text}$ to $w$ and use a set of affine transforms to produce per-layer styles $\tilde{s}$. Instead of using the resulting $\tilde{s}$ to modulate the convolutions as-is, they further split $\tilde{s}$ into 3 vectors of equal dimensions $\tilde{s}{1,2,3}$ and compute the final style vector as, $s = \tilde{s}! \circledcirc \tilde{s}_2 + \tilde{s}_3$. This operation ensures element-wise multiplication that turns the affine transformation into a $2^{nd}$ order polynomial network that has increased expressive power.

The discriminator borrows the following ideas from StyleGAN-XL like relying on a frozen, pre-trained feature network and using multiple discriminator heads. The model uses ViT-S (ViT: Visual Transformer) for the feature network because it is lightweight, quick inferencing, and encodes semantic info at high resolution. They use the same isotropic architecture for all the discriminator heads which they space equally between the transformer layers. These discriminator heads are evaluated independently using hinge loss. They use data augmentation (random cropping) when working with resolutions larger than 224x224. They augment the data before passing it into the feature network and this has shown significant performance increases.

Guidance: This is a concept in TI that trades variation for perceived image quality in a principled way preferring images that are strongly aligned with the text conditioning

To guide the generator StyleGAN-XL uses an ImageNet classifier to provide additional gradients during training. This guides the generator toward images that are easy to classify.

To scale this approach, the authors used a CLIP image encoder as opposed to a classifier. At each generator update, StyleGAN-T passes the generated image through the CLIP image encoder to get $c_{image}$ and minimize the squared spherical distance to the normalized text embedding $c_{text}$

$L_{CLIP} = arccos^2(c_{image},c_{text})$

This loss function is used during training to guide the generated distribution toward images that are captioned similarly to the input text. The authors note that overly strong CLIP guidance during training impairs FID as it limits distribution diversity. Therefore the overall weight of this loss function needs to strike a balance between image quality, text conditioning, and distribution diversity. It’s interesting to note that the clip guidance is only helpful up to 64x64 resolution, at higher resolutions they apply it to random 64x64 crops.

They train the model in 2 phases. In the primary phase, the generator is trainable and the text encoder is frozen. In the secondary phase, the generator becomes frozen and the text encoder is trainable as far as the generator conditioning is concerned the discriminator and the guidance term in the loss function $c_{text}$ still receive input from the original frozen text encoder. The secondary phase allows a higher CLIP guidance weight without introducing artifacts to the generated images and compromising FID. After the loss function converges the model resumes the primary phase.

To trade high variation for high fidelity, GANs use the truncation trick where a sampled latent vector $w$ is interpolated towards its mean w.r.t the given conditioning input. Truncation pushes $w$ to a higher-density region where the model performs better. Images generated from vector $w$ are of higher quality compared to images generated from far-off latent vectors.

This model StyleGAN-T has much better Zero-shot FID scores on 64x64 resolution than many diffusion and autoregressive models included in the paper. Some of these include Imagen, DALL-E (1&2), and GLIDE. However, it performs worse than most DM & ARM on 256x256 resolution images. My take on this is that there’s a tradeoff between speed and image quality. The variance of FID scores on zero-shot evaluation is not as varied as the differing performance on inference speed. Based on this realization, I think that StyleGAN-T is a top contender.

Summary

Although StyleGAN may not be considered the current state-of-the-art for image generation, its architecture was the basis for a lot of research on explainability in image generation because of its disentangled intermediate latent space. There is less clarity on image generation w.r.t diffusion models, therefore, giving room for researchers to transfer ideas on understanding image representation and generation from the latent space.

Depending on the task at hand, you could perform unconditional image generation on a diverse set of classes or generate images based on text prompts using StyleGAN-XL and StyleGAN-T respectively. GANs have the advantage of fast inferencing and the state-of-the-art StyleGAN models (StyleGAN XL and StyleGAN T) have the potential to produce output comparable in quality and diversity to current diffusion models. One caveat of StyleGAN-T, which is based on CLIP, is that it sometimes struggles in terms of binding attributes to objects as well as producing coherent text in images. Using a larger language model would resolve this issue at the cost of a slower runtime.

Although fast inference speed is a great advantage of GANs, the StyleGAN models I have discussed in this article have not been explored as thoroughly for customization tasks as have diffusion models therefore I cannot confidently speak on how easy or reliable fine-tuning these models would be as compared to models like Custom Diffusion. These are a few factors to consider when determining whether or not to use StyleGAN for image generation.

Citations:

- Karras, Tero, Samuli Laine, and Timo Aila. "A style-based generator architecture for generative adversarial networks." Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2019.

- Karras, Tero, et al. "Analyzing and improving the image quality of stylegan." Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2020.

- Karras, Tero, et al. "Alias-free generative adversarial networks." Advances in Neural Information Processing Systems 34 (2021): 852-863.

- Sauer, Axel, Katja Schwarz, and Andreas Geiger. "Stylegan-xl: Scaling stylegan to large diverse datasets." ACM SIGGRAPH 2022 conference proceedings. 2022.

- Sauer, Axel, et al. "Stylegan-t: Unlocking the power of gans for fast large-scale text-to-image synthesis." arXiv preprint arXiv:2301.09515 (2023).