GPT-NeoX is the latest Natural Language Processing (NLP) model from EleutherAI, released in February 2022. It is the largest open-source NLP model made available to-date, containing 20 billion parameters.

Here, we show that Gradient's multi-GPU capability allows this model to be used for text generation and model evaluation, without the need for user setup first.

What is GPT-NeoX?

GPT-NeoX, or, more specifically, GPT-NeoX-20B, is part of a series of increasingly large NLP models that have been released in the last few years. This is following the discovery that the performance of such models keeps improving the larger the models are made. So research groups have been incentivized to keep pushing the boundaries.

Unfortunately, a lot of the capability to produce the largest models resides within the research groups of large tech companies. These models have therefore remained closed-source and not available to the general community, except via an API or app to use the already-trained models.

This has motivated EleutherAI and others to release their own series of open-source versions of large NLP models, so that the wider community can do research with them. GPT-NeoX-20B is the latest of these, trained on 96 A100 GPUs.

GPT-NeoX-20B on Gradient

total params: 20,556,201,984

The model is available on EleutherAI's GitHub repository. While the repo is well presented and easily accessible, in isolation this requires the user to have a GPU setup capable of running the model. In particular, at the time of writing, it requires at least 2 GPUs to be able to run. This is not just for total RAM, but numerically as well. This introduces a multi-GPU setup requirement in both hardware and software to the user, on top of the usual tasks of setting up a GPU plus CUDA, etc., to run machine learning workloads.

Gradient removes the GPU setup overhead, allowing the user to proceed directly to using the repo and running the model.

To set up the model to run on Gradient: (1)

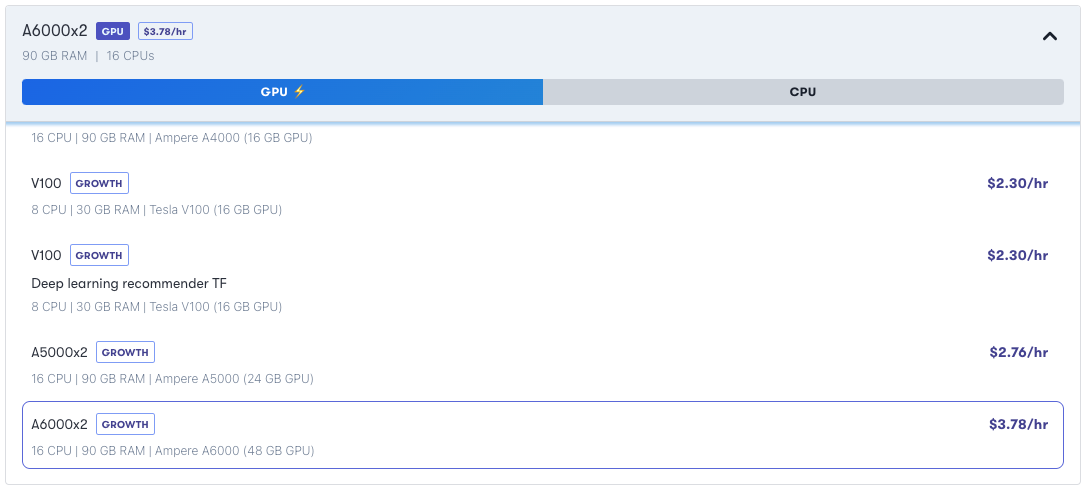

- Sign up for a subscription that allows access to multi-GPU machines. Currently this is the Growth level or higher.

- Create a new Project and Notebook, using an A6000x2 machine (2 Nvidia Ampere A6000 GPUs)

- Under the Notebook's Advanced options, set the container to be

nvcr.io/nvidia/pytorch:21.10-py3. - Also set the workspace URL to be the EleutherAI repo:

https://github.com/EleutherAI/gpt-neox. This mounts that repo into your Notebook so you can immediately access it. - Open a terminal window in the Notebook and install its dependencies:

pip install -r requirements/requirements.txt - Then download the model:(2)

wget --cut-dirs=5 -nH -r --no-parent --reject "index.html*" https://mystic.the-eye.eu/public/AI/models/GPT-NeoX-20B/slim_weights/ -P 20B_checkpoints

- This puts it in the directory

20B_checkpoints/in your current directory. You could also put it in your Notebook's persistent/storagedirectory, or make it into a Gradient Dataset (see our Data documentation). (3) - Edit the

configs/20B.ymlYAML file to change its"pipe-parallel-size": 4setting from 4 to 1, because we are using 2 GPUs not 8. - If your

20B_checkpoints/directory is not in your current directory, edit thevocab-file,saveandloadsettings in the same YAML file to point to its location.

Now that the model is ready to run, we will proceed to have it generate some text, followed by evaluating its performance.

(1) These instructions assume you know the basics of using Gradient. If you are less familiar with Gradient, the Notebooks Tutorial in the documentation is a great place to start.

(2) The model is large: 39GB. In future we may add this to the Gradient Public Datasets to make it easier to access than this generic wget to an external site.

(3) Gradient Datasets are generic versioned sets of files, so they can include files such as NLP model checkpoints.

Bring this project to life

Text generation

A simple way to show the model in action is to have it generate some text. You can either run it through unconditional generation, or supply a file containing text prompts from which the model then provides a continuation.

For unconditional generation, run

./deepy.py generate.py ./configs/20B.yml

It takes about a half hour to run first time through, because the 39GB-sized model has to be loaded. The output by default is put in samples.txt.

If you run nvidia-smi while the script is running, you can see that it is taking up about 40GB total GPU RAM across the 2 GPUs, as stated in the repo. When the script is done, it nicely clears the GPUs back to zero memory usage.

When we ran it, the generated text was (minus the JSON formatting):

Q:

How to get the value of a variable in a function in a different file?

I have a file called "main.py" and a file called "functions.py".

In "main.py" I have:

import functions

def main():

print(functions)For conditional generation, you can run, e.g.,

./deepy.py generate.py ./configs/20B.yml -i prompt.txt -o sample_outputs.txt

where our inputs are in prompt.txt and this time we put the output in sample_outputs.txt.

When we ran it, using the three prompts:

Paperspace Gradient is

The second prompt is

This is the last linePaperspace Gradient is a new type of gradient that is based on the paper-folding technique. It is a gradient that is created by folding a piece of paper into a specific shape. The shape is then cut out and used as a gradient.

The gradient is created by folding a piece of paper into a specific shape. The

The second prompt is to enter the name of the file you want to create.

The third prompt is to enter the name of the file you want to create.

The fourth prompt is to enter the name of the file you want to create.

The fifth prompt is to enter the name of the file you want toand

This is the last line of the file.

A:

You can use the following regex:

^.*?\

(.*?)\

(.*?)\

(.*?)\

(.*?)\

(.*?)\

(.*?)\

(.*?)\

(.*?)\

(which looks OK-ish for the first one, although the second two might need some work. This is probably because the inputs are rather unusual text or referencing a proper noun with "Gradient". As above, the output length is limited to the user-supplied or default number of characters, which is why it cuts off.

Since the prompt can be arbitrary text, and the model has capabilities for things like code generation as well as text, the scope of usage for it is pretty much unlimited.

Model evaluation

Besides the qualitative appearance of how good the generated text is, what the model generates can also be evaluated quantitatively. There are many ways to do this.

Using a simple example from the repo, we can run

./deepy.py evaluate.py ./configs/20B.yml --eval_tasks lambada piqa

This tells it to use the same pretrained model as above, i.e., GPT-NeoX-20B as described in 20B.yml, to run the evaluation tasks on the datasets lambada and piqa . The lambada data gets downloaded by the evaluate.py script.

Note: In our setup there appeared to be a bug where the arguments in the lm_eval lambada.py appeared to be reversed versus the best_download Python module, resulting in the output file being named as its checksum value and then not being found. It's not clear if this is an error in the repo or mismatching library versions somewhere, but we worked around it by just getting the data directly: wget http://eaidata.bmk.sh/data/lambada_test.jsonl -O data/lambada/lambada_test.jsonl and then rerunning evaluate. (And yes it's JSONL, not JSON.)

Unfortunately, the repo and white paper don't explicitly mention what lambada and piqa are, but presumably they correspond to the LAMBADA and PIQA text datasets previously mentioned on EleutherAI's blog, and elsewhere. They are text datasets that contain training and testing portions, and are thus suitable for evaluating an NLP model.

When we ran the evaluation, it gave the results (minus some formatting again):

'lambada':

'acc': 0.7209392586842616

'acc_stderr': 0.006249003708978234

'ppl': 3.6717612629980607

'ppl_stderr': 0.07590817388828183

'piqa':

'acc': 0.7742110990206746

'acc_norm': 0.780739934711643

'acc_norm_stderr': 0.009653357463605301

'acc_stderr': 0.009754980670917316which means it got accuracies of 72.09 +/- 0.62% and 77.4 +/- 0.98%, which are consistent with the values in the original white paper. So our results look reasonable.

Future work

The obvious missing piece so far is model fine-tuning training.

While text generation and model evaluation are crucial capabilities to gain value from NLP, the normal way to obtain maximum value for a given business problem or other project, is to take the supplied pretrained model and then perform fine-tuning training on it to tailor the model to your specific task or domain.

This entails bringing one's own additional pertinent training data, and training the model for a few more epochs so that it performs well in the desired domain.

For example, in the EleutherAI repo, they supply a range of fine-tuning datasets, the default being the Enron emails data from the well-known controversy a few years back. Fine-tuning the model on this will improve its performance at generating new Enron-style emails compared to the generic trained model. Maybe you don't need better Enron emails for your project, but the idea is clear by analogy if you supply the data for what you do need.

Data for fine-tuning training is typically much smaller than the original text used for training from scratch. Here, the original supplied GPT-NeoX-20B was trained on The Pile text dataset, which is 800GB, but fine-tuning datasets can be a few 10s of MB, so long as the examples are of high quality.

The repo, however, states that, compared to text generation and model evaluation that require about 40G GPU RAM as we saw above, for total memory it needs "significantly more for training". Following this blog post by Forefront Technologies, we expect it to work on a setup with 8 A100 GPUs, but here we defer this to a future blog entry.

The repo also states that they use Weights & Biases to track their model training. Following this recent post on our blog, we plan to show this in action as well.

Conclusions

We have shown that the largest open-source natural language processing (NLP) model released to-date, GPT-NeoX-20B:

- Runs on Gradient without users being required to set up any GPU infrastructure themselves

- Has its requirement met that at least 2 GPUs are present (multi-GPU), and 40GB+ total GPU RAM

- Successfully generates sensible-looking text

- Performs to the expected accuracy (model evaluation)

- Can be run by any Gradient user on the Growth subscription or above

Since this is part of Gradient's generic Notebooks+Workflows+Deployments data science + MLOps capability, a project running the model can then be extended by the user in any way that they would like to.

In future posts, we plan to show fine-tuning training on a larger number of GPUs than the 2 used here, and monitoring of the training via Weights & Biases.

Next Steps

- Sign up for Gradient to try out GPT-NeoX-20B

- Read more about it at EleutherAI's GitHub repository

- Check out their original white paper