“Any sufficiently advanced technology is indistinguishable from magic” ~Arthur C. Clarke

I remember being amused by a scene from the popular TV series “Silicon Valley”, in which the character Jared gets stuck in a self-driving car. His destination is accidentally overwritten, and the car tries to take him to Arallon instead. We are left in fits of laughter when he yells, "Mr. Car! Mr. Car!"

Self-driving cars have never failed to amaze us. Realistically, though, only a small fraction of people around the world have had the opportunity to travel in one, and most of the population is unlikely to know what a self-driving car is, let alone how one works. This article aims to give an in-depth introduction to such cars.

The Motivation Behind Self-Driving Cars

We are a long way from having a true self-driving car. By a true self-driving car, we mean one that can drive in essentially any situation a human driver can handle. This is incredibly hard to achieve.

Major automobile companies have been trying to achieve true autonomous driving. The main motivations behind the idea are:

- Safer roads

- Higher productivity

- More economical travel

- More efficient movement

- More environmentally friendly transport

The companies working on this idea include, but are not limited to, Tesla, Pony.ai, Waymo, Apple, Kia-Hyundai, Ford, Audi, and Huawei.

Basic Terminologies used in self-driving cars

Before we get into how self-driving cars work, let’s get familiar with some of the common terms used to describe them.

1) Levels of Autonomy

Level 0 (No Driving Automation): The vehicle is manually controlled by a human driver who monitors the surrounding environment and performs the driving tasks, namely acceleration/deceleration and steering. Integrated support systems such as blind-spot warning, parking alerts, and lane-departure warning fall under this category because they only offer alerts and do not control the vehicle in any way.

Level 1 (Driver Assistance): An automated system assists with either steering or acceleration, but not both at once, while the driver monitors the road along with vehicle parameters. Adaptive cruise control and lane-keeping assistance fall under this category.

Level 2 (Partial Automation): The advanced driver assistance system (ADAS) can control both steering and acceleration. The driver still carries out the monitoring task and is on standby to take over the vehicle at any moment. Tesla Autopilot falls under this category.

Level 3 (Conditional Automation): The ADAS is programmed with environmental detection features that use input data from the sensors to control the vehicle. Such a car is self-driving rather than autonomous: it can drive itself under certain conditions but still needs human intervention when necessary. Audi's Traffic Jam Pilot falls under this category.

Level 4 (High Automation): A vehicle at this level has additional capabilities that allow it to make decisions on its own if the ADAS fails. A human can still take over manually, but the vehicle does not depend on one within its operating area. Currently, these vehicles are restricted to a particular area through geofencing. They are intended for use as driverless taxis and public transport, where the vehicle travels a fixed route.

Level 5 (Full Automation): The ADAS can navigate through and handle different kinds of driving conditions in different driving modes with zero human interaction. These vehicles can go anywhere, even without a human passenger. They are expected not to have any steering wheels or pedals.

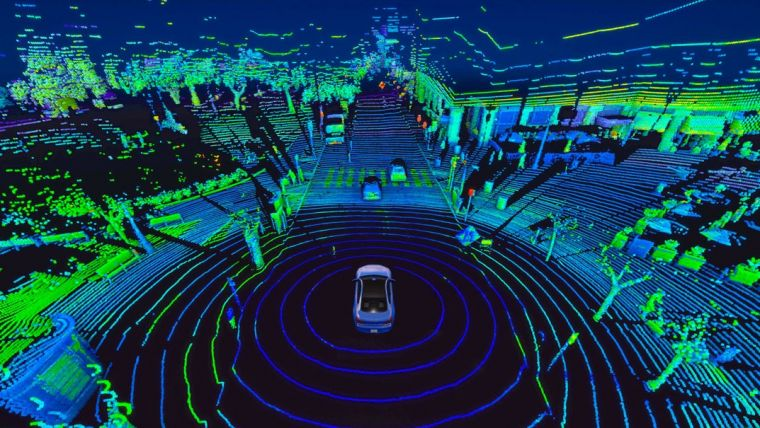

2) Lidar

According to Wikipedia:

LiDAR is a method for determining ranges (variable distance) by targeting an object with a laser and measuring the time for the reflected light to return to the receiver.

In autonomous cars, LiDAR acts as the eyes of the car. It is essentially a rotating laser scanner that builds a 360-degree view of the surroundings and can detect obstacles in the car's path.
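The time-of-flight idea in the definition above can be sketched in a few lines. This is a minimal illustration, not how any particular sensor is implemented: the function name and the 200-nanosecond example value are made up for this article, and real units also correct for noise, beam angle, and timing jitter.

```python
# Speed of light in a vacuum, in metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_time_of_flight(round_trip_seconds: float) -> float:
    """Return the distance to the target in metres.

    The laser pulse travels to the object and back, so the one-way
    distance is half of the path covered in the measured time.
    (Hypothetical helper, named for this example only.)
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


# A pulse that returns after 200 nanoseconds (illustrative value)
# corresponds to an object roughly 30 metres away.
distance_m = range_from_time_of_flight(200e-9)
print(f"{distance_m:.2f} m")
```

Because light covers about 30 cm per nanosecond, the receiver has to time the returning pulse very precisely to resolve distances at the centimetre level.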

3) Adaptive

Adaptive behaviour in self-driving cars means that the car can adjust its parameters according to its surroundings. For example, if there is a lot of traffic around the car, it will automatically slow down.

4) Autopilot

Autopilot is a system that automatically controls the operation of a vehicle without constant manual control. It is used not only in autonomous cars but also in aircraft and submarines.

Working of Autonomous Cars

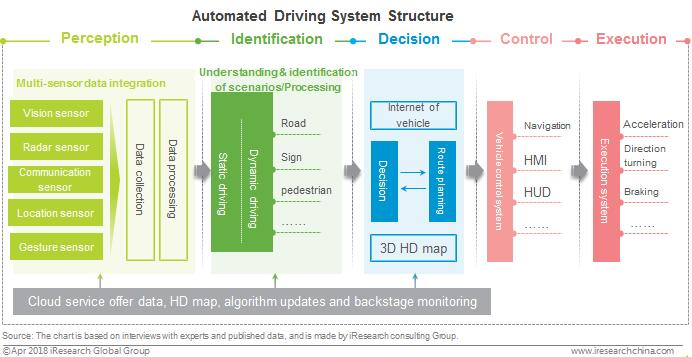

Autonomous cars are made up of three obligatory parts: the vehicle, system hardware, and driving software. The hardware and software must integrate seamlessly for safe driving. The workflow is a consecutive series of steps in which the output of each step is taken as the input for the next. It can be segmented into five distinct stages:

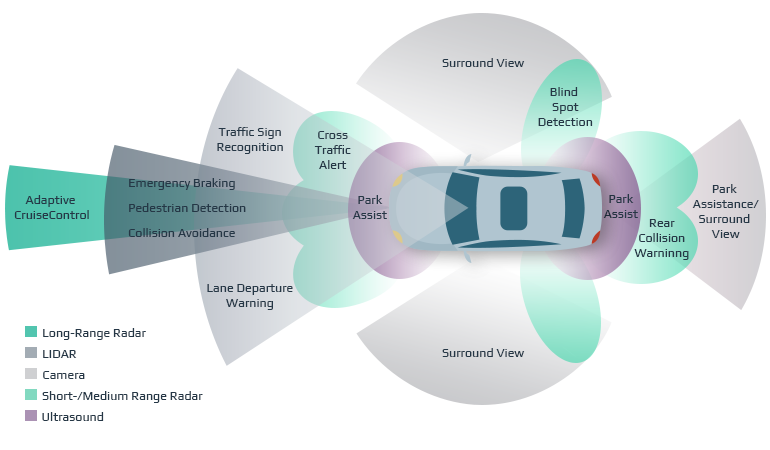

1. Perception: The first step is to collect data from the surroundings, which is done through different kinds of sensors that let the car ‘see’ and ‘hear’. Three major sensors, cameras, radar, and LiDAR, work together like the human eyes and brain. The vehicle then processes the raw information, deriving meaning from it. Computer vision is implemented through convolutional neural networks to identify objects in the camera feed.

2. Identification: Software then processes those inputs, plots a path, and sends instructions to the vehicle’s “actuators,” which control acceleration, braking, and steering. Hard-coded rules, obstacle avoidance algorithms, predictive modeling, and “smart” object discrimination (for example, knowing the difference between a bicycle and a motorcycle) help the software follow traffic rules and navigate obstacles. These systems collect data about the vehicle, driver, and surroundings through various receivers, such as cameras, radar, LiDAR, and navigation maps, then analyze the situation and make appropriate driving decisions. This means that the vehicle needs to be connected to other vehicles on the road, as well as to the overall infrastructure, for different types of information about the road and its conditions. The degree to which the vehicle and its systems are connected to one another, as well as to other vehicles and multiple information providers, becomes very important. This information needs to be analyzed, and the results feed the decision-making of the autonomous vehicle.

Hardware components

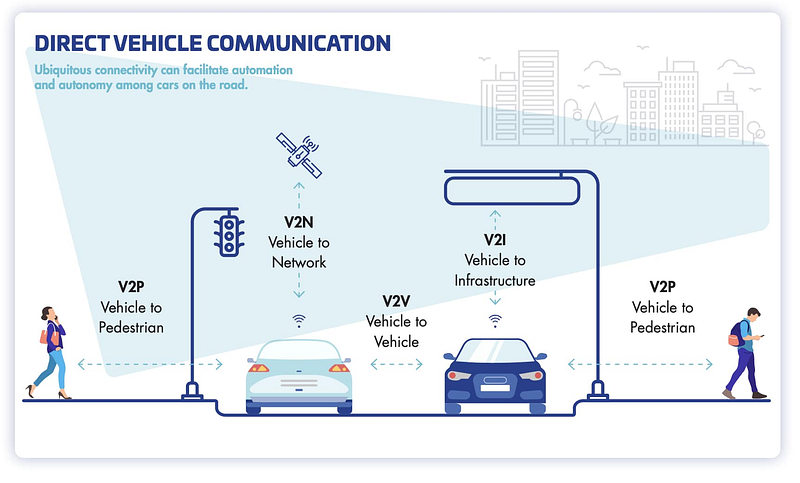

The hardware components fall into three major roles: seeing through sensors, communicating through V2X technology, and moving through actuators.

1. Sensor fusion: The sensors can be considered analogous to the human eyes, allowing the vehicle to absorb information about its environment. The different sensors collect different kinds of data but work together to form a coherent observation system with high-quality, overlapping data patterns. The input from all the sensors is merged at 1 GB/s for a 360-degree picture. The sensors used include cameras, LiDAR, and radar.

2. V2X tech: These components are analogous to the human mouth and ears. V2X stands for Vehicle-to-Everything or vehicle to X, involving information flow from a vehicle to any object related to its operation and vice versa. They are designed to allow an autonomous vehicle to ‘talk’ to another or to other connected systems such as a traffic light. Based on the destination, they can be classified as:

- V2I (Vehicle-to-Infrastructure): data exchange between a car and equipment installed alongside roads to relay traffic conditions and emergency information to drivers.

- V2N (Vehicle-to-Network): access of a network for cloud-based services

- V2V (Vehicle-to-Vehicle): data exchange between vehicles.

- V2P (Vehicle-to-Pedestrian): data exchange between the car and pedestrians.

3. Actuators: This section is analogous to the human muscles responding to nerve impulses sent by the brain. The actuators are directed by processors to perform physical activity such as braking and steering.

Machine Learning Algorithms used in Autonomous Cars

Software components

For all intents and purposes, the software can be considered as the brain of the self-driving car. To put it in simple terms, algorithms trained in real-life situations take the input data from the sensors and derive meaning from it to make the necessary decisions for driving. The most appropriate path is plotted, and the relevant instructions are conveyed to the actuators.

The algorithms incorporated in the ADAS have to guide the vehicle through 4 main stages of autonomous driving:

- Perception: analyzing obstacles and other relevant parameters in the vehicle's surroundings through object detection and classification

- Localization: determining the position of the vehicle relative to the surrounding area

- Planning: the best path is planned from the current location to destination considering the data from the perception and localization stages

- Control: following the trajectory with the appropriate steering angle and an acceleration value.
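To make the four stages concrete, here is a deliberately tiny sketch of one pass through them for a car on a straight road with a single obstacle ahead. Everything in it is an assumption made for illustration: the `WorldState` fields, the perfect sensor, the gains, and the safe gap are made-up values, and a real stack replaces each function with the far more involved algorithms described in this article.

```python
from dataclasses import dataclass


@dataclass
class WorldState:
    position_m: float   # where the car is along a straight road
    speed_mps: float    # current speed
    obstacle_m: float   # position of the nearest obstacle ahead


def perceive(state: WorldState) -> float:
    """Perception: report the gap to the obstacle (a perfect sensor is assumed)."""
    return state.obstacle_m - state.position_m


def localize(state: WorldState) -> float:
    """Localization: report the car's own position (assumed known exactly)."""
    return state.position_m


def plan(gap_m: float, cruise_mps: float = 15.0, safe_gap_m: float = 10.0,
         slowdown_gain: float = 0.3) -> float:
    """Planning: pick a target speed that shrinks to zero as the gap closes."""
    if gap_m <= safe_gap_m:
        return 0.0
    # Scale speed with the spare distance, capped at the cruise speed.
    return min(cruise_mps, slowdown_gain * (gap_m - safe_gap_m))


def control(speed_mps: float, target_mps: float, gain: float = 2.0) -> float:
    """Control: proportional controller returning an acceleration command."""
    return gain * (target_mps - speed_mps)


def step(state: WorldState, dt: float = 0.1) -> WorldState:
    """Run one pass through the four stages and advance the toy simulation."""
    gap = perceive(state)
    _position = localize(state)  # unused on a straight road, kept to show the stage
    target = plan(gap)
    accel = control(state.speed_mps, target)
    new_speed = max(0.0, state.speed_mps + accel * dt)
    new_position = state.position_m + new_speed * dt
    return WorldState(new_position, new_speed, state.obstacle_m)


state = WorldState(position_m=0.0, speed_mps=0.0, obstacle_m=100.0)
for _ in range(1000):  # 100 simulated seconds
    state = step(state)
print(f"position {state.position_m:.1f} m, speed {state.speed_mps:.2f} m/s")
```

The car accelerates to its cruise speed, then slows and comes to rest just short of the safe gap in front of the obstacle. The point of the sketch is only the data flow: each stage consumes what the previous one produced.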

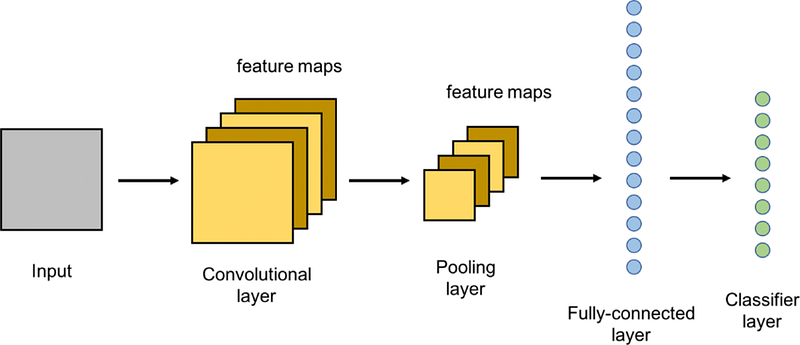

Convolutional Neural Networks (CNN)

CNNs are the first choice when it comes to feature extraction due to their high accuracy. Their specialty lies in the convolutional layer, which reduces data by performing convolutions with a filter matrix, or kernel. The kernel, usually 3x3 or 5x5 pixels, slides over the input image, and the dot product is taken between the kernel and the pixel values of the image region it covers. The resulting value is written into a feature map, which represents features such as edges or corners. Deeper layers capture more complicated and comprehensive features, such as the shape of an object. The output of each convolutional layer is passed through an activation function to introduce non-linear relationships between the data. ReLU (Rectified Linear Unit) is the most common choice, as networks that use it tend to converge quickly. A max-pooling layer then reduces the data further by keeping only the strongest response in each small region of the feature map. On the whole, the model is trained to reach the highest accuracy without overfitting and is then applied to real-world situations for object detection and classification.
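The three building blocks described above (sliding a kernel, applying ReLU, and max pooling) can be written out by hand with NumPy. This is a sketch for intuition only: the 6x6 image and the vertical-edge kernel are made up, and in a real CNN the kernel values are learned during training rather than written by hand.

```python
import numpy as np


def convolve2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide the kernel over the image (stride 1, no padding).

    Each output value is the sum of element-wise products between the
    kernel and the image patch it currently covers. Strictly speaking
    this is cross-correlation, which is what deep learning libraries
    usually compute under the name "convolution".
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    feature_map = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]
            feature_map[i, j] = np.sum(patch * kernel)
    return feature_map


def relu(x: np.ndarray) -> np.ndarray:
    """Replace negative activations with zero."""
    return np.maximum(x, 0.0)


def max_pool(x: np.ndarray, size: int = 2) -> np.ndarray:
    """Keep the largest value in each non-overlapping size x size window."""
    h, w = x.shape[0] // size, x.shape[1] // size
    trimmed = x[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))


# Toy 6x6 image: dark left half, bright right half (a vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written vertical-edge kernel; a trained CNN would learn its own.
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

conv_output = convolve2d(image, kernel)        # 4x4 feature map
feature_map = max_pool(relu(conv_output))      # 2x2 after pooling
print(conv_output)
print(feature_map)
```

The convolution responds only where the kernel straddles the dark-to-bright boundary, which is exactly the "edge" feature the paragraph mentions. Pooling then shrinks the 4x4 map to 2x2 while keeping the strongest responses.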

Specific CNNs used in self-driving cars include:

- HydraNet by Tesla

- ChauffeurNet by Google Waymo

- Nvidia Self driving car

Scale-invariant feature transform (SIFT)

This algorithm tackles the problem of partially visible objects through image matching and identification, by extracting distinctive features of the object in question. For example, consider the eight corners of a stop sign. These features do not change with rotation, scaling, or noise, so they are considered scale-invariant features. The relationship between the object and its features is noted and stored by the system. Given a new image, the features extracted from it are compared against the SIFT features in a database. Through this comparison, the vehicle can recognize the object.

Data reduction algorithms for pattern recognition

The images received through sensor fusion contain unnecessary and overlapping data, which has to be filtered out. To determine the occurrence of a particular object class, repeating patterns are used to aid in recognition. These algorithms help reduce noise and unessential data by fitting line segments to corners and circular arcs to elements that resemble an arc shape. These segments and arcs ultimately combine to form the identifiable features specific to a particular object class.

Specific algorithms used in self-driving cars include:

- Principal Component Analysis (PCA): reduces the dimensionality of the data.

- Support Vector Machines (SVM): excellent for non-probabilistic binary linear classification.

- Histograms of Oriented Gradients (HOG): excellent for human detection

- You Only Look Once (YOLO): an alternative to HOG that predicts each image section with respect to the context of the entire image.
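Of the algorithms listed above, PCA is the easiest to show in a few lines. The sketch below uses NumPy's singular value decomposition; the five 2D points are made up, and the `pca` helper is a hypothetical name for this example rather than any library's API. Real pipelines apply the same idea to much higher-dimensional feature vectors.

```python
import numpy as np


def pca(data: np.ndarray, n_components: int):
    """Project data (rows are samples) onto its top principal components."""
    centered = data - data.mean(axis=0)
    # SVD of the centered data: the rows of vt are the principal
    # directions, ordered by decreasing singular value.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    explained_variance = singular_values[:n_components] ** 2 / (len(data) - 1)
    return centered @ components.T, components, explained_variance


# Made-up 2D points that lie close to the line y = 2x.
points = np.array([[1.0, 2.1], [2.0, 3.9], [3.0, 6.2], [4.0, 7.8], [5.0, 10.1]])
reduced, components, variance = pca(points, n_components=1)

print(reduced.shape)   # each 2D point is now described by a single number
print(components)      # direction of greatest variance
```

Since the points nearly fall on a line, one component captures almost all of their variation, which is the sense in which PCA "reduces the dimensionality of the data".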

Clustering algorithms

When images are low-resolution or blurry, or the data is intermittent or sparse, classification algorithms can have a hard time detecting objects and may miss them altogether. Clustering finds the inherent structures present in the data and groups data points based on the largest number of common features.

Specific clustering algorithms used in self-driving cars include:

- K-means: k centroids are used to define different clusters. Each data point is assigned to the closest centroid. Subsequently, each centroid is moved to the mean of the points in its cluster, and the two steps repeat.

- Multi-class neural networks: use the inherent structures in the data to organize it into groupings of maximum shared traits.
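The two alternating steps of K-means described above (assign each point to its closest centroid, then move each centroid to the mean of its points) fit in a short function. This is a minimal sketch in plain Python: the six "detections" and the starting centroids are made up, and production code would typically pick the initial centroids more carefully and stop once the assignments no longer change.

```python
import math


def kmeans(points, centroids, iterations=10):
    """Plain k-means on 2D points.

    points    : list of (x, y) tuples
    centroids : initial centroid guesses, one per cluster
    Returns the final centroids and the cluster index of every point.
    """
    centroids = [tuple(c) for c in centroids]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its closest centroid.
        labels = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its points.
        for k in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:  # leave an empty cluster's centroid where it is
                centroids[k] = (
                    sum(x for x, _ in members) / len(members),
                    sum(y for _, y in members) / len(members),
                )
    return centroids, labels


# Hypothetical detections: two groups of points, e.g. two separate objects.
detections = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5),
              (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, labels = kmeans(detections, centroids=[(0.0, 0.0), (10.0, 10.0)])
print(labels)
print(centroids)
```

The first three points end up in one cluster and the last three in the other, with each centroid sitting at the middle of its group.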

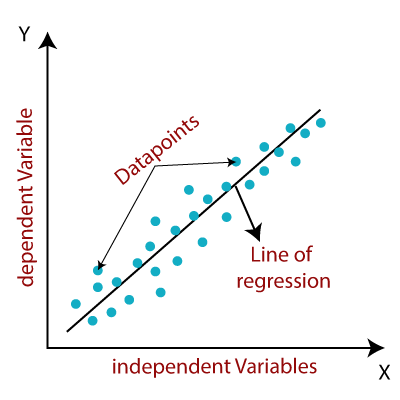

Regression learning algorithms

Not unlike Doctor Strange, these algorithms try to predict future scenarios. The repetitive features in an environment are used to the algorithm’s advantage to build a statistical model of the relationship between a given image and the position of a particular object in it. The relationship between at least two variables is computed and then compared on different scales using regression analysis. Regression methods differ in the shape of the regression line, the type of dependent variable, and the number of independent variables. Initially, the model is trained offline. Once the model is live, it samples images for fast detection and outputs the position of an object along with its certainty about that position. The model can then be applied to other entities without additional modeling.

Specific algorithms used in self-driving cars include:

- Random forest regression

- Bayesian regression

- Neural network regression
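As a small illustration of a regression model that outputs both a position and its certainty, here is a sketch of Bayesian linear regression with a Gaussian prior, using the standard closed-form posterior. The data is made up (a pixel coordinate paired with a lateral offset in metres), and the prior precision `alpha` and noise precision `beta` are assumed values chosen for the example, not tuned ones.

```python
import numpy as np


def fit_bayesian_linear(x, y, alpha=1e-3, beta=25.0):
    """Posterior over the weights of y = w0 + w1 * x.

    alpha : precision of the zero-mean Gaussian prior on the weights (assumed)
    beta  : precision (1 / variance) of the observation noise (assumed)
    """
    design = np.column_stack([np.ones_like(x), x])
    precision = alpha * np.eye(2) + beta * design.T @ design
    covariance = np.linalg.inv(precision)
    mean = beta * covariance @ design.T @ y
    return mean, covariance


def predict(x_new, mean, covariance, beta=25.0):
    """Return the predicted value and its standard deviation at x_new."""
    phi = np.array([1.0, x_new])
    prediction = phi @ mean
    # Noise variance plus the uncertainty that comes from the weights.
    variance = 1.0 / beta + phi @ covariance @ phi
    return prediction, np.sqrt(variance)


# Made-up training data: horizontal pixel coordinate of a detected object
# against its measured lateral offset in metres (roughly y = 0.01 * x - 3).
pixels = np.array([100.0, 200.0, 300.0, 400.0, 500.0])
offsets = np.array([-2.05, -0.95, 0.02, 1.04, 1.98])

mean, covariance = fit_bayesian_linear(pixels, offsets)
near, near_std = predict(300.0, mean, covariance)
far, far_std = predict(900.0, mean, covariance)
print(f"x=300: {near:.2f} +/- {near_std:.2f}")
print(f"x=900: {far:.2f} +/- {far_std:.2f}")
```

The prediction at x=900 lies well outside the training data, so its standard deviation comes out larger than the one at x=300. That growing uncertainty is what lets a downstream decision stage treat far-off estimates with more caution.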

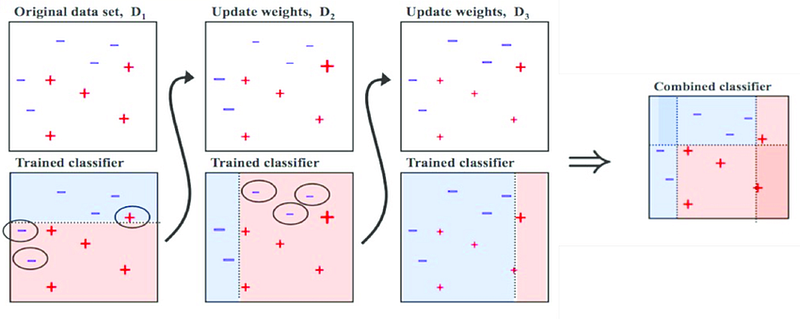

Decision-making algorithms

As is evident from the name, determining the right choice is what these algorithms are all about. Multiple models are trained separately and combined to give an inclusive prediction with minimum error. The decision takes into account the amount of confidence the algorithm has in the identification, classification, and movement forecast of an object.

Adaptive Boosting (AdaBoost) is among the most popular algorithmic frameworks used. It essentially combines a series of weak learners (models with low accuracy) trained on weighted data into an ensemble, so that the individual models complement each other and boost the final performance to obtain an accurate classifier.

Let’s try to understand how it works using the accompanying diagram. In the original unweighted dataset, the positive data points are represented with red crosses and the negative ones with blue minus signs. The first model is able to classify three negative and four positive points correctly, but also incorrectly classifies one positive and two negative points. These misclassified points are assigned a higher weight and given as input to build the second model. The decision boundary in the second model has shifted to classify the previous errors correctly, but, in the process, has incorrectly classified three different points. The weights are updated to give the new misclassified points a higher value, and this iteration continues until a specified condition is satisfied. We can see that all the models have some data points which are misclassified, and as a result, they are weak learners. By taking the weighted average of the models, each model covers the others’ weak points and all the points become correctly classified, showing that the final ensemble model is a strong learner.

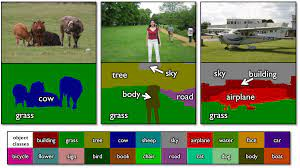
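The reweighting loop described above can be sketched with the simplest possible weak learner: a decision stump that splits 1D data at a single threshold. The ten-point dataset below is a made-up toy example, not the data from the diagram, and the helper names are hypothetical. No single threshold separates these labels, yet three stumps voting together classify every point.

```python
import math


def best_stump(xs, ys, weights):
    """Find the threshold/polarity stump with the lowest weighted error."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            preds = [polarity if x <= threshold else -polarity for x in xs]
            error = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best


def adaboost(xs, ys, rounds=3):
    """Train `rounds` decision stumps; labels must be +1 or -1."""
    n = len(xs)
    weights = [1.0 / n] * n  # start with an unweighted dataset
    ensemble = []
    for _ in range(rounds):
        error, threshold, polarity = best_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)    # avoid division by zero
        alpha = 0.5 * math.log((1 - error) / error)  # this stump's say in the vote
        ensemble.append((alpha, threshold, polarity))
        # Raise the weight of misclassified points, lower the rest.
        for i in range(n):
            pred = polarity if xs[i] <= threshold else -polarity
            weights[i] *= math.exp(-alpha * ys[i] * pred)
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def predict(ensemble, x):
    """Weighted vote of all stumps."""
    score = sum(a * (p if x <= t else -p) for a, t, p in ensemble)
    return 1 if score >= 0 else -1


# Toy 1D data that no single threshold can separate.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

ensemble = adaboost(xs, ys, rounds=3)
print([predict(ensemble, x) for x in xs])
```

Each stump on its own misclassifies at least three of the ten points. Because every round concentrates weight on the previous round's mistakes, the stumps end up with different thresholds, and their weighted vote reproduces all ten labels.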

TextonBoost works on the same principle. It uses textons: clusters of image data that share the same characteristics and, thus, the same response to a filter. It combines appearance, shape, and context by looking at the image as a whole and gleaning contextual information to understand the relationships within it. For example, a boat pixel is usually surrounded by water pixels.

Challenges faced by autonomous cars

Autonomous vehicles have come a long way since their conception, but the technical and safety challenges must be solved flawlessly because of the high risk involved. There are still hurdles to cross before we can see these vehicles on our roads. Some of the obstacles ahead are:

- Inability to comprehend complex social interactions: Human driving is imperfect, and drivers often rely on social cues from their environment, such as eye contact and hand gestures, to navigate. Interaction with cyclists and pedestrians mainly requires responding to social cues and subtleties, an ability that robots still lack. To respond to every quirk, the automated system needs to understand both the environment and why the people it encounters behave the way they do.

- Not suitable for all weather conditions: Under severe weather conditions, autonomous vehicles have trouble with low visibility and loss of traction. Sensors that are covered by snow or ice, or obscured by heavy rain, become less effective. In addition, thick layers of snow conceal signs and lane markers. One proposed solution is to fit tiny windshield wipers to the sensors.

- Mapping for self-driving is complex: Before any self-driving car can drive, it needs a set of predefined maps, with obstacles categorized, to use as a reference for navigating its route. Over time, areas and road features change, requiring constant updates. Building and maintaining these 3D maps is a time-consuming process that requires a lot of resources. Fully autonomous cars are also limited to the regions that are fully mapped.

- Lack of infrastructure: These vehicles need a proper driving environment, which is not always available, especially in developing countries. Road infrastructure can be different even 10 km apart. The transition to smart infrastructure, including IoT systems, connectivity to traffic lights, and V2V (vehicle-to-vehicle) communication, is slow.

- Cybersecurity issues: Digital security in connected cars is one of the most critical issues. The more connected a vehicle is, the more ways there are to attack the system. Many people are wary of cyberattacks on automotive software or components.

- Accident liability: The matter of responsibility in case of accidents is still being debated. It could lie with either the human passenger or the manufacturer. Mistakes could be fatal.

Future of autonomous vehicles

Currently, the self-driving cars on the market mainly belong to Levels 1 and 2, including Teslas. Level 3 vehicles are slowly becoming available to the public following rigorous testing and research, such as Honda’s limited release of its luxury sedan, the Legend. Autonomous vehicle development has progressed an astonishing amount; however, researchers speculate that fully autonomous vehicles are still decades away. Even after production begins, they are most likely to be introduced first for restricted use in industry, performing automated tasks on a fixed route.

Another question to consider as autonomous vehicles develop is whether they will be marketed for personal use or employed as service vehicles, such as taxis, cargo transport, or public transport. Traditional companies such as GM intend to sell these vehicles to the public in the future, while newer companies such as Waymo, AutoX, and Tesla intend to operate, or already operate, robotaxis. Other companies, namely Volkswagen, plan to lease these vehicles on a subscription-based plan where the customer pays by the hour. Google’s Waymo has been operating Level 4 robotaxis in select cities in America at a rate of 1,000–2,000 rides a week since late 2020. Similarly, AutoX has deployed a fleet of 75 robotaxis in Shanghai that is accessible to members of the public. Many issues in the functioning of these robotaxis still need to be resolved before large-scale adoption, as the robots still struggle when faced with complicated situations.

An accident involving a Tesla car, in which two people died, has highlighted the safety uncertainties surrounding autonomous vehicles. The crashes in deployed Level 2 vehicles were inferred to be the result of human error, but car manufacturers have a responsibility to build autonomous systems that account for human inattention and negligence. The technology to build a Level 5 autonomous car may be easier to secure than the proper safety and legal regulations. Public acceptance is an essential element for the integration of autonomous vehicles. Humans are predisposed to be wary of the changes that unfamiliar technology can bring. It is crucial that public safety regulations are created with winning public trust in mind.