Introduction

Two popular options have recently emerged for building an AI application based on large language models (LLMs): LlamaIndex and LangChain. Deciding which one to use can be challenging, so this article aims to explain the differences between them in simple terms.

LangChain is a versatile framework designed for building various applications, not just those confined to large language models. It offers tools for loading, processing, and indexing data and interacting with LLMs. LangChain's flexibility allows users to customize their applications based on the specific needs of their datasets. This makes it an excellent choice for creating general-purpose applications that require extensive integration with other software and systems.

On the other hand, LlamaIndex is specifically designed for building search and retrieval applications. It provides a straightforward interface for querying LLMs and retrieving relevant documents. While it may not be as general-purpose as LangChain, LlamaIndex is highly efficient, making it ideal for applications that process large amounts of data quickly and effectively.

Overview of LlamaIndex and LangChain

LangChain is an open-source framework designed to simplify the development of applications powered by large language models (LLMs). It provides developers with a comprehensive set of tools and APIs in Python and JavaScript, facilitating the creation of diverse LLM-driven applications such as chatbots, virtual agents, and document analysis tools. LangChain's architecture seamlessly integrates multiple LLMs and external data sources, enabling developers to build complex and interactive applications quickly.

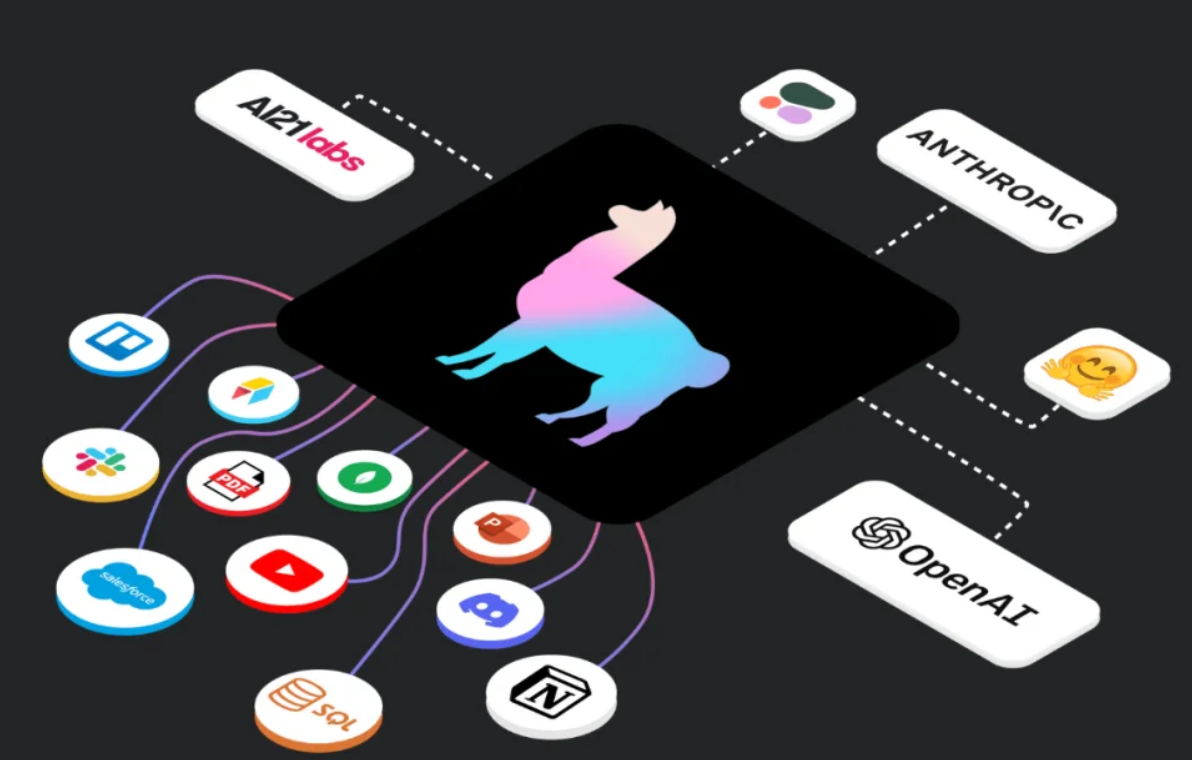

LlamaIndex is a framework designed for efficient data retrieval and management. It excels in creating search and retrieval applications by using algorithms to rank documents based on their semantic similarity. LlamaIndex offers different data connectors through LlamaHub, allowing direct data ingestion from various sources without extensive conversion processes. It is particularly well-suited for knowledge management systems and enterprise solutions that require accurate and rapid information retrieval capabilities.

What is LlamaIndex?

Bring this project to life

LlamaIndex, previously known as the GPT index, is a framework that makes life easy when working with LLMs. It can be understood as a simple tool connecting your custom data—whether in APIs, databases, or PDFs—with powerful language models like GPT-4. It simplifies making your data accessible and usable, enabling you to effortlessly create powerful custom LLM applications and workflows.

With LlamaIndex, users can easily create powerful applications such as document Q&A, data-augmented chatbots, knowledge agents, and more. The key tools provided by LlamaIndex to augment the LLM applications with data are listed below:-

- Data Ingestion:- Helps to connect with any existing data sources such as APIs, PDFs, documents, etc.,

- Data Indexing:- Stores and indexes the data for different use cases.

- Query Interface:- Provides a query interface that accepts the prompt and returns a quicker knowledge-augmented response.

- Data Source:- With LlamaIndex, users can connect to the unstructured, structured, or semi-structured data source

Install LlamaIndex

To install LlamaIndex, we can either clone the repository or use pip.

!git clone https://github.com/jerryjliu/llama_index.gitor

!pip install llama-indexWhat is a RAG?

Before diving into the key comparison between LlamaIndex and Langchain, let us understand RAG.

RAG, or Retrieval-Augmented Generation, enhances the capabilities of large language models (LLMs) by combining two fundamental processes: retrieval and generation. Typically, a language model generates responses based solely on its training data, sometimes leading to outdated or unsupported answers (a.k.a hallucination.) RAG improves this by retrieving relevant information from external sources (like the internet or specific databases) based on the user's query. This retrieved information grounds the model's response in up-to-date and reliable data, ensuring more accurate and informed answers. By integrating retrieval with generation, RAG addresses challenges such as outdated information and lack of sources, making LLM responses more trustworthy and relevant.

RAG helps reduce the need to retrain a large language model when new information arises entirely. Instead, it allows us to update the data source with the latest information. This means that the next time a user asks a question, we can provide them with the most current information. Secondly, RAG ensures that the language model pays attention to reliable sources of information before generating a response. This approach reduces the risk of the model inventing answers or revealing inappropriate data based solely on its training. It also enables the model to acknowledge when it cannot confidently answer a question rather than providing potentially misleading information. However, if the retrieval system doesn't offer high-quality information, it may result in some answerable queries going unanswered.

Performance on GPU Servers

Computational efficiency is critical when running AI workloads on GPU servers. LlamaIndex excels in performance metrics, efficiently utilizing GPU resources to handle large datasets and complex queries. LangChain also demonstrates strong performance, leveraging GPU capabilities to process chained models with minimal latency. While both frameworks perform well, their suitability may vary based on specific workload requirements.

Setting up LlamaIndex and LangChain on GPU servers involves different processes. LlamaIndex requires compatibility checks and may involve a steeper initial setup process, but once configured, it integrates seamlessly with existing AI workflows. LangChain boasts a more user-friendly setup, with extensive support for APIs and libraries, simplifying integration into diverse AI environments. The learning curve for both frameworks is mitigated by comprehensive documentation and active community support.

LlamaIndex vs Langchain

| LlamaIndex | Langchain |

|---|---|

| LlamaIndex (GPT Index) is a simple framework that provides a central interface to connect your LLM's with external data. | LangChain is a tool that helps developers easily build applications that use large language models (LLMs). These are powerful AI tools that can understand and generate human-like text. |

| LlamaIndex offers a selection of data connectors on LlamaHub, simplifying data access by supporting direct ingestion from native sources without conversion. | LangChain uses document loaders as data connectors to fetch and convert information from various sources into a format it can process. |

| LlamaIndex specializes in developing search and retrieval applications with a straightforward interface for indexing and accessing relevant documents, emphasizing efficient data management for LLMs. |

LangChain integrates retrieval algorithms with LLMs to dynamically fetch and process context-relevant information, making it ideal for interactive applications like chatbots. |

| LlamaIndex is optimized for retrieval by using algorithms to rank documents based on their semantic similarity for effective querying | Chains are a key feature of LangChain that helps to link multiple language model API calls in a logical sequence, combining various components to build cohesive applications. |

| LlamaIndex is perfect for internal search systems, creating RAG applications, and extracting precise information for enterprises. | LangChain is a versatile framework and comes with tools, functionalities, and features essential for developing and deploying diverse applications powered by large language models (LLMs). |

| LlamaIndex specializes in optimizing document retrieval through algorithms that rank documents based on semantic similarity, making it well-suited for efficient search systems and knowledge management solutions |

LangChain is designed as an orchestration framework for building applications using large language models (LLMs), integrating various components like retrieval algorithms and data sources to create interactive and dynamic experiences. |

Benefits of LlamaIndex and Langchain

Langchain:

- Ease of Use: Langchain is recommended if you're starting a new project and need to get it running quickly. It offers a more intuitive starting point and has a larger developer community, making it easier to find examples and solutions.

- Retriever Model: It uses a retriever paradigm for querying data, which is straightforward and suitable for basic retrieval tasks.

- Community and Support: Langchain has a well-established community with ample resources like tutorials, examples, and community support, which can benefit beginners.

LlamaIndex:

- Advanced Querying: LlamaIndex is more suitable if your project requires sophisticated querying capabilities such as subqueries or synthesizing responses from external datasets.

- Memory Structure Flexibility: It allows for more complex memory structures, such as composing different indices (like list indices, vector indices, and graph indices), which can help build chatbots or large language models with specific memory needs.

- Composability: While currently more challenging to learn than Langchain, LlamaIndex offers composability on the memory structure side, allowing you to customize and structure data indices flexibly.

- Future Potential: With ongoing improvements and community growth (recent funding and development updates), LlamaIndex is evolving towards easier usability while retaining powerful features for advanced users.

Conclusion

In summary, if you need to develop a general-purpose LLM-based application that requires flexibility, extensibility, and integration with other software, LangChain is the better choice. However, if the focus is on creating an efficient and straightforward search and retrieval application, LlamaIndex is the superior option.

Furthermore, we highly recommend the detailed blog on Langchain, which will help you gain a deeper understanding of the framework and provide a hands-on experience.

Are you looking forward to trying out the frameworks with a code example and building your own application? Stay tuned for part 2 of the blog, where we'll delve deeper into the framework with examples and step-by-step instructions.

We hope you enjoyed the article!