Creating and deploying Deep Learning model serving applications is one of the best and most frequent use cases for Paperspace customers. Managing and running these apps with Deployments makes it simpler and faster to spin them up than anywhere else on the web. We've talked extensively here on the Paperspace Blog about the utility of the Deployments product, and we recommend reading more about it here.

In this article, we are going to cover all the facets of application maintenance with Paperspace Deployments. Readers can expect to finish this article with a greater understanding of the factors that influence application deployment costs, and we will recommend several easy methods to help keep the associated fees as low as possible. With these recommendations, we aim to show how your applications can be served with the least hassle and expense using Paperspace.

Paperspace Deployments Overview

Before we continue, let's do a quick review of the Deployments product. In short, Deployments allow Paperspace users to serve their containerized applications to the web on Paperspace GPUs, either as web applications or as RESTful API endpoints.

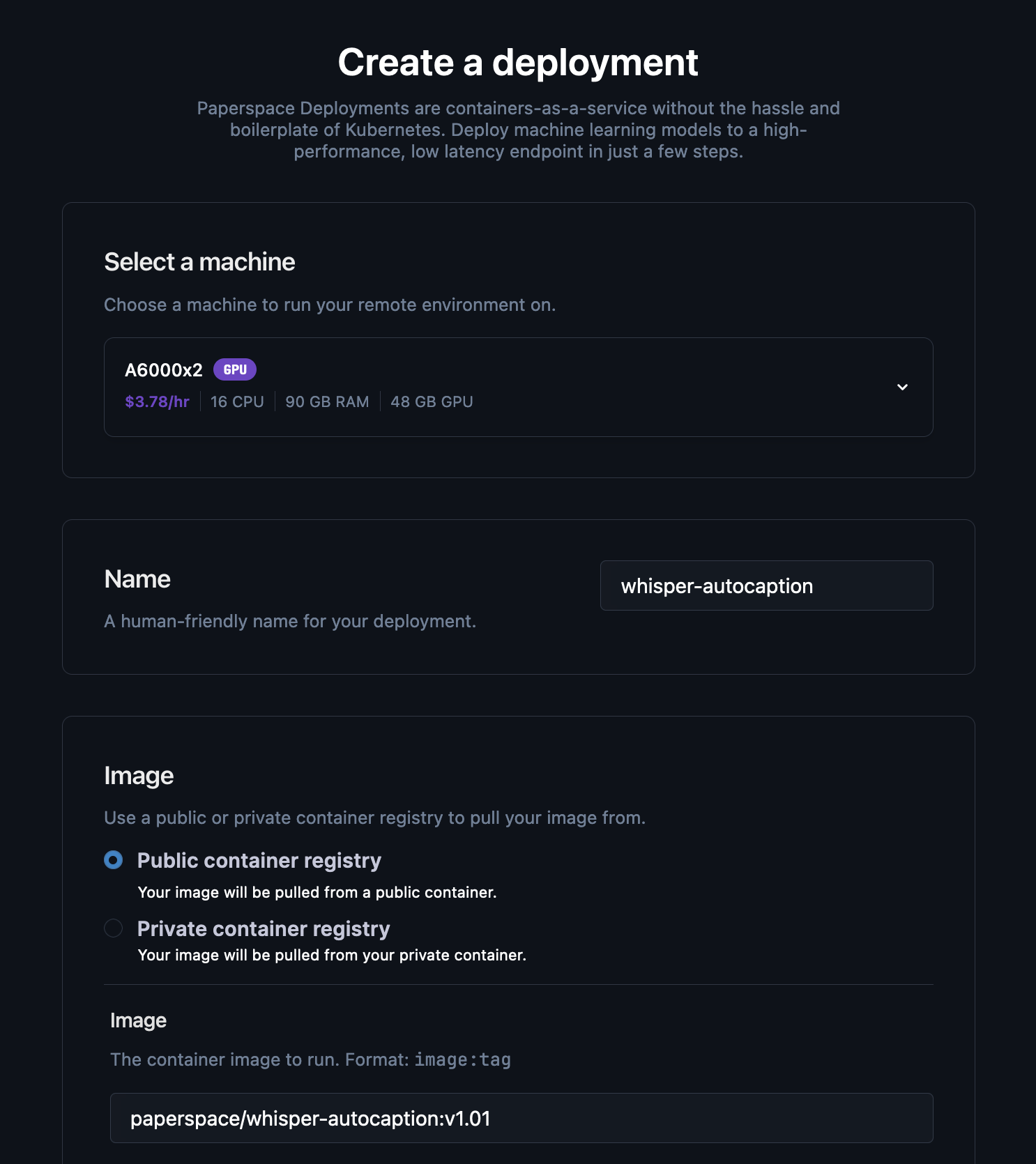

The Deployment itself can be created using our simple form-fill creation page. All that is required to set it up is the GPU machine selection, the image declaration, and a few minor advanced settings and integrations for useful services like HuggingFace. If we have already created and shared our container in a registry (Docker Hub, NVIDIA NGC, GitHub Container Registry, etc.), then we can pull and deploy the image with little to no setup. If we are using a private registry, this may require some additional setup on your part.

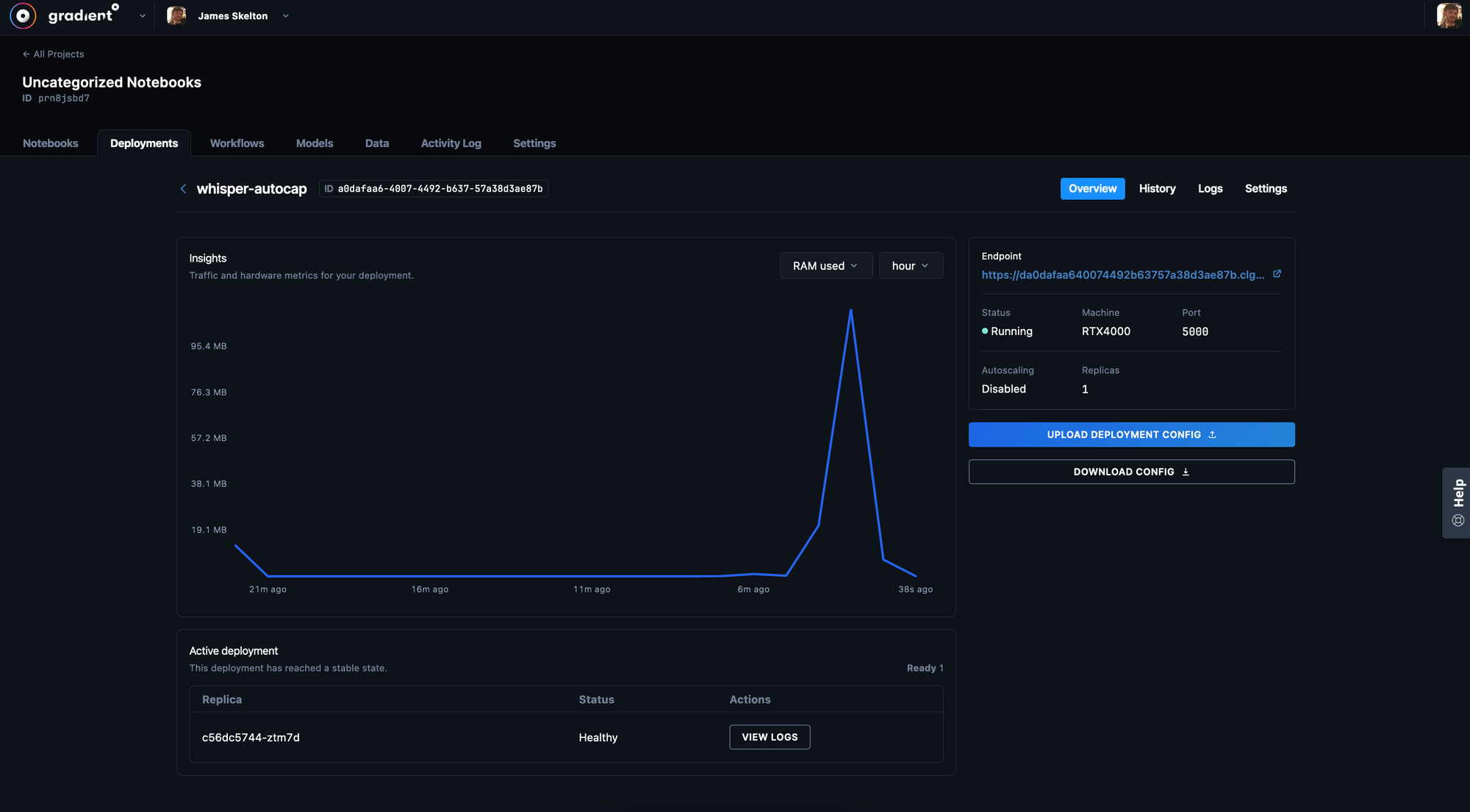

Once our Deployment is launched, we can access its details page. The overview contains useful metrics about the application, like RAM used, and links to important resources, like the API endpoint URL itself. The history tab shows when we started and stopped the application, or made any major changes. The logs tab shows the application's own logs for troubleshooting. Finally, the settings tab lets us make important changes to the Deployment, like setting a shutdown timer. We strongly recommend remembering that shutdown timer, as it is not set automatically.
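Once the endpoint URL is copied from the overview page, a client can call the Deployment like any other REST API. The sketch below uses only Python's standard library. The URL, the /predict route, and the {"prompt": ...} payload are hypothetical placeholders: the real route and request schema are defined by whatever application runs inside your container.

```python
import json
import urllib.request

# Hypothetical placeholder: paste the real endpoint URL from the Deployment's
# overview page. The "/predict" route depends on the app in your container.
ENDPOINT_URL = "https://your-deployment-endpoint.example/predict"


def build_request(url: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request to a model endpoint."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def query(url: str, payload: dict, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON body of the response."""
    with urllib.request.urlopen(build_request(url, payload), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


request = build_request(ENDPOINT_URL, {"prompt": "Hello"})
# result = query(ENDPOINT_URL, {"prompt": "Hello"})  # needs a running Deployment
```

Keeping request construction separate from sending makes it easy to inspect the request, or to swap in a different HTTP client, without touching the live endpoint.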

Now that we have finished reviewing Deployments, let's talk about maintaining our applications with Paperspace.

Application maintenance with Paperspace

The scope of Deep Learning app maintenance in the cloud is incredibly wide, but thankfully it is simple to distill the important considerations down to a few categories that cover everything. These traits and requirements are:

- Compute: this is a very rough measure of how much compute we actually need to run the application. This measure scales directly with the number of users making calls to the application, and is directly related to cost

- Time: this is a measure of how long the model needs to run. Certain deployments need to be online constantly, and others will need a complex scheduling system to optimize costs

- Modifiable: the ability to be updated is critical when running live web applications for users. Deployments must be easy to update whenever needed, with as little hassle as possible

- Cost: while also a function of the other considerations, cost is itself the most critical thing to consider when deploying with Paperspace. In the end, all application maintenance is centered on optimizing cost

When we consider all of these factors for our application Deployment, it is far easier to keep our application running effectively while reducing costs and keeping our Deep Learning models up to date. Let's dig a bit deeper into each of these, discuss how each one influences app maintenance cost, and formulate ideas for how a business can determine the costs required to maintain an application on Paperspace.

Compute

Compute is a catch-all term for the computational resources required from the GPU (or CPU) machine we are using to serve the application. It is arguably the most important factor: without sufficient compute, our application will run slowly or not at all. Therefore, when considering application maintenance, it is imperative to also consider which machine we are using.

Deep Learning models nearly always use GPUs to handle computations. Generally, the larger the model, the more computational power is required from the machine. To optimize our Deployments, then, we must select the best machine for the job. Typically, we will have an idea of our VRAM requirements from testing, so we can use our insights from development to adjust the application in production as needed. With Paperspace Deployments, making this change is quick and easy: all we need to do is edit our JSON spec file with the new GPU machine code and re-upload the spec.
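Before testing, a rough first estimate of VRAM needs can come from the parameter count: each parameter takes 4 bytes in fp32, 2 bytes in fp16/bf16, and 1 byte in int8. The sketch below turns that into a minimum GPU count. The 1.2 overhead factor for activations, KV cache, and framework buffers is an assumed rule of thumb, not a measured value, so the result should be checked against real usage during development.

```python
import math

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(n_params: float, dtype: str = "fp16") -> float:
    """Memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


def gpus_needed(n_params: float, vram_per_gpu_gb: float,
                dtype: str = "fp16", overhead: float = 1.2) -> int:
    """Minimum GPU count once the weights are padded by an assumed
    `overhead` factor for activations, KV cache, and framework buffers."""
    required_gb = weight_memory_gb(n_params, dtype) * overhead
    return math.ceil(required_gb / vram_per_gpu_gb)


print(weight_memory_gb(7e9))   # 14.0 GB of fp16 weights for a 7B model
print(gpus_needed(70e9, 80))   # 70B model in fp16 on 80 GB cards -> 3
```

Batch size and context length can push real usage well past this estimate, which is why it only serves as a starting point for choosing a machine.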

For example, we might change machines when updating a Deployment that serves Large Language Models so that it can handle a model with a higher parameter count than before. To fit this larger model, we might change our GPU type to one with more VRAM, or switch to a multi-GPU machine like our 4 x A100-80GB.
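Because the machine type is just a field in the spec, the switch can be scripted. This sketch assumes a JSON spec with a resources block holding instanceType and replicas. We believe these mirror the field names of the Deployment spec, but check your own spec for the exact keys, and treat the machine codes "A4000" and "A100-80G" as illustrative. After the file is rewritten, it is re-uploaded as usual.

```python
import json
import tempfile
from pathlib import Path


def set_instance_type(spec_path: Path, instance_type: str) -> dict:
    """Point a JSON Deployment spec at a different GPU machine type."""
    spec = json.loads(spec_path.read_text())
    # Assumed layout: the machine type lives under resources.instanceType.
    spec.setdefault("resources", {})["instanceType"] = instance_type
    spec_path.write_text(json.dumps(spec, indent=2))
    return spec


# Demo on a throwaway file; the field values here are illustrative only.
with tempfile.TemporaryDirectory() as tmp:
    spec_file = Path(tmp) / "deployment.json"
    spec_file.write_text(json.dumps({
        "image": "[YOUR IMAGE]",
        "port": 8080,
        "resources": {"replicas": 1, "instanceType": "A4000"},
    }))
    updated = set_instance_type(spec_file, "A100-80G")
    reloaded = json.loads(spec_file.read_text())
```

Only the machine type changes; every other field in the spec is written back untouched.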

Changing the compute type will always change the cost of the Deployment, so it's critical to do this with our cost requirements in mind. However, because larger machines can handle workloads faster, it may actually be more cost-effective to switch to a more powerful machine and reduce the time the application actually spends running.
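When a machine is billed only for the hours it runs, this tradeoff reduces to simple arithmetic. A machine that costs r times more per hour is cheaper overall whenever it finishes the same work more than r times faster. The rates and runtimes below are hypothetical, not actual Paperspace prices.

```python
def run_cost(hourly_rate: float, hours: float) -> float:
    """Cost of keeping one machine up for the given number of hours."""
    return hourly_rate * hours


def break_even_speedup(small_rate: float, large_rate: float) -> float:
    """Speedup the pricier machine must deliver before it becomes cheaper."""
    return large_rate / small_rate


# Hypothetical rates and runtimes, not actual Paperspace prices.
small = run_cost(hourly_rate=1.00, hours=10)   # 10.0
large = run_cost(hourly_rate=3.00, hours=3)    # 9.0
needed = break_even_speedup(1.00, 3.00)        # 3.0; the actual speedup here is 10/3
```

Here the larger machine is 3x the price but about 3.3x faster, so it comes out slightly cheaper.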

Time

The amount of time we need to run our Deployment is another critical factor. During development, we should identify how long the application will need to run, because this drives both maintenance and cost optimization in production. Some applications must run at all times, while others can run sparingly, and this can seriously affect how we maintain our applications in practice.

If our application does not require a 24/7 runtime, then we have some very interesting options for keeping application costs down. Notably, the new Paperspace CLI makes it easier than ever to create an automated schedule, or even a real-time trigger system, to start the Deployment up. All we need to do is set up a script that calls pspace deployment up when needed. The very simple script shown below demonstrates one way this could work, using a string input as the trigger: it re-applies the Deployment with one of two config files, one in which the Deployment is enabled and one in which it is disabled.

```python
import subprocess

# Placeholders: replace these with your own values.
ENABLED_CONFIG = "[PATH TO CONFIG FILE, enabled]"    # spec with the Deployment enabled
DISABLED_CONFIG = "[PATH TO CONFIG FILE, disabled]"  # same spec, Deployment disabled
WORKING_DIR = "[WORKING DIRECTORY TO USE]"
PROJECT_ID = "[YOUR PROJECT ID]"

variable = 'on'  # the trigger string: 'on' or 'off'

if variable in ('on', 'off'):
    config = ENABLED_CONFIG if variable == 'on' else DISABLED_CONFIG
    # Apply the chosen spec from WORKING_DIR; check=True raises if the CLI call fails.
    out = subprocess.run(["pspace", "deployment", "up",
                          "--config", config,
                          "--project-id", PROJECT_ID],
                         cwd=WORKING_DIR,
                         check=True)
```

Another critical thing to remember about time is that Deployments do not shut down automatically. We must either shut the Deployment down ourselves or set a shutdown timer manually in the settings tab of the Deployment's details page, where we can set the shutdown time to fit our needs. This is also important to remember if we intend to create an update schedule for the application.
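For the automated-schedule option, the 'on'/'off' string that drives the trigger script can come from the clock instead of being hard-coded. This sketch assumes a hypothetical weekday serving window from 9:00 to 17:00 in the machine's local time. Running it periodically (for example, from cron) and passing the result to the script as its variable would bring the Deployment up and down on that schedule. It complements a shutdown timer rather than replacing it.

```python
from datetime import datetime


def scheduled_state(now: datetime, start_hour: int = 9, end_hour: int = 17,
                    weekdays_only: bool = True) -> str:
    """Return 'on' inside the serving window and 'off' outside it."""
    if weekdays_only and now.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return "off"
    return "on" if start_hour <= now.hour < end_hour else "off"


# The result becomes the trigger string for the 'on'/'off' script.
variable = scheduled_state(datetime.now())
```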

Modifiable

Another critical trait of the Deployments product is how easily we can make changes to our application. The field of Deep Learning is growing so rapidly that every month a new foundation model seems to make waves across the public consciousness. To avoid being left in the dust by competitors with better models, we must constantly keep up with users' rising expectations.

Paperspace Deployments are incredibly simple to modify for one reason: all of the work happens at the container level. By editing our Dockerfile and application files, we can make any changes we need to the Deployment. Once we make our changes, we just push the new container image, update our spec to reflect the change, and run the Deployment again.
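That update loop can be written down as a short list of commands. In this sketch, the repository name, tag, and config path are hypothetical, and the spec is assumed to reference the container through a top-level image field. The spec should be updated (here via bump_image) and saved before the final pspace deployment up command runs.

```python
import copy


def release_commands(repo: str, tag: str, config_path: str,
                     project_id: str) -> list[list[str]]:
    """Commands to rebuild, push, and redeploy a new container version."""
    image = f"{repo}:{tag}"
    return [
        ["docker", "build", "-t", image, "."],
        ["docker", "push", image],
        ["pspace", "deployment", "up",
         "--config", config_path, "--project-id", project_id],
    ]


def bump_image(spec: dict, repo: str, tag: str) -> dict:
    """Return a copy of the spec that points at the newly pushed image."""
    updated = copy.deepcopy(spec)
    updated["image"] = f"{repo}:{tag}"
    return updated


# Hypothetical repository, tag, and paths.
commands = release_commands("myuser/llm-app", "v2", "deployment.json", "[YOUR PROJECT ID]")
new_spec = bump_image({"image": "myuser/llm-app:v1", "port": 8080}, "myuser/llm-app", "v2")
# To execute: for cmd in commands: subprocess.run(cmd, check=True)
```

Tagging each release explicitly, rather than reusing a single tag, also makes it easy to roll back by pointing the spec at the previous tag.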

We highly recommend maintaining your containers on a regular schedule, not only to keep up with the latest Deep Learning models but also to update the application itself. Updates to packages like Flash Attention or TensorRT can make huge differences in the cost of running our models.

Cost

At the end of the day, the first and last question of app maintenance should be about cost. Optimizing for cost is a central challenge for any business. Application cost is a function of the other three factors described above. While they do not capture the problem in its entirety, it's very helpful to abstract the factors influencing application maintenance cost into these buckets.

With these factors in mind, let's look at the specific expenses we may encounter when deploying on Paperspace:

- GPU cost: GPUs are priced by the hour for Deployments. More powerful GPUs are generally more costly, but can reduce the amount of time we need to run our Deployment. This efficiency tradeoff is important to consider

- Storage: storage is priced by the GB and is offered up to 2 TB. More storage costs more, but it may be necessary if we are running enormous models or storing multiple models on a single Deployment

- Scale: the number of GPUs we requisition for our Deployment can be set to scale automatically with the needs of our users. This feature increases costs, but also increases capability
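These three expenses combine into a rough monthly estimate. The sketch below assumes storage is billed per GB per month, and all rates are hypothetical placeholders rather than actual Paperspace prices. Comparing a 24/7 Deployment with one that runs only 8 hours a day shows how strongly the time factor dominates the bill.

```python
def monthly_cost(gpu_hourly_rate: float, hours_per_day: float, replicas: int = 1,
                 storage_gb: float = 0.0, storage_rate_per_gb: float = 0.0,
                 days: int = 30) -> float:
    """Rough monthly bill: GPU hours across all replicas plus storage."""
    compute = gpu_hourly_rate * hours_per_day * days * replicas
    storage = storage_gb * storage_rate_per_gb
    return compute + storage


# Hypothetical rates: $2.00/hr per GPU replica, $0.25 per GB of storage per month.
always_on = monthly_cost(2.00, 24, replicas=2, storage_gb=100, storage_rate_per_gb=0.25)
work_hours = monthly_cost(2.00, 8, replicas=2, storage_gb=100, storage_rate_per_gb=0.25)
```

With these numbers, always_on comes to 2905.0 and work_hours to 985.0: the storage line is identical, and the entire difference comes from GPU hours.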

With these factors in mind, regular application maintenance can be incredibly useful for keeping costs low. We can choose the most affordable GPU setup for our app, we can watch the amount of storage used during development, and we can monitor auto-scaling to prevent the Deployment from growing out of control.
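One way to keep auto-scaling in check is to derive the replica ceiling from a budget. This sketch computes the largest replica count whose worst-case GPU bill fits a monthly budget, then places it in an autoscaling settings dict. The field names (replicas, autoscaling, enabled, maxReplicas) are assumptions modeled on a typical spec layout, and the rates are hypothetical, so check them against your own Deployment spec.

```python
import math


def max_replicas_for_budget(monthly_budget: float, gpu_hourly_rate: float,
                            hours_per_month: float) -> int:
    """Largest replica count whose worst-case GPU bill stays within budget."""
    per_replica = gpu_hourly_rate * hours_per_month
    return math.floor(monthly_budget / per_replica)


def autoscaling_block(min_replicas: int, max_replicas: int) -> dict:
    """Hypothetical autoscaling settings; field names are assumptions."""
    if max_replicas < min_replicas:
        raise ValueError("budget cannot cover the minimum replica count")
    return {"replicas": min_replicas,
            "autoscaling": {"enabled": True, "maxReplicas": max_replicas}}


cap = max_replicas_for_budget(2000.0, 2.00, 240)  # 2000 / 480 rounds down to 4
settings = autoscaling_block(1, cap)
```

Treating the worst case (every replica running for every scheduled hour) as the budget constraint errs on the safe side, since real traffic rarely keeps all replicas busy.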

Closing thoughts

One of the greatest challenges facing the slew of new Deep Learning products is providing easy access to, and maintenance of, their served models. When businesses approach this issue, we have found it critical to assess the compute requirements for the specific task, monitor how long the Deployment actually needs to be active, take advantage of the easily modifiable, container-based design of Deployments, and optimize for cost at every step. We believe that keeping these factors in mind will greatly facilitate the development and release of successful Deployments with Paperspace.