[12/2/2021 Update: This article contains information about Gradient Experiments. Experiments are now deprecated. For more information on current Gradient Resources, please see the Gradient Docs]

Today, we're excited to announce a number of powerful new features and improvements to the entire Gradient product line.

First, we're introducing support for multinode/distributed machine learning model training.

We've also delivered a major upgrade to GradientCI, our groundbreaking continuous integration service for Gradient that connects to GitHub.

And we've completely revamped the way users interact with Gradient, introducing Projects and Experiments to easily organize your work and collaborate.

GradientCI 2.0

We are super excited to release the latest version of our GitHub App, GradientCI. We soft-launched the first version of GradientCI a few months back, and the response has been incredible.

With this release, you can create a GradientCI Project in Gradient that automatically triggers an Experiment whenever you push code to a machine learning repository on GitHub. Just install the latest GradientCI GitHub App and configure it. You can then view model and host performance metrics directly in your web console.

This powerful new set of tools is designed to make the machine learning pipeline faster, more deterministic, and easier to integrate into your existing Git-based workflow.

What's next? GradientCI will soon send status checks directly to GitHub, so you can view rich information about your training performance inline on your pull requests.

https://github.com/apps/gradientci

Projects & Experiments

Say hello to Projects

When you log in to your console, you will now see a new tab for "Projects".

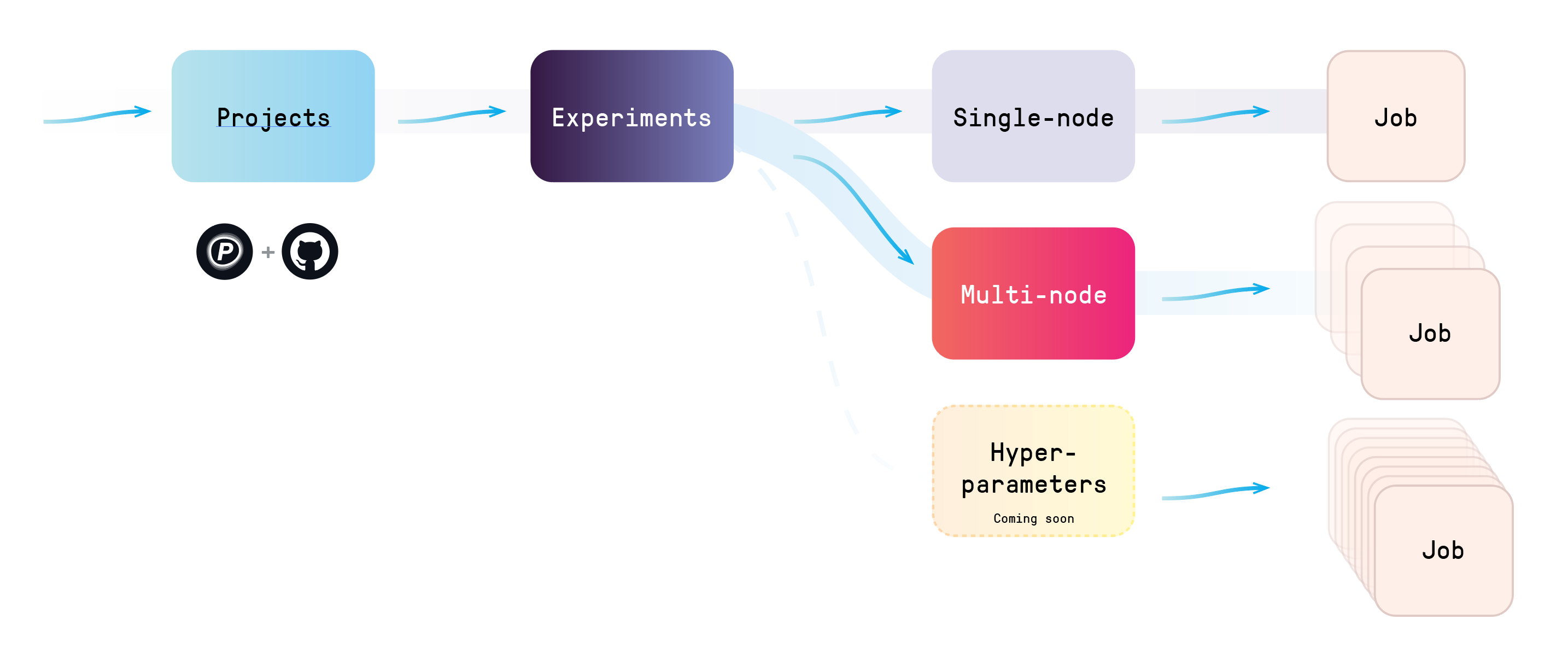

Projects are the new way to organize all of your machine learning development in Gradient. Projects can be either standalone (run manually through our GUI or our CLI) or GitHub-enabled through GradientCI.

And... Experiments

A Project is a creative workspace that allows you to easily organize and manage the newest addition to the Gradient family: Experiments.

You can run any number of Experiments within each Project. Experiments can take many forms, including the new possibility of running several containers that work in tandem to produce a single result. The first of these is native support for multinode training.

Out of the gate, we're supporting single-node and multinode Experiments. Single-node Experiments correspond to one job. Multinode Experiments include multiple jobs: one for each node your distributed training runs on.
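Here is a minimal sketch of the one-job-per-node idea. It assumes a TensorFlow-style parameter-server setup, where each job learns its role in the cluster from a `TF_CONFIG` environment variable. TensorFlow does read cluster layout from `TF_CONFIG`, but it is an assumption that Gradient populates it this way. The `build_tf_configs` helper and the host names are hypothetical.

```python
import json


def build_tf_configs(ps_hosts, worker_hosts):
    """Return one TF_CONFIG JSON string per job in a ps/worker cluster.

    Hypothetical helper: each entry corresponds to one node (one job) of a
    multinode Experiment, using the TF1-style "ps"/"worker" task types.
    """
    cluster = {"ps": list(ps_hosts), "worker": list(worker_hosts)}
    configs = {}
    for task_type, hosts in (("ps", ps_hosts), ("worker", worker_hosts)):
        for index, _ in enumerate(hosts):
            # Every job sees the same cluster map but a different task entry.
            configs[f"{task_type}-{index}"] = json.dumps(
                {"cluster": cluster, "task": {"type": task_type, "index": index}}
            )
    return configs


if __name__ == "__main__":
    # Hypothetical host names: one parameter server and two workers = three jobs.
    configs = build_tf_configs(
        ps_hosts=["ps-0.example:2222"],
        worker_hosts=["worker-0.example:2222", "worker-1.example:2222"],
    )
    for job_name, tf_config in configs.items():
        print(job_name, tf_config)
```

A single-node Experiment would be the degenerate case: one job and no cluster map at all.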

Because a single Experiment can group many jobs, Experiments also open the door to hyperparameter sweeps. These are coming to Gradient in the very near future.
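As a rough illustration of how a sweep fits the Experiment model, a grid of hyperparameter values can expand into one job per combination. This is a generic grid-search sketch, not Gradient's upcoming sweep feature. The `expand_sweep` helper and the parameter names are hypothetical.

```python
import itertools


def expand_sweep(grid):
    """Expand {name: [values, ...]} into one config dict per combination.

    Hypothetical helper: keys are sorted so the job order is deterministic.
    """
    keys = sorted(grid)
    return [
        dict(zip(keys, values))
        for values in itertools.product(*(grid[k] for k in keys))
    ]


if __name__ == "__main__":
    sweep = expand_sweep({"learning_rate": [0.1, 0.01], "batch_size": [32, 64, 128]})
    # 2 learning rates x 3 batch sizes -> 6 configurations, i.e. 6 jobs.
    for job_index, params in enumerate(sweep):
        print(job_index, params)
```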

Distributed Model Training

With the new Projects & Experiments model, it's now incredibly easy to run a multinode training job with Gradient. Here's a sample project:

https://github.com/Paperspace/multinode-mnist

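Gradient's native distributed training support relies on the parameter server model. Each multinode Experiment has one or more parameter servers, which hold the shared model parameters, and one or more worker nodes, which compute updates on their share of the data. Because the work is spread across several machines, multinode training makes it possible to train on larger datasets than a single machine could handle.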
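To make the parameter server model concrete, here's a toy, single-process simulation in plain Python. It fits `y = w*x + b` with synchronous SGD. A `ParameterServer` object holds the parameters, and each simulated worker pulls them, computes a gradient on its own data shard, and pushes it back, where the server averages and applies the gradients. This is an illustrative sketch only: workers run sequentially instead of on separate nodes, and none of these classes or functions are Gradient or TensorFlow APIs.

```python
import random


class ParameterServer:
    """Holds the shared parameters and applies averaged worker gradients."""

    def __init__(self, num_params, learning_rate):
        self.params = [0.0] * num_params
        self.learning_rate = learning_rate

    def pull(self):
        # Workers receive a copy of the current parameters.
        return list(self.params)

    def push(self, gradients):
        # Synchronous update: average all workers' gradients, take one SGD step.
        n = len(gradients)
        for i in range(len(self.params)):
            avg = sum(g[i] for g in gradients) / n
            self.params[i] -= self.learning_rate * avg


def worker_gradient(params, shard):
    """Mean-squared-error gradient of y = w*x + b on one worker's shard."""
    w, b = params
    grad_w = grad_b = 0.0
    for x, y in shard:
        error = (w * x + b) - y
        grad_w += 2 * error * x
        grad_b += 2 * error
    return [grad_w / len(shard), grad_b / len(shard)]


def train(num_workers=4, steps=500, seed=0):
    rng = random.Random(seed)
    # Synthetic, noise-free data from y = 3x + 1.
    data = [(x, 3.0 * x + 1.0) for x in (rng.uniform(-1, 1) for _ in range(400))]
    # Each worker gets an equal, disjoint shard of the data.
    shards = [data[i::num_workers] for i in range(num_workers)]
    server = ParameterServer(num_params=2, learning_rate=0.1)
    for _ in range(steps):
        params = server.pull()
        grads = [worker_gradient(params, shard) for shard in shards]
        server.push(grads)
    return server.params


if __name__ == "__main__":
    w, b = train()
    print(f"w={w:.3f}, b={b:.3f}")
```

Because the shards are equal-sized, averaging the workers' gradients gives the same step as full-batch gradient descent on all the data. Each worker, however, only ever touches its own quarter of the dataset.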

A Modern, Unified AI Platform

We can't wait for you to get started with all of these powerful new features and improvements to Gradient.

This evolution of our product offering includes a major upgrade to our popular GradientCI GitHub App, a new conceptual model of Projects and Experiments, and multinode/distributed training. We're closer than ever to offering a unified platform for the modern AI workflow.

Please do let us know about your experience; we love to hear from customers! In the meantime, check out the docs for more information on getting started with all of these new features and improvements. And look out for more amazing new features, coming soon!