In this tutorial, we’ll cover attention mechanisms in RNNs: how they work, the network architecture, their applications, and how to implement an RNN with an attention mechanism using Keras.

Specifically, we'll cover:

- The Problem With Sequence-to-Sequence Models for Neural Machine Translation

- An Introduction to Attention Mechanisms

- Categories of Attention Mechanisms

- Applications of Attention Mechanisms

- Neural Machine Translation Using an RNN With Attention Mechanism (Keras)

- Conclusion

You can run all of the code in this tutorial on a free GPU from a Gradient Community Notebook.

Let’s get started!

Note: All of the examples in this series (Advanced RNNs) were built and trained with TensorFlow 2.x.


The Problem With Sequence-to-Sequence Models for Neural Machine Translation

The attention mechanism was invented to address a problem in machine translation. Machine translation is the automatic conversion of text from one language to another by a computer program, which needs enough intelligence to carry the meaning across languages. When a neural network performs this job, it’s called “Neural Machine Translation”.

Machine translation is one of the most challenging problems in artificial intelligence due to the ambiguity and complexity inherent in any human language.

Despite the complexity, we’ve seen many approaches arise to solve this problem.

Neural networks played a crucial role in devising ways to automate the machine translation process. The first neural network seen as suitable for this application was a sequence-to-sequence model.

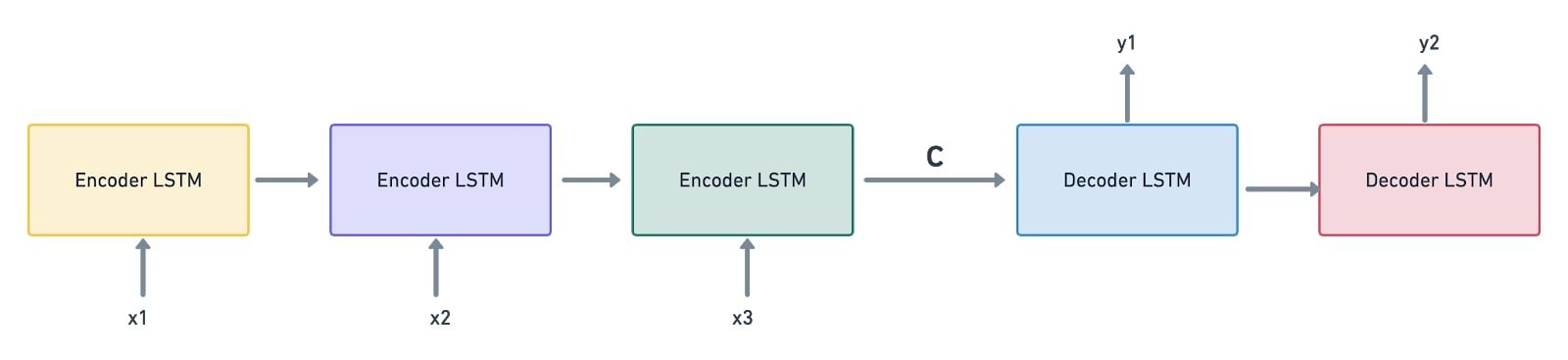

As seen in Introduction to Encoder-Decoder Sequence-to-Sequence Models (Seq2Seq), a sequence-to-sequence model comprises an encoder and a decoder. The encoder produces a context vector (an encoded representation of the input), which is given to the decoder; the decoder then starts generating the output from it.

Interestingly, this mirrors how translation usually happens: the source language is encoded into a suitable representation and then decoded into the target language, where the input and output sequences needn’t be of the same length. However, this model has its share of problems:

- The context vector is of a fixed length. Assume that there’s a long sequence that has to be encoded. Owing to the encoded vector’s constant size, it can get difficult for the network to define an encoded representation for long sequences. Oftentimes, it may forget the earlier parts of the sequence, leading to the loss of vital information.

- A sequence-to-sequence model considers the encoder’s final state as the context vector to be passed on to the decoder. In other words, it doesn’t examine the intermediate states generated during the encoding process. This can also contribute to the loss of information if there are long sequences of input data involved.

These two factors can act as bottlenecks to improving the performance of a sequence-to-sequence model. To address them, we can extend the architecture by enabling the model to soft-search the input for the positions most relevant to the word being generated. It can then predict the output based on the context vectors built from those relevant positions and all the previously generated output words.

This is precisely what the attention mechanism does!

An Introduction to Attention Mechanisms

Going by the typical English vocabulary, “Attention” refers to directing your focus on something. If we consider the neural machine translation example, where do you think “Attention” fits in?

The attention mechanism aims to solve both of the issues we discussed when training a neural machine translation model with a sequence-to-sequence model. Firstly, when there’s attention integrated, the model need not compress the encoded output into a single context vector. Instead, it encodes the input sequence into a sequence of vectors and picks a subset of these vectors depending on the decoder’s hidden states. In a nutshell, there’s “attention” applied to choose what’s necessary, without letting go of other necessary information.

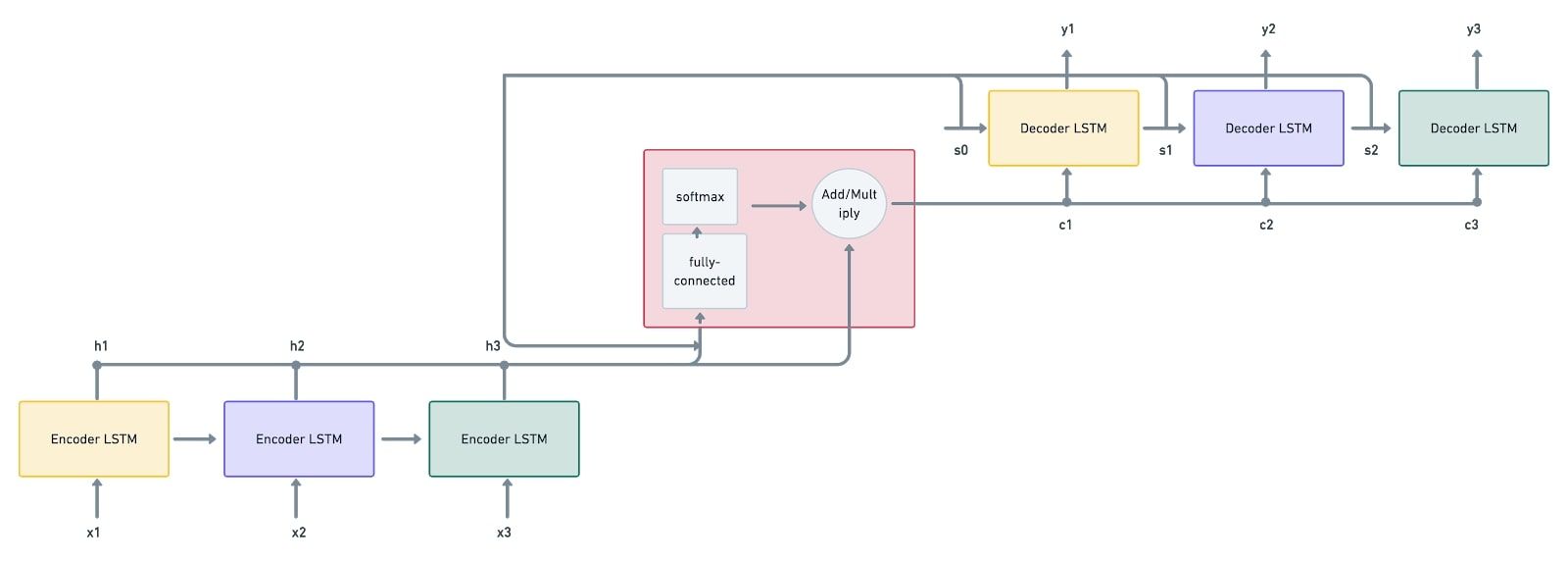

This is what an RNN model with an attention mechanism looks like:

Here’s a step-by-step procedure as to how the machine translation problem is solved using the attention mechanism:

- Firstly, the input sequence x1,x2,x3 is given to the encoder LSTM. The encoder computes the vectors h1,h2,h3 from the given input sequence. These vectors are the inputs given to the attention mechanism. The decoder then supplies its first state vector s0, which is also given as an input to the attention mechanism. We now have s0 and h1,h2,h3 as inputs.

- The attention mechanism model (depicted in a red box) accepts the inputs and passes them through a fully-connected network and a softmax activation function, which generates the “attention weights”.

- The weighted sum of the encoder’s output vectors is then computed, resulting in a context vector c1. Here, the vectors are scaled according to the attention weights.

- It’s now the decoder's job to process the state and context vectors to generate the output vector y1.

- The decoder also produces the next state vector s1, which is again given to the attention mechanism model along with the encoder’s outputs.

- This produces the weighted sum, resulting in the context vector c2.

- This process continues until the decoder has generated all the output vectors y1,y2,y3.

The key idea in an attention mechanism model is that the context vectors enable the decoder to focus only on certain parts of the input (the context vectors are, in fact, generated from the encoder’s outputs). This way, the model stays attentive to the inputs it considers crucial in determining each output.
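The procedure above can be sketched numerically for a single decoding step. This is a minimal NumPy illustration rather than the Keras model built later in the tutorial: the matrices `W_h`, `W_s` and the vector `v` are random stand-ins for the fully-connected scoring network, and all dimensions are arbitrary assumptions.

```python
import numpy as np

def softmax(x):
    # Subtract the max for numerical stability before exponentiating.
    e = np.exp(x - np.max(x))
    return e / e.sum()

def attention_step(encoder_states, decoder_state, W_h, W_s, v):
    """One attention step.

    encoder_states: (T, d_h) matrix holding h1..hT
    decoder_state:  (d_s,) vector, e.g. s0
    Returns the context vector (d_h,) and the attention weights (T,).
    """
    # Fully-connected scoring network: one score per encoder position.
    scores = np.tanh(encoder_states @ W_h + decoder_state @ W_s) @ v
    weights = softmax(scores)            # attention weights, sum to 1
    context = weights @ encoder_states   # weighted sum of encoder outputs
    return context, weights

# Hypothetical sizes: 3 encoder steps (h1, h2, h3), 4-dim states, 5-dim scoring layer.
rng = np.random.default_rng(0)
T, d_h, d_s, d_a = 3, 4, 4, 5
h = rng.normal(size=(T, d_h))
s0 = rng.normal(size=(d_s,))
W_h = rng.normal(size=(d_h, d_a))
W_s = rng.normal(size=(d_s, d_a))
v = rng.normal(size=(d_a,))

c1, alpha1 = attention_step(h, s0, W_h, W_s, v)
print("attention weights:", alpha1)
print("context vector c1:", c1)
```

Calling `attention_step` again with the next decoder state s1 in place of s0 would give the context vector c2, and so on for each output step.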

Categories of Attention Mechanisms

We can broadly divide attention mechanisms into three categories: Self-Attention, Soft Attention, and Hard Attention mechanisms.

Self-Attention

Self-attention helps the model relate different positions within a single sequence. The paper “Long Short-Term Memory-Networks for Machine Reading” uses self-attention. The learning process is depicted in the example below:

The attention here is computed within the same sequence. In other words, self-attention enables the input elements to interact among themselves.
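As a rough illustration of elements of one sequence attending to each other, here is a small NumPy sketch. It uses a generic scaled dot-product score with no learned projections, which is an assumption made for brevity and is not the formulation used in the paper mentioned above.

```python
import numpy as np

def self_attention(x):
    """x: (T, d) sequence. Returns (T, d) outputs and (T, T) attention weights."""
    d = x.shape[-1]
    # Score every position against every other position of the same sequence.
    scores = x @ x.T / np.sqrt(d)
    # Row-wise softmax (max subtracted for numerical stability).
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    # Each output is a weighted mix of all elements of the sequence.
    return weights @ x, weights

x = np.random.default_rng(0).normal(size=(5, 8))  # 5 tokens, 8 features (arbitrary)
out, w = self_attention(x)
print(out.shape, w.shape)
```

Row `i` of `w` tells how strongly token `i` attends to every token of the same sequence, including itself.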

Soft Attention

Soft attention ‘softly’ places the attention weights over all patches of the input (image/sentence), i.e., it employs a weighted average. It measures the attention over the various chunks of the input and outputs the weighted input features. It downplays the areas that are irrelevant to the task at hand by assigning them low weights. This way, soft attention doesn’t confine its focus to specific parts of the image or the sentence; instead, it spreads a continuous distribution of weights over the whole input.

Soft attention is a fully differentiable attention mechanism, where the gradients can be propagated automatically during backpropagation.

Hard Attention

Hard attention, as the name suggests, focuses on only a specific part of the image/sentence. Because this selection is not differentiable, the gradients cannot be computed directly during backpropagation; instead, they are estimated by sampling and averaging the results using the Monte Carlo method.
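The difference between the two can be illustrated with a toy NumPy example. The feature matrix and the attention weights below are made-up values; the last part only demonstrates the "sample and average" idea on the context vector itself and is not a gradient estimator.

```python
import numpy as np

rng = np.random.default_rng(0)
features = rng.normal(size=(4, 6))         # 4 input patches/tokens, 6 features each
weights = np.array([0.1, 0.6, 0.2, 0.1])   # hypothetical attention weights (sum to 1)

# Soft attention: a weighted average over all positions (differentiable).
soft_context = weights @ features

# Hard attention: sample a single position according to the weights.
hard_index = rng.choice(len(weights), p=weights)
hard_context = features[hard_index]

# Averaging many sampled hard contexts approaches the soft context.
samples = rng.choice(len(weights), size=20000, p=weights)
mc_estimate = features[samples].mean(axis=0)

print("soft context:        ", np.round(soft_context, 2))
print("hard context (1 draw):", np.round(hard_context, 2))
print("Monte Carlo average: ", np.round(mc_estimate, 2))
```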

Applications of Attention Mechanisms

Just a few applications of attention mechanisms include:

- Image Captioning

- Speech Recognition

- Machine Translation

- Self-Driving Cars

- Document Summarization

Neural Machine Translation Using an RNN With Attention Mechanism (Keras)

An RNN can be used to achieve machine translation. Generally, a simple RNN arranged as an encoder-decoder sequence-to-sequence model does this job. However, as stated in the section ‘The Problem With Sequence-to-Sequence Models for Neural Machine Translation’, a crammed representation of the encoded output might ignore vital features required for the translation process. When we add the attention mechanism to an encoder-decoder sequence-to-sequence model, we no longer have to accept this loss of information when long sequences of text are involved.

The attention mechanism focuses on all those inputs which are really required for the output to be generated. There’s no compression involved; instead, it considers all the encoder’s outputs and assigns importance to them according to the decoder’s hidden states.

Here’s a step-by-step process to employ an RNN model (encoder-decoder sequence-to-sequence with attention mechanism) for French to English translation.

Don't forget that you can follow along with the code and run it on a free GPU from a Gradient Community Notebook.

Step 1: Import the Dataset

First, import the English-to-French dataset (download link). It has about 185,583 language translation pairs.

Untar the dataset and store the txt file path.

Step 2: Preprocess the Dataset

The dataset has Unicode characters, which have to be normalized.

Moreover, all the tokens in the sequences have to be cleaned using the regular expressions library.

Remove unwanted spaces, include a space between every word and the punctuation following it (to differentiate between both), replace unwanted characters with spaces, and append <start> and <end> tokens to specify the start and end of a sequence.

Encapsulate the unicode conversion in a function unicode_to_ascii() and sequence preprocessing in a function preprocess_sentence().
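A possible version of these two helpers, using only the standard library, is sketched below. The exact set of punctuation marks kept by the regular expressions is an assumption; adjust it to the characters you want the model to see.

```python
import re
import unicodedata

def unicode_to_ascii(s):
    # Decompose accented characters (NFD) and drop the combining marks ("Mn").
    return "".join(c for c in unicodedata.normalize("NFD", s)
                   if unicodedata.category(c) != "Mn")

def preprocess_sentence(w):
    w = unicode_to_ascii(w.lower().strip())
    # Put a space between a word and the punctuation following it.
    w = re.sub(r"([?.!,¿])", r" \1 ", w)
    # Collapse runs of whitespace into a single space.
    w = re.sub(r"\s+", " ", w)
    # Replace everything except letters and the kept punctuation with a space.
    w = re.sub(r"[^a-zA-Z?.!,¿]+", " ", w)
    w = w.strip()
    # Mark the start and end of the sequence for the model.
    return "<start> " + w + " <end>"

print(preprocess_sentence("Avez-vous lu ce livre?"))
```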

Step 3: Prepare the Dataset

Next, prepare a dataset out of the raw data we have. Create word pairs combining the English sequences and their related French sequences.

Check if the dataset has been created properly.
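One way to build the pairs is sketched below. It assumes each line of the text file holds an English sentence and its French translation separated by a tab, with any further columns ignored. The helper `pairs_from_lines()` and the `preprocess` parameter are hypothetical names introduced here; in the tutorial you would pass `preprocess_sentence` as `preprocess`.

```python
import io

def pairs_from_lines(lines, preprocess=str.strip, num_examples=None):
    """Turn tab-separated lines into (english, french) tuples."""
    pairs = []
    for line in lines[:num_examples]:
        columns = line.split("\t")
        if len(columns) < 2:
            continue  # skip malformed lines
        pairs.append((preprocess(columns[0]), preprocess(columns[1])))
    return pairs

def create_dataset(path, preprocess=str.strip, num_examples=None):
    """Read the file at `path` and return two tuples: English and French sentences."""
    with io.open(path, encoding="utf-8") as f:
        lines = f.read().strip().split("\n")
    english, french = zip(*pairs_from_lines(lines, preprocess, num_examples))
    return english, french

# Made-up sample lines, used here instead of the real file.
sample_lines = ["Go.\tVa !", "Have you read this book?\tAvez-vous lu ce livre ?"]
pairs = pairs_from_lines(sample_lines)
print(pairs[-1])
```

Printing the last pair is a quick way to check that the English and French sides line up.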

Now tokenize the sequences. Tokenization is the mechanism of creating an internal vocabulary comprising English and French tokens (i.e. words), converting the tokens (or, in general, sequences) to integers, and padding them all to make the sequences possess the same length. All in all, tokenization facilitates the model training process.

Create a function tokenize() to encapsulate all the above-mentioned requirements.
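To make the three steps concrete (build a vocabulary, map tokens to integers, pad to equal length), here is a dependency-free sketch. It is a simplified stand-in for a library tokenizer, not the Keras utility itself: indices are assigned in order of first appearance, and index 0 is reserved for padding, both of which are assumptions of this sketch.

```python
def tokenize(sentences):
    """Return zero-padded integer sequences and the word-to-index vocabulary."""
    word_index = {}
    for sentence in sentences:
        for word in sentence.split():
            if word not in word_index:
                word_index[word] = len(word_index) + 1  # 0 is kept for padding
    # Convert every sentence to a list of integers.
    sequences = [[word_index[w] for w in s.split()] for s in sentences]
    # Pad at the end so that all sequences share the same length.
    max_length = max(len(seq) for seq in sequences)
    padded = [seq + [0] * (max_length - len(seq)) for seq in sequences]
    return padded, word_index

tensor, vocab = tokenize(["<start> hi <end>", "<start> how are you <end>"])
print(tensor)
print(vocab)
```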

Load the tokenized dataset by calling the create_dataset() and tokenize() functions.

Limit the number of data samples used to train the model. Training on the whole dataset takes considerably longer.

The max_length of both the input and target tensors is essential to determine every sequence's maximum padded length.

Step 4: Create the Dataset

Segregate the train and validation datasets.

Validate the mapping that’s been created between the tokens of the sequences and the indices.
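A minimal way to do both steps without extra libraries is sketched here. The 80/20 split ratio, the fixed seed, and the helper names `train_val_split()` and `describe_mapping()` are assumptions chosen for illustration.

```python
import random

def train_val_split(inputs, targets, val_fraction=0.2, seed=0):
    """Shuffle the sample indices once and split both lists the same way."""
    indices = list(range(len(inputs)))
    random.Random(seed).shuffle(indices)
    n_val = int(len(indices) * val_fraction)
    val_idx, train_idx = indices[:n_val], indices[n_val:]
    pick = lambda data, idx: [data[i] for i in idx]
    return (pick(inputs, train_idx), pick(inputs, val_idx),
            pick(targets, train_idx), pick(targets, val_idx))

def describe_mapping(index_word, sequence):
    """List (index, token) pairs for a padded sequence, skipping the 0 padding."""
    return [(i, index_word[i]) for i in sequence if i != 0]

inputs = [[i, i + 1] for i in range(10)]
targets = [[i * 10] for i in range(10)]
in_train, in_val, tar_train, tar_val = train_val_split(inputs, targets)
print(len(in_train), len(in_val), len(tar_train), len(tar_val))

index_word = {1: "<start>", 2: "hi", 3: "<end>"}
print(describe_mapping(index_word, [1, 2, 3, 0, 0]))
```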

Step 5: Initialize the Model Parameters

With the dataset in hand, start initializing the model parameters.

- BUFFER_SIZE: Total number of input/target samples. In our model, it’s 40,000.

- BATCH_SIZE: Length of the training batch.

- steps_per_epoch: The number of steps per epoch. Computed by dividing BUFFER_SIZE by BATCH_SIZE.

- embedding_dim: Number of nodes in the embedding layer.

- units: Hidden units in the network.

- vocab_inp_size: Length of the input (French) vocabulary.

- vocab_tar_size: Length of the output (English) vocabulary.

Next, call the tf.data.Dataset API and create a proper dataset.

Validate the shapes of the input and target batches of the newly-created dataset.

19 and 11 denote the maximum padded lengths of the input (French) and target (English) sequences.
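The parameter setup and the `tf.data.Dataset` pipeline might look like the sketch below. To keep it self-contained, random integer arrays stand in for the tokenized tensors, and the values of `BATCH_SIZE`, `embedding_dim`, `units`, and the sample count are assumptions rather than the tutorial's settings.

```python
import numpy as np
import tensorflow as tf

# Stand-in data: random token ids shaped like the padded tensors described above.
num_samples, max_length_inp, max_length_targ = 256, 19, 11
input_tensor_train = np.random.randint(1, 100, size=(num_samples, max_length_inp))
target_tensor_train = np.random.randint(1, 100, size=(num_samples, max_length_targ))

BUFFER_SIZE = len(input_tensor_train)        # total number of input/target samples
BATCH_SIZE = 64                              # assumed value
steps_per_epoch = BUFFER_SIZE // BATCH_SIZE
embedding_dim = 256                          # assumed value
units = 1024                                 # assumed value

# Shuffle the pairs, then group them into fixed-size batches.
dataset = tf.data.Dataset.from_tensor_slices(
    (input_tensor_train, target_tensor_train)).shuffle(BUFFER_SIZE)
dataset = dataset.batch(BATCH_SIZE, drop_remainder=True)

example_input_batch, example_target_batch = next(iter(dataset))
print(example_input_batch.shape, example_target_batch.shape)
```

`drop_remainder=True` keeps every batch at exactly `BATCH_SIZE` rows, which matters later because the encoder's initial hidden state is created with a fixed batch dimension.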

Step 6: Encoder Class

The first step in creating an encoder-decoder sequence-to-sequence model (with an attention mechanism) is creating an encoder. For the application at hand, create an encoder with an embedding layer followed by a GRU (Gated Recurrent Unit) layer. The input goes through the embedding layer first and then into the GRU layer. The GRU layer outputs both the encoder network output and the hidden state.

Enclose the model’s __init__() and call() methods in a class Encoder.

In the __init__() method, initialize the batch size and encoding units. Add an embedding layer that accepts vocab_size as the input dimension and embedding_dim as the output dimension. Also, add a GRU layer that accepts units (the dimensionality of the output space) and is configured to return both its output sequence and its final hidden state.

In the method call(), define the forward propagation that has to happen through the encoder network.

Moreover, define a method initialize_hidden_state() to initialize the hidden state with the dimensions batch_size and units.

Add the following code as part of your Encoder class.

Call the encoder class to check the shapes of the encoder output and hidden state.
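An Encoder along the lines described above could be written as follows. This is a sketch based on the description in this step, with small, arbitrary sizes in the usage part so that it runs quickly; the argument names `enc_units` and `batch_sz` are assumptions.

```python
import tensorflow as tf

class Encoder(tf.keras.Model):
    def __init__(self, vocab_size, embedding_dim, enc_units, batch_sz):
        super().__init__()
        self.batch_sz = batch_sz
        self.enc_units = enc_units
        self.embedding = tf.keras.layers.Embedding(vocab_size, embedding_dim)
        # return_sequences: output at every time step; return_state: final hidden state.
        self.gru = tf.keras.layers.GRU(enc_units,
                                       return_sequences=True,
                                       return_state=True,
                                       recurrent_initializer="glorot_uniform")

    def call(self, x, hidden):
        x = self.embedding(x)                              # (batch, seq_len, embedding_dim)
        output, state = self.gru(x, initial_state=hidden)
        return output, state                               # (batch, seq_len, units), (batch, units)

    def initialize_hidden_state(self):
        return tf.zeros((self.batch_sz, self.enc_units))

# Usage with small, arbitrary sizes.
encoder = Encoder(vocab_size=50, embedding_dim=8, enc_units=16, batch_sz=4)
sample_hidden = encoder.initialize_hidden_state()
sample_input = tf.random.uniform((4, 19), maxval=50, dtype=tf.int32)
sample_output, sample_hidden = encoder(sample_input, sample_hidden)
print(sample_output.shape, sample_hidden.shape)
```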

Step 7: Attention Mechanism Class

This step captures the attention mechanism.

- Compute the sum (or product) of the encoder’s outputs and decoder states.

- Pass the generated output through a fully-connected network.

- Apply softmax activation to the output. This gives the attention weights.

- Create the context vector by computing the weighted sum of the encoder’s outputs, using the attention weights as the weights.

Everything thus far needs to be captured in a class BahdanauAttention. Bahdanau attention is also called “additive attention” and is a soft attention technique. As this is additive attention, we sum the encoder’s outputs and the decoder hidden state (as mentioned in the first step).

This class has to have __init__() and call() methods.

In the __init__() method, initialize three Dense layers: one for the decoder state ('units' is the size), another for the encoder’s outputs ('units' is the size), and the other for the fully-connected network (one node).

In the call() method, initialize the decoder state (s0) by taking the final encoder hidden state. Pass the generated decoder hidden state through one dense layer. Also, plug the encoder’s outputs through the other dense layer. Add both the outputs, encase them in a tanh activation and plug them into the fully-connected layer. This fully-connected layer has one node; thus, the final output has the dimensions (batch_size, max_length of the sequence, 1).

Later, apply softmax on the output of the fully-connected network to generate the attention weights.

Compute the context_vector by performing a weighted sum of the encoder’s outputs, weighted by the attention weights.

Validate the shapes of the two results returned by the attention layer: the context vector and the attention weights.

sample_hidden here is the hidden state of the encoder, and sample_output denotes the encoder’s outputs.
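Putting the description of this step together, a BahdanauAttention layer might be sketched as below. Random tensors stand in for the encoder's hidden state and outputs, and the sizes are arbitrary assumptions.

```python
import tensorflow as tf

class BahdanauAttention(tf.keras.layers.Layer):
    def __init__(self, units):
        super().__init__()
        self.W1 = tf.keras.layers.Dense(units)  # applied to the decoder state
        self.W2 = tf.keras.layers.Dense(units)  # applied to the encoder's outputs
        self.V = tf.keras.layers.Dense(1)       # fully-connected layer with one node

    def call(self, query, values):
        # query: (batch, hidden) -> (batch, 1, hidden) so it broadcasts over time steps.
        query_with_time_axis = tf.expand_dims(query, 1)
        # Additive score, shape (batch, max_length, 1).
        score = self.V(tf.nn.tanh(self.W1(query_with_time_axis) + self.W2(values)))
        # Softmax over the time axis gives the attention weights.
        attention_weights = tf.nn.softmax(score, axis=1)
        # Weighted sum of the encoder's outputs, shape (batch, hidden).
        context_vector = tf.reduce_sum(attention_weights * values, axis=1)
        return context_vector, attention_weights

# Stand-ins for the encoder's hidden state and outputs (arbitrary sizes).
sample_hidden = tf.random.normal((4, 16))
sample_output = tf.random.normal((4, 19, 16))
attention_layer = BahdanauAttention(10)
attention_result, attention_weights = attention_layer(sample_hidden, sample_output)
print(attention_result.shape, attention_weights.shape)
```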

Step 8: Decoder Class

This step encapsulates the decoding mechanism. The Decoder class has to have two methods: __init__() and call().

In the __init__() method, initialize the batch size, decoder units, embedding dimension, GRU layer, and a Dense layer. Also, create an instance of the BahdanauAttention class.

In the call() method:

- Call the attention forward propagation and capture the context vector and attention weights.

- Send the target token through an embedding layer.

- Concatenate the embedded output and context vector.

- Plug the output into the GRU layer and then into a fully-connected layer.

Add the following code to define the Decoder class.

Validate the decoder output shape.
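The four steps of call() can be sketched as follows. The attention layer is repeated here so that the block runs on its own. Passing the previous decoder state to the GRU as `initial_state` is a design choice of this sketch, since the text above does not specify it, and the sizes in the usage part are arbitrary.

```python
import tensorflow as tf

class BahdanauAttention(tf.keras.layers.Layer):
    def __init__(self, units):
        super().__init__()
        self.W1 = tf.keras.layers.Dense(units)
        self.W2 = tf.keras.layers.Dense(units)
        self.V = tf.keras.layers.Dense(1)

    def call(self, query, values):
        score = self.V(tf.nn.tanh(self.W1(tf.expand_dims(query, 1)) + self.W2(values)))
        attention_weights = tf.nn.softmax(score, axis=1)
        return tf.reduce_sum(attention_weights * values, axis=1), attention_weights

class Decoder(tf.keras.Model):
    def __init__(self, vocab_size, embedding_dim, dec_units, batch_sz):
        super().__init__()
        self.batch_sz = batch_sz
        self.dec_units = dec_units
        self.embedding = tf.keras.layers.Embedding(vocab_size, embedding_dim)
        self.gru = tf.keras.layers.GRU(dec_units, return_sequences=True,
                                       return_state=True,
                                       recurrent_initializer="glorot_uniform")
        self.fc = tf.keras.layers.Dense(vocab_size)
        self.attention = BahdanauAttention(dec_units)

    def call(self, x, hidden, enc_output):
        # 1. Attention over the encoder's outputs, driven by the decoder state.
        context_vector, attention_weights = self.attention(hidden, enc_output)
        # 2. Embed the current target token: (batch, 1, embedding_dim).
        x = self.embedding(x)
        # 3. Concatenate the context vector with the embedded token.
        x = tf.concat([tf.expand_dims(context_vector, 1), x], axis=-1)
        # 4. GRU (assumption: seeded with the previous decoder state), then the dense layer.
        output, state = self.gru(x, initial_state=hidden)
        logits = self.fc(output[:, 0, :])                  # (batch, vocab_size)
        return logits, state, attention_weights

decoder = Decoder(vocab_size=30, embedding_dim=8, dec_units=16, batch_sz=4)
sample_logits, sample_state, sample_attn = decoder(
    tf.zeros((4, 1), dtype=tf.int32),      # one target token per sequence
    tf.zeros((4, 16)),                     # decoder hidden state
    tf.random.normal((4, 19, 16)))         # stand-in for the encoder's outputs
print(sample_logits.shape)
```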

Step 9: Optimizer and Loss Functions

Define the optimizer and loss functions.

As the input sequences are being padded with zeros, nullify the loss when there’s a zero in the real value.
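A masked loss of this kind might be written as follows. Adam and sparse categorical cross-entropy are assumptions here, as the text above does not name the optimizer or the loss; the masking logic is the part being illustrated.

```python
import tensorflow as tf

optimizer = tf.keras.optimizers.Adam()
# reduction="none" keeps one loss value per position so that it can be masked.
loss_object = tf.keras.losses.SparseCategoricalCrossentropy(
    from_logits=True, reduction="none")

def loss_function(real, pred):
    # mask is 0 where the real token is padding (index 0) and 1 elsewhere.
    mask = tf.cast(tf.math.not_equal(real, 0), pred.dtype)
    loss_ = loss_object(real, pred) * mask
    # Average over all positions; padded positions contribute zero.
    return tf.reduce_mean(loss_)

pred = tf.random.normal((2, 5))                    # logits for 2 positions, 5 classes
print(float(loss_function(tf.constant([0, 0]), pred)))
print(float(loss_function(tf.constant([3, 0]), pred)))
```

The first call sees only padding and therefore returns zero; the second is driven entirely by the single real token.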

Step 10: Train the Model

Checkpoint your model’s weights during training. This helps in the automatic retrieval of the weights while evaluating the model.

Next, define the training procedure. First, call the encoder class and procure the encoder outputs and final hidden state. Initialize the decoder input to have the <start> token spread across all the input sequences (indicated using the BATCH_SIZE). Use the teacher forcing technique to iterate over all decoder states by feeding the target as the next input. This loop continues until every token in the target sequence (English) is visited.

Call the decoder class with decoder input, decoder hidden state, and encoder’s outputs. Procure the decoder output and hidden state. Compute the loss by comparing the real against the predicted value of the target. Fetch the target token and feed it to the next decoder state (concerning the successive target token). Note that the hidden state returned by the decoder becomes the decoder hidden state for the next step.

After the teacher-forcing loop finishes, compute the batch loss, and run the optimizer to update the model's variables.
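The training step described above can be sketched as a function that receives the models as arguments. To keep the block runnable on its own, `TinyEncoder` and `TinyDecoder` are hypothetical stand-ins (the decoder stand-in has no real attention and returns placeholder weights); in the tutorial you would pass the Encoder and Decoder built in the earlier steps. The `start_id` argument, standing for the index of the <start> token, is also an assumption.

```python
import tensorflow as tf

loss_object = tf.keras.losses.SparseCategoricalCrossentropy(
    from_logits=True, reduction="none")

def loss_function(real, pred):
    mask = tf.cast(tf.math.not_equal(real, 0), pred.dtype)  # ignore padding
    return tf.reduce_mean(loss_object(real, pred) * mask)

class TinyEncoder(tf.keras.Model):          # hypothetical stand-in
    def __init__(self, vocab_size, units):
        super().__init__()
        self.embedding = tf.keras.layers.Embedding(vocab_size, units)
        self.gru = tf.keras.layers.GRU(units, return_sequences=True, return_state=True)

    def call(self, x, hidden):
        output, state = self.gru(self.embedding(x), initial_state=hidden)
        return output, state

class TinyDecoder(tf.keras.Model):          # hypothetical stand-in, no real attention
    def __init__(self, vocab_size, units):
        super().__init__()
        self.embedding = tf.keras.layers.Embedding(vocab_size, units)
        self.gru = tf.keras.layers.GRU(units, return_sequences=True, return_state=True)
        self.fc = tf.keras.layers.Dense(vocab_size)

    def call(self, x, hidden, enc_output):
        output, state = self.gru(self.embedding(x), initial_state=hidden)
        placeholder_weights = tf.zeros_like(enc_output[:, :, :1])
        return self.fc(output[:, -1, :]), state, placeholder_weights

def train_step(inp, targ, enc_hidden, encoder, decoder, optimizer, start_id):
    loss = 0.0
    with tf.GradientTape() as tape:
        enc_output, enc_hidden = encoder(inp, enc_hidden)
        dec_hidden = enc_hidden
        # Every sequence in the batch starts with the <start> token.
        dec_input = tf.fill([targ.shape[0], 1], start_id)
        for t in range(1, targ.shape[1]):
            predictions, dec_hidden, _ = decoder(dec_input, dec_hidden, enc_output)
            loss += loss_function(targ[:, t], predictions)
            # Teacher forcing: feed the true target token as the next input.
            dec_input = tf.expand_dims(targ[:, t], 1)
    batch_loss = loss / int(targ.shape[1])
    variables = encoder.trainable_variables + decoder.trainable_variables
    gradients = tape.gradient(loss, variables)
    optimizer.apply_gradients(zip(gradients, variables))
    return batch_loss

encoder, decoder = TinyEncoder(20, 8), TinyDecoder(20, 8)
optimizer = tf.keras.optimizers.Adam()
inp = tf.random.uniform((4, 5), minval=1, maxval=20, dtype=tf.int32)
targ = tf.random.uniform((4, 6), minval=1, maxval=20, dtype=tf.int32)
batch_loss = train_step(inp, targ, tf.zeros((4, 8)), encoder, decoder,
                        optimizer, start_id=1)
print(float(batch_loss))
```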

Now initialize the actual training loop. Run your loop over a specified number of epochs. First, initialize the encoder hidden state using the method initialize_hidden_state(). Loop through the dataset one batch at a time (per epoch). Call the train_step() method per batch and compute the loss. Continue until all the epochs have been covered.

Step 11: Test the Model

Now define your model evaluation procedure. First, take the sentence given by the user into consideration. This has to be given in the French language. The model now has to convert the sentence from French to English.

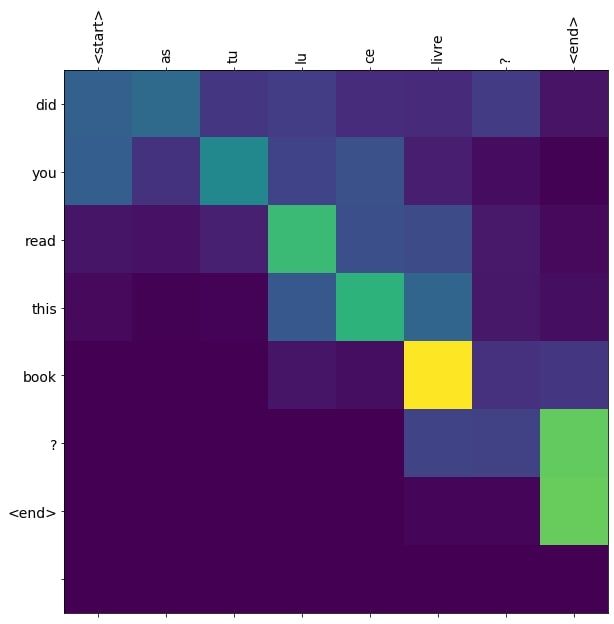

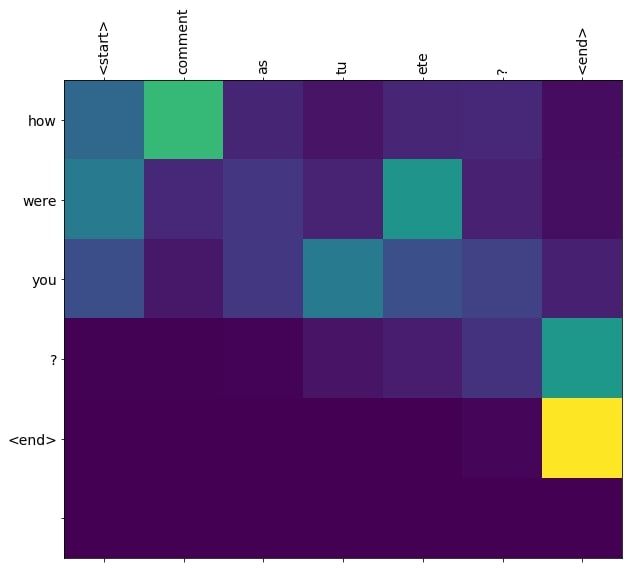

Initialize an empty attention plot to be plotted later on with max_length_target on the Y-axis, and max_length_input on the X-axis.

Preprocess the sentence and convert it into tensors.

Then plug the sentence into the model.

Initialize an empty hidden state which is to be used while initializing an encoder. Usually, the initialize_hidden_state() method in the encoder class gives the hidden state having the dimensions batch_size * hidden_units. Now, as the batch size is 1, the initial hidden state has to be manually initialized.

Call the encoder class and procure the encoder outputs and final hidden state.

By looping over max_length_targ, call the decoder class wherein the dec_input is the <start> token, dec_hidden state is the encoder hidden state, and enc_out is the encoder’s outputs. Procure the decoder output, hidden state, and attention weights.

Store the attention weights so they can be plotted later. Fetch the predicted token, i.e., the one with the highest score in the decoder’s output. Append the token to the result and continue until the <end> token is reached.

The next decoder input will be the previously predicted index (concerning the token).

Add the following code as part of the evaluate() function.
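The decoding loop inside evaluate() follows a greedy pattern that can be shown independently of the trained model. In the sketch below, `greedy_decode()` and `toy_decoder_step()` are hypothetical names: the toy step simply predicts "previous id + 1" with uniform attention, standing in for a call to the trained decoder. Unlike a full evaluate(), this sketch works on token ids and stops before appending the <end> token.

```python
import numpy as np

def greedy_decode(decoder_step, enc_out, dec_hidden, start_id, end_id,
                  max_length_targ, max_length_inp):
    # One row of attention weights per generated token, for plotting later.
    attention_plot = np.zeros((max_length_targ, max_length_inp))
    result = []
    dec_input = start_id
    for t in range(max_length_targ):
        logits, dec_hidden, attention_weights = decoder_step(dec_input, dec_hidden, enc_out)
        attention_plot[t, :len(attention_weights)] = attention_weights
        predicted_id = int(np.argmax(logits))   # token with the highest score
        if predicted_id == end_id:
            break
        result.append(predicted_id)
        dec_input = predicted_id                # prediction becomes the next input
    return result, attention_plot

def toy_decoder_step(dec_input, dec_hidden, enc_out):
    logits = np.zeros(6)
    logits[dec_input + 1] = 1.0                 # always predict the next id
    attention = np.full(enc_out.shape[0], 1.0 / enc_out.shape[0])
    return logits, dec_hidden, attention

enc_out = np.zeros((4, 8))                      # 4 input positions (arbitrary)
result, attention_plot = greedy_decode(toy_decoder_step, enc_out, None,
                                       start_id=1, end_id=4,
                                       max_length_targ=5, max_length_inp=4)
print(result)
```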

Step 12: Plot and Predict

Define the plot_attention() function to plot the attention statistics.

Define a function translate() which internally calls the evaluate() function.

Restore the saved checkpoint to the model.

Call the translate() function by inputting a few sentences in French.

The actual translation is "Have you read this book?"

The actual translation is "How have you been?"

As can be seen, the predicted translations are close to the actual translations.

Conclusion

This is the final part of my series on RNNs. In this tutorial, you’ve learned what the attention mechanism is all about. You’ve learned how it improves on a general encoder-decoder sequence-to-sequence model. You’ve also trained a neural machine translation model to convert sentences from French to English. You can further tweak the model’s hyperparameters and observe how they affect its performance.

I hope you enjoyed reading this series!

Reference: TensorFlow Tutorial