Introduction

Welcome to the second article in my tutorial series where I try to explain the concept behind Genetic algorithms how they work and use them to solve fun problems, in our case we hope to be able to build a simple agent to play a tic tac toe game.

Recap

In our previous article, Getting started with the genetic algorithm, we explained what a genetic algorithm is and the simple logic behind it (maybe, not so simple). We were then able to break it down into steps which include

- Creating the population

- Selection

- Crossover

- Mutation

Doing steps 2 to 4 repeatedly helped us to create an optimal answer for the problem set we defined, which was simply recreating a phrase passed into the function. In this article, we are going up the ladder a little bit by using a genetic algorithm to optimize a neural network.

Our test or example problem

We are not going into building our tic tac toe game just yet: we need to understand the basics first and how they solve simpler problems then scale them up to bigger problems. I want this to be a tutorial series that takes individuals from beginner level to master understanding of how genetic algorithms work. To do so, we are going to solve a simple problem of adding 2 numbers together to show how a genetic algorithm can optimize a neural network.

Whats a neural Network

Neural nets or Artificial Neural Networks are simple basic computing systems inspired by the biological brain. They represent a set of logical connections made up of smaller units called neurons, which are also modeled from the biological brain. Their ability to solve simple to complex problems works by receiving information from input layer, processing them within the hidden layer(s), and passing the result as an output in the output layer. We can have multiple layers in our network (defined by the user), and every layer has both weights and biases.

Weights

Weights are numeric representations of the strength of the connection between nodes, bigger numbers show stronger connections and smaller numbers show weaker connections.

Bias

A bias can also be seen as a range of incorrectness, meaning how likely is our answer wrong, and is added to our weighted sum for every layer.

The aim for us to use the genetic algorithm here is to optimize the values of our weights and bias for our neural networks. Doing this repeatedly over multiple iterations or generations, we can adjust the values of our ANN model and make it more adaptable to solve our problem task.

We call the mathematical representation of this interaction the activation function.

output = activationFunction( input * weights + bias )

An activation function

This is a function added to the end of the layer. It takes the product of the input and weights with the addition of the bias, and decides what's passed to the next layer. It works to inform the next layer if this neuron is worth listening to by firing a large number or telling the next layer to ignore by firing a smaller number.

Optimizing out neural Network

When our neural network is created, it is initialized with random weights and biases. It is okay for them not to work well at first, as we are meant to implement optimization algorithms to be able to adjust our weights and reduce our average loss over time to improve the model. We can do this by implementing some algorithms like

- Gradient Descent

- Momentum

- Adagrad Adaptive Gradient Algorithm

- RMSProp Root Mean Squared Propagation,

The most popular of them is arguably gradient descent or stochastic gradient descent which is a method of finding global minima/maxima of defined differentiable function or loss function.

In case you want to learn more, check out some of the great breakdowns featured here in the Paperspace blog:

- Gradient Descent and Optimization In Deep Learning

- Implementing Gradient Descent in Python, Part 1: The Forward and Backward Pass

- Intro to optimization in deep learning: Gradient Descent

A genetic algorithm as an optimization algorithm

Genetic algorithms differ from conventional optimization algorithms in a few ways

- Almost all possible values of the algorithm are defined already in the initial populations, so unlike algorithms like gradient descent, we already have a finite search space (A very large finite search space).

- The genetic algorithm doesn't require too much information on the problem. It just needs an accurate fitness function to work with and select the best possible candidates for the problem. This removes the need for sourcing training data.

- Genetic algorithms are probabilistic while others are usually stochastic.

Advantages

- The genetic algorithm can avoid local maxima and reach global maxima

- Works well for real-world problems

- Able to train models that we don't have data on but we do know how they work

- Less mathematical approach

More recap🧢

What we have done so far in our previous article was to be able to build a genetic algorithm to be able to generate a phrase

We were able to optimize our population till the point where all our members were equal to our target phrase.

Using our codebase to optimize a neural network

Optimizing a neural network is almost the same principle but a different approach to solving the problem. Before we get there let us look at something we need to understand.

Elitism

Yes even software can favor treating some instances better than others. Elitism is the concept of saving our best performing instances from our population over time. This list is never cleared, and has a finite size usually much less than the population size, (i suggest 20 to 30 percent). It is only updated when we find an element that has a fitness higher than the lowest-performing element in the array, and doing this ensures that we always have the best possible candidates in the gene pool to select from at all times. Let's look at a code implementation:

amIElite(object) {

const smallest = this.elites[0]

if (this.elittSize > this.elites.length) {

this.elites.push(object)///elites not yet enough

}

else if (parseInt(object.fitness) > parseInt(smallest.fitness)) {///check if am bigger than the smallest

///bigger than this guy so am going to replace him

this.elites.shift()

this.elites.push(object)

}

this.elites = this.elites.sort((a, b) => a.fitness - b.fitness);///sort from smallest to biggest every time i add a new smallest

}What else changes?

Chromosomes

In our previous example, the chromosomes where the letters that make up our phrase. Here our chromosomes are going to be the values in our weights, lets look at an example

To achieve this we first flatten our neutral network weights, which are originally represented by an M * N * O matrix, into a flat matrix, eg

[

[3,2],

[3,3]], ===>[3,2,3,3,,5,2]

[5,2]]

]This lets us easily manipulate our data and perform fundamental operations like crossing parents and mutation.

Fitness function

Our fitness function is the critical component to the genetic algorithm, and I can't overemphasize this, and it changes to fit our current example problem.

Our example problem would be a genetic algorithm to optimize a neural net to find the sum of two numbers passed into it, a mathematical representation would be

$T = x + y$

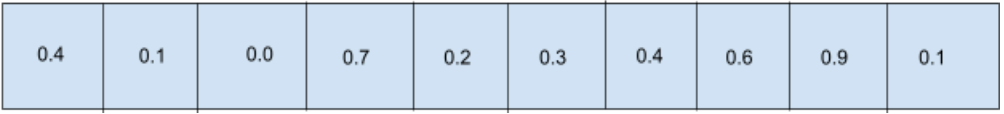

Our fitness function would be the inverse of x + y -T which is 1 / (x + y -T), lets take a look at a simple example

$X = 20$

$Y = 40$

If we predict T as 25,

Putting everything in we would have $1/ ( (20 + 40) - 25) = 1/35 = 0.02857142857$

0.02 is regarded as the accuracy or fitness of this instance of the neural network if we adjust the values a bit more and predict T as 55 we would have 0.2 as our accuracy or fitness.

Let's look at the code.

/// The code bellow calculates the fitness function of the model

/// ANN model by attempting to perform a calculation 5 times, and gets

/// the average fiteness

let fit = 0

for (let i = 0; i < 5; i++) {

const number1 = Math.floor(Math.random() * 100)

const number2 = Math.floor(Math.random() * 100)

const numberT = object.chromosomes.predict([number1, number2])[0]

////number T found

let score = (1 / (number1 + number2 - numberT)) || 1 ///if it evaluates as 0 then make it 1 do thee math u would understand y

if (score > 1) {

score = 1 //// if x + y - t eevaluates as 0.5 orr anything less than 1 theen score becomess 2 which may not bee a bad thing would ttest

}

if (score < 0) {

score = score * -1 //// acceptting negativee numbers should check

}

///multiply by 100 and parse as int ///test values between 10 and 1000

fit = fit + score

}

object.fitness = parseInt(fit/5)We actually try to predict the value 5 times, and use the total average as the fitness function for that instance of the Neural Net. This is because it may be lucky enough to calculate the value of 2 random numbers, but that does not guarantee its ability to calculate the value of another set of random numbers.

Bring this project to life with Paperspace Core!

Crossing Parents

This is similar to our previous example where we have a flat list of elements in an array, guess a random position, divide both parents at that point, and then use that position to create 2 more children.

We deconstruct the components for the layer into a flat matrix for weights and bias then we perform our crossing operations then reconstruct them again.

Now let's look at the code for the neural network we are working with

Matrix.js

The matrix.js file is used to perform matrix calculations like addition and multiplication for our neural network.

class Matrix {

///initialize our object

constructor(data) {

const {outputLayer, inputLayer,init} = data

if(init){

this.data = init

this.shape = [init.length,Array.isArray(init[0])?init[0].length: 1 ]

}

else{

this.data = Array.from(Array(inputLayer), () => new Array(outputLayer).fill(0));

this.shape = [inputLayer, outputLayer]

}

}

/*

Function to perform multiplication of a matrix

*/

multiply(matrix) {

///simple check to see if we can multiply this

if (!matrix instanceof Matrix) {

throw new Error('This is no Matrix')

}

if (this.shape[1] !== matrix.shape[0]) {

throw new Error(`Can not multiply this two matrices. the object:${JSON.stringify(this.shape)} multipleidBy:${JSON.stringify(matrix.shape)}`)

}

const newMatrice = new Matrix({

inputLayer : this.shape[0],

outputLayer: matrix.shape[1]

})

for (let i = 0; i < newMatrice.shape[0]; i++) {

for (let j = 0; j < newMatrice.shape[1]; j++) {

let sum = 0;

for (let k = 0; k < this.shape[1]; k++) {

sum += this.data[i][k] * matrix.data[k][j];

}

newMatrice.data[i][j] = sum;

}

}

return newMatrice

}

/*

Function to perform addition of a matrix

*/

add(matrix) {

const newMatrice = new Matrix({

inputLayer : this.shape[0],

outputLayer: matrix.shape[1]

})

if (!(matrix instanceof Matrix)) {

for (let i = 0; i < this.shape[0]; i++)

for (let j = 0; j < this.shape[1]; j++) {

newMatrice.data[i][j] = this.data[i][j] + matrix;

}

}

else {

for (let i = 0; i < matrix.shape[0]; i++) {

for (let j = 0; j < this.shape[1]; j++) {

newMatrice.data[i][j] = matrix.data[i][j] + this.data[i][j];

}

}

}

this.data = newMatrice.data

this.shape = newMatrice.shape

return newMatrice

}

/*

Function to perform subtraction of a matrix

*/

subtract(matrix) {

const newMatrice = new Matrix(this.shape[0], this.shape[1])

if (!(matrix instanceof Matrix)) {

for (let i = 0; i < this.shape[0]; i++)

for (let j = 0; j < this.shape[1]; j++) {

newMatrice.data[i][j] = this.data[i][j] - matrix;

}

}

else {

for (let i = 0; i < matrix.shape[0]; i++) {

for (let j = 0; j < this.shape[1]; j++) {

newMatrice.data[i][j] = matrix.data[i][j] - this.data[i][j];

}

}

}

return newMatrice

}

map(func) {

// Applys a function to every element of matrix

for (let i = 0; i < this.shape[0]; i++) {

for (let j = 0; j < this.shape[1]; j++) {

let val = this.data[i][j];

this.data[i][j] = func(val);

}

}

}

/*

Function to generate random values in the matrix

*/

randomize(){

for(let i = 0; i < this.shape[0]; i++)

for(let j = 0; j < this.shape[1]; j++)

this.data[i][j] = (Math.random()*2) - 1; //between -1 and 1

}

};

module.exports = MatrixNeuralNet.js

This file helps us create ANN models with classes, meaning we can create multiple ANN models and add to our population. Each model has its own state like weights and bias.

const Matrix = require('./matrix.js')

/*

Layerlink is our individual network layer

*/

class LayerLink {

constructor(prevNode_count, node_count) {

this.weights = new Matrix({ outputLayer: node_count, inputLayer: prevNode_count });

this.bias = new Matrix({ outputLayer: node_count, inputLayer: 1 });

this.weights.randomize()

this.bias.randomize()

this.weights.flat = this.flatenMatrix(this.weights)

this.bias.flat = this.flatenMatrix(this.bias)

}

updateWeights(weights) {

this.weights = weights;

}

getWeights() {

return this.weights;

}

getBias() {

return this.bias;

}

/*

Function to flatten matrix

*/

flatenMatrix({data}){

///flaten matrix

let flattened = []

data.forEach(dataRow => {

flattened = flattened.concat(dataRow)

});

return flattened

}

}

/*

NeuralNetwork model

*/

class NeuralNetwork {

constructor(layers, options) {

this.id = Math.random()

this.fitness = 0

this.weightsFlat = []

this.biasFlat =[]

if (layers.length < 2) {

console.error("Neural Network Needs Atleast 2 Layers To Work.");

return { layers: layers };

}

this.options = {

activation: function(x) {

return 1 / (1 + Math.exp(-x))

},

derivative: function(y) {

return (y * (1 - y));

},

relu:(x)=>{

return Math.max(0, x);

}

}

this.learning_rate = 0.1;

this.layerCount = layers.length - 1; // Ignoring Output Layer.

this.inputs = layers[0];

this.output_nodes = layers[layers.length - 1];

this.layerLink = [];

for (let i = 1, j = 0; j < (this.layerCount); i++ , j++) {

if (layers[i] <= 0) {

console.error("A Layer Needs To Have Atleast One Node (Neuron).");

return { layers: layers };

}

this.layerLink[j] = new LayerLink(layers[j], layers[i]); // Previous Layer Nodes & Current Layer Nodes

this.weightsFlat = this.weightsFlat.concat(this.layerLink[j].weights.flat)

this.biasFlat = this.biasFlat.concat(this.layerLink[j].bias.flat)

}

}

/*

Function to perform prediction with model, takes in input arrray

*/

predict(input_array) {

if (input_array.length !== this.inputs) {

throw new Error('Sorry the input can not be evaluated')

}

let result = new Matrix({ init: [input_array] })

for (let i = 0; i < this.layerLink.length; i++) {

result = result.multiply(this.layerLink[i].getWeights())

result = result.add(this.layerLink[i].getBias())

// console.log('old===> ',i,result.data[0])

const newR = result.data[0].map(this.options.relu)

// console.log('new====> ',i,newR)

result.data = [newR]

}

return result.data[0]

}

/*

Reconstructs a matrix from a flat array to a M * N arrray with the shape passed to it

*/

reconstructMatrix(data,shape){

const result = []

try {

for(let i = 0;i< shape[0];i++){

result.push(data.slice(i*shape[1], shape[1] + (i*shape[1])))

}

} catch (error) {

console.log(error,this)

throw new Error('')

}

return result

}

/*

Reconstructs the weight values from the weightsFlat matrix to match the shape of the matrix

*/

reconstructWeights(){

///reconstruct weights

let start = 0;

for (let i = 0; i < this.layerLink.length; i++) {

const layer = this.layerLink[i];

const shape = layer.weights.shape

const total = shape[0] * shape[1]

const array = this.weightsFlat.slice(start, start + total)

start = start + total

const weightMatrix = this.reconstructMatrix(array,shape)

this.layerLink[i].weights.data = weightMatrix

}

}

/*

Reconstructs the bias values from the biasFlat matrix to match the shape of the matrix

*/

reconstructBias(){

///reconstruct bias

let start = 0;

for (let i = 0; i < this.layerLink.length; i++) {

const layer = this.layerLink[i];

const shape = layer.bias.shape

const total = shape[0] * shape[1]

const array = this.biasFlat.slice(start, start + total)

start = start + total

const biasMatrix = this.reconstructMatrix(array,shape)

this.layerLink[i].bias.data = biasMatrix

}

}

}

module.exports = NeuralNetworkNow we have our custom neural network set up let's look at our new version of the genetic algorithm.

GNN.js

The Genetic algorithm file contains the genetic algorithm functions we have discussed in our current and previous article, our main entry point is the solve function that attempts to optimize the ANN models after 1000 generations.

const NeuralNetwork = require('./nn.js')

class GNN {

constructor(number,) {

this.population = []

this.genePool = []

this.numnber = number

this.mostFit = { fitness: 0 }

this.initializePopulation()

this.elittSize = 40

this.elites = []

this.soFar = {}

}

/*

Initialize a population of N amount of individuals into our geenetic algorithm

*/

initializePopulation() {

for (let i = 0; i < this.numnber; i++) this.population.push({ chromosomes: this.makeIndividual(), fitness: 0 })

}

/*

Entry point into our genetic algorithm goes through our 3 step process

*/

solve() {

for (var i = 0; i < 1000; i++) {

this.calcFitnessAll()

this.crossParents()

this.mutate()

}

}

/*

Mutate data in our flatttened weights then reconstruct them back

*/

mutate() {

this.population.forEach((object) => {

const shouldIMutateChromosome = Math.random() < 0.3

if (shouldIMutateChromosome) {

const layer = object.chromosomes

for (var j = 0; j < layer.weightsFlat.length; j++) {////going through all the layers

const shoulIMutateGene = Math.random() < 0.05

if (shoulIMutateGene) {

///mutate the item in the array

layer.weightsFlat[j] = (Math.random() * 2) - 1; //between -1 and 1

}

}

layer.reconstructWeights()

////recontruct the array

}

})

}

/*

Calcualate the fittness function of all the individuals in the model

*/

calcFitnessAll() {

this.population.forEach((object) => {

////task is to join 2 numbers

//// x + y = t

////fitness function is (1/x + y - t )basically how close it is to 1

let fit = 0

for (let i = 0; i < 5; i++) {

const number1 = Math.floor(Math.random() * 100)

const number2 = Math.floor(Math.random() * 100)

const numberT = object.chromosomes.predict([number1, number2])[0]

////number T found

let score = (1 / (number1 + number2 - numberT)) || 1 ///if it evaluates as 0 then make it 1 do thee math u would understand y

if (score > 1) {

score = 1 //// if x + y - t eevaluates as 0.5 orr anything less than 1 theen score becomess 2 which may not bee a bad thing would ttest

}

if (score < 0) {

score = score * -1 //// acceptting negativee numbers should check

}

///multiply by 100 and parse as int ///test values between 10 and 1000

fit = fit + score

}

object.fitness = parseInt(fit/5)

for (let i = 0; i < fit; i++) {

this.genePool.push(object.chromosomes)

}

this.amIElite([object].filter(()=>true)[0])

})

const getBeestElite = this.elites[39]

///run test

const number1 = Math.floor(Math.random() * 100)

const number2 = Math.floor(Math.random() * 100)

const numberT = getBeestElite.chromosomes.predict([number1, number2])[0]

console.log(`Beest item soo far numbers are ${number1} and ${number2} and the prediction is ${numberT} fittness is ${getBeestElite.fitness} `)

}

/*

Function to cheeck if our model is actually eligible to become an elite and maintians the top perfrooming models over a constant period of time

*/

amIElite(object) {

const smallest = this.elites[0]

if (this.elittSize > this.elites.length) {

this.elites.push(object)///elites not yet enough

}

else if (parseInt(object.fitness) > parseInt(smallest.fitness)) {///check if am bigger than the smallest

///bigger than this guy so am going to replace him

this.elites.shift()

this.elites.push(object)

}

this.elites = this.elites.sort((a, b) => a.fitness - b.fitness);///sort from smallest to biggest every time i add a new smallest

}

/*

Function to cross 2 rrandomly selected parents into 2 children, can be more but i dont see a need to

*/

crossParents() {

let newPopulation = [];

const pool = this.genePool

while (this.population.length - this.elites.length !== newPopulation.length) {

const parent1 = pool[Math.floor(Math.random() * pool.length)];

const parent2 = pool[Math.floor(Math.random() * pool.length)];

const newKid = this.makeIndividual()

const newKid2 = this.makeIndividual()

////select a crossSection

const items = ['', '']

const crossSection = Math.floor(Math.random() * parent1.weightsFlat.length)

////kid 1

const layerParent1 = parent1.weightsFlat.filter(() => true)

const layerParent2 = parent2.weightsFlat.filter(() => true)

const layerParent1bias = parent1.weightsFlat.filter(() => true)

const layerParent2bias = parent2.weightsFlat.filter(() => true)

const newKidWeights = layerParent1.slice(0, crossSection).concat(layerParent2.slice(crossSection, layerParent2.length))

const newKid2Weights = layerParent2.slice(0, crossSection).concat(layerParent2.slice(crossSection, layerParent1.length))

newKid.weightsFlat = newKidWeights

newKid.reconstructWeights()

newKid2.weightsFlat = newKid2Weights

newKid2.reconstructWeights()

const crossSectionBias = Math.floor(Math.random() * layerParent2bias.length)

const newKidBias = layerParent1bias.slice(0, crossSectionBias).concat(layerParent2bias.slice(crossSectionBias, layerParent2bias.length))

const newKidBias2 = layerParent2bias.slice(0, crossSectionBias).concat(layerParent1bias.slice(crossSectionBias, layerParent2bias.length))

newKid.biasFlat = newKidBias

newKid.reconstructBias()

newKid2.biasFlat = newKidBias2

newKid2.reconstructBias()

newPopulation.push({ chromosomes: newKid, fitness: 0 })

newPopulation.push({ chromosomes: newKid2, fitness: 0 })

}

newPopulation = newPopulation.concat(this.elites)///making sure we pass on elites

this.population = newPopulation

this.genePool = []///clear genepool

}

makeIndividual() {

return new NeuralNetwork([2, 8,8, 1]);

}

}

const gnn = new GNN(4000)

gnn.solve()Let's look at how these train

Beest item soo far numbers are 13 and 24 and the prediction is 25.28607491037004 fittness is 4

Beest item soo far numbers are 6 and 23 and the prediction is 26.32267394133354 fittness is 13

Beest item soo far numbers are 50 and 7 and the prediction is 62.49884171193919 fittness is 83

Beest item soo far numbers are 49 and 98 and the prediction is 146.41341907285596 fittness is 121

Beest item soo far numbers are 72 and 9 and the prediction is 83.4678176435441 fittness is 563

Beest item soo far numbers are 14 and 93 and the prediction is 106.26071461398661 fittness is 2743

Beest item soo far numbers are 86 and 18 and the prediction is 110.79668473593276 fittness is 1112

Beest item soo far numbers are 88 and 0 and the prediction is 103.38716156007081 fittness is 2739

Beest item soo far numbers are 43 and 36 and the prediction is 79.17631973457603 fittness is 1111

Beest item soo far numbers are 1 and 24 and the prediction is 24.314085857021304 fittness is 1110

Beest item soo far numbers are 34 and 20 and the prediction is 54.326947949897615 fittness is 1111

Beest item soo far numbers are 65 and 40 and the prediction is 105.26124354533928 fittness is 1112

Beest item soo far numbers are 83 and 79 and the prediction is 161.86964868039985 fittness is 1128

Beest item soo far numbers are 85 and 24 and the prediction is 112.87455742664426 fittness is 2741

Beest item soo far numbers are 95 and 22 and the prediction is 116.77612736073525 fittness is 2740

Beest item soo far numbers are 18 and 88 and the prediction is 105.35006857127684 fittness is 2747

Beest item soo far numbers are 79 and 66 and the prediction is 145.0125935541571 fittness is 1112

Beest item soo far numbers are 72 and 87 and the prediction is 158.69813186888078 fittness is 2740

Beest item soo far numbers are 25 and 23 and the prediction is 48.23237184355801 fittness is 2740

Beest item soo far numbers are 4 and 32 and the prediction is 35.98588328087144 fittness is 2743

Beest item soo far numbers are 72 and 47 and the prediction is 119.21435149343066 fittness is 2740

Beest item soo far numbers are 46 and 49 and the prediction is 95.02716823476345 fittness is 2742

Beest item soo far numbers are 28 and 55 and the prediction is 82.83801602208422 fittness is 2740

Beest item soo far numbers are 13 and 18 and the prediction is 31.22241978396226 fittness is 2741

Beest item soo far numbers are 18 and 48 and the prediction is 65.86628819582671 fittness is 2742

Beest item soo far numbers are 83 and 50 and the prediction is 133.2439079081985 fittness is 2740

Beest item soo far numbers are 5 and 17 and the prediction is 22.305395227971694 fittness is 54194

Beest item soo far numbers are 81 and 97 and the prediction is 179.5644485790281 fittness is 54237 We can see the training progress of our model and how the neural network was completely wrong on the first try but was able to be optimized over time to solve our simple addition problem.

Conclusion

We went over the basics of neural networks and we were able to understand how genetic algorithms can be used to optimize a neural network, in our next article we would be looking at the codebase and see how to implement our concept into working code.