Introduction to Part 2

In the second part of a six-part series, we will show how to go from a raw dataset to a suitable model training set using TensorFlow 2 and Gradient notebooks.

Series parts

Part 1: Posing a business problem

Part 2: Preparing the data

Part 3: Building a TensorFlow model

Part 4: Tuning the model for best performance

Part 5: Deploying the model into production

Part 6: Summary, conclusions, and next steps

Accompanying material

- The main location for accompanying material to this blog series is the GitHub repository at https://github.com/gradient-ai/Deep-Learning-Recommender-TF.

- This contains the notebook for the project, deep_learning_recommender_tf.ipynb, which can be run in Gradient Notebooks or the JupyterLab interface, and 3 files for the Gradient Workflow: workflow_train_model.py, workflow-train-model.yaml, and workflow-deploy-model.yaml.

- The repo is designed to be used and followed along without having to refer to the blog series, and vice versa, but they complement each other.

NOTE

Model deployment support in the Gradient product on public clusters and from Workflows is currently pending, expected in 2021 Q4. Therefore, section 5 of the notebook deep_learning_recommender_tf.ipynb, on model deployment, is shown but will not yet run.

The role of data preparation

It is well-known that no machine learning model can solve a problem if the data are insufficient. Just as importantly, even sufficient data must be prepared correctly before they are suitable for passing to an ML model.

Too much online content neglects the reality of data preparation. A recommended paradigm for enterprise ML is "do a pilot, not a PoC," which means setting up something simple that runs all the way through end-to-end, then going back to refine the details.

Because Gradient makes it easy to deploy models, it encourages us to keep model deployment and production in mind right from the start. In data preparation, this means spotting issues that might otherwise not come to light until later, and indeed we'll see an example of this below.

Gradient encourages a way of working where entities are versioned. Using tools like Git repos, YAML files, and others, we may counteract the natural tendency of the data scientist to plunge forward into an ad hoc order of operations that is difficult to reproduce.

The question of versioning not just the models and code, but the data itself, is also important. Git handles such versioning well only for small data. Because the data in this series remain relatively small, we leave full consideration of this limitation to future work.

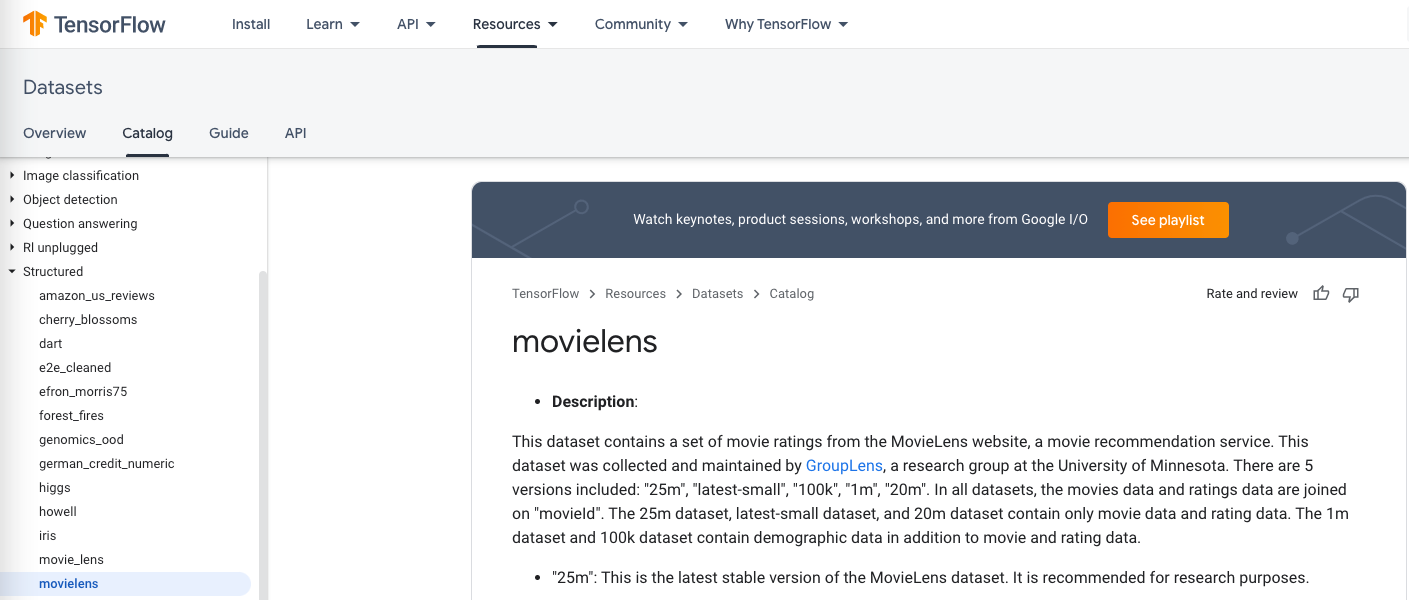

MovieLens dataset

So while we won't start this series with a 100% typical business scenario such as a petascale data lake containing millions of unstructured raw files in multiple formats that lack a schema (or even a contact person to explain them), we do use data that has been widely used in ML research.

The dataset is especially useful because it contains a number of characteristics typical of real-world enterprise datasets.

The MovieLens dataset has information about movies, users, and the users' ratings of the movies. For new users, we would like to be able to recommend new movies that they are likely to watch and enjoy.

We will first select candidate movies using a retrieval model, then predict the users' ratings of those candidates with a ranking model.

The top-predicted rated movies will then be our recommendations.
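The two-stage retrieve-then-rank flow can be illustrated with a toy sketch. Everything in it is hypothetical: the 2-dimensional embeddings, the movie names, and the lookup table standing in for a ranking model are made up for illustration and are not the models built later in the series. Retrieval cheaply narrows the catalog to a shortlist by a dot-product score; ranking then orders only that shortlist by predicted rating.

```python
# Toy two-stage recommender: retrieval narrows the catalog, ranking orders the shortlist.
# All embeddings and the rating function below are hypothetical stand-ins for trained models.

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def retrieve(user_vec, movie_vecs, k):
    """Retrieval stage: return the k titles whose embeddings score highest against the user."""
    scored = sorted(movie_vecs, key=lambda title: dot(user_vec, movie_vecs[title]), reverse=True)
    return scored[:k]

def rank(user_id, candidates, predict_rating):
    """Ranking stage: order the shortlist by predicted rating, best first."""
    return sorted(candidates, key=lambda title: predict_rating(user_id, title), reverse=True)

user_vec = [1.0, 0.0]
movie_vecs = {
    "Movie A": [0.9, 0.1],
    "Movie B": [0.2, 0.8],
    "Movie C": [0.7, 0.3],
    "Movie D": [0.1, 0.9],
}
# Hypothetical ranking model: a lookup table of predicted ratings for user "138"
predicted = {"Movie A": 3.5, "Movie C": 4.2}

candidates = retrieve(user_vec, movie_vecs, k=2)
recommendations = rank("138", candidates, lambda user, title: predicted[title])
print(candidates, recommendations)
```

The split matters at scale: the expensive ranking model only ever scores the handful of candidates that retrieval lets through, rather than the whole catalog.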

The first couple of rows of the initial data are as follows:

```python
{'bucketized_user_age': 45.0,
 'movie_genres': array([7]),
 'movie_id': b'357',
 'movie_title': b"One Flew Over the Cuckoo's Nest (1975)",
 'raw_user_age': 46.0,
 'timestamp': 879024327,
 'user_gender': True,
 'user_id': b'138',
 'user_occupation_label': 4,
 'user_occupation_text': b'doctor',
 'user_rating': 4.0,
 'user_zip_code': b'53211'}

{'bucketized_user_age': 25.0,
 'movie_genres': array([ 4, 14]),
 'movie_id': b'709',
 'movie_title': b'Strictly Ballroom (1992)',
 'raw_user_age': 32.0,
 'timestamp': 875654590,
 'user_gender': True,
 'user_id': b'92',
 'user_occupation_label': 5,
 'user_occupation_text': b'entertainment',
 'user_rating': 2.0,
 'user_zip_code': b'80525'}
```

We see that most of the columns appear self-explanatory, although some are not obvious. The data are divided into movie information, user information, and the outcome of watching the movie, e.g., when it was watched and the user's rating.

We also see various issues with the data that need to be resolved during preparation:

- Each row is a dictionary, so we will need to extract items by key, or otherwise access them correctly, to do the prep.

- The target column is not differentiated from the feature columns. We therefore have to use context and our domain knowledge to see that the target column is user_rating, and that none of the other columns are cheat variables. A cheat variable here would be information that is not available until after a user has watched a movie, and could therefore not be used when recommending a movie that the user has not yet watched. (1)

- Some of the data columns are byte strings (e.g., b'357') rather than regular Unicode strings. This will fail if the model is deployed as a REST API and the data are converted to JSON, because JSON text must be Unicode (in practice UTF-8) and byte strings cannot be serialized to JSON directly. So we will need to account for this.

- The timestamps are not suitable for ML as given. A column of unique or almost-unique continuous values gives the model little that it can generalize from. If, however, we can featurize these values into something recurring, like time of day, day of week, season of year, or holiday, they could become much more valuable.

- Because there are timestamps, and user viewing habits can change over time, considerations for dealing with time series apply, and it is better to split the data into training, validation, and testing sets that do not overlap in time. Failing to do this lets information from the future leak into training, which breaks the assumption that training rows are independent of the rows we evaluate on, and can lead to spuriously high measured performance or overfitting.
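The byte-string issue is easy to reproduce with Python's standard json module. The sketch below uses a hand-copied subset of the first sample row and a hypothetical helper, to_json_safe, that decodes bytes values as UTF-8; it is only an illustration of the problem, and in the real pipeline the decoding could equally happen in TensorFlow or at the API boundary.

```python
import json

# A row shaped like the first MovieLens sample above, with byte-string fields
row = {
    'movie_id': b'357',
    'movie_title': b"One Flew Over the Cuckoo's Nest (1975)",
    'user_id': b'138',
    'user_rating': 4.0,
}

def to_json_safe(record):
    """Hypothetical helper: decode any bytes values as UTF-8 so the record is JSON-serializable."""
    return {key: value.decode('utf-8') if isinstance(value, bytes) else value
            for key, value in record.items()}

try:
    json.dumps(row)
except TypeError as err:
    # json.dumps raises TypeError because bytes are not JSON serializable
    print('Raw row fails:', err)

payload = json.dumps(to_json_safe(row))
print(payload)
```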

Obviously there are many other questions that could be asked and answered:

- Are there missing or other bad/out-of-range values?

- What do the genres mean?

- Are the movie IDs unique, and do they correspond 1:1 with movie titles?

- What does a user_gender value of True mean?

- Are the user IDs unique?

- What are the occupation labels?

- Why are the ratings floats rather than integers?

- Do all rows have the same number of columns?
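A few of these questions can be answered mechanically. The sketch below runs three quick checks on a hand-copied subset of the two sample rows (bytes decoded for readability): columns missing from some rows, whether movie_id and movie_title map 1:1, and out-of-range ratings, assuming ratings should lie on a 1 to 5 scale. The helper names are hypothetical; on the full dataset the same checks would run over all rows.

```python
from collections import defaultdict

# Hand-copied subset of the two sample rows shown above (bytes decoded for readability)
rows = [
    {'movie_id': '357', 'movie_title': "One Flew Over the Cuckoo's Nest (1975)",
     'user_id': '138', 'user_rating': 4.0},
    {'movie_id': '709', 'movie_title': 'Strictly Ballroom (1992)',
     'user_id': '92', 'user_rating': 2.0},
]

def missing_fields(rows):
    """Return the set of keys that are absent from at least one row."""
    all_keys = set().union(*(row.keys() for row in rows))
    return {key for key in all_keys for row in rows if key not in row}

def one_to_one(rows, left, right):
    """True if each `left` value maps to exactly one `right` value and vice versa."""
    forward, backward = defaultdict(set), defaultdict(set)
    for row in rows:
        forward[row[left]].add(row[right])
        backward[row[right]].add(row[left])
    return (all(len(v) == 1 for v in forward.values())
            and all(len(v) == 1 for v in backward.values()))

def out_of_range(rows, key, low, high):
    """Return rows whose `key` value falls outside [low, high]."""
    return [row for row in rows if not low <= row[key] <= high]

print(missing_fields(rows))  # set() when every row has every column
print(one_to_one(rows, 'movie_id', 'movie_title'))
print(out_of_range(rows, 'user_rating', 1.0, 5.0))
```

None of these replace a conversation with the data originators; they only flag where such a conversation is needed.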

In a real business scenario, we would ideally like to discuss with the data originators what the columns mean and check that everyone is on the same page. We might also use various plots, exploratory data analysis (EDA) tools, and apply any other domain knowledge at our disposal.

In the case here, the MovieLens data are already well-known. And while one shouldn't assume that this makes them sensible or even coherent for the task, we don't need to spend the time on the extensive EDA that would be done in a full-scale project.

(1) There is in fact a subtlety here regarding "information available." Aside from user_rating, the timestamp recording when the user viewed the movie is also not available beforehand. However, unlike the rating, the current time at which the recommendation is being made is available, so knowledge such as "this user is more likely to watch certain kinds of movies on weekend evenings" can be used without it being cheating.
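Since the current time is known at recommendation time, raw timestamps can be turned into recurring features like those mentioned above. The sketch below uses only the standard datetime module and a hypothetical helper, time_features. It assumes the timestamps are Unix seconds and interprets them in UTC, because the users' local time zones are not part of the columns shown; holiday flags would need an external calendar and are left out.

```python
from datetime import datetime, timezone

def time_features(unix_seconds):
    """Hypothetical helper: turn a Unix timestamp into recurring features.

    Assumes seconds since the epoch, interpreted in UTC (users' local
    time zones are not available in the sample columns).
    """
    dt = datetime.fromtimestamp(unix_seconds, tz=timezone.utc)
    return {
        'hour_of_day': dt.hour,            # 0-23
        'day_of_week': dt.weekday(),       # Monday=0 ... Sunday=6
        'is_weekend': dt.weekday() >= 5,   # Saturday or Sunday
        'month': dt.month,                 # 1-12, a rough proxy for season
    }

# Timestamps from the two sample rows above
for ts in (879024327, 875654590):
    print(ts, time_features(ts))
```

Unlike the raw timestamp, each of these values recurs across many rows, so a model can learn patterns such as weekend-evening viewing from them.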

Preparing the data

TensorFlow has various modules that assist with data preparation. The MovieLens data are not large enough to require other tools such as Spark, but of course this is not always the case.

In full-scale production recommender systems, it is common to require further tools to deal with larger-than-memory data. There could realistically be billions of rows available from a large user base spread over time.

The data are loaded into TensorFlow 2 from the movielens/ directory of the official TensorFlow Datasets repository. Gradient can also connect to other data sources, such as Amazon S3.

```python
import tensorflow_datasets as tfds

...

ratings_raw = tfds.load('movielens/100k-ratings', split='train')
```

They are returned as a TensorFlow PrefetchDataset. We can then use a Python lambda function and TensorFlow's .map to select the columns that we will be using to build our model.

For this series, that is just movie_title, timestamp, user_id, and user_rating.

```python
ratings = ratings_raw.map(lambda x: {
    'movie_title': x['movie_title'],
    'timestamp': x['timestamp'],
    'user_id': x['user_id'],
    'user_rating': x['user_rating']
})
```

TensorFlow interoperates with NumPy, so we can combine tf.data's .batch and .as_numpy_iterator with NumPy's .concatenate, .min, and .max to extract the timestamps and create training, validation, and testing sets that do not overlap in time. Nice and simple! 😀

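```python
import numpy as np
import tensorflow as tf

# Collect every timestamp into one NumPy array, 100 rows at a time
timestamps = np.concatenate(list(ratings.map(lambda x: x['timestamp']).batch(100).as_numpy_iterator()))

max_time = timestamps.max()

...

# 60% of the way through the time range (not a percentile of the rows)
sixtieth_percentile = min_time + 0.6*(max_time - min_time)

...

train = ratings.filter(lambda x: x['timestamp'] <= sixtieth_percentile)

# Combine tensor conditions with tf.logical_and; Python's `and` is not reliable on tensors in tf.data functions
validation = ratings.filter(lambda x: tf.logical_and(x['timestamp'] > sixtieth_percentile, x['timestamp'] <= eightieth_percentile))

test = ratings.filter(lambda x: x['timestamp'] > eightieth_percentile)
```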
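The same time-range split can be written framework-free, which makes the boundary conditions easy to check. This is a plain-Python sketch on hypothetical, evenly spaced timestamps, with a hypothetical helper name (time_range_split); it mirrors the 60% and 80% cut points above but is not the notebook's code.

```python
def time_range_split(rows, train_frac=0.6, val_frac=0.2):
    """Hypothetical helper: split rows by fractions of the *time range*.

    Cut points sit at 60% and 80% of the way from the earliest to the latest
    timestamp, so the three splits cannot overlap in time.
    """
    times = [row['timestamp'] for row in rows]
    min_time, max_time = min(times), max(times)
    t_train = min_time + train_frac * (max_time - min_time)
    t_val = min_time + (train_frac + val_frac) * (max_time - min_time)
    train_rows = [r for r in rows if r['timestamp'] <= t_train]
    val_rows = [r for r in rows if t_train < r['timestamp'] <= t_val]
    test_rows = [r for r in rows if r['timestamp'] > t_val]
    return train_rows, val_rows, test_rows

# Hypothetical ratings with evenly spread timestamps 0..999
rows = [{'timestamp': t, 'user_rating': t % 5 + 1} for t in range(1000)]
train_rows, val_rows, test_rows = time_range_split(rows)

# Every training row precedes every validation row, which precedes every test row
assert max(r['timestamp'] for r in train_rows) < min(r['timestamp'] for r in val_rows)
assert max(r['timestamp'] for r in val_rows) < min(r['timestamp'] for r in test_rows)
print(len(train_rows), len(val_rows), len(test_rows))
```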

We then shuffle the data within each set, since ML models assume that the rows are IID (independent and identically distributed), and our data appear to be originally ordered by time.

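```python
# ntimes_tr is defined in the notebook; presumably the number of training rows,
# so that the shuffle buffer covers the whole training set
train = train.shuffle(ntimes_tr)

...
```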
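The buffer size passed to shuffle matters. The tf.data documentation describes shuffle as filling a buffer with buffer_size elements and randomly sampling from it, replacing each selected element with the next one from the input; a full shuffle needs a buffer at least as large as the dataset. The plain-Python simulation below (hypothetical helper buffered_shuffle, not TensorFlow's implementation) shows why: with a small buffer, time-ordered rows can only move a few places earlier, so the output stays roughly in time order.

```python
import random

def buffered_shuffle(items, buffer_size, seed=None):
    """Hypothetical simulation of a fixed-size shuffle buffer.

    The buffer holds the first `buffer_size` items; each output is drawn at
    random from the buffer and its slot is refilled with the next input item.
    """
    rng = random.Random(seed)
    it = iter(items)
    buffer = [x for _, x in zip(range(buffer_size), it)]
    out = []
    for x in it:
        i = rng.randrange(len(buffer))
        out.append(buffer[i])
        buffer[i] = x
    rng.shuffle(buffer)  # drain the remaining buffer in random order
    out.extend(buffer)
    return out

ordered = list(range(20))  # stands in for rows sorted by timestamp
print(buffered_shuffle(ordered, buffer_size=1, seed=0))   # identical to the input order
print(buffered_shuffle(ordered, buffer_size=3, seed=0))   # items move at most 2 places earlier
print(buffered_shuffle(ordered, buffer_size=20, seed=0))  # any permutation is possible
```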

Finally, we obtain lists of the unique movie titles and user IDs that the recommender model needs.

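```python
# A single batch large enough to hold every row, mapped down to the titles
movie_titles = ratings.batch(1_000_000).map(lambda x: x['movie_title'])

...

# np.unique returns the distinct titles in sorted order
unique_movie_titles = np.unique(np.concatenate(list(movie_titles)))

...
```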
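Unique-value lists like these typically serve as vocabularies that map each title or user ID to an integer index, which an embedding layer can then consume; in TensorFlow Recommenders they are usually handed to a lookup layer. The plain-Python sketch below illustrates the idea on hypothetical titles. Reserving index 0 for unseen titles is an assumption of this sketch, mirroring a common convention, not something this post specifies.

```python
# Hypothetical titles, including a duplicate, as they might arrive from the batched map above
titles = [b"Strictly Ballroom (1992)", b"One Flew Over the Cuckoo's Nest (1975)",
          b"Strictly Ballroom (1992)"]

# Like np.unique, sorted(set(...)) returns the distinct values in sorted order
unique_titles = sorted(set(titles))

# Map each title to an integer index, reserving 0 for titles not seen in the data
# (the reserved index is an assumption of this sketch)
title_to_index = {title: i + 1 for i, title in enumerate(unique_titles)}

def lookup(title):
    """Return the title's index, or 0 for an unknown title."""
    return title_to_index.get(title, 0)

print(unique_titles)
print(lookup(b"Strictly Ballroom (1992)"), lookup(b"Unknown Movie (2000)"))
```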

There are some further adjustments, described in the notebook, that are needed to get everything to work together. These include things like getting len() to work on the FilterDataset returned by .filter(), whose number of rows TensorFlow cannot know without iterating over it. These should be self-explanatory in the notebook, as they basically amount to figuring out how to use TensorFlow to get done what is needed.
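Python's own built-in filter shows the same behavior in miniature: it is lazy, so it cannot report a length until it has been iterated. The sketch below uses hypothetical rows to show the failure and the usual fix of counting with a full pass. In TensorFlow, one common option is counting with Dataset.reduce (for example `dataset.reduce(0, lambda count, _: count + 1)`), and once the count is known, tf.data.experimental.assert_cardinality can attach it so len() works; we can't tell from the post which approach the notebook takes.

```python
ratings_rows = [{'timestamp': t} for t in range(10)]  # hypothetical rows

# A lazy filter, like tf.data's FilterDataset, cannot report its length up front
early = filter(lambda r: r['timestamp'] < 6, ratings_rows)
try:
    len(early)
except TypeError as err:
    print('len() fails:', err)

# Counting requires a full pass over the data (and consumes a one-shot iterator)
n_early = sum(1 for _ in filter(lambda r: r['timestamp'] < 6, ratings_rows))
print(n_early)
```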

Notice also that we assumed that cutting at 60% and 80% of the time range yields roughly 60%, 20%, and 20% of the rows, i.e., that ratings are spread fairly evenly over time. This is typical of the sort of assumptions made during data prep that are not necessarily apparent from simply reading the code. (In this case the numbers are consistent, as shown in the notebook.)
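The assumption can be checked directly by counting rows on each side of the cut points. The sketch below uses deliberately skewed, hypothetical timestamps (sparse early, dense late) to show how a time-range split can drift far from 60/20/20, and then shows an alternative: cutting at the 60th and 80th percentiles of the timestamps themselves, via Python's statistics.quantiles. The quantile-based cut is our suggestion for illustration, not what the notebook does; it keeps the splits non-overlapping in time while fixing the row proportions.

```python
import statistics

def split_fractions(times, t_train, t_val):
    """Fraction of rows falling into train, validation, and test for the given cut points."""
    n = len(times)
    train = sum(t <= t_train for t in times)
    validation = sum(t_train < t <= t_val for t in times)
    return train / n, validation / n, (n - train - validation) / n

# Hypothetical timestamps: activity ramps up late, so ratings are NOT evenly spread in time
times = list(range(0, 600, 10)) + list(range(600, 1000))

first, last = min(times), max(times)
range_cuts = (first + 0.6 * (last - first), first + 0.8 * (last - first))
print('time-range cuts:', split_fractions(times, *range_cuts))

# Alternative: cut at the 60th and 80th percentiles of the timestamps themselves
q = statistics.quantiles(times, n=5)  # cut points at 20%, 40%, 60%, 80%
print('quantile cuts:', split_fractions(times, q[2], q[3]))
```

On this skewed data the time-range split puts only about 13% of rows in training, while the quantile cuts give 60/20/20.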

The rest of the input data processing is done within the model, as shown in Part 3.

Next

In Part 3 of the series, Building a TensorFlow model, we will use the TensorFlow Recommenders library to build a basic recommender model and train it on the data prepared above.