Introduction to the series

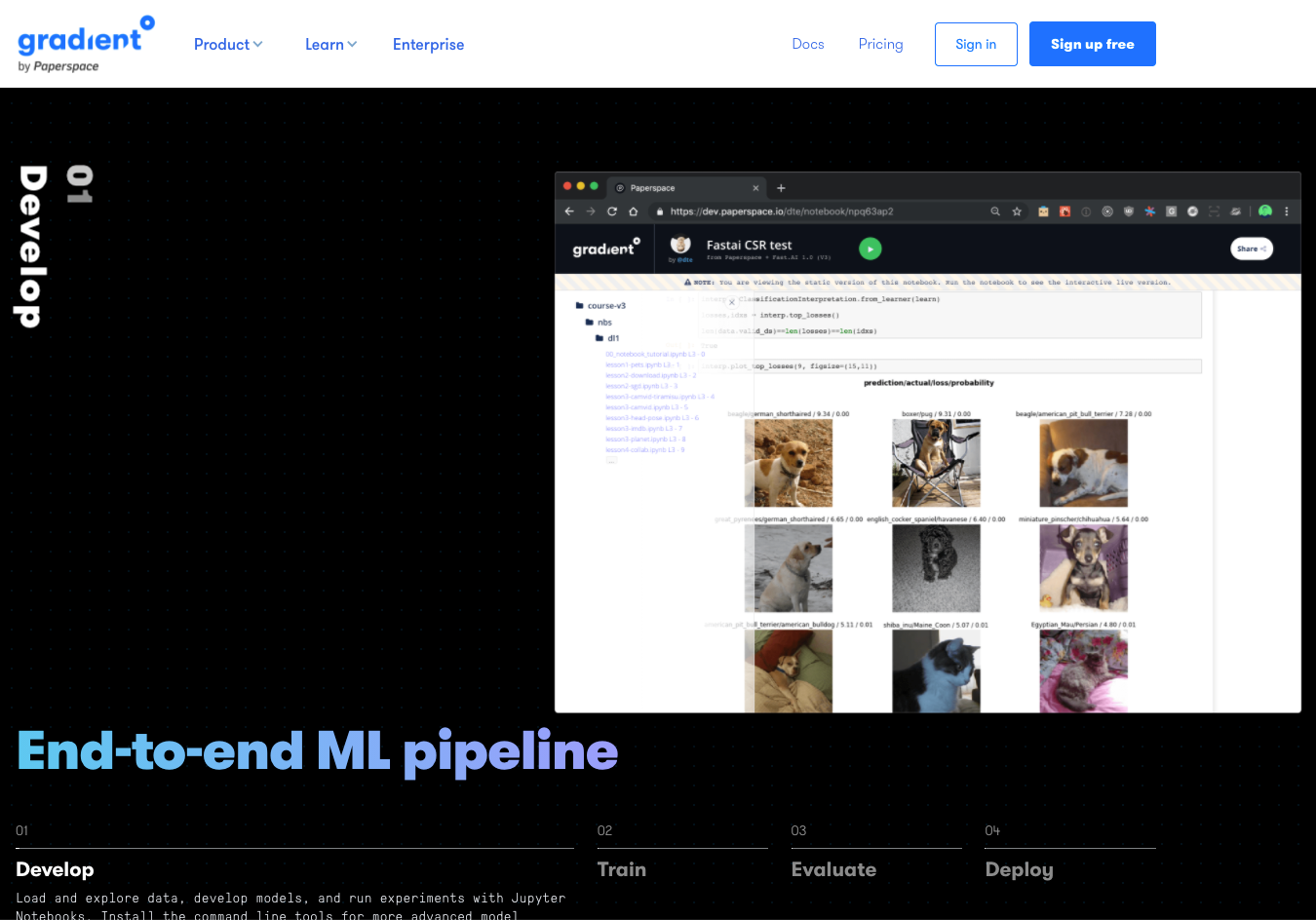

For many organizations, a major question faced by data scientists and engineers is how best to go from the experimentation stage to production. Gradient Notebooks and Gradient Workflows each offer functionality on both sides of this divide.

This blog series demonstrates the use of Gradient Notebooks and Workflows as part of an end-to-end project. Along the way, we'll explain each step in detail and we'll make the material accessible to data scientists, ML engineers, and other technical readers alike.

In this first part of six, we outline the most important part of any enterprise data science analysis – posing a business problem. Let's get started.

Series parts

Part 1: Posing a business problem

Part 2: Preparing the data

Part 3: Building a TensorFlow model

Part 4: Tuning the model for best performance

Part 5: Deploying the model into production

Part 6: Summary, conclusions, and next steps

Accompanying material

- The main location for accompanying material to this blog series is the GitHub repository at https://github.com/gradient-ai/Deep-Learning-Recommender-TF

- This contains the notebook for the project,

deep_learning_recommender_tf.ipynb, which can be run in Gradient Notebooks or the JupyterLab interface, and 3 files for the Gradient Workflow:workflow_train_model.py,workflow-train-model.yamlandworkflow-deploy-model.yaml. - The repo is designed to be able to be used and followed along without having to refer to the blog series, and vice versa, but they compliment each other.

Note

Model deployment support in the Gradient product on public clusters and from Workflows is currently pending, expected in 2021 Q4. Therefore section 5 of the Notebook deep_learning_recommender_tf.ipynb on model deployment is shown but will not yet run.

Introduction to Part 1

The outputs of recommender systems are now a common feature of the online world. Many of us are familiar with how sites like Amazon encourage you to buy an extra item to compliment the items in your cart, or how YouTube suggests you watch just one more video before going elsewhere.

We're also familiar with when they don't work so well. If you buy a set of Allen wrenches from Amazon, for example, Amazon may continue to show you Allen wrenches for some time despite the fact that you just bought a set. Or if you book a hotel on a road trip, you may still be receiving offers for the town that you departed weeks later. Examples like this might have some statistical validity in the sense that you're more interested in these items than a random user, but they are not capturing the empirical common sense that a human would have.

So while recommender systems have gotten much better, there is still room for improvement to capture the full complexity of what users want. This is especially true outside of the few top technology companies where teams tend to have fewer data scientists and engineers on staff.

For many businesses, adding a well-performing recommender model to the technology stack has potential to improve revenue substantially.

In this 6-part blog series, we aim to show both how deep learning can improve the results of a recommender and how Paperspace Gradient makes it easier to build such a system.

In particular we're going to focus on the full lifecycle of building such a recommender – from the experimentation stage of writing notebooks and training models through to the production stage of deploying models and realizing business value.

Series highlights

- Demonstrate a real-world-style example of machine learning on Gradient

- Incorporate an end-to-end dataflow with Gradient Notebooks and Workflows

- Use modern data science methodology based on Gradient's integrations with Git

- Use TensorFlow 2 and TensorFlow Recommenders (TFRS) to train a recommender model that includes deep learning

- Use training data that reflects real-world project variables and realities

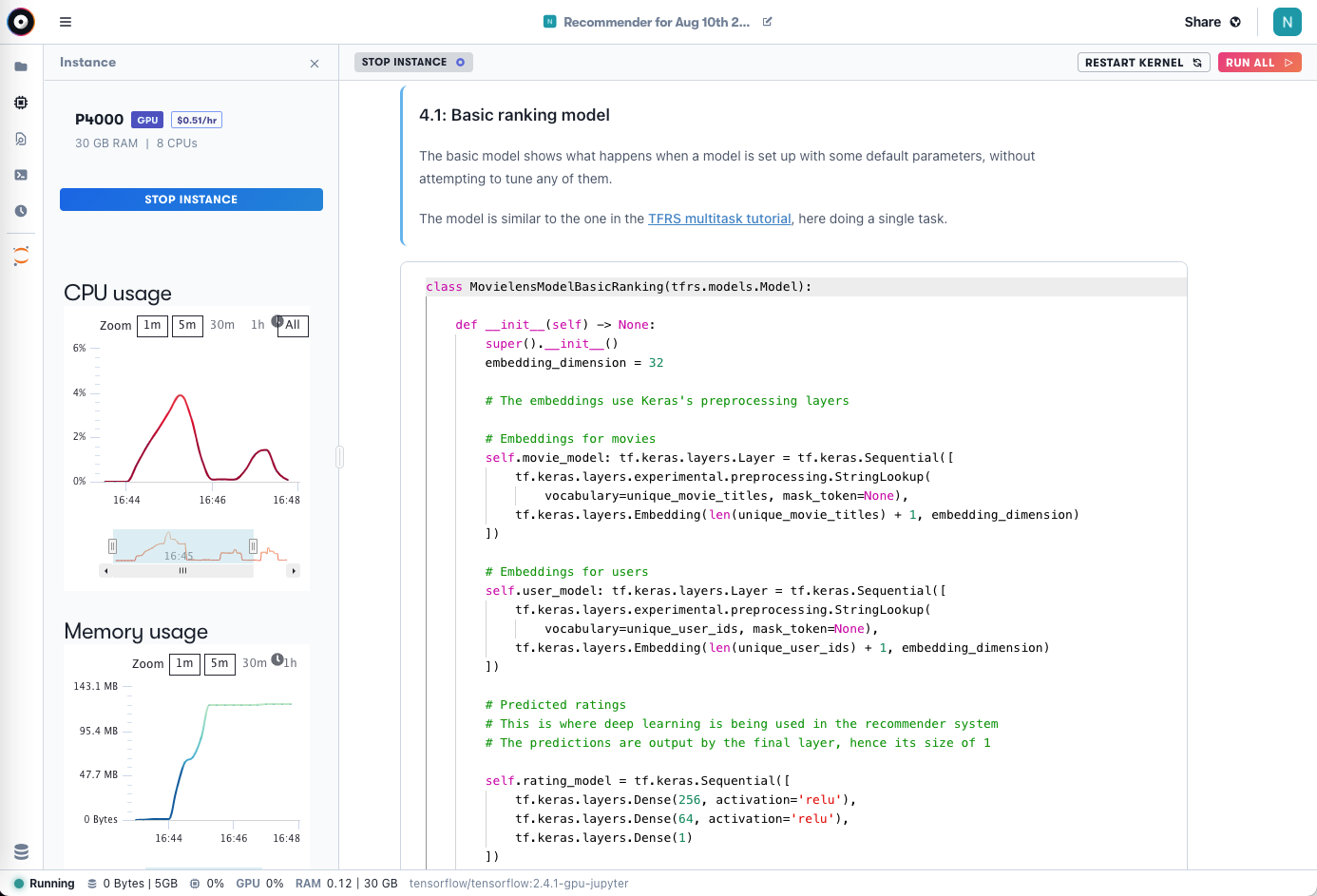

- Construct a custom model using the full TensorFlow subclassing API

- Show working hyperparameter tuning that improves the results

- Deploy model using Gradient Deployments and its TensorFlow Serving integrations

- Include a self-contained working Jupyter notebook and Git repository

- Make it relatable to a broad audience including ML engineers, data scientists, and those somewhere in between

Understanding the business problem

As is often stated but not always practiced, data science should start with the business problem to be solved.

There are simply too many possible approaches at each step of the process (data, feature engineering, algorithms, performance metrics, etc.) to explore without specific criteria in mind.

It is also the case that "build a better classifier" (or recommender in this case) is not a business problem. It's a statement of part of the solution. The business problem needs to be given in terms that describe direct value for the business.

An example of this, from a talk based on the book Thinking with Data, is as follows:

Not a business problem scope: We will use R to create a logistic regression to predict who will quit using the product.

Business problem scope: Context = Company has subscription model. CEO wants to know if we can target users who are likely to drop off with deals. Needs = Want to understand who drops off early enough so that we can intervene. Vision = Predictive model that uses behavioral data to predict who will drop out, identifying them quickly enough to be useful. Outcome = Software team will implement the model in a batch process to run daily, automatically sending out offers. Calculate precision and recall using held out users, send weekly email.

It's not uncommon for scope to be even longer than this – the key part is to understand the outcomes in measurable terms.

For this project, our business problem is as follows:

Demonstrate that Paperspace Gradient Notebooks and Workflows can be used for solving real machine learning problems. Do this by showing an end-to-end solution from raw data to production deployment. Further show that what is demonstrated can be plausibly built up into a full enterprise-grade system. In this case, the model is a recommender system whose results are improved by utilizing a tuned deep learning component.

Now let's move on to talk about recommender systems! We'll discuss in particular the ideas embodied by the classical approaches and how they change when deep learning is added. We will then see how Gradient helps bring these approaches to life.

Classical recommender systems

The labeling of any method of machine learning (ML) that is not deep learning as "classical" can be misleading.

In fact, "classical" methods such as gradient boosted decision trees can solve arbitrarily complex nonlinear problems just as deep learning can – and for many business problems these methods are more appropriate due to their retention of human-readable data features and less compute-intensive training times.

So by "classical" here we mean methods that utilize the (perhaps similarly under-appreciated) classical statistical methods as opposed to trained ML models. These form a baseline over which the ML model can improve.

Here is a common task that a recommender model will try to achieve:

Present suggested new items to a user that similar users have already liked

A common setup to do this consists of using a retrieval model and a ranking model. In this setup, the retrieval model selects a set of candidate items to recommend from the full inventory, then the ranking model refines the selection and orders the recommendations on the smaller dataset.

The classical statistics portion in recommendation is to use collaborative filtering, where we have:

- The users' preferences, either explicit such as ratings or implicit such as views or purchases

- A measure of distance between each user to find the users that are most similar to them

- Recommend items to a user that the users similar to them liked

These work, but are limited in accuracy because they do not use all of the information available in a dataset, e.g., distances may only be represented in a matrix or low-dimensional tensor, such as user-user (similar ratings) or item-item (similar items bought).

Even if user features such as demographics are added to the calculation (context-aware), those that do not translate directly into obvious differences in distance, such as feature interactions, would not necessarily be captured.

Addition of deep learning

In the same way that ML is able to improve upon classical statistics in areas where complex nonlinear mappings represent the best solution to a problem, ML can improve recommenders too.

Adding ML to a recommender enables:

- More features to be captured

- More types of features to be used, such as many columns of user or item information and not just distances

- Nonlinear combinations of these features to be found by the model

- More fine-grained and personalized recommendations for each individual user

- Mitigation of the "cold start" problem of what to do with a new user or item

In this series, we focus on adding some deep learning layers to a recommender ranking model which improves its predictions of how much the user will like the recommended items.

The current state of the art is represented by setups like Facebook's deep learning recommendation model (DLRM). This is a hybrid approach of collaborative filtering and deep learning that learns lower order feature interactions but limits higher order ones to those that add performance without too much cost in compute resources.

While we do not build a system as sophisticated as this, we do aim to demonstrate the foundation of functionality that Gradient provides to build such production systems.

Implementation in Gradient

In the next part of the series, we will begin to implement the model in Paperspace Gradient using TensorFlow 2 and the TensorFlow Recommenders library. We will then deploy the model using Gradient's integration of TensorFlow Serving.

We'll show usage of Gradient Notebooks, including how to do data preparation and model training, and then we demonstrate how to create a production setup that performs model training and deployment in Gradient Workflows.

Next

In Part 2 of the series - Preparing the data, we will show how to go from a raw dataset to a suitable model training set, using TensorFlow 2 and Gradient notebooks.