Introduction to Part 3

In the third part of this six-part series, we will use the TensorFlow Recommenders library to build a basic recommender model and train it on the data we prepared in Part 2.

Series parts

Part 1: Posing a business problem

Part 2: Preparing the data

Part 3: Building a TensorFlow model

Part 4: Tuning the model for best performance

Part 5: Deploying the model into production

Part 6: Summary, conclusions, and next steps

Accompanying material

- The main location for accompanying material to this blog series is the GitHub repository at https://github.com/gradient-ai/Deep-Learning-Recommender-TF .

- This contains the notebook for the project, deep_learning_recommender_tf.ipynb, which can be run in Gradient Notebooks or the JupyterLab interface, and 3 files for the Gradient Workflow: workflow_train_model.py, workflow-train-model.yaml and workflow-deploy-model.yaml.

- The repo is designed so that it can be used and followed without referring to the blog series, and vice versa, but they complement each other.

NOTE

Model deployment support in the Gradient product on public clusters and from Workflows is currently pending, expected in 2021 Q4. Therefore section 5 of the Notebook deep_learning_recommender_tf.ipynb on model deployment is shown but will not yet run.

Recommender models

Now that we have suitable data (from Part 2) to pass to the model, we can go ahead and build our basic recommender using TensorFlow.

The simplest way to define a TensorFlow model, especially in TensorFlow 2, which is much easier to use than version 1, is the Keras Sequential API. This allows specification of the model's layers, usually those of a neural network, and their hyperparameters.

Some more complex models, such as those with non-sequential arrangements of layers, can be specified with the functional API.

Recommender systems do not follow the standard supervised setup because they are outputting an ordered list of possibly several recommendations, not just a single prediction or unordered classification probabilities. They therefore require the most general TensorFlow setup which uses the subclassing API. Models are written as classes and the user has the most control over how they are set up.

Real business problems will often require some custom component in the ML model that cannot be represented using the simpler interfaces. This means that the full class formulation must be used to gain the requisite generality and control of the process.

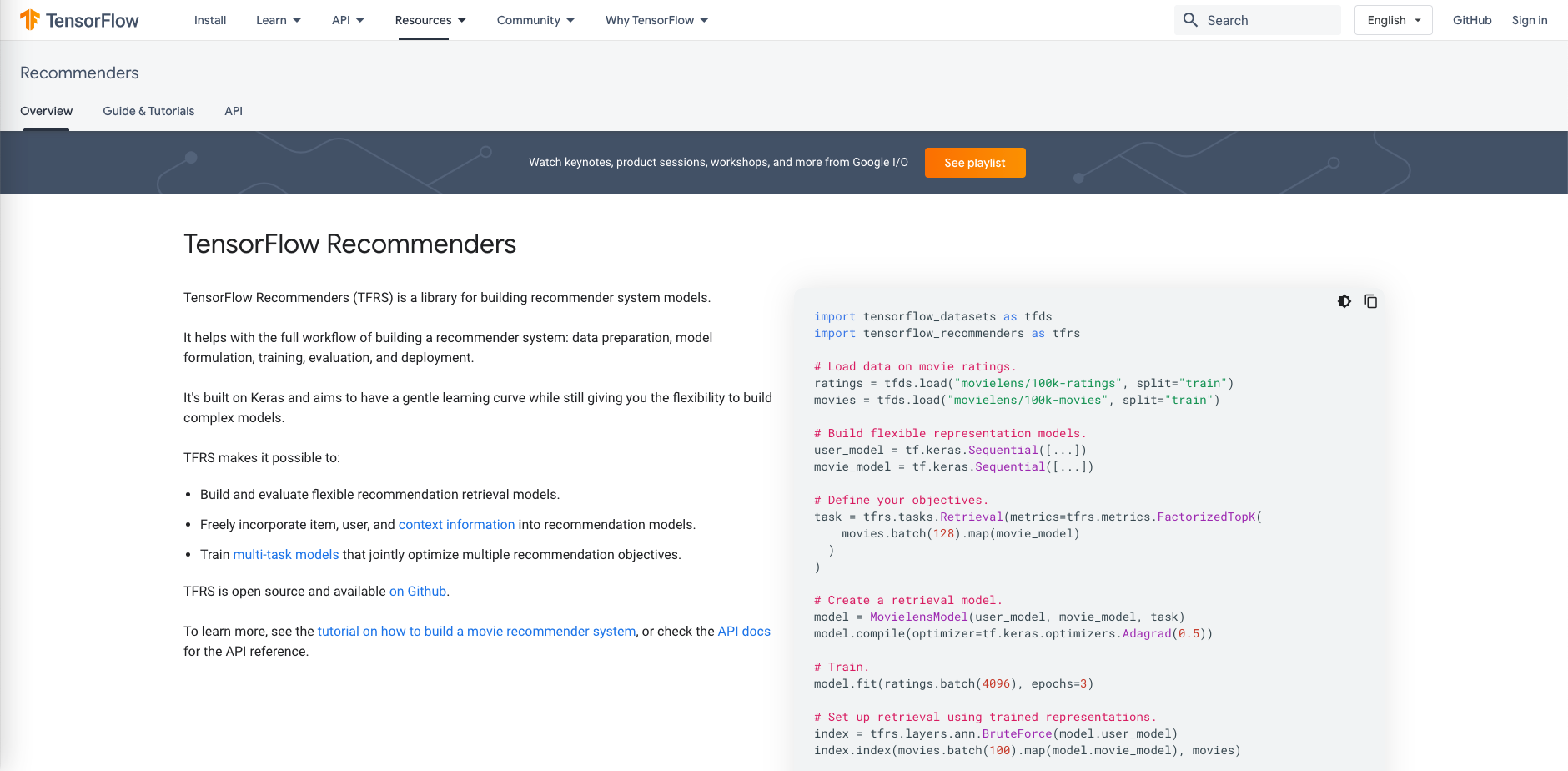

We don't entirely define our own model from low-level components, however, but instead utilize the TensorFlow library TensorFlow Recommenders (TFRS). This implements classes for common components of a recommender system such as embeddings which can then be assembled using the subclassing API.

If you're familiar with the TensorFlow Recommenders tutorials, you may recognize some of the examples in this series. However, we've emphasized building a working end-to-end example with data preparation and deployment rather than adding features to the recommenders themselves.

Model components

We will be building the ranking component of the model in which we will predict the user's rating on new movies and then output recommendations.

To enable the model to make predictions, we need to take the input movie_title and user_id columns and train the model on the user_rating column so that it can predict the rating for new potential user+movie combinations.
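As a rough illustration of how predicted ratings turn into recommendations, the sketch below scores a handful of candidate movies for one user and keeps the highest-rated ones. The `recommend` helper, the `fake_predict_rating` function, and the scores are all hypothetical stand-ins for the trained model; none of them come from TFRS or the project notebook.

```python
from typing import Callable, List, Tuple


def recommend(
    user_id: str,
    candidate_titles: List[str],
    predict_rating: Callable[[str, str], float],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Score every candidate for one user and return the k highest-rated titles."""
    scored = [(title, predict_rating(user_id, title)) for title in candidate_titles]
    # Sort by predicted rating, highest first; ties keep their input order.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical stand-in for the trained model: a fixed lookup of made-up scores.
_fake_scores = {
    ("138", "Top Gun (1986)"): 3.2,
    ("138", "Crimson Tide (1995)"): 4.1,
    ("138", "Bob Roberts (1992)"): 2.7,
}


def fake_predict_rating(user_id: str, movie_title: str) -> float:
    return _fake_scores[(user_id, movie_title)]


candidates = [title for _, title in _fake_scores]
top_two = recommend("138", candidates, fake_predict_rating, k=2)
print(top_two)
```

In the real system the scoring function would be the trained ranking model, called on each user and movie pair that the user has not yet rated.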

To go from a large list of titles and users to a lower-dimensional representation that the model can use, we convert those features into embeddings.

Embeddings are computed by mapping the raw categories onto integers (known as a vocabulary), and then converting those into a continuous vector space with a much lower dimension, such as 32.

These are then passed to the deep learning layers which predict the user ratings and output the recommendations.
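The two steps, vocabulary lookup followed by embedding, can be sketched in plain Python. This is only an illustration of the idea, not how Keras implements it: the helper names are hypothetical, the three titles and user IDs are a tiny sample, and the vectors are random here whereas in the real model they are learned during training. Index 0 is reserved for values that are not in the vocabulary, which is why the table has one more row than the vocabulary has entries.

```python
import random
from typing import Dict, List

EMBEDDING_DIMENSION = 32


def build_vocabulary(values: List[str]) -> Dict[str, int]:
    """Map each distinct raw string to an integer, reserving 0 for unseen values."""
    return {value: index for index, value in enumerate(sorted(set(values)), start=1)}


def build_embedding_table(
    vocabulary: Dict[str, int], dimension: int, seed: int
) -> List[List[float]]:
    """One vector per vocabulary entry plus one extra row (index 0) for unseen values."""
    rng = random.Random(seed)
    return [
        [rng.uniform(-0.05, 0.05) for _ in range(dimension)]
        for _ in range(len(vocabulary) + 1)
    ]


def embed(value: str, vocabulary: Dict[str, int], table: List[List[float]]) -> List[float]:
    """Look up the integer for a raw string, then return that row of the table."""
    return table[vocabulary.get(value, 0)]


movie_vocabulary = build_vocabulary(
    ["Top Gun (1986)", "Crimson Tide (1995)", "Bob Roberts (1992)"]
)
user_vocabulary = build_vocabulary(["457", "233", "6"])
movie_table = build_embedding_table(movie_vocabulary, EMBEDDING_DIMENSION, seed=0)
user_table = build_embedding_table(user_vocabulary, EMBEDDING_DIMENSION, seed=1)

# The two 32-value vectors are joined into one 64-value input for the dense layers.
model_input = embed("457", user_vocabulary, user_table) + embed(
    "Top Gun (1986)", movie_vocabulary, movie_table
)
print(movie_vocabulary["Top Gun (1986)"], len(model_input))
```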

We can deploy the model more simply if the embeddings are computed in the model. This is because there is less data manipulation done outside the model between new raw data coming in and the model running predictions on it.

This reduces the scope for errors and is a strength of TensorFlow, TFRS, and other modern ML libraries.

Model implementation

The basic ranking model is defined as a TFRS model class that computes the embeddings. The class also contains the deep learning layers that predict the user rating, the code that computes the loss at each training iteration, and the call method that allows the model to be deployed after it has been trained and saved.

The class is worth showing in full here:

    from typing import Dict, Text

    import tensorflow as tf
    import tensorflow_recommenders as tfrs

    # unique_movie_titles and unique_user_ids are assumed to be defined already
    # (the distinct movie titles and user IDs in the data)

    class MovielensModelBasicRanking(tfrs.models.Model):

        def __init__(self) -> None:
            super().__init__()
            embedding_dimension = 32

            # The embeddings use Keras's preprocessing layers

            # Embeddings for movies
            self.movie_model: tf.keras.layers.Layer = tf.keras.Sequential([
                tf.keras.layers.experimental.preprocessing.StringLookup(
                    vocabulary=unique_movie_titles, mask_token=None),
                tf.keras.layers.Embedding(len(unique_movie_titles) + 1, embedding_dimension)
            ])

            # Embeddings for users
            self.user_model: tf.keras.layers.Layer = tf.keras.Sequential([
                tf.keras.layers.experimental.preprocessing.StringLookup(
                    vocabulary=unique_user_ids, mask_token=None),
                tf.keras.layers.Embedding(len(unique_user_ids) + 1, embedding_dimension)
            ])

            # Predicted ratings
            # This is where deep learning is being used in the recommender system
            # The predictions are output by the final layer, hence its size of 1
            self.rating_model = tf.keras.Sequential([
                tf.keras.layers.Dense(256, activation='relu'),
                tf.keras.layers.Dense(64, activation='relu'),
                tf.keras.layers.Dense(1)
            ])

            # Ranking is written as a TFRS task
            self.task: tf.keras.layers.Layer = tfrs.tasks.Ranking(
                loss=tf.keras.losses.MeanSquaredError(),
                metrics=[tf.keras.metrics.RootMeanSquaredError()]
            )

        # The call method allows the model to be run, and saved
        # The embeddings are passed into the model
        # The embeddings and predicted rating are returned
        def call(self, features: Dict[Text, tf.Tensor]) -> tf.Tensor:
            user_embeddings = self.user_model(features['user_id'])
            movie_embeddings = self.movie_model(features['movie_title'])

            return (
                user_embeddings,
                movie_embeddings,
                self.rating_model(
                    tf.concat([user_embeddings, movie_embeddings], axis=1)
                ),
            )

        # This is the TFRS built-in method that computes the model loss function during training
        def compute_loss(self, features: Dict[Text, tf.Tensor], training=False) -> tf.Tensor:
            # Remove the target column so that only the input features are passed to the model
            ratings = features.pop('user_rating')
            user_embeddings, movie_embeddings, rating_predictions = self(features)

            rating_loss = self.task(
                labels=ratings,
                predictions=rating_predictions,
            )

            return rating_loss

Passing data to the model, following the TFRS tutorials, takes a few more TensorFlow incantations.

These put the training, validation, and testing datasets into cached form to help performance, and send the data in batches rather than all at once or one row at a time:

cached_train = train.shuffle(ntimes_tr).batch(8192).cache()

...
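To make the shuffle, batch, and cache steps concrete, here is a plain-Python sketch of the same idea. It is an illustration only and not how `tf.data` implements these operations; the `shuffled_batches` helper and the toy sizes (20 rows, batches of 8) are hypothetical.

```python
import random
from typing import Iterator, List, Sequence, TypeVar

Row = TypeVar("Row")


def shuffled_batches(
    rows: Sequence[Row], batch_size: int, seed: int = 0
) -> Iterator[List[Row]]:
    """Shuffle the rows once, then yield them in consecutive groups of batch_size."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    for start in range(0, len(shuffled), batch_size):
        # The final batch may be smaller when the row count is not a multiple.
        yield shuffled[start:start + batch_size]


# Materialising the batches once plays the role of caching: later passes reuse them.
cached_batches = list(shuffled_batches(range(20), batch_size=8))
print([len(batch) for batch in cached_batches])
```

Each batch is what the model sees in one training step, so a larger batch size means fewer, larger steps per epoch.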

The form of the data being passed to the model (which should always be sanity-checked when writing an analysis) is, for 2 batches:

    {'movie_title': array([b'Spanking the Monkey (1994)', b'Bob Roberts (1992)',
           b'Like Water For Chocolate (Como agua para chocolate) (1992)', ...,
           b"Stephen King's The Langoliers (1995)",
           b'Alice in Wonderland (1951)', b'Phantom, The (1996)'],
          dtype=object),
     'timestamp': array([879618019, 883602865, 884209535, ..., 885548475, 879518233,
           877751143]),
     'user_id': array([b'535', b'6', b'198', ..., b'405', b'295', b'207'], dtype=object),
     'user_rating': array([3., 3., 3., ..., 1., 4., 2.], dtype=float32)}

    {'movie_title': array([b'Top Gun (1986)', b'Grace of My Heart (1996)',
           b'Ghost and the Darkness, The (1996)', ..., b'Crimson Tide (1995)',
           b'To Kill a Mockingbird (1962)', b'Harold and Maude (1971)'],
          dtype=object),
     'timestamp': array([882397829, 881959103, 884748708, ..., 880610814, 877554174,
           880844350]),
     'user_id': array([b'457', b'498', b'320', ..., b'233', b'453', b'916'], dtype=object),
     'user_rating': array([4., 3., 4., ..., 3., 3., 4.], dtype=float32)}

The ellipses stand for the entries in each array that are not shown.

By comparing to a line from the original raw input data we can see that the correct columns of movie_title and user_id have been selected:

    {'bucketized_user_age': 45.0,
     'movie_genres': array([7]),
     'movie_id': b'357',
     'movie_title': b"One Flew Over the Cuckoo's Nest (1975)",
     'raw_user_age': 46.0,
     'timestamp': 879024327,
     'user_gender': True,
     'user_id': b'138',
     'user_occupation_label': 4,
     'user_occupation_text': b'doctor',
     'user_rating': 4.0,
     'user_zip_code': b'53211'}

The IDs are categories and not numerical. The target ratings are numerical, and the data in each batch are in an array.

Training and results

Model training follows the standard TensorFlow method of compiling the model and running fit:

model_br = MovielensModelBasicRanking()

model_br.compile(optimizer=tf.keras.optimizers.Adagrad(learning_rate=0.1))

history_br = model_br.fit(cached_train, epochs=3, validation_data=cached_validation)

Here we have used the Adagrad optimizer. We have specified a learning rate of 0.1 and only a small number of epochs.

In Part 4 we will tune these parameters to improve the performance and show how the model can be trained using Gradient Workflows in addition to using a Notebook.

The metric used to measure the performance of the model is the root-mean-squared error (RMSE) between the predicted user ratings and their true ratings.
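For reference, RMSE is the square root of the average squared difference between predicted and true ratings. A minimal plain-Python sketch follows; the four ratings and predictions are made-up values for illustration, not output from the model.

```python
import math
from typing import Sequence


def root_mean_squared_error(
    true_ratings: Sequence[float], predicted_ratings: Sequence[float]
) -> float:
    """Square root of the mean squared difference between predictions and truths."""
    if not true_ratings or len(true_ratings) != len(predicted_ratings):
        raise ValueError("Both sequences must be non-empty and of equal length")
    squared_errors = [
        (predicted - true) ** 2
        for true, predicted in zip(true_ratings, predicted_ratings)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Made-up example values: the errors are 0.5, -1.0, 0.0 and 1.5.
example_true = [3.0, 3.0, 4.0, 1.0]
example_predicted = [3.5, 2.0, 4.0, 2.5]
example_rmse = root_mean_squared_error(example_true, example_predicted)
print(f"{example_rmse:.3f}")
```

Because the errors are squared before averaging, one prediction that is badly wrong raises the RMSE more than several that are slightly off.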

The performance of the model can be seen from the history:

rmse_br = history_br.history['root_mean_squared_error'][-1]

print(f'Root mean squared error in user rating from training: {rmse_br:.2f}')

This gives:

Root mean squared error in user rating from training: 1.11

In other words, the RMSE in predicting someone's movie rating, on the 1-5 scale used by the MovieLens ratings, is 1.11. We can similarly output the RMSE from the validation set, which should be similar if the model has not overfit the data.

We can view a summary of the model as follows where we see the deep learning layers and the ranking layer:

model_br.summary()

    Model: "movielens_model_basic_ranking"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    sequential (Sequential)      (None, 32)                53280
    _________________________________________________________________
    sequential_1 (Sequential)    (None, 32)                30208
    _________________________________________________________________
    sequential_2 (Sequential)    (None, 1)                 33153
    _________________________________________________________________
    ranking (Ranking)            multiple                  2
    =================================================================
    Total params: 116,643
    Trainable params: 116,641
    Non-trainable params: 2
    _________________________________________________________________
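The parameter counts in the summary can be checked by hand. In the sketch below, the vocabulary sizes of 1664 movie titles and 943 users are not stated in this post; they are inferred by dividing the two embedding counts by the embedding dimension of 32 and subtracting the one extra row. The helper names are hypothetical.

```python
EMBEDDING_DIMENSION = 32


def embedding_params(vocabulary_size: int, dimension: int) -> int:
    """One vector per vocabulary entry plus one extra row for unseen values."""
    return (vocabulary_size + 1) * dimension


def dense_params(inputs: int, units: int) -> int:
    """A dense layer has one weight per input-unit pair plus one bias per unit."""
    return inputs * units + units


# Vocabulary sizes inferred from the summary: 53280 / 32 - 1 and 30208 / 32 - 1.
movie_params = embedding_params(1664, EMBEDDING_DIMENSION)
user_params = embedding_params(943, EMBEDDING_DIMENSION)

# The rating model takes the two concatenated embeddings (2 * 32 = 64 inputs).
rating_params = dense_params(64, 256) + dense_params(256, 64) + dense_params(64, 1)

trainable_params = movie_params + user_params + rating_params
print(movie_params, user_params, rating_params, trainable_params)
```

These account for all of the trainable parameters; the remaining 2 non-trainable parameters are the ones listed against the ranking layer.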

Finally, we can evaluate the model on unseen test data, as one always should to quote a realistic expected model performance in production:

eval_br = model_br.evaluate(cached_test, return_dict=True)

...

Root mean squared error in user rating from evaluation: 1.12

The performance is similar to training, so our model appears to be working correctly.

Next

In Part 4 of the series, Tuning the model for best performance, we will improve the above result by tuning some of the model parameters, and show how the training can also be done in Gradient Workflows to take full advantage of automation and reproducibility.