Large language models (LLMs) have increased in prominence in the past year, from the most well-known example of ChatGPT, to many others such as LLaMA and GPT-4.

While the base models can be powerful for generic use, to unleash the full power of a model for a particular domain, it needs to be fine-tuned on data from that domain. This has the potential to bring many benefits in fields such as healthcare, computer coding, and so on.

Most of the largest models are proprietary, meaning others can use them but they are not available to be fine-tuned. Other models are open source, making them available to be used by all. One of the most recent and best performing of the open source models is MPT-7B from MosaicML. A crucial attribute that this model has versus some of the others is that it can be used commercially, unlike its closest competitor, LLaMA.

A second benefit of MPT-7B is that it has been presented in an end-to-end fashion, with working examples using their open source LLM Foundry library showing how to go all the way from your raw input data (which may be remote) to a deployed fine-tuned model that returns useful responses to inputs. The user can then develop their own end-to-end fine-tuning by analogy to the working examples. This lowers the barrier to producing useful working products.

In this blogpost, we demonstrate how to fine-tune the 7-billion parameter MPT-7B on Paperspace, using a multi-GPU setup with A100-80G GPUs that is available today for any paid user. The result reproduces the fine-tuned model MPT-7B-Instruct that is available publicly.

In a future post, we will compile our own fine-tuning dataset to produce a new fine-tuned model.

For a general introduction to LLMs, the state of the field, and the current landscape of models, see Navigating the Large Language Model revolution with Paperspace.

End-to-end model fine-tuning

While the focus of this blogpost is MPT-7B, the model is serving as an example of the more general process of end-to-end LLM fine-tuning.

End-to-end fine-tuning requires several major components to produce a result that is of value:

- Compilation of a fine-tuning dataset

- Selection of (likely large) pre-trained model

- Transfer of data and model from (possibly remote) disk to GPUs

- Model fine-tuning run on (possibly multiple) GPUs

- Evaluation of fine-tuned model on unseen data

- Model inference to generate useful output

The resulting model may then be deployed into production on an endpoint, possibly as part of an application.

Each of these steps potentially comes with many issues that result both from LLMs being new, and MLOps being a field still undergoing maturation.

The end-to-end process enabled by the combination of MosaicML's approach and Paperspace's setup addresses many of these, and renders it tractable for many more people:

- Fine-tuning dataset is shown in raw form: while the user still has to compile their own data, for example in the

prompt: ... response: ...format, this is explicitly shown in that form, and then formatted as required for the model, meaning the user can do the same for their data. - Pre-trained foundation model is available: This is MPT-7B

- Data transfer via streaming: The MosaicML Streaming Dataset format (also open source) addresses common issues when training or fine-tuning large models. These include: (1) streaming so that data points are only transferred once even if multiple GPUs or nodes are being used; (2) handling of sharding the data so that it can be distributed correctly, e.g., avoiding accidentally duplicating training data; (3) guaranteed reproducibility via seeds and well defined data ordering, and (4) handling hardware failures without the data that the model sees being unintentionally altered. This last issue happens more when there are 100s of GPUs in pre-training, rather than fine-tuning, but it's still crucial that your data is being used correctly.

- Fine-tuning on multi-GPU: The code for fine-tuning automatically detects the GPU configuration of the system and distributes the data and model appropriately over the available GPUs.

- Model evaluation: There is an option to hold out data for validation as part of the fine-tuning run, or to run a separate evaluation task, e.g., one of the many in-context learning (ICL) tasks, to evaluate your new model.

- Generate output: Finally, you can send new prompts to the tuned model and have it return responses to verify empirically that they are useful to the user.

With all the above available open source, Paperspace closes the loop by making the necessary compute available to all in the form of A100-80G multi-GPU machines.

MPT-7B

MPT-7B is MosaicML's 7-billion parameter large language model trained on 1 trillion tokens from a variety of text datasets. It represents an example of the current state-of-the-art in open source large language models, and has a license that allows it to be used commercially as well as for research.

It was trained in 9.5 days on 440 A100-40G GPUs, at a cost of $200k. A number of innovations allowed the run to auto-recover from occasional hardware issues encountered at that scale, resulting in an empty pre-training logbook from the run.

The model itself includes various recent advances, such as Attention with Linear Biases (ALiBi), which penalizes attention scores according to their distance as opposed to using positional embeddings; FlashAttention, that reduces the number of GPU read-writes; Nvidia's FasterTransformer, and the Streaming Dataset format mentioned above.

The library used to train the model, LLM Foundry, in turn uses Hugging Face's Transformers library, and the resulting model is presented there as a model card.

Some models were fine-tuned from the base MPT-7B, and these are also presented:

- MPT-7B-Instruct

- MPT-7B-Chat

- MPT-StoryWriter-65k+

MPT-7B-Instruct is a decoder-only transformer that is good for following short-form instructions. This is natural in many situations where the user asks a question and expects an answer, as opposed to a continuation of their question text.

MPT-7B-Chat is, like ChatGPT, able to carry on a conversation with the user by retaining memory of what was previously said.

MPT-StoryWriter-65k+ is another innovation that allows much longer inputs and outputs than most LLMs, over 65k tokens. This allows it to do qualitatively new things, for example, write an epilog to a story after being supplied with the story. This was enabled by the ALiBi attention mechanism.

Of these three fine-tuned models, the datasets and settings for Chat and StoryWriter were not released, but the ones for Instruct were. So it is these that we follow below.

Fine-tuning on Paperspace

For fine-tuning of LLMs on Paperspace, we start with MosaicML's pre-trained 7-billion parameter MPT-7B and the fine-tuning dataset dolly_hhrlhf. We perform the end-to-end process, going from raw data through to a fine-tuned model that produces useful responses to prompts supplied by the user.

Setup

Bring this project to life

Paperspace allows immediate startup of a multi-GPU system, which can then run the MosaicML Docker container and the end-to-end process. Alternatively, click the link above

We are running on an A100-80Gx4 machine. After starting it up and connecting via SSH on a dynamic IP (the default connection method), on its terminal we set up the container:

sudo usermod -aG docker $USER

newgrp docker

docker pull mosaicml/pytorch:1.13.1_cu117-python3.10-ubuntu20.04

docker images

docker run -it --gpus all --ipc=host <image ID>The first 2 commands remove the need for prefixing docker commands with sudo, and the last one starts the container with access to the machine's memory and GPUs. docker images lets you see the ID of the image that you will be running as the container.

The recommended image to use may be a newer one since the time of writing, so check out the LLM Foundry GitHub repository readme if you want to use the latest, e.g., PyTorch 2.

Once in the container, we can follow the remaining MosaicML setup instructions that are needed:

git clone https://github.com/mosaicml/llm-foundry.git

cd llm-foundry/scripts

pip3.10 install --upgrade pip

pip install -e ".[gpu]"Although they recommend running inside a virtual environment venv, we don't run that here.

Optionally, you can also add other items, e.g., apt update; apt install -y vim; mkdir logs to be able to view files and store stdout+stderr logs from runs, which can be useful for debugging.

If you exit the container it will automatically stop but not be removed, and you can also exit the machine. Don't forget to shut it down if you will not be using it!

If you return to the machine to continue using the container, do docker ps -a to see the container ID, docker start -ai <container ID>, and in the container cd llm-foundry/scripts to pick up where you left off.

We're now ready to start working with the data, followed by model fine-tuning.

Dataset

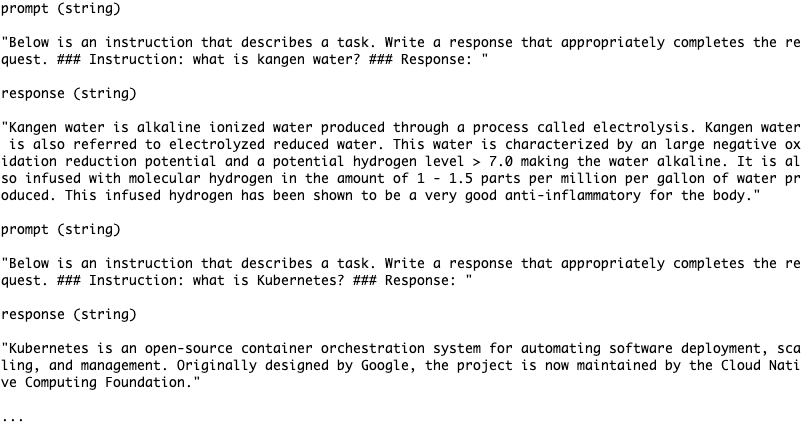

In this particular example, the data isn't quite raw, but its content is in strings that can be very easily created. The first couple of prompts and responses are:

Crucially, this means that as a user you can compile your own fine-tuning data to contain content like the above, and follow exactly the same process as here to fine-tune MPT-7B yourself with your data. The work is only where it needs to be — in collecting or generating the prompts and responses needed for your use case.

Furthermore, if the data are not in the exact prompt: ... response: ... format, for example, they are in the form of multiple choice questions instead, a conversion function can be referenced. If you want to fine-tune models other that MPT-7B, you can drop down into the library's usage of the Hugging Face Transformer library, and point to other models.

The dataset dolly_hhrlhf was compiled by MosaicML using "a combination of Databrick's dolly-15k dataset and a filtered subset of Anthropic's HH-RLHF". This gave data which has a strong element of human responses to questions, and a lower likelihood of giving harmful or inappropriate responses than the base model, which is not meant for direct user-facing use.

The end-to-end process proceeds by analogy to their provided quickstart example in the GitHub repository. This runs a series of scripts that provide all the steps we need:

data_prep/convert_dataset_hf.pytrain/train.pyinference/convert_composer_to_hf.pyeval/eval.pyinference/hf_generate.py

which in turn take various arguments, including YAML files for model and other settings.

The scripts are a convenience that reduce the amount of code needed to be written by the user, but it is precisely this convenience that makes end-to-end fine-tuning the full-size MPT-7B tractable with a reasonable amount of invested user time and effort.

Load data

Loading the data corresponds to running convert_dataset_hf.py and the start of train.py. convert_dataset_hf.py allows you to take your raw input data, such as the above, and convert it to the MosaicML Streaming Data format.

Arguments to this script include being able to specify that the data is remote, e.g., an Amazon S3 bucket, and for the converted data to also be stored remotely. This lets you, for example, work with your data without having to migrate it all to Paperspace first.

For our particular example, the conversion doesn't actually need to be run, because the data ready for training, but in the general case the invocation will resemble the one in the quickstart:

python data_prep/convert_dataset_hf.py \

--dataset c4 --data_subset en \

--out_root my-copy-c4 --splits train_small val_small \

--concat_tokens 2048 --tokenizer EleutherAI/gpt-neox-20b \

--eos_text '<|endoftext|>'To specify outputting the data to a remote location, you would change --out_root to s3://<my bucket>/<my directory>, pointing to a bucket that you have previously created.

export AWS_ACCESS_KEY_ID="<my access key ID>" and export AWS_SECRET_ACCESS_KEY="<my secret access key>" so that Amazon Web Services can see your credentials. These are standard attributes that you would create when working with S3 buckets.Fine-tuning run

Bring this project to life

Now we are ready to do our fine-tuning run.

The command ends up being quite simple:

composer train/train.py \

train/yamls/finetune/mpt-7b_dolly_sft.yaml \

save_folder=mpt-7b_dolly_sftwhere composer is part of the install from when we did pip install -e ".[gpu]" in the setup.

The simplicity of the command is a result of most of the settings being captured in the mpt-7b_dolly_sft.yaml YAML file that the command is pointing to. So let's see that too. It's worth showing in full to emphasize the complexity that is being made tractable in this end-to-end process.

This is the example from the repository:

max_seq_len: 2048

global_seed: 17

# Run Name

run_name: # If left blank, will be read from env var $RUN_NAME

model:

name: hf_causal_lm

pretrained: true

pretrained_model_name_or_path: mosaicml/mpt-7b

config_overrides:

attn_config:

attn_impl: triton

# Set this to `true` if using `train_loader.dataset.packing_ratio` below

attn_uses_sequence_id: false

# Tokenizer

tokenizer:

name: mosaicml/mpt-7b

kwargs:

model_max_length: ${max_seq_len}

# Dataloaders

train_loader:

name: finetuning

dataset:

hf_name: mosaicml/dolly_hhrlhf

split: train

max_seq_len: ${max_seq_len}

allow_pad_trimming: false

decoder_only_format: true

# # Use `python llmfoundry/data/packing.py --yaml-path /path/to/this/yaml/ ...`

# # to profile this run's optimal packing_ratio as it depends on GPU count,

# # batch size, sequence length

# packing_ratio:

shuffle: true

drop_last: true

num_workers: 8

pin_memory: false

prefetch_factor: 2

persistent_workers: true

timeout: 0

eval_loader:

name: finetuning

dataset:

hf_name: mosaicml/dolly_hhrlhf

split: test

max_seq_len: ${max_seq_len}

allow_pad_trimming: false

decoder_only_format: true

# packing_ratio:

shuffle: true

drop_last: true

num_workers: 8

pin_memory: false

prefetch_factor: 2

persistent_workers: true

timeout: 0

# Optimization

scheduler:

name: linear_decay_with_warmup # linear no warmup is HF default which dolly used

t_warmup: 50ba # add some warmup though, seems to help with MPT

alpha_f: 0

optimizer:

# Based on Dolly

name: decoupled_adamw

lr: 5.0e-6

betas:

- 0.9

- 0.999

eps: 1.0e-8

weight_decay: 0

algorithms:

gradient_clipping:

clipping_type: norm

clipping_threshold: 1.0

max_duration: 2ep # 2-3 epochs seems like the sweet spot

eval_interval: 1ep

# eval_subset_num_batches: -1

eval_first: true

global_train_batch_size: 48 # somewhere in the 6-8 * numgpus range seems good

# System

seed: ${global_seed}

device_eval_batch_size: 8

device_train_microbatch_size: 8

# device_train_microbatch_size: auto

precision: amp_bf16

# FSDP

fsdp_config:

sharding_strategy: FULL_SHARD

mixed_precision: PURE

activation_checkpointing: true

activation_checkpointing_reentrant: false

activation_cpu_offload: false

limit_all_gathers: true

verbose: false

# Logging

progress_bar: false

log_to_console: true

console_log_interval: 1ba

callbacks:

speed_monitor:

window_size: 10

lr_monitor: {}

memory_monitor: {}

runtime_estimator: {}

# loggers:

# wandb: {}

# Checkpoint to local filesystem or remote object store

# save_interval: 5000ba

# save_num_checkpoints_to_keep: 1 # Important, this cleans up checkpoints saved to DISK

# save_folder: ./{run_name}/checkpoints

# save_folder: s3://my-bucket/my-folder/{run_name}/checkpointsWe won't go through every line, but some notable settings are:

global_seed— In combination with Streaming Dataset and its handling of sharding, ordering, etc., this makes everything reproduciblepretrained_model_name_or_path— The pre-trained model that is being fine-tuned, in this case MPT-7B, as a Hugging Face modeltokenizer: name: mosaicml/mpt-7b— Language models need a tokenizer to correctly convert the input strings to numbers, i.e., tokens, so we specify which to usetrain_loader: name: finetuning— We are running fine-tuning as opposed to pre-trainingdataset: hf_name: mosaicml/dolly_hhrlhf— Dataset we are fine-tuning on, as a Hugging Face dataseteval_loader— This shows that we are including evaluation on a validation set as part of the fine-tuning run.optimizer— Which optimizer we are using, in this case an evolution of the well-known Adam optimizer. Optimizers specify how the neural network weights are updated to minimize the loss function. The other hyperparameters in this section could be adjusted but they are often run for only one or a few values with LLMs.max_duration: 2ep— Tune for reasonable number of epochs for best performance. A low number generally suffices for LLM fine-tuning.fsdp_config— Option within the Streaming Data format that allows the data to also be compressed, reducing disk usage.loggers— Not used here, but the tuning run can be logged externally, for example to Weights & Biasessave_interval— Whether and how often to save model checkpoints, which act as backup snapshots during long runs. The MPT-7B base model is about 13G but the checkpoints are much larger at about 75G because they contain the model optimizer states.

In our run, this is the YAML that we used as-is, with the exception of uncommenting save_interval: 5000ba (save a checkpoint every 5000 batches). By viewing the library source code it could be seen that if this is not set, it defaults to 1000ba, therefore in our 2472 batch run it would save a 75G checkpoint 3 times and consume 250G of disk. Since our run was 6 hours and we only needed the final model, this was not necessary.

This modification is typical of the current state of running these LLMs. It is much more tractable at scale than it was, but we still did end up going back to the source code, and understanding the YAML settings line-by-line, not just running it blindly. This is one reason why making end-to-end as easy to use as possible without hiding needed control is so important.

On our A100-80Gx4 machine, the full fine-tuning run took about 6 hours. It would also be expected to work on A100-80Gx2, but would take twice as long, and it may work on a single A100-80G with some settings tweaks, e.g., reducing device_train_microbatch_size, but take a long time there as well.

Model evaluation

The fine-tuning run above provides evaluations on a validation set as part of it, so we didn't run the evaluation script separately as it would require new unseen instances consistent with dolly_hhrlhf.

What we did do was generate output to prompts and qualitatively compare them to the responses of the base MPT-7B to the same prompts - see below. While this doesn't provide a quantitative evaluation, it does provide the vital sanity checks of whether the outputs are useful, and whether the ones from the fine-tuned model are obviously better than the base model.

There are many cases, however, where you would want to run a separate evaluation, for example, on one of the widely-used ICL tasks, because the output is quantitative.

The eval.py script and example YAMLs enable this, for example the quickstart has:

python eval/eval.py \

eval/yamls/hf_eval.yaml \

icl_tasks=eval/yamls/winograd.yaml \

model_name_or_path=mpt-125m-hfwhich runs the ICL task Winograd. That task checks if a model can resolve the referent to a pronoun in a sentence. YAMLs for others can also be run, such as LAMBADA for next word prediction, and PIQA for question answering.

Conversion to inference format

As we saw above, the model that comes out of the fine-tuning run is 75G in size. For inference, i.e., generating text output for the user, this can be greatly reduced because we only need the portions of the model that map input to output, and not information that encodes its training state.

The convert_composer_to_hf.py script reduces the model size in this way, and also outputs it to be compatible with the Hugging Face model format, making it more shareable to others. ONNX format is another option.

We run:

python inference/convert_composer_to_hf.py \

--composer_path mpt-7b_dolly_sft/ep2-ba2472-rank0.pt \

--hf_output_path mpt-7b_dolly_sft_hf \

--output_precision bf16which returns the inference model of about 13G in size.

This is still quite large, but, while the fine-tuning run used the multi-GPU A100-80Gx4, the text generation can be run on a single GPU, and we used Paperspace's A100 (40G).

Generate output

The proof of the value of a large language model whether it produce useful outputs for the user.

This again is why having end-to-end is so important, because we are seeing both the start, where you have the data that you want to fine-tune on, through to the final output where you know your model is providing the value that you want it to.

The final script in the sequence, hf_generate.py, allows passing a prompt to the fine-tuned model and having it return a response. As above, we go by analogy to the quickstart, giving:

python inference/hf_generate.py \

--name_or_path mpt-7b_dolly_sft_hf \

--prompts "<your prompt here>"As with the fine-tuning run, the command ends up being simple, but the log output shows settings that are changeable as additional arguments if desired:

{'max_new_tokens': 100, 'temperature': 1.0, 'top_p': 1.0, 'top_k': 50, 'repetition_penalty': 1.0, 'no_repeat_ngram_size': 0, 'use_cache': True, 'do_sample': True, 'eos_token_id': 0, 'pad_token_id': 0}

We do in fact change one of these, lowering the "temperature" to 0.1, adding --temperature 0.1 to the command, because on the quite-high default 1, the responses here tend to be somewhat chatty and random, whereas on 0.1 they are more focused.

To compare to the base MPT-7B, we also download that model, and run the analogous command pointing to it.

This gives us a way to see directly and in a qualitative manner whether the fine-tuned model has improved upon the answers that the base gives. In general, the conclusion is that yes, it does.

Some examples follow. In them, the text cuts off, because of max_new tokens 100, and the ellipsis is added.

Answer vs. better answer

Prompt: Here's a quick recipe for baking chocolate chip cookies:

MPT-7B base: Start by mixing the butter, sugar, and brown sugar together. Then add the egg and vanilla. Mix in the flour, baking soda, and salt. Stir in the chocolate chips. Bake at 350 degrees for 10-12 minutes.

Here's a quick recipe for baking chocolate chip cookies: Start by mixing the butter, sugar, and brown sugar together. Then add the egg and vanilla. Mix in the flour, baking soda, and salt. Stir in the chocolate chips. Bake at 350 degrees for 10 ...

MPT-7B-fine-tuned: Start by mixing together 1 cup of sugar, 1/2 cup of butter, and 1 egg. Add 1/2 cup of chocolate chips and 1/2 cup of white chocolate chips. Then add 1/2 cup of flour, 1/4 teaspoon of salt, and 1/4 teaspoon of baking soda. Mix everything together until it's well combined. Drop by tablespoonfuls onto a baking sheet. Bake at 350 degrees for 10-12 minutes.

I don't know about you, but I ...

Incoherent vs. answer

Prompt: Van Halen famously banned what color M&Ms in their rider?

MPT-7B base: What color M&Ms were banned from Van Halen's rider?

Van Halen famously banned what color M&Ms in their rider?

Van Halen famously banned what color M&Ms in their rider? – Additional Details

Van Halen famously banned what color M&Ms in their rider? – Related Questions

What color M&Ms are banned from Van Halen's rider?

Van Halen famously banned what color M&Ms in their rider? – Related Search...

MPT-7B-fine-tuned: Van Halen famously banned what color M&Ms in their rider? Brown.

Answer versus another answer

MPT-7B base: The answer to life, the universe, and happiness is a good cup of coffee.

I’ve been a coffee lover since I was a kid. I remember my mom would make me a cup of coffee every morning before school. I would drink it while watching cartoons. I would drink it while reading. I would drink it while doing homework. I would drink it while watching TV. I would drink it while playing video games. I would drink it while doing anything.

I’ve always loved coffee. I’ve always been a coffee ...

MPT-7B-fine-tuned: The answer to life, the universe, and happiness is 42.

I’ve been thinking about this quote for a while. I think it’s a great quote. I’ve been thinking about it a lot lately.

I think it’s a great quote because it’s so simple. It’s a great quote because it’s so profound. It’s a great quote because it’s so true.

I think it’s a great quote because it’s so simple. It’s a great quote because ...

The most obvious difference is that the fine-tuned model is more likely to answer the question rather than continue the question text (as expected since we tuned it for this), and when both models answer the question, the fine-tuned one generally gives better answers.

We can also see that, while the fine-tuned model's answers are much improved, they are not perfect (or as good as, for example, GPT-4). So there is room for more to be done in honing the best possible open source question answering model, either via better data, tuning, settings (repetition_penalty?), prompt engineering, or further innovation in model architecture.

Alert readers, and those that remember the introduction, may notice that the process we have followed here results in a model that corresponds to the released MPT-7B-Instruct. However, for the aims of this blogpost, the fine-tuned model's already existing makes no difference.

We are done

So there we have it. Our model now generates useful outputs for the user, and can be put into production as part of an application to answer users' questions. More importantly, you can then do the same thing with your own data to create new fine-tuned models.

On Paperspace, putting the model into production could be done via our deployments functionality, and it could in turn be accessed via a graphical interface such as Gradio. Such a setup gives usability for the user, and takes full advantage of Paperspace's generic accelerated compute plus MLOps and continuous integration and deployment (CI/CD) capabilities.

Conclusions and next steps

We have seen that it is possible to fine-tune the current state-of-the-art open source large language model (LLM) MPT-7B using multiple GPUs on Paperspace.

The implementation is end-to-end, from raw input data through to useful generated outputs from the fine-tuned model. You can then do the same process with your own data to produce new fine-tuned models.

While we focus on the MosaicML implementation here, its primary purpose is to provide a tractable example of the generic idea of end-to-end LLM fine-tuning.

The MosaicML items presented are still being improved upon, with frequent detail updates to the repositories being added. So it is possible there will be small changes if you try this as a reader versus when this was written. But this is mostly a reflection of the still-new and rapidly evolving nature of the LLM field, and AI in general.

For next steps, check out some of the links below, read more about LLMs and Paperspace, try running the process yourself, or get going with fine-tuning on your own datasets!

- MPT-7B: blogpost, model on Hugging Face

- MPT-7B-Instruct: model, fine-tuning dataset

- MosaicML GitHub repositories: LLM Foundry, Streaming Dataset

- Navigating the Large Language Model revolution with Paperspace

- Paperspace get started, GPU machines, and documentation