Updated on Aug 17, 2022


The recent release of StyleGAN3 on Oct. 12 is cause for a lot of excitement in the deep learning community, and for good reason! For years, StyleGAN2 (and its various offshoots like StyleGAN2 with ADA and StarGAN-v2) has dominated the field of image generation with deep learning.

StyleGAN2 has an unparalleled ability to generate high-resolution (1024x1024) images. This capability has been applied in a myriad of projects, like the enormously popular FaceApp or the academic project This X Does Not Exist.

StyleGAN2 is also enormously generalizable, meaning it performs well on any image dataset that meets its fairly simple requirements. Thanks to this combination of high quality and ease of use, StyleGAN2 has established itself as the premier model for tasks that require novel image generation.

This is likely to change now that StyleGAN3 has been released.

What's New with StyleGAN3?

Under the hood

The team at Nvidia Labs had a number of interesting problems to solve before they released StyleGAN3.

In humans, information is processed in a way that enables sub-features of perceived images to transform hierarchically. As the authors at Synced [1] put it:

When a human head turns, sub-features such as the hair and nose will move correspondingly. The structure of most GAN generators is similar. Low-resolution features are modified by upsampling layers, locally mixed by convolutions, and new detail is introduced through nonlinearities

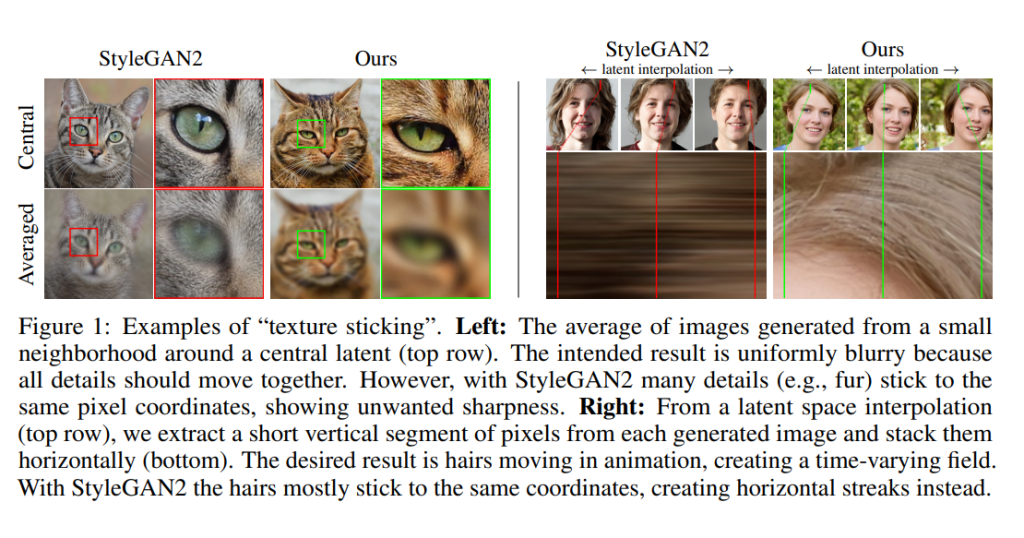

Despite this apparent similarity, typical GAN generators do not actually synthesize images hierarchically. Rather than the coarser features controlling both the presence and the position of the finer features, the positioning of fine detail in StyleGAN2 and other image-generating GAN architectures is dictated by "texture sticking": the details are locked to pixel coordinates inherited from training.
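As a rough way to picture texture sticking, the toy sketch below compares two one-dimensional "layers": one whose output moves when its input moves, and one with a pattern pinned to pixel indices. Everything here (`shift`, `smooth`, `sticky`, and the sample values) is a hypothetical illustration, not code from StyleGAN2 or StyleGAN3.

```python
def shift(signal, k):
    """Circularly shift a 1-D list k positions to the right."""
    k %= len(signal)
    return signal[-k:] + signal[:-k]


def smooth(signal):
    """Circular 3-tap average: depends only on neighbours, not on position."""
    n = len(signal)
    return [(signal[i - 1] + signal[i] + signal[(i + 1) % n]) / 3 for i in range(n)]


def sticky(signal):
    """Same layer plus a pattern pinned to pixel indices (a toy 'stuck' texture)."""
    return [v + 0.5 * (i % 2) for i, v in enumerate(smooth(signal))]


coarse = [0.0, 0.0, 3.0, 6.0, 3.0, 0.0, 0.0, 0.0]

# Does moving the input by one pixel simply move the output by one pixel?
moves_with_content = smooth(shift(coarse, 1)) == shift(smooth(coarse), 1)
stuck_to_pixels = sticky(shift(coarse, 1)) == shift(sticky(coarse), 1)

print("smooth layer follows the content:", moves_with_content)
print("sticky layer follows the content:", stuck_to_pixels)
```

The first layer passes the check because its output depends only on neighbouring values. The second fails because part of its output depends on where a pixel sits, which is the behaviour that shows up as detail glued to the screen in animations.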

Eliminating this effect was a major priority for the development of StyleGAN3. To do so, the authors first identified the signal processing in StyleGAN2, and specifically aliasing, as the source of the problem.

In the generator, unintended positional references leak into the intermediate layers through aliasing. As a result, StyleGAN2 has no mechanism that forces the generator to synthesize images in a strictly hierarchical manner. The network will always amplify the effect of any aliasing, and combining this effect across multiple scales fixes texture motifs to pixel-level coordinates. [2]
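For readers unfamiliar with aliasing, here is a minimal, self-contained sketch of the basic phenomenon in one dimension. The frequencies and sampling rate are arbitrary values chosen for illustration; the point is only that a wave above the Nyquist limit becomes indistinguishable from a slower one once it is sampled.

```python
import math


def sample_sine(freq_hz, rate_hz, n_samples):
    """Sample sin(2*pi*f*t) at t = n / rate for n = 0 .. n_samples - 1."""
    return [math.sin(2 * math.pi * freq_hz * n / rate_hz) for n in range(n_samples)]


RATE_HZ = 10.0  # sampling rate, so the Nyquist limit is 5 Hz

fine_detail = sample_sine(9.0, RATE_HZ, 20)  # above the Nyquist limit
impostor = sample_sine(1.0, RATE_HZ, 20)     # below the Nyquist limit

# Once sampled, the 9 Hz wave matches a sign-flipped 1 Hz wave at every sample.
max_gap = max(abs(a + b) for a, b in zip(fine_detail, impostor))
print(f"largest difference between the two sample sets: {max_gap:.2e}")
```

The samples alone cannot tell the two waves apart, so any later layer treats the fine detail as if it were a coarse feature tied to the sampling grid.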

To solve this, they redesigned the network to be alias-free. They suppressed aliasing by analyzing its effect in the continuous domain and low-pass filtering the results accordingly. In practice, this enforces continuous equivariance to sub-pixel translation and rotation in all layers, meaning that StyleGAN3 generates details equally well regardless of their position, which removes the "texture sticking" effect.
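The idea of low-pass filtering before changing resolution can be sketched in a few lines. The example below uses a simple moving average as the filter, which is an assumption made for brevity and is much cruder than the filters in StyleGAN3; the signal, rates, and function names are likewise illustrative.

```python
import math

FINE_RATE = 100  # samples per second before downsampling
FACTOR = 10      # keep every 10th sample, so the new rate is 10 Hz
FREQ_HZ = 9.0    # above the new Nyquist limit of 5 Hz

fine = [math.sin(2 * math.pi * FREQ_HZ * n / FINE_RATE) for n in range(200)]


def decimate(signal, factor):
    """Keep every factor-th sample."""
    return signal[::factor]


def box_lowpass(signal, width):
    """Causal moving average over up to `width` samples; a crude low-pass filter."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - width + 1): i + 1]
        out.append(sum(window) / len(window))
    return out


naive = decimate(fine, FACTOR)                           # aliasing survives
filtered = decimate(box_lowpass(fine, FACTOR), FACTOR)   # filtered first

naive_peak = max(abs(v) for v in naive)
filtered_peak = max(abs(v) for v in filtered)
print(f"peak without filtering: {naive_peak:.3f}")
print(f"peak with filtering:    {filtered_peak:.3f}")
```

Without the filter, the 9 Hz content survives downsampling at nearly full strength as a false low-frequency wave. With the filter, most of it is removed before it can alias.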

What's improved?

The removal of the aliasing effect on the StyleGAN architecture improved the efficacy of the model substantially, as well as diversified its potential use cases.

Removing aliasing had an obvious impact on the images generated by the model. Aliasing had been directly preventing hierarchical refinement of information during upsampling, and eliminating it also removed "texture sticking."

With the finer details no longer locked to fixed pixel coordinates, StyleGAN3's internal image representations are now constructed with coordinate systems that attach details appropriately to the underlying surfaces. This allows for significant improvements, particularly in models used to generate videos and animations.

Finally, StyleGAN3 does all of this while achieving impressive FID scores. FID, or Fréchet Inception Distance, is a measure of synthetic image quality. A lower score indicates higher-quality images, and StyleGAN3 achieves a low score in combination with low computational expense.
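To make the metric less abstract: FID fits a Gaussian to features of the real images and another to features of the generated images, then measures the Fréchet distance between the two. The real metric uses high-dimensional Inception network features and full covariance matrices. The sketch below is a deliberately simplified one-dimensional version, where the distance reduces to the squared difference of the means plus the squared difference of the standard deviations. The function name and the sample numbers are made up for illustration.

```python
import math
import statistics


def frechet_distance_1d(real_feats, fake_feats):
    """Frechet distance between 1-D Gaussians fitted to two lists of features.

    Simplified stand-in for FID: real FID uses multi-dimensional Inception
    features and a matrix square root of the covariance product.
    """
    mu_r = statistics.fmean(real_feats)
    mu_f = statistics.fmean(fake_feats)
    var_r = statistics.pvariance(real_feats)
    var_f = statistics.pvariance(fake_feats)
    return (mu_r - mu_f) ** 2 + var_r + var_f - 2.0 * math.sqrt(var_r * var_f)


real = [0.0, 1.0, 2.0, 3.0]
same_spread_shifted = [1.0, 2.0, 3.0, 4.0]

print(frechet_distance_1d(real, real))                 # identical statistics
print(frechet_distance_1d(real, same_spread_shifted))  # mean shifted by 1
```

Identical feature statistics give a distance of zero, and the distance grows as the generated features drift away from the real ones, which is why lower is better.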

StyleGAN3 in Gradient Notebooks:

Gradient Notebooks is a great place to try out StyleGAN3 on a free GPU instance. If you don't know Gradient already, it's a platform for building and scaling real-world machine learning applications, and Gradient Notebooks provides a JupyterLab environment with powerful free and paid GPUs.

To get started with StyleGAN3 in Gradient Notebooks, we'll log in or sign up to the Gradient console and head over to Notebooks.

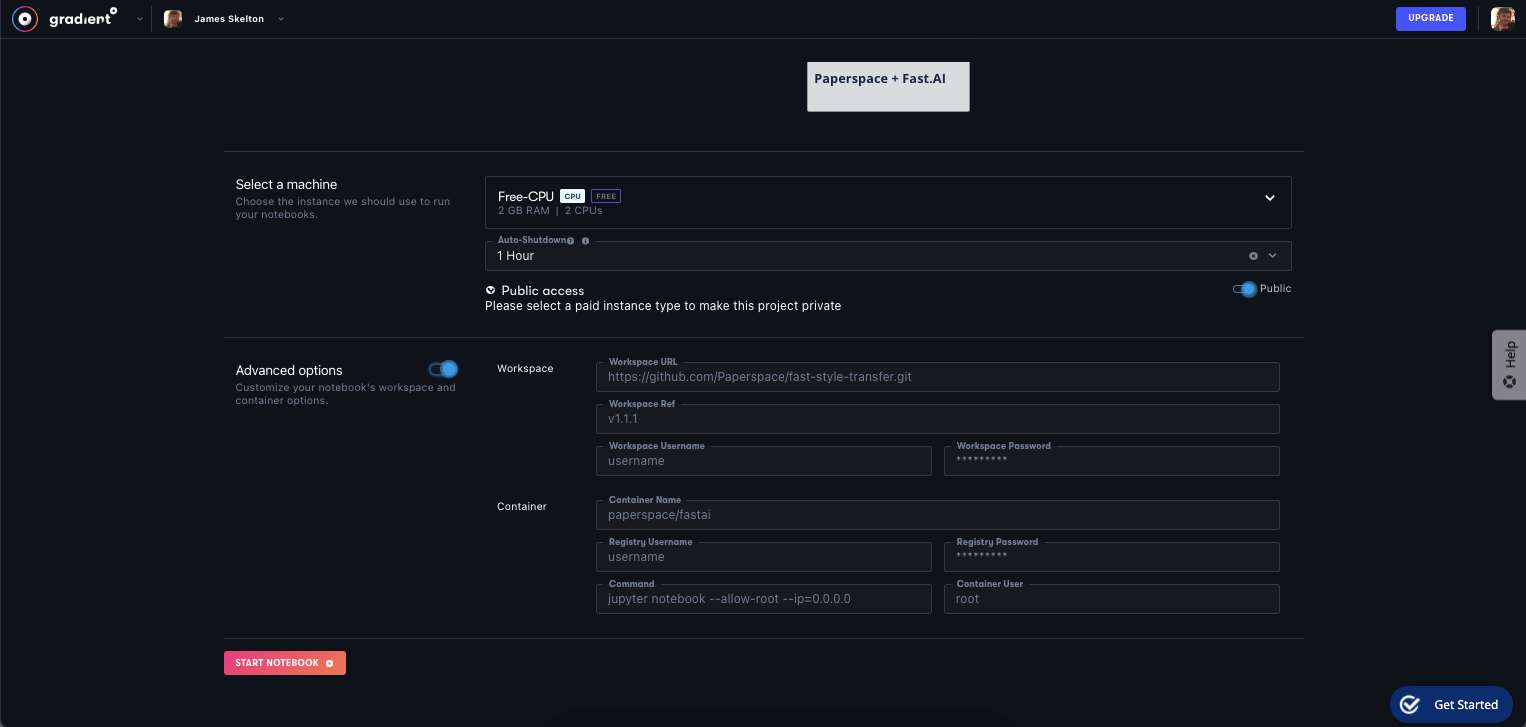

From the Notebooks page, we'll scroll to the last section "Advanced Options" and click the toggle.

For our Workspace, we'll tell Gradient to pull the repository located at this URL:

https://github.com/NVlabs/stylegan3

We will use the default container name that is already provided. Finally, we'll scroll up to Select a Machine and select a GPU instance.

Free GPU instances can handle the training process, although it will be fairly slow. We recommend a more powerful GPU, such as the paid A100 or V100 instance types, if one is available to you.

Next, start your notebook.


Generate images using a pretrained model

Before continuing, make sure you run the following in your terminal to enable StyleGAN3:

pip install ninja

To start, we are going to use a pretrained model to generate our images. The StyleGAN3 repository provides the relevant instructions in its "Getting Started" section.

python gen_images.py --outdir=out --trunc=1 --seeds=2 \
    --network=https://api.ngc.nvidia.com/v2/models/nvidia/research/stylegan3/versions/1/files/stylegan3-r-afhqv2-512x512.pkl

The command above is the first one listed in that section. It uses a model pretrained on the AFHQ-v2 dataset, which comprises 15,000 high-quality images of cats, dogs, and various wildlife, to generate a single image of a cat. Here is an example of what that output may look like:

Train your own model

For this demo, we are going to use the Animal Faces-HQ dataset (AFHQ). We chose this dataset because it's simple to download the file to Gradient, but you can use any image dataset that matches the requirements for StyleGAN3.

After your notebook has finished initializing, open the terminal in the IDE and enter these commands:

git clone https://github.com/clovaai/stargan-v2

cd stargan-v2

bash download.sh afhq-dataset

This will download the dataset to a preset folder in stargan-v2.

Once the download is complete, change directories back to the notebooks directory to run dataset_tool.py. This script packages the images into an uncompressed zip file, which StyleGAN3 can then read for training. Training can still be run on a plain folder of images without the dataset tool, but at the risk of suboptimal performance.

cd ..

python stylegan3/dataset_tool.py --source=stargan-v2/data/afhq/train/cat --dest=data/afhq-256x256.zip --resolution=256x256
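Before starting a long training run, it can be worth checking that the archive looks the way we expect. The sketch below is our own helper, not part of the StyleGAN3 repository: it counts the image entries in a zip file and reports whether they are stored uncompressed. The function name and the list of image extensions are assumptions made for illustration.

```python
import os
import zipfile

# Assumed set of image extensions to count; adjust for your own dataset.
IMAGE_EXTENSIONS = (".png", ".jpg", ".jpeg")


def summarize_dataset_zip(zip_path):
    """Return (image_count, all_uncompressed) for a dataset archive."""
    with zipfile.ZipFile(zip_path) as archive:
        images = [
            info for info in archive.infolist()
            if info.filename.lower().endswith(IMAGE_EXTENSIONS)
        ]
    all_stored = all(info.compress_type == zipfile.ZIP_STORED for info in images)
    return len(images), all_stored


DATASET_ZIP = "data/afhq-256x256.zip"  # the --dest path used above
if os.path.exists(DATASET_ZIP):
    count, stored = summarize_dataset_zip(DATASET_ZIP)
    print(f"{count} images, stored uncompressed: {stored}")
```

If the image count is far from what the source folder contains, it is cheaper to find out now than several hours into training.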

Now that our data is ready, we can begin training. If you have followed the above instructions, you can run training with the following terminal input:

python train.py --outdir=mods --gpus=1 --batch=16 --gamma=8.2 --data=data/afhq-256x256.zip --cfg=stylegan3-t --kimg=10 --snap=10

Once training has completed, your new model is saved to the designated output folder, in this case "mods".

Once we're done training our model, we can begin generating images. Here is an example of an image generated using the model that we trained using this process. After roughly 24 hours of training, we are able to generate realistic looking images of novel cats using StyleGAN3 on Gradient Notebooks.
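To go from a finished training run to generated images, we need the path of a saved model. The sketch below is a small helper of our own that looks for the most recent snapshot under the output folder and builds a gen_images.py command for it, reusing only the flags shown earlier. It assumes that snapshots are saved as files named network-snapshot-*.pkl inside numbered run folders and that zero-padded names sort in training order; check your own "mods" folder if the layout differs.

```python
from pathlib import Path


def latest_snapshot(outdir):
    """Return the last network-snapshot-*.pkl under outdir, or None.

    Assumes run folders and snapshot files carry zero-padded numbers,
    so sorting by name matches training order.
    """
    snapshots = sorted(
        Path(outdir).rglob("network-snapshot-*.pkl"),
        key=lambda p: (p.parent.name, p.name),
    )
    return snapshots[-1] if snapshots else None


def gen_images_command(network, seeds, outdir="out", trunc=1):
    """Build the gen_images.py argument list for one network and several seeds."""
    seed_arg = ",".join(str(s) for s in seeds)
    return [
        "python", "gen_images.py",
        f"--outdir={outdir}", f"--trunc={trunc}",
        f"--seeds={seed_arg}", f"--network={network}",
    ]


snapshot = latest_snapshot("mods")
if snapshot is not None:
    print(" ".join(gen_images_command(snapshot, seeds=[0, 1, 2])))
```

The printed command can be pasted into the terminal in the same way as the pretrained example, with a local pickle path in place of the URL.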

Conclusion:

StyleGAN3 improved StyleGAN2 in the following ways:

- Overhauled the upsampling filters to suppress aliasing more aggressively

- Removed the aliasing that caused texture sticking, where finer details were fixed to pixel coordinates instead of following the coarse features

- Improved capabilities for video and animation generation by forcing more natural hierarchical refinement of image details and creating internal representations of image details with coordinate systems to tie details correctly to underlying surfaces.

Follow our guide at the top of this article to begin setting up your own StyleGAN3 model training and image generation processes within the Gradient environment, and be on the lookout for part 2 of this article, where we will work with StyleGAN3 to train a complete, novel model on Workflows before deploying it.

Sources: