Ayoosh Kathuria

Currently a research assistant at IIIT-Delhi, working on representation learning in deep RL. Previously at MathWorks and DRDO.

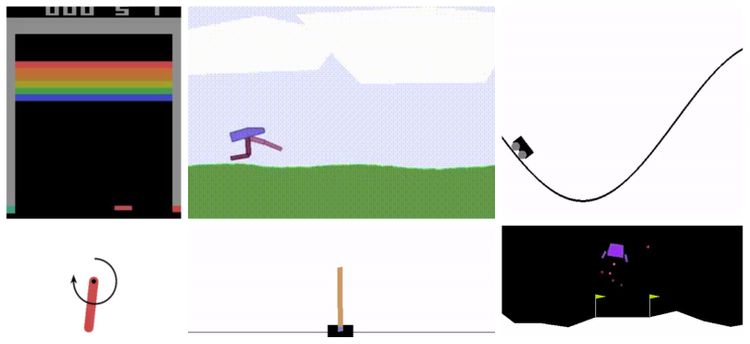

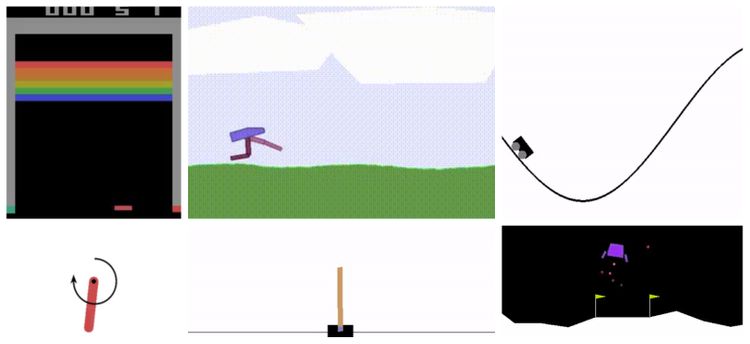

Getting Started With OpenAI Gym: Creating Custom Gym Environments

This post covers how to implement a custom environment in OpenAI Gym. As an example, we implement an environment in which the agent flies a chopper (helicopter) while avoiding obstacles mid-air.

The Machine Learning Practitioner's Guide to Reinforcement Learning: Overview of the RL Universe

In this post, we describe the anatomy of most deep reinforcement learning algorithms. We also cover the motivation for using RL over standard machine learning, on-policy vs. off-policy learning, the exploration-exploitation tradeoff, and many other important RL concepts.

Getting Started With OpenAI Gym: The Basic Building Blocks

In this article, we'll cover the basic building blocks of OpenAI Gym. This includes environments, spaces, wrappers, and vectorized environments.

Nuts and Bolts of NumPy Optimization Part 3: Understanding NumPy Internals, Strides, Reshape and Transpose

We cover basic mistakes that can lead to unnecessary copying of data and memory allocation in NumPy. We further cover NumPy internals, strides, reshaping, and transpose in detail.

Nuts and Bolts of NumPy Optimization Part 2: Speed Up K-Means Clustering by 70x

In this part, we'll see how to speed up an implementation of the k-means clustering algorithm by 70x using NumPy. We cover how to use cProfile to find bottlenecks in the code, and how to address them using vectorization.

Nuts and Bolts of NumPy Optimization Part 1: Understanding Vectorization and Broadcasting

In Part 1 of our series on writing efficient code with NumPy, we cover why loops are slow in Python and how to replace them with vectorized code. We also dig deep into how broadcasting works, with a few practical examples.