One of the things we’re always interested in learning more about is the use of ML in the music industry. We recently posted tutorials on Music Generation with LSTMs and Audio Classification with Deep Learning, but what about companies that are building applications with ML-based features for audio professionals?

That’s where Oliver Reznik comes into play. Oliver and his team have spent the past few years building a pair of ML-powered applications for musicians, DJs, and producers called Tunebat and Specterr. We were lucky enough to sit down with Oliver and explore some of these topics together.

Let’s dive into the world of ML-assisted professional audio tools!

Paperspace: You’re building two applications at the same time right now, is that right? Can you tell us about Tunebat and Specterr?

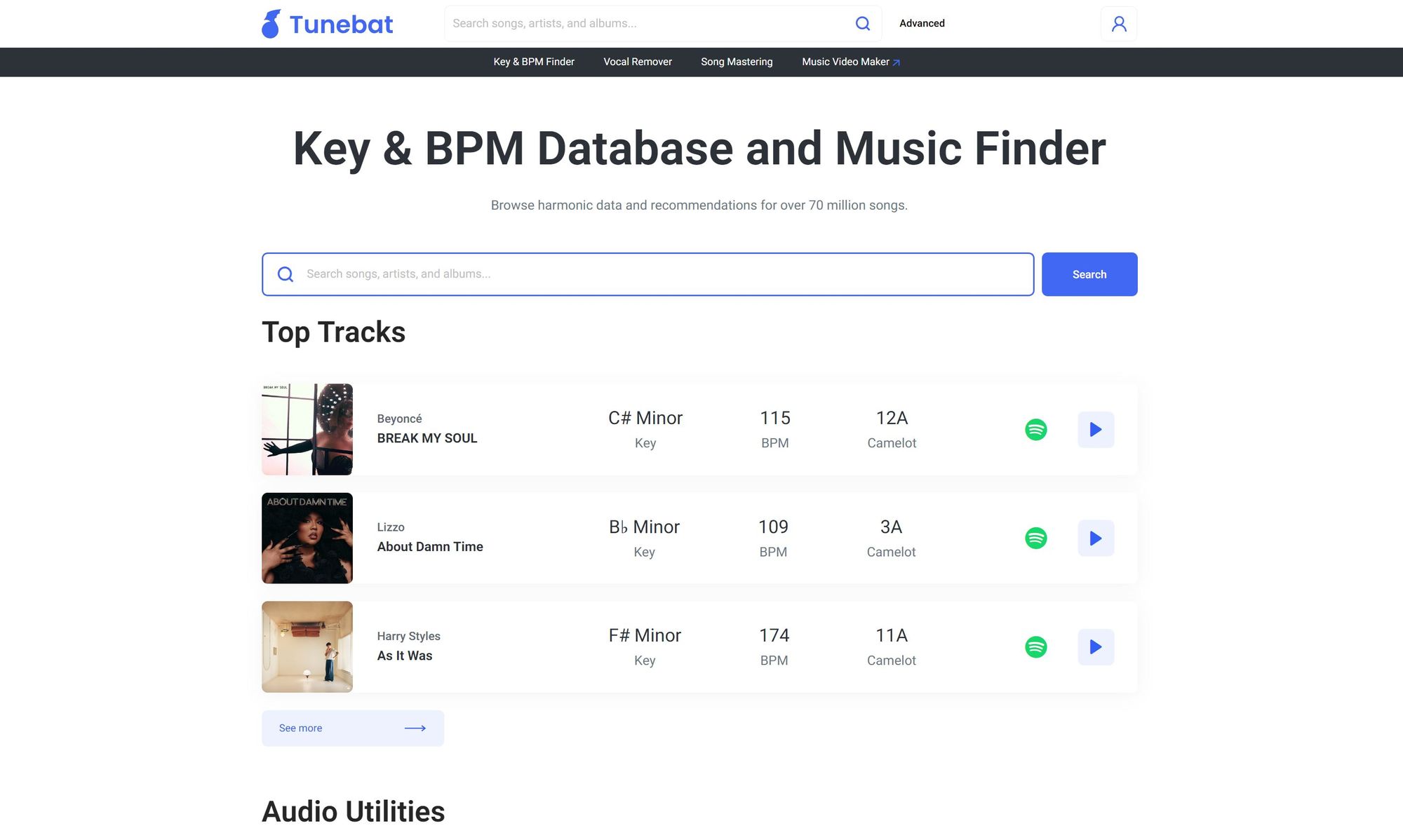

Reznik: Yes, that’s correct. Tunebat is a web app with a lot of different music-related features, probably best known for making it easy to find the key and BPM (beats per minute) of songs. You can either browse a music database or upload audio files to be analyzed by the key & BPM finder. Additionally, Tunebat assists users with music discovery, vocal removal, and automated mastering.
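The interview doesn't describe how Tunebat's BPM finder works internally. As a rough illustration only, one classic way to estimate tempo is to autocorrelate an onset-strength envelope (a per-frame measure of how much new energy appears in the audio) and pick the lag with the strongest periodicity. The sketch below assumes that envelope has already been computed at a known frame rate. `estimate_bpm` and its 60 to 200 BPM search range are hypothetical choices, not Tunebat's implementation.

```python
def estimate_bpm(onset_envelope, frame_rate, min_bpm=60.0, max_bpm=200.0):
    """Estimate tempo from an onset-strength envelope via autocorrelation.

    Hypothetical helper: assumes `onset_envelope` is a list of non-negative
    onset strengths sampled at `frame_rate` frames per second.
    """
    # Convert the BPM search range into a range of lags, measured in frames.
    min_lag = max(1, int(round(60.0 * frame_rate / max_bpm)))
    max_lag = int(round(60.0 * frame_rate / min_bpm))
    n = len(onset_envelope)
    if max_lag >= n:
        raise ValueError("envelope too short for the requested BPM range")

    best_lag, best_score = None, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Autocorrelation: how well the envelope lines up with a copy shifted by `lag`.
        score = sum(onset_envelope[i] * onset_envelope[i + lag] for i in range(n - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    # One beat every `best_lag` frames -> beats per minute.
    return 60.0 * frame_rate / best_lag
```

A raw autocorrelation like this is prone to octave errors (reporting half or double the true tempo), which is one reason production analyzers layer weighting and beat tracking on top of it.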

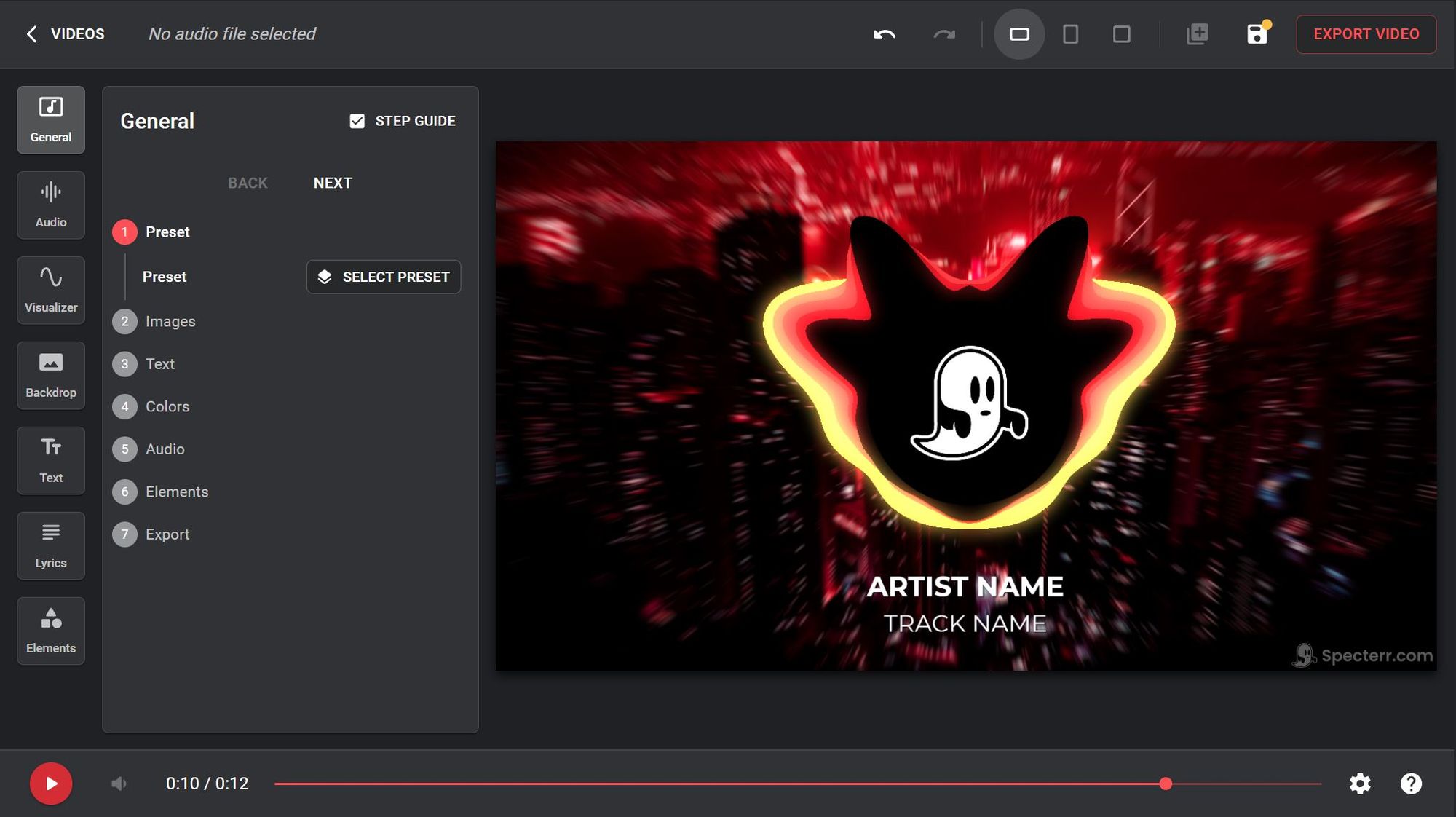

Specterr is an online music video maker. Users upload audio files and create customized videos using a web-based editor. After exporting, videos can be downloaded or posted on social media to help promote the artist. Specterr specializes in music visualizer and lyric video creation.

Paperspace: We’re interested in how you got started with these ideas. How and when did you know you were onto something useful for the music industry? What did your MVPs look like?

Reznik: Originally I was interested in learning how to DJ and I wanted to find songs that mixed well together. DJs often prefer to mix tracks with similar BPMs and keys to achieve smooth transitions. When I tried googling the key and BPM of songs, nothing came up. So I put my DJ aspirations on hold and created Tunebat as an easy way to find that info.
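The Camelot Wheel turns "mixes well" into simple arithmetic: each key gets a number from 1 to 12 (arranged along the circle of fifths) plus A for minor or B for major. A common DJ rule of thumb treats the same code, a neighbor one step around the wheel, or the relative major/minor (same number, other letter) as compatible. The sketch below encodes that rule plus a tempo check. The function names and the 6% BPM tolerance are illustrative assumptions, not Tunebat's logic, and tonic names are spelled with sharps only.

```python
PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def camelot_code(tonic, mode):
    """Map a key such as ("A", "minor") to its Camelot code, e.g. (8, "A")."""
    pc = PITCH_CLASSES.index(tonic)  # sharps only, e.g. "A#" rather than "Bb"
    if mode == "minor":
        # A minor key shares its Camelot number with its relative major (3 semitones up).
        pc = (pc + 3) % 12
        letter = "A"
    elif mode == "major":
        letter = "B"
    else:
        raise ValueError(f"unknown mode: {mode}")
    # Each perfect fifth (7 semitones) moves one step around the wheel; C major is 8B.
    number = (7 * pc + 7) % 12 + 1
    return number, letter

def keys_compatible(code_a, code_b):
    num_a, let_a = code_a
    num_b, let_b = code_b
    if let_a == let_b:
        # Same code, or one step either way around the wheel (12 wraps to 1).
        return (num_a - num_b) % 12 in (0, 1, 11)
    # Switching letter at the same number: relative major/minor.
    return num_a == num_b

def tracks_mix_well(bpm_a, key_a, bpm_b, key_b, bpm_tolerance=0.06):
    # Hypothetical tolerance: tempos within 6% of the faster track.
    tempo_ok = abs(bpm_a - bpm_b) / max(bpm_a, bpm_b) <= bpm_tolerance
    return tempo_ok and keys_compatible(camelot_code(*key_a), camelot_code(*key_b))
```

For example, `tracks_mix_well(124, ("A", "minor"), 126, ("C", "major"))` is true: the tempos are close and 8A and 8B are relative keys.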

That same data can also be leveraged to enable better music discovery for DJ mixes. The MVP was a more basic and ugly version of the database portion of the current-day Tunebat. Pretty soon after the initial launch, I posted a link on a DJ message board and traffic started pouring in. The users loved it and kept coming back, so I knew Tunebat was filling a need.

Specterr was an idea that once again was born from my personal experience. As a hobbyist EDM producer, I needed music videos in order to post my music on video platforms. The available music video tools were either too tedious to use, incapable of achieving the right effects, or just had a bad user experience. So I built a video rendering engine and started taking orders from artists where I would manually create videos for them with the engine.

It wasn’t an ideal process for anyone, but the demand was still there. So Specterr was created as a self-service platform for music video customization and rendering.

> “Paperspace has been the ideal platform for incorporating GPU-equipped machines into our cloud infrastructure. The Tunebat vocal remover depends on GPUs to process audio files with complex models. Specterr on the other hand relies on GPUs to accelerate the video rendering process, so videos can be generated in a timely manner. Paperspace enables both these use cases.”
>
> Oliver Reznik, Founder of Tunebat and Specterr

Paperspace: Tunebat is able to analyze an audio track and extract properties such as its tempo in beats per minute (BPM) and its key (or position on the Camelot Wheel). It can also remove vocals from an audio track. Can you tell us a little bit about how these features work?

Reznik: Sure. For the audio analyzer, we worked closely with the Music Technology Group at Universitat Pompeu Fabra in Barcelona.

We integrated their essentia.js library into Tunebat. This allows us to compute audio features on the client side using the algorithms and models from the Essentia project. The primary ML use case here is sentiment analysis, which in this context means estimating the perceived energy, danceability, and happiness of a track.

Essentia.js is really cool because it can run these models and analyze audio files efficiently, entirely in the web browser.
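Key detection in libraries like Essentia generally starts from a chroma (pitch-class profile) representation of the audio. As a simplified sketch of the classic Krumhansl-Schmuckler approach, and not necessarily the profile or configuration Essentia or Tunebat uses, the code below correlates a 12-bin chroma vector against major and minor key templates rotated to all 12 tonics and returns the best match. Computing the chroma vector itself is assumed to happen upstream, and `estimate_key` is a hypothetical name.

```python
import math

# Krumhansl-Kessler key profiles, indexed in semitones upward from the tonic.
MAJOR_PROFILE = [6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88]
MINOR_PROFILE = [6.33, 2.68, 3.52, 5.38, 2.60, 3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17]
PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("chroma vector must not be constant")
    return cov / (sx * sy)

def estimate_key(chroma):
    """Return (tonic, mode) for a 12-bin chroma vector whose bin 0 is C."""
    if len(chroma) != 12:
        raise ValueError("expected 12 chroma bins")
    best = None
    for tonic in range(12):
        for mode, profile in (("major", MAJOR_PROFILE), ("minor", MINOR_PROFILE)):
            # Rotate the template so its tonic weight lines up with chroma bin `tonic`.
            rotated = [profile[(i - tonic) % 12] for i in range(12)]
            score = pearson(chroma, rotated)
            if best is None or score > best[0]:
                best = (score, PITCH_CLASSES[tonic], mode)
    return best[1], best[2]
```

Fed a chroma vector containing only the notes C, E, and G, this returns `("C", "major")`; real chroma vectors averaged over a whole track are noisier, which is where better profiles and models earn their keep.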

The vocal remover also uses ML models, in this case to identify the vocal and instrumental parts of an audio track and split them into separate files. This processing is done in the cloud on GPU-enabled Paperspace VMs using PyTorch.
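The interview doesn't say which separation model Tunebat runs, but many separation models work in the time-frequency domain: the network estimates how much of each spectrogram bin belongs to each source, and those estimates become masks applied to the mixture. The sketch below illustrates only that masking step, using plain Python complex numbers as stand-ins for STFT bins. The per-bin magnitude estimates a PyTorch model would produce are assumed inputs, and `split_with_ratio_mask` is a hypothetical helper.

```python
def split_with_ratio_mask(mixture_bins, vocal_mag, accomp_mag, eps=1e-12):
    """Split mixture STFT bins into vocal and instrumental bins with a soft ratio mask.

    Hypothetical helper: `vocal_mag` and `accomp_mag` stand in for a model's
    per-bin magnitude estimates for each source.
    """
    if not (len(mixture_bins) == len(vocal_mag) == len(accomp_mag)):
        raise ValueError("inputs must have the same number of bins")
    vocals, instrumental = [], []
    for x, v, a in zip(mixture_bins, vocal_mag, accomp_mag):
        total = v + a
        # Fraction of this bin attributed to the voice; 0.5 if the model
        # assigns (almost) no energy to either source.
        mask = v / total if total > eps else 0.5
        vocals.append(mask * x)
        # The complementary mask guarantees the two stems sum back to the mixture.
        instrumental.append((1.0 - mask) * x)
    return vocals, instrumental
```

In a full pipeline, each masked spectrogram would then be converted back to a waveform (for example with `torch.istft` in PyTorch) and written out as its own audio file.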

Paperspace: Specterr is able to generate some pretty interesting music-aware visuals from a song. What can you tell us about how it works?

Reznik: With Specterr, it’s important that the visuals complement the audio rather than compete with it. The visualizer generates animations that respond to and accentuate the features of the uploaded music file.

Specterr analyzes the file using an FFT (fast Fourier transform), which converts short windows of the audio signal into amplitude values across a range of frequencies. This data is then used to drive the audio visualizations.
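To make the FFT step concrete, here is a minimal, dependency-free sketch: a radix-2 FFT, a Hann window to reduce spectral leakage, and a reduction of the magnitude spectrum into a handful of bar heights. Specterr's engine isn't described beyond its use of the FFT, so the function names and the linear bar grouping are hypothetical; many visualizers space bars logarithmically instead, to match how we hear pitch.

```python
import cmath
import math

def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        # Twiddle factor combines the even- and odd-indexed half-size transforms.
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def magnitude_spectrum(frame):
    """Amplitude per frequency bin, from 0 Hz up to just below Nyquist."""
    n = len(frame)
    if n < 2:
        raise ValueError("frame must contain at least two samples")
    # Hann window tapers the frame edges to reduce spectral leakage.
    windowed = [s * 0.5 * (1 - math.cos(2 * math.pi * i / (n - 1))) for i, s in enumerate(frame)]
    spectrum = fft(windowed)
    # Keep the non-negative frequencies; for real input the rest mirror them.
    return [abs(c) / n for c in spectrum[: n // 2]]

def bar_heights(frame, num_bars):
    """Collapse the spectrum into `num_bars` linearly spaced bars (hypothetical layout)."""
    mags = magnitude_spectrum(frame)
    size = len(mags) // num_bars
    if size == 0:
        raise ValueError("more bars than frequency bins")
    return [max(mags[b * size:(b + 1) * size]) for b in range(num_bars)]
```

Bin `k` corresponds to a frequency of `k * sample_rate / n`, so with 44.1 kHz audio and 2048-sample frames each bin is about 21.5 Hz wide. Running this once per video frame yields the amplitude-per-frequency data that drives the animation.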

We’ve spent a great deal of time figuring out how to manipulate this data to create visuals that are aesthetically pleasing. Our engine uses a variety of in-house algorithms to animate the different video elements.

When the video is synced with the audio, you get visuals that dance to the music.
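Specterr's animation algorithms are in-house and not described in the interview, but one widely used trick for making spectrum-driven motion look pleasing rather than jittery is asymmetric smoothing: let a value rise almost instantly (attack) and fall back slowly (release). A minimal sketch, where `attack_release` and its default coefficients are hypothetical:

```python
def attack_release(values, attack=0.8, release=0.15, start=0.0):
    """Smooth a sequence of per-frame values with separate rise and fall rates.

    Each output moves a fraction of the way toward the new input: `attack`
    when the input is above the current level, `release` otherwise.
    """
    out = []
    level = start
    for x in values:
        coef = attack if x > level else release
        level += coef * (x - level)
        out.append(level)
    return out
```

For example, `attack_release([0, 1, 1, 0, 0], attack=1.0, release=0.5)` returns `[0.0, 1.0, 1.0, 0.5, 0.25]`: the level jumps up on the hit and then decays by half each frame. Applied per bar and per video frame, this keeps bars snapping up on kicks and drifting down between them.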

Paperspace: What does the roadmap look like moving forward? How do you decide what features to add next? What signals do you look for from your users during adoption?

Reznik: We’re always working on improvements and new features. We mainly look at usage volume and retention when evaluating the impact of a feature. The vocal remover has become one of the most used features on Tunebat so we intend to expand on it soon with new removal options such as drums, bass, and more.

Later on in our roadmap, we want to provide better support for developers with an API for Tunebat and Specterr. We also want to give music listeners insight into their listening habits through an upcoming project called MusicStats.com.

Paperspace: You’ve been a Paperspace user for a little while – can you tell us about what problem Paperspace solves for you? What can you tell us about the role of GPU computing in building Tunebat & Specterr?

Reznik: Paperspace has been the ideal platform for incorporating GPU-equipped machines into our cloud infrastructure. The Tunebat vocal remover depends on GPUs to process audio files with complex models. Specterr on the other hand relies on GPUs to accelerate the video rendering process, so videos can be generated in a timely manner. Paperspace enables both these use cases.

Beyond that, it’s just really easy to use as a developer. Paperspace has a lot of features, like pre-configured templates and a well-designed console application, that speed up many of our development processes.

Paperspace: What’s the best way for our readers to start using Tunebat or Specterr? What would you recommend they check out for more information about your products?

Reznik: I’d definitely recommend getting started by visiting tunebat.com or specterr.com. From there it’s pretty easy to start browsing the Tunebat database. You’ll find some interesting information about how happy or energetic your favorite music is, and you can easily discover some new music to listen to as well.

On Specterr, you can jump into the video editor and start customizing right away. If you have some music you’d like to use, just upload it and you’ll be able to see a live preview as you edit.