This tutorial is part of a seven-part series on dimension reduction:

- Understanding Dimension Reduction with Principal Component Analysis (PCA)

- Diving Deeper into Dimension Reduction with Independent Components Analysis (ICA)

- Multi-Dimension Scaling (MDS)

- LLE

- t-SNE

- IsoMap

- Autoencoders

(A Jupyter notebook with the math and code (Python and PySpark) is available in the GitHub repo.)

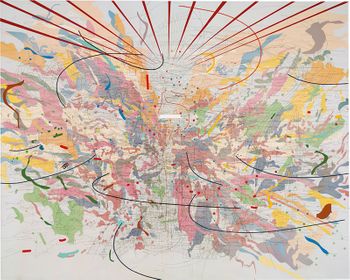

LLE is a topology-preserving manifold learning method. All manifold learning algorithms assume that the dataset lies on a smooth, non-linear manifold of low dimension, and that a mapping $f: \mathbb{R}^D \to \mathbb{R}^d$ ($D \gg d$) can be found by preserving one or more properties of the higher-dimensional space. Topology preservation means that the neighborhood structure stays intact. Compared with distance-preserving methods such as MDS, topology preservation is more intuitive: points that are close together in the higher dimension should remain close in the lower dimension. The inspiration behind LLE is, as the original authors nicely put it, 'Think globally, fit locally'. Methods such as SOM (self-organizing map) are also topology preserving, but they assume a predefined lattice for the lower-dimensional manifold. LLE instead builds the lattice from the information contained in the dataset.

The image above shows a simulated dataset and its LLE embedding. A few points of the simulated S-curve are circled; they are neighbors of the same point. In the LLE embedding they are mapped close to each other (the points in the circle), which shows LLE's ability to preserve the neighborhood structure.

LLE makes a few important assumptions:

- The data is well sampled, i.e. the density of the dataset is high.

- The dataset lies on a smooth manifold.

Well sampled means that every point has at least 2d points in its neighborhood. If the manifold is smooth, we can assume that a point and its neighbors lie on a locally linear patch of the manifold. If there are sharp bends or holes, this property won't hold.

Implementation of LLE

Necessary imports.

    # necessary imports
    from sklearn import datasets
    from pyspark.sql import SQLContext as SQC
    from pyspark.mllib.linalg import Vectors as mllibVs, VectorUDT as mllibVUDT
    from pyspark.ml.linalg import Vectors as mlVs, VectorUDT as mlVUDT
    from pyspark.sql.types import *
    from pyspark.mllib.linalg import *
    from pyspark.sql.functions import *
    from pyspark.ml.feature import StandardScaler
    from pyspark.mllib.linalg.distributed import IndexedRowMatrix
    import math as m
    import numpy as np

Steps of LLE:

- Create neighborhood graph

- For every point calculate local weight matrix W

- For every point, use W to reconstruct the point from its neighbors in the lower-dimensional space.

First Step of LLE: Neighborhood graph:

The first step of LLE is to create a neighborhood graph. A distance metric is needed to measure the distance between two points and decide whether they are neighbors; examples include the Euclidean, Mahalanobis, Hamming, and cosine distances.

Neighbourhood matrix: The neighbourhood matrix can be created using an e-neighbourhood, K-nearest neighbors, or locality-sensitive hashing.

e-neighbourhood: An edge is created between node i and node j if the distance between them is smaller than a fixed parameter e. The value of e should be neither too small nor too large: if e is too large, every node will have an edge to every other node, and if it is too small, many nodes will be left without neighbors.
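The effect of the threshold can be seen on a handful of points. The following is a minimal NumPy sketch, not the PySpark code used in this tutorial; the function name `epsilon_neighbors` and the toy data are made up for illustration, and Euclidean distance is assumed.

```python
import numpy as np


def epsilon_neighbors(X, eps):
    """Boolean adjacency matrix: adj[i, j] is True when ||X[i] - X[j]|| < eps."""
    diff = X[:, None, :] - X[None, :, :]          # pairwise differences
    dist = np.sqrt((diff ** 2).sum(axis=-1))      # pairwise Euclidean distances
    adj = dist < eps
    np.fill_diagonal(adj, False)                  # a point is not its own neighbor
    return adj


# Hypothetical toy data: three points close together and one far away.
X = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [5.0, 5.0]])

for eps in (0.05, 0.5, 10.0):
    degree = epsilon_neighbors(X, eps).sum(axis=1)  # neighbors per point
    print(f"e = {eps}: neighbors per point = {degree.tolist()}")
```

With the smallest threshold no point has a neighbor, with the largest every point is connected to every other point, and only the middle value separates the cluster from the isolated point.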

K-nearest neighbors: For every node, we choose the K nearest data points as its neighbors. This ensures that every node has K neighbors. If the density of the data points varies a lot, however, this method produces an asymmetric neighborhood graph. For example, node i may be selected as a neighbor by a faraway point that lies in a low-density region, even though that point is not among node i's own K nearest neighbors.
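The asymmetry is easy to reproduce. This is a small NumPy sketch under the assumption of Euclidean distance; `knn_indices` and the one-dimensional toy data are hypothetical and only serve to illustrate the point.

```python
import numpy as np


def knn_indices(X, k):
    """For each row of X, return the indices of its k nearest other rows."""
    diff = X[:, None, :] - X[None, :, :]
    dist = (diff ** 2).sum(axis=-1)               # squared Euclidean distances
    np.fill_diagonal(dist, np.inf)                # a point is not its own neighbor
    return np.argsort(dist, axis=1)[:, :k]


# Hypothetical 1-D data: a dense cluster (ids 0-2) plus one isolated point (id 3).
X = np.array([[0.0], [0.1], [0.2], [3.0]])
nbrs = knn_indices(X, k=2)

isolated = 3
print("neighbors chosen by the isolated point:", nbrs[isolated].tolist())
picked_back = [i for i in range(len(X)) if isolated in nbrs[i]]
print("points that choose the isolated point:", picked_back)
```

The isolated point has to pick its neighbors from the cluster, while no point in the cluster picks it back, so the resulting graph is directed rather than symmetric.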

Locality-sensitive hashing: Unlike the two methods above, locality-sensitive hashing is an approximate method for selecting neighbors. In layman's terms, it hashes every point using a set of hash functions, and all points that hash to the same bucket are classified as neighbors. If you wish to learn more about LSH, there are many good tutorials available on the web.
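As a rough illustration of the bucketing idea, the sketch below uses one particular hash family, random hyperplanes, which is suited to angular (cosine) similarity. This choice, the function name `lsh_buckets`, and the toy data are assumptions made for the example and are not part of the tutorial's implementation.

```python
import numpy as np
from collections import defaultdict


def lsh_buckets(X, n_planes, seed=0):
    """Group the row indices of X by the sign pattern of random projections."""
    rng = np.random.default_rng(seed)
    planes = rng.normal(size=(X.shape[1], n_planes))  # one random hyperplane per column
    bits = (X @ planes) > 0                           # which side of each hyperplane
    buckets = defaultdict(list)
    for idx, row in enumerate(bits):
        buckets[tuple(row.tolist())].append(idx)      # the sign pattern is the hash key
    return dict(buckets)


# Hypothetical toy data: rows 0 and 1 point in the same direction, row 2 in the opposite one.
X = np.array([[1.0, 2.0, 3.0], [2.0, 4.0, 6.0], [-1.0, -2.0, -3.0]])
buckets = lsh_buckets(X, n_planes=4)
for key, members in buckets.items():
    print(key, members)
```

Points that share a bucket are only candidate neighbors; a typical follow-up is to rank the candidates within a bucket by their true distance.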

    # For every point, collect the squared distance to every other point.
    # `spark` (SparkSession) and `sc` (SparkContext) are assumed to exist already,
    # as they do in a pyspark shell or notebook.
    num_samples = 3000
    X, color = datasets.make_s_curve(num_samples)

    # one row per point: (id, feature vector)
    df = spark.createDataFrame(
        sc.parallelize(X.tolist()).zipWithIndex()
          .map(lambda x: (x[1], mlVs.dense(x[0]))),
        ["id", "features"])

    # "sim" holds the squared Euclidean distance, so smaller means closer
    udf_dist = udf(lambda x, y: float(x.squared_distance(y)), DoubleType())

    df_2 = df  # keep the original points for the join at the end
    # all pairs of points
    df = df.crossJoin(df).toDF('x_id', 'x_feature', 'y_id', 'y_feature')
    df = df.withColumn("sim", udf_dist(df.x_feature, df.y_feature))
    df = df.drop("x_feature")

    # gather (y_id, sim, y_feature) for every x_id into one list per point
    st = struct("y_id", "sim", "y_feature").alias("map")
    df = df.select("x_id", st)
    df = df.groupby("x_id").agg(collect_list("map").alias("map"))
    df = df.join(df_2, df_2.id == df.x_id, "inner").drop("x_id")

Second Step of LLE: Linear Weighted Reconstruction of a point using its neighbors:

Each point of the dataset is reconstructed as a linear weighted sum of its neighbors. Since only the neighbors take part in the reconstruction, it is local; since the reconstruction is a linear combination of the neighbors, it is linear. That is why the method is called locally linear embedding.
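In symbols, this reconstruction can be stated as a constrained least-squares problem. The form below follows the standard LLE formulation; writing $Y_i$ for a data point and $W_{ij}$ for the weight that neighbor $j$ contributes to point $i$ is a notational choice made here to match the steps listed further down.

$$
\min_{W} \; \sum_i \Big\| Y_i - \sum_j W_{ij}\, Y_j \Big\|^2
\quad \text{subject to} \quad \sum_j W_{ij} = 1, \qquad W_{ij} = 0 \ \text{ if } Y_j \text{ is not a neighbor of } Y_i .
$$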

The weights of points $P_i$ and $P_j$ are independent of each other.

Rotations, rescalings, and translations of a point and its neighbors do not affect the point's local W matrix.

It is important to note that, when solving for W, if the number of neighbors is greater than the original dimension D, the local covariance matrix becomes singular (or nearly so) and the least-squares problem has multiple solutions. This issue can be handled by adding a regularization term to the least-squares problem that penalizes large weights.
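The invariance property can be checked numerically. The sketch below is a plain NumPy stand-in for the per-point weight computation, not the PySpark UDF used in this tutorial; the helper `local_weights`, the random data, and the trace-scaled regularization are assumptions for illustration. It deliberately uses more neighbors than dimensions, the case where regularization is needed.

```python
import numpy as np


def local_weights(point, neighbors, reg=1e-3):
    """Weights that reconstruct `point` from the rows of `neighbors`."""
    Z = neighbors - point                      # center the neighbors on the point
    C = Z @ Z.T                                # local covariance, shape (k, k)
    trace = np.trace(C)
    C = C + np.eye(len(C)) * reg * (trace if trace > 0 else 1.0)  # regularize
    w = np.linalg.solve(C, np.ones(len(C)))
    return w / w.sum()                         # enforce that the weights sum to one


rng = np.random.default_rng(0)
point = rng.normal(size=3)                           # D = 3
neighbors = point + 0.1 * rng.normal(size=(5, 3))    # k = 5 > D

# Apply a random orthogonal map Q, a rescaling s and a translation t.
Q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
s, t = 2.5, np.array([10.0, -4.0, 7.0])
moved_point = s * (Q @ point) + t
moved_neighbors = s * (neighbors @ Q.T) + t

w = local_weights(point, neighbors)
w_moved = local_weights(moved_point, moved_neighbors)
print(np.allclose(w, w_moved))
```

Scaling the regularization by the trace of C is what keeps the weights unchanged under rescaling in this sketch; with a fixed regularization constant the invariance would only hold approximately.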

For every point $Y_i$:

- Create a matrix Z with all neighbors of $Y_i$.

- Subtract $Y_i$ from Z.

- Create the local covariance matrix $C = ZZ^T$.

- Add a regularization term to avoid C being singular: $C = C + \text{reg} \cdot I$.

- Solve $CW = \mathbf{1}$ for W.

- Set $W_{ij} = 0$ if j is not a neighbor of i.

- Set $W = W / \text{sum}(W)$.

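The local weight matrix is then calculated for every point:

    # calculate local neighborhood matrix
    from scipy import linalg  # provides linalg.solve, which the imports above do not cover

    def get_weights(map_list, k, features, reg):
        # sort by squared distance and skip the first entry (the point itself)
        sorted_map = sorted(map_list, key=lambda x: x[1])[1:(k + 1)]
        neighbors = np.array([s[2].toArray() for s in sorted_map])
        ind = [s[0] for s in sorted_map]
        nbors, n_features = neighbors.shape
        # center the neighbors on the point
        neighbors_mat = neighbors - features.toArray().reshape(1, n_features)
        cov_neighbors = np.dot(neighbors_mat, neighbors_mat.T)
        # add regularization term, scaled by the trace of the covariance
        trace = np.trace(cov_neighbors)
        if trace > 0:
            R = reg * trace
        else:
            R = reg
        cov_neighbors.flat[::nbors + 1] += R
        # sym_pos=True is no longer accepted by recent SciPy; assume_a="pos" replaces it
        weights = linalg.solve(cov_neighbors, np.ones(k), assume_a="pos")
        weights = weights / weights.sum()
        # scatter the k weights into a vector indexed by point id
        full_weights = np.zeros(len(map_list))
        full_weights[ind] = weights
        return mllibVs.dense(full_weights)

    udf_sort = udf(get_weights, mllibVUDT())
    df = df.withColumn("weights", udf_sort("map", lit(10), "features", lit(0.001)))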

Third Step of LLE: Reconstruct points in lower dimension:

At this step, we no longer need the original dataset. We now have to create each point in the lower dimension using its neighbors and the local W matrix. The neighborhood graph and the local weight matrix capture the topology of the manifold.

The reconstruction error in the lower dimension is constrained to make the problem well posed. Equation 1 (in the image 'Steps in LLE') constrains the output to be centered at the origin, which makes it translation invariant. Equation 2 is required to make the output rotation and scaling invariant.
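For reference, the constrained problem in the standard LLE formulation can be written as follows. The symbol $\tilde{Y}_i$ for the low-dimensional image of point $i$ and the labels (1) and (2) are assumptions made here to line up with the two constraints described above; they may differ from the notation in the original figure.

$$
\min_{\tilde{Y}} \; \Phi(\tilde{Y}) = \sum_i \Big\| \tilde{Y}_i - \sum_j W_{ij}\, \tilde{Y}_j \Big\|^2
\quad \text{subject to} \quad
\sum_i \tilde{Y}_i = 0 \;\; (1), \qquad
\frac{1}{N} \sum_i \tilde{Y}_i \tilde{Y}_i^{T} = I \;\; (2)
$$

Stacking the $\tilde{Y}_i$ as the rows of an $N \times d$ matrix $\tilde{Y}$, the cost can be rewritten as $\Phi(\tilde{Y}) = \mathrm{tr}(\tilde{Y}^T M \tilde{Y})$ with $M = (I - W)^T (I - W)$. The minimizer is therefore given by the eigenvectors of $M$ with the smallest eigenvalues, after discarding the constant eigenvector whose eigenvalue is zero.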

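    # helper for creating a row of the identity matrix
    def I(ind):
        i = [0.0] * num_samples  # one entry per point
        i[ind] = 1.0
        return mllibVs.dense(i)

    # convert dataframe to an IndexedRowMatrix whose rows are the rows of (I - W)
    weights_irm = IndexedRowMatrix(
        df.select(["id", "weights"]).rdd.map(lambda x: (x[0], I(x[0]) - x[1])))
    # M = (I - W)^T (I - W)
    M = weights_irm.toBlockMatrix().transpose().multiply(weights_irm.toBlockMatrix())
    SVD = M.toIndexedRowMatrix().computeSVD(num_samples, True)

    # select the vectors for the low-dimensional embedding: the d columns of V
    # just before the last one, flipped so the smallest singular value comes first
    d = 2  # target dimension; assumed here because the original code does not define d
    lle_embbeding = np.fliplr(SVD.V.toArray()[:, -(d + 1):-1])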
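For small problems the same step can be checked on a single machine. The following NumPy sketch is an assumption-laden stand-in for the distributed code above: it uses a dense eigendecomposition instead of Spark's SVD, and the function `embed_from_weights` and the ring-shaped weight matrix are hypothetical. Because `np.linalg.eigh` returns eigenvalues in ascending order, the wanted vectors sit at the start of the result rather than at the end.

```python
import numpy as np


def embed_from_weights(W, d):
    """Return an (N, d) embedding from an (N, N) weight matrix whose rows sum to one."""
    N = W.shape[0]
    I_minus_W = np.eye(N) - W
    M = I_minus_W.T @ I_minus_W
    _, eigvecs = np.linalg.eigh(M)             # eigenvalues in ascending order
    return eigvecs[:, 1:d + 1]                 # drop the first (constant) eigenvector


# Hypothetical weights: 12 points on a ring, each the average of its two ring neighbors.
N = 12
W = np.zeros((N, N))
for i in range(N):
    W[i, (i - 1) % N] = 0.5
    W[i, (i + 1) % N] = 0.5

Y = embed_from_weights(W, d=2)
print(Y.shape)
print(np.round(Y.sum(axis=0), 8))              # column sums, expected to be near zero
```

For this ring the two retained eigenvectors place the points on a circle, so neighbors on the ring stay neighbors in the embedding.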

Plot the resultant embedding

Visualization of a subset of the MNIST dataset after LLE.

Drawbacks of LLE:

LLE is sensitive to outliers and noise. Real datasets often have varying density, and a smooth manifold cannot always be assumed. In these cases, LLE gives poor results.

Conclusion: In this article, we discussed the basic concepts needed to understand LLE and then implemented a standard LLE algorithm in PySpark. Hessian LLE, Modified LLE, and LTSA address some of the shortcomings of LLE.

We will talk about t-SNE in the next article.