The Ampere microarchitecture has proven dominant across the domains that require GPU computation, especially deep learning. Whether we are discussing the lower powered, consumer grade Workstation GPUs or the higher powered, professional grade Data Center GPUs, a user would be hard pressed to achieve similar performance on older generation GPUs of the same class. We outlined why Ampere is so powerful in our Ampere deep dive and in our coverage of Tensor Cores and Mixed Precision Training, so please do check those out for more information about this microarchitecture.

This is especially true of the de facto apex machine for Deep Learning: the A100. In benchmarking tasks, the A100 has demonstrated a radical improvement over the previous Data Center GPU peak, the V100. These GPUs, whether used alone or in a multi-GPU machine instance, therefore represent the state of the art in a data scientist's technological arsenal.

Paperspace is ecstatic to announce that we are bringing more of these powerful Ampere GPUs to our users. These new machines, including new A100 - 80 GB, A4000, and A6000 GPUs, are now available for Paperspace users, with accessibility varying from region to region. In the next section, let's take a slightly deeper look at the new A100 - 80 GB instances, contrast their pricing with competitors, and detail their regional availability. We will then discuss the other new Ampere GPUs and their regional availability.

The A100 - 80 GB on Paperspace

We are pleased to announce that we now have A100s with both 40 GB and 80 GB of GPU memory on Paperspace. Previously, only A100s with 40 GB of GPU memory were available. The 80 GB versions, which differ little from their counterparts beyond their GPU memory specification, have double the available GPU memory. Effectively, this lets users fit larger models and batches on a single GPU, taking even better advantage of the massive memory throughput (1,555 GB/s) of these powerhouse machines to train deep learning models more rapidly than ever before!
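To give a rough sense of what doubling GPU memory can mean in practice, here is a minimal back-of-the-envelope sketch. It assumes a common rule of thumb of 16 bytes per parameter for mixed precision training with the Adam optimizer (2 bytes for fp16 weights, 2 for fp16 gradients, and 12 for fp32 optimizer state), and it ignores activations, buffers, and framework overhead, so the results are optimistic upper bounds rather than guarantees. The function name `max_trainable_params` is our own, purely for illustration.

```python
# Rough upper bound on how many parameters fit in GPU memory during training.
# ASSUMPTION: mixed precision training with Adam costs about 16 bytes per
# parameter (2 fp16 weights + 2 fp16 gradients + 12 fp32 optimizer state).
# Activations and framework overhead are ignored, so real limits are lower.

BYTES_PER_GB = 10**9          # assumes decimal gigabytes
BYTES_PER_PARAM = 2 + 2 + 12  # assumed rule of thumb, see comment above


def max_trainable_params(gpu_memory_gb, bytes_per_param=BYTES_PER_PARAM):
    """Return an optimistic parameter count that fits in the given GPU memory."""
    return (gpu_memory_gb * BYTES_PER_GB) // bytes_per_param


for memory_gb in (40, 80):
    params = max_trainable_params(memory_gb)
    print(f"A100 {memory_gb} GB: up to ~{params / 1e9:.1f}B parameters (upper bound)")
```

Under these assumptions, the 80 GB card has room for twice as many parameters as the 40 GB card, or equivalently for the same model with far more memory left over for larger batches.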

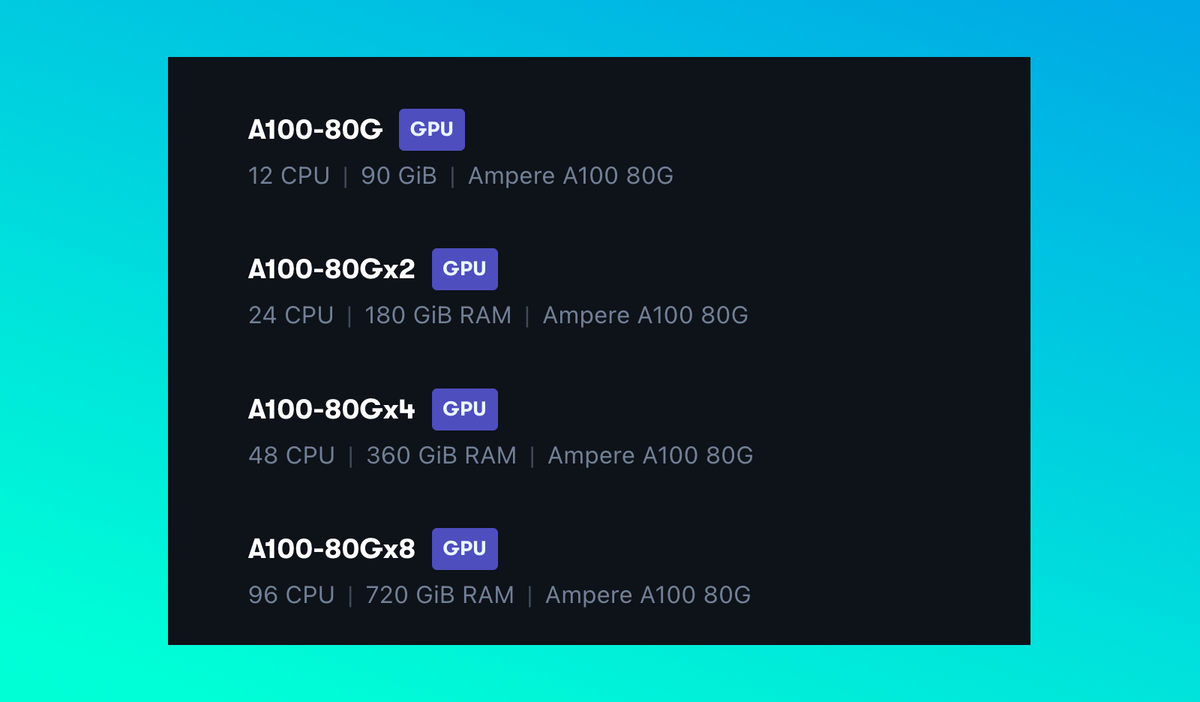

Both the 80 GB and 40 GB A100s can now be configured into even larger multi-GPU instances. You can now select A100s in 1x, 2x, 4x, and 8x configurations if you are on the Growth plan. Using one of these multi-GPU machine types, when combined with a Deep Learning package for multi-GPU instances like Colossal AI, can massively cut your training times. This is compounded by the Third Generation NVLink interconnect present in A100s, which speeds up communication between GPUs and accelerates training beyond what previous generation multi-GPU instances could achieve.

As you can see from the table above, the pricing for these multi-GPU machines scales with the number of GPUs in the system multiplied by the single GPU price. At $24.72 per hour, the A100 - 40 GB x 8 instance is significantly cheaper on Paperspace than on major competing services like AWS (which does not allow users to access x 1, x 2, or x 4 instances of A100s) and GCP, where a similar instance would cost $32.77 and $29.38 per hour respectively.
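The arithmetic behind this comparison can be sketched in a few lines. The prices below are the hourly figures quoted in this post for an 8x A100 - 40 GB instance; the function names and the 24 hour job length are hypothetical, chosen only for illustration.

```python
# Hourly prices (USD) for an 8x A100 40 GB instance, as quoted in this post.
PRICES = {
    "Paperspace": 24.72,
    "AWS": 32.77,
    "GCP": 29.38,
}
GPUS_PER_INSTANCE = 8


def per_gpu_price(instance_price, gpu_count=GPUS_PER_INSTANCE):
    """Hourly price of one GPU within a multi-GPU instance."""
    return instance_price / gpu_count


def job_cost(provider, hours):
    """Total cost of running the instance for a given number of hours."""
    return PRICES[provider] * hours


def savings_vs(provider, hours=1.0, baseline="Paperspace"):
    """How much less the baseline costs than `provider` for the same runtime."""
    return job_cost(provider, hours) - job_cost(baseline, hours)


HYPOTHETICAL_JOB_HOURS = 24  # illustrative training run length, not a benchmark

for name in PRICES:
    print(
        f"{name}: ${per_gpu_price(PRICES[name]):.2f} per GPU hour, "
        f"${job_cost(name, HYPOTHETICAL_JOB_HOURS):.2f} for {HYPOTHETICAL_JOB_HOURS} hours"
    )
```

With the quoted prices, the Paperspace instance works out to $3.09 per GPU hour, and the gap to AWS and GCP is $8.05 and $4.66 per hour respectively. Because cost grows linearly with runtime, that gap widens with every hour of training.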

This difference in pricing matters all the more because the A100 - 80 GB instances are also less costly, and because cost scales directly with the time a machine is running, any reduction in training time compounds the savings. Relatively few other GPU cloud providers offer access to these A100 - 80 GB machines. Major competitors like AWS and GCP do not offer any instances with this GPU type, only the 40 GB version. Among the providers that do offer it, our pricing continues to compare favorably. For example, the NC A100 v4 series at Azure, which is still in preview in their documentation, will be priced at $3.64 per hour for a single GPU instance: over half a USD more per hour than Paperspace.

This expanded GPU capacity for A100s will be accessible in the NY2 region for now, but be sure to watch our announcement pages for further updates in your region!

More Ampere in more regions!

In addition to the new A100s for the NY2 region, our other two regions have also received major upgrades in the form of additional Ampere machine types. Specifically, these are new A4000 instances in CA1 and AMS1 in single, x 2, and x 4 multi-GPU machine types, and new A6000 single GPU machine types available in AMS1.

These additions to our Ampere Workstation GPU lineup offer massive upgrades over the previous generation RTX 4000 and RTX 6000 GPUs, which are also available on Paperspace, and they are available to users in these regions today.

For more information about regional availability for Paperspace machines, please visit our docs.

Closing thoughts

These Ampere series GPUs represent a massive upgrade for any user new to the microarchitecture. Now, more users than ever can access these GPUs on Paperspace in each region, and the new A100 - 80 GB multi-GPU instances put more power in the hands of our users than ever before.

We are always trying to bring our users the most powerful machines for their work on Paperspace. Look out for upcoming machine types and other Paperspace updates by keeping up with us at https://updates.paperspace.com/.