Bring this project to life

PyTorch Lightning is a framework for research using PyTorch that simplifies our code without taking away the power of original PyTorch. It abstracts away boilerplate code and organizes our work into classes, enabling, for example, separation of data handling and model training that would otherwise quickly become mixed together and hard to maintain.

In this way it enables building up and rapid iteration on advanced projects, and getting to results that would otherwise be difficult to reach.

When combined with the ease of use of Paperspace and its readily available GPU hardware, this provides an excellent route for data scientists who want to get ahead on their real projects, without having to become full-time maintainers of code and infrastructure.

Who is Lightning for?

In a blog entry on Lightning by its creator, they state

PyTorch Lightning was created for professional researchers and PhD students working on AI research

This is fair, and to get the full benefit from the structure it provides to our code requires doing real-sized projects. In turn, this requires users to be familiar already with PyTorch, Python classes, and deep learning concepts.

Nevertheless, it is not any harder to use than those tools, and in some ways it is easier. So for those on their journey to becoming data scientists, it is a good route to follow.

This is particularly true given its documentation, which includes excellent overviews of what Lightning can do, and includes a set of tutorials that introduce PyTorch as well as Lightning.

How Lightning is Organized

Lightning separates the main parts of our end-to-end data science workflow into classes. This separates data preparation and model training, and makes things re-useable without having to go through the code line-by-line. For example, changes needed to train a model on a new dataset becomes much clearer.

The main classes in Lightning are

- LightningModule

- DataModule

- Trainer

The LightningModule enables a deep learning model to be trained within Lightning, by using the PyTorch nn.Module. This can include training, validation, and testing as separate methods within the class.

The DataModule lets us put all the processing needed for a particular dataset in one place. In real-world projects, going from our raw data to something model-ready can be a very large part of the overall code and effort needed to get to a result. So organizing it in this way so that data prep is not mixed in with the model is hugely valuable.

The Trainer then allows us to use the dataset and the model from the above 2 classes together, without having to write much more engineering or boilerplate code.

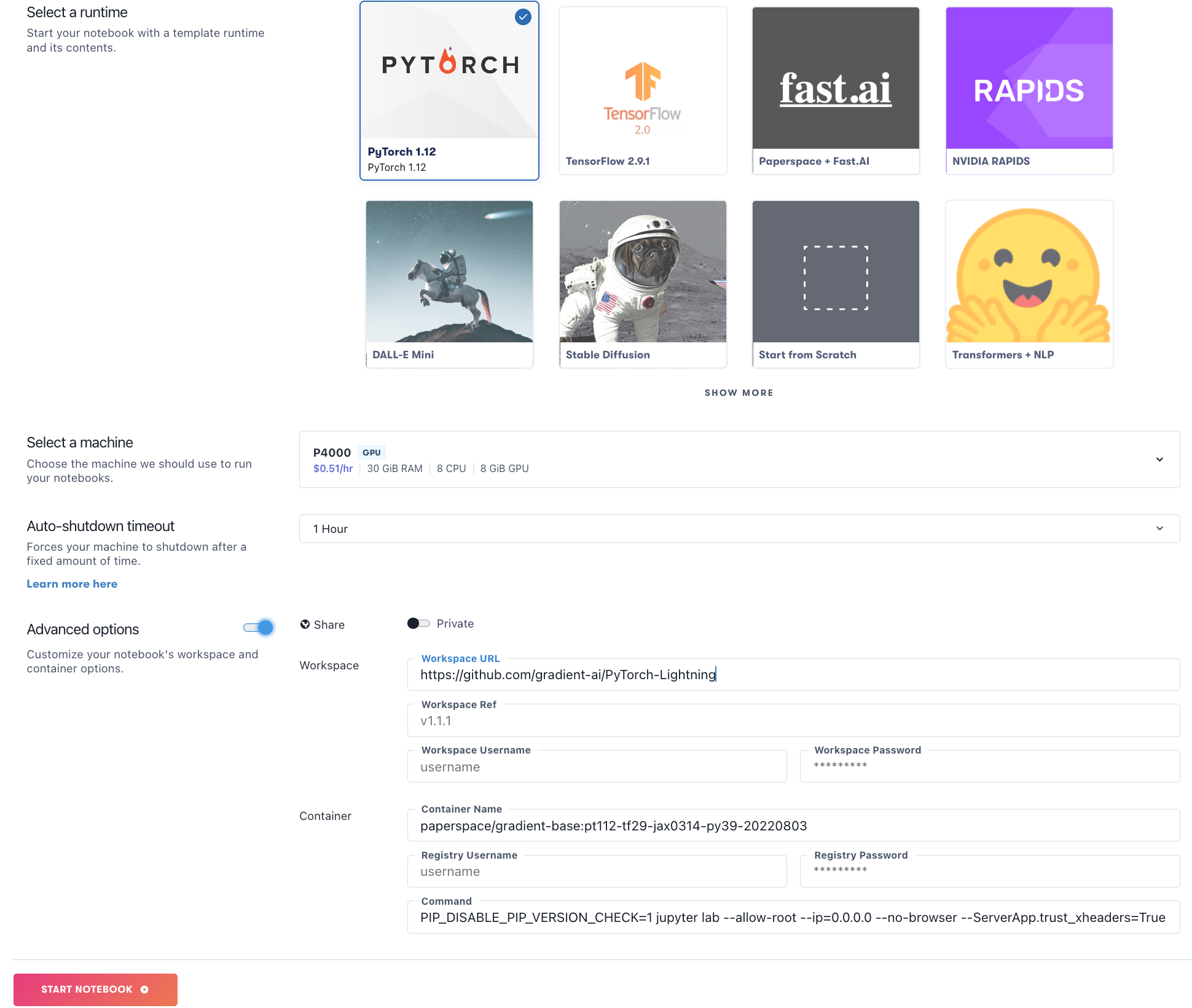

Running Lightning on Paperspace

To run Lightning on Paperspace, once signed in, simply spin up a Gradient Notebook, choose a machine, point to our repo containing example .ipynb notebooks, and we're ready to go. It's as simple as that!

Alternatively, we can also launch the notebooks with just a click using the Run on Gradient button below

Bring this project to life

For more information on running notebooks on Gradient, visit our Notebooks tutorial.

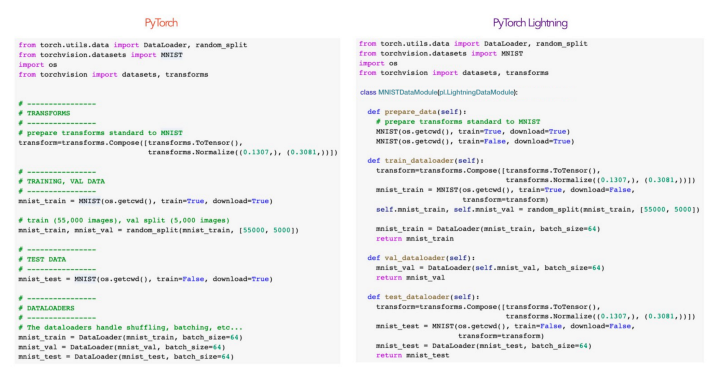

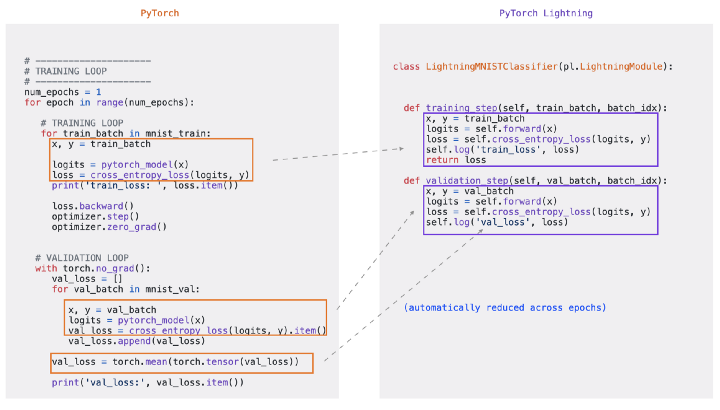

Example of code being simplified

These two screenshots, from the blog entry introducing Lightning by its author, show a typical example of code being simplified.

In the first image, an ad hoc linear arrangement of data preparation and splitting into train/validation/test sets is encapsulated in a DataModule. Each preparation step becomes its own method.

In the second image, a screenful of mostly boilerplate code to instantiate a model training loop is simplified into a LightningModule less than half its length, with the training and classification steps in turn becoming class methods rather than for and with loops.

While the overhead can appear quite large for simple examples, when data preparation on full-sized real projects begins to take many screens of code, this organization and consequent modularization with reusability only becomes more valuable.

The Lightning documentation also has great animated examples showing in more detail the code arrangements and simplifications through a typical data preparation plus model training process.

There's a lot more

This blog entry is a brief and basic overview of Lightning and running it on Paperspace. There is a lot more functionality waiting to be explored, that either comes for free or is easy to activate without having to write a lot more code.

- Checkpoint saving and loading

- Epoch and batch iteration

- Multi-GPU support

- Log experiments to TensorBoard

- Does the optimizer step, backpropagation and zero-out-the-gradients calls

- Disables gradients during model evaluation

- TPU and Graphcore IPU hardware accelerators

- 16-bit automatic mixed precision (AMP)

- Export to ONNX or Torchscript for model deployment

- Integration with DeepSpeed

- Profiler to find code bottlenecks

Finally, a companion online presence to Lightning, Lightning Bolts (website, docs, repo) contains a range of extensions and models that can be used with Lightning to further add to its capabilities.

Documentation & tutorials

As a data scientist with over 20 years' experience, your author has seen documentation for many products and websites. Lightning's documentation and tutorials are certainly among the higher quality and most comprehensive that I have worked with. Everything is covered with quality content, and they "get" what we want to be seeing in terms of content as a data scientist.

It's not a site for complete beginners, as on the first quick start page we are launched straight into PyTorch code, Python classes, and an autoencoder deep learning model. You can follow what's going on without having to be expert at all of these, but it does help to have some familiarity.

Having said that, there is a set of hands-on examples and tutorials, which like the documentation are comprehensive and well presented. The ones labeled 1-13 are based on the 2020 version of the University of Amsterdam deep learning courses.

The tutorials 1-13 begin with introducing PyTorch, and then move on to Lightning, so if you are less familiar with the PyTorch-classes-deep learning trifecta, these would be a good place to go.

All of the tutorials are in .ipynb Jupyter notebook form, and can thus be run on Paperspace Gradient with no setup required.

The full set of documentation on the page is

- Get started

- Level up: basic, intermediate, advanced, and expert skills

- Core API

- API reference

- Common workflows (level-up rearranged)

- Glossary

- Hands-on examples

Conclusions & next steps

We have introduced PyTorch Lightning and shown how it can be used to simplify and organize our real-world deep learning projects.

It assumes some familiarity with PyTorch, Python classes, and deep learning, but includes excellent documentation and tutorials that help us learn PyTorch in addition to Lightning.

In combination with Paperspace's ease of use in getting going with coding, and available GPU hardware, this lets us get straight to solving our data science problems and avoids needing time to set things up and write boilerplate code.

For next steps, try running the tutorials on Paperspace, or visit the websites below to learn more:

and, outside of Lightning itself: