Intro to Optimization in Deep Learning: Vanishing Gradients and Choosing the Right Activation Function

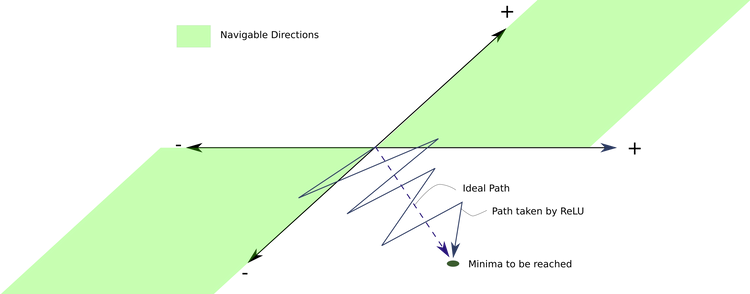

A look at how activation functions such as ReLU, PReLU, RReLU and ELU are used to address the vanishing gradient problem, and how to choose among them for your network.