In the modern era of high computational power and advanced technological advancements, we are able to achieve many of the complex tasks that would once be deemed impossible. Generative networks are one of the strongest talking points of the previous decade due to the gravity of the fabulous results that they have been able to generate successfully. One of the tasks that we will discuss in this article, which was also once considered quite complex to achieve, is the generation of realistic faces with the help of generative adversarial networks. There have been multiple successful deployments of models and different neural architectural builds that have managed to accomplish this task effectively.

This article will focus on understanding some of the basic concepts of facial generation with the help of generative adversarial networks. We will try to accomplish the generation of realistic images with the help of Deep Convolutional Generative Adversarial Networks (DCGANs) that we constructed in one of our previous works. Before moving further with this article, I would recommend checking out two of my preceding works to stay updated with the contents of this article. To get started with GANs, check out the following link - "Complete Guide to Generative Adversarial Networks (GANs)" and to gain further knowledge on DCGANs, check out the following link - "Getting Started With DCGANs."

To understand the numerous core concepts that we will cover in this article, check out the table of contents. We will start off with an introduction to the generation of facial structures with GANs and then proceed to gain further knowledge on some of the pre-existing techniques that have been successfully deployed to achieve the best results possible for these tasks. We will have a quick overview of the DCGANs architecture and methodologies to approach the following task. And then have a code walkthrough to discuss the various steps to follow to achieve a desirable result. Finally, we will conclude with some much-needed sections of the future works and the types of improvements and advancements that we can make to the built architecture model.

Introduction:

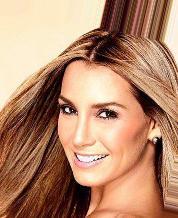

The growth of Generative Adversarial Networks (GANs) is rapid. The continuous advancements in these dual neural network architectures, consisting of a generator model and discriminator, help to stabilize outputs and generate real images, which become almost next to impossible for the human eye to differentiate. The above 1024x1024 sized image shows the picture of a cheerful woman. Looking at the following image, it would come as a surprise to people new to this spectrum of deep learning that the person does not actually exist. It was generated from the website called this X does not exist, and you can also generate random faces on your own by refreshing the following link. Each time the generator is run, a completely new realistic face is generated.

When I asked a few of my friends to determine what they thought about the particular picture, almost nobody was able to figure out that the following image was generated with the help of Artificial Intelligence (AI). To be precise, the StyleGAN2 architecture used to generate the following faces performs at an exceptional level producing these results indistinguishable from real photographs. Only upon further introspection will you be able to notice slight abnormalities in the following image depicted above. One of the noticeable issues could be the weird shaded-out background. Another issue could be the difference in the two earrings that are worn by her, but it could also be considered as an interesting fashion sense. Small errors like these are still difficult for

It is fun to speculate how the generated image may not be real, but the simple fact we are having the following discussion is enough evidence that the potential for generative networks for a task like facial structure generation or any other similar project is humungous and something to watch out for in the upcoming future. We will explore the enormous potential that these generative networks have to create realistic faces or any other type of images from scratch. The concept of two neural networks competing against each other to produce the most effective results is an intriguing aspect of study in deep learning.

Let us start by understanding some of the methodologies and pre-existing techniques that are currently used in the generation of faces. Once we explore these pre-existing techniques, we will go into greater detail on how we can produce these similar results with the help of deep convolutional generative adversarial networks. So, without further ado, let us proceed to understand and explore these topics accordingly.

Understanding some pre-existing techniques:

The progression of the technology of GANs has been fast-faced and extremely successful in a short span of less than a decade. An adventure started out in 2014 with a simple Generative Adversarial Architecture soon found continuous popularity through the numerous years of advancements and improvements. There are tons of new techniques and methodologies that are constantly being discovered by developers and researchers in this field of generative neural networks. The above image is an indication of the rapid pace of development that is achieved by the improvement in the overall technology and methods used in Generative Adversarial Networks. Let us briefly discuss some of the fantastic techniques that are currently deployed in the world of machine learning for the purpose of realistic face generation.

One of the major architectures that did a fantastic job of accomplishing this task of generating photorealistic faces from scratch was the StyleGAN architecture developed by NVIDIA. While these initial models produced fabulous results to start with, it was in the second iteration of the StyleGAN architectures that these models gained immense popularity. They fixed some of the characteristic artifacts features after thorough research, analysis, and experiments. In 2019, this new version of Style GANs 2 was released with an advanced model architecture and several methods of improvement in their training procedure to achieve the best results till that time. To understand and learn more about this particular architecture, I would suggest looking at the following research paper. Recently, the third iteration of the StyleGAN architecture was released with improved results and further advancements on the type of tasks it is typically built to perform.

Another intriguing concept is of the Face App that performs changes in the type of age of a person by altering the faces is also built with the help of a GAN. Typically to perform these types of tasks, a Cycle GAN architecture is used, which has gained immense popularity as of late. These GANs perform the action of learning the transformation of various images of different styles. The Face App, for example, transforms the generated or uploaded faces into faces of different age groups. We will explore both the Cycle GAN and the Style in future works, but for the purpose of this article, we will stick to our previously discussed DCGANs architecture to achieve the task of generating faces.

Generating Faces With DCGANs:

In this section of the article, we will further explore the concept of DCGANs and how to generate numerous faces with the help of this architecture. In one of my previous articles on DCGANs, we have covered most of the essential concepts required for understanding and solving a typical task with the help of these generative networks. Hence, we will have a brief overview of the type of model we will utilize for solving the task of face generation and achieving the best results. Let us get started with the exploration of these concepts.

Overview of DCGANs:

While the basic or vanilla Generative Adversarial Networks produced some of the best works in the early days of generative networks, these networks were not highly sustainable for more complex tasks and were not as computationally effective for performing certain operations. For this purpose, we use a variety of generative network architectures that are built for specific purposes and perform a particular action more effectively than others. In this article, we will utilize the DCGANs model with a deep convolutional generator architecture to generate images. The generator with a random latent noise vector will generate the facial structures, and the discriminator, which acts as an image classifier, will distinguish if the output image is real or fake.

While the working procedure of the deep convolutional generative adversarial networks is similar to the workings of a typical GAN type network, there are some key architectural improvements that were developed to produce more effective results on most datasets. Most of the pooling layers were replaced with strided convolutions in the discriminator model and fractional-strided convolutions in the case of the generator models. The use of batch normalization layers is extensively used in both the generator and discriminator models for obtaining the stability of the overall architecture. Most of the later dense layers, i.e., the fully connected layers, were removed for a fully convolutional type architecture.

All the convolutional layers in the discriminator model are followed by the Leaky ReLU activation function other than the final layer with Sigmoid for classification purposes. The generator model makes use of the ReLU activation function in all layers except the final layer, which uses the tanh function for generative purposes. However, it is noticeable that we have made certain small changes in our generator architecture as these are some minute alterations in modern time that have shown some better results in specific use cases. The viewers and developers can feel free to try out their own variations or stick to the default archetypes for their respective projects according to their requirements.

Procedure To Follow:

The procedure we will follow for the effective construction of this project is to ensure that we collect the best datasets for the required task. Our options are to utilize high-quality datasets that are available on the internet or stick to some other alternatives for performing these tasks. We will utilize the Celeb Faces Attributes (CelebA) Dataset to develop our facial recognition generative networks. The following dataset is available on Kaggle, and it is recommended that you download it to continue with the remaining contents of the article. If you have an advanced system with the resources to compute high-end problems, then you can try out the Celeb HQ datasets. Also, remember that the Gradient platform on Paperspace is one of the best options for these extensive GPU-related tasks.

Once we have the dataset downloaded, ensure that you extract the img align celeba zip file in the "Images" directory to proceed with the next steps. We will utilize the TensorFlow and Keras deep learning frameworks for this project. If you don't have complete knowledge of these two libraries or want to refresh your basics quickly, I would recommend checking out the following link for TensorFlow and this particular link for Keras. Once we access the dataset, we will construct both the generator and the discriminator models for performing the task of face generation. The entire architectural build will focus on creating the adversarial framework for generating the image and classifying the most realistic interpretations. After the construction of the entire architectural build, we will start the training procedure and save the images accordingly. Finally, we will save the generator and discriminator models so that we can reuse them some other time.

Code walkthrough:

In this section of the article, we will explore how to code our face generator model from scratch so that we can utilize these generator models to obtain high-quality results similar to some of the state-of-the-art methods. It is crucial to note that in this project, we will utilize sizes of lesser dimensions (64x64) so that people at all levels can construct this work and won't have to suffer from the obstacle of graphics limitations. The Gradient platform provided by Paperspace is one of the best utility options for these complex computational projects. If you have better equipment or are looking to produce better-looking results, then I would recommend trying out more HD datasets that are available on the internet. Feel free to explore the options and choose the best ones accordingly. For the purpose of this article, we will stick with a simple smaller dimensional dataset that does not consume too much space. Let us get started with the construction of this project.

Bring this project to life

Importing the essential libraries:

The starting step of most projects is to import all the essential libraries that we will utilize for the development of the required project. For the construction of this face generation model, we will utilize the TensorFlow and Keras deep learning frameworks for achieving our goals. If you are comfortable with PyTorch, that would also be another valid option to develop the project as you desire. If you aren't familiar with TensorFlow or Keras, check out the following two articles that will guide you in detail on how you can get started with these libraries - "The Absolute Guide to TensorFlow" and "The Absolute Guide to Keras." Apart from these fabulous frameworks, we will also utilize other essential libraries, such as matplotlib, numpy, and OpenCV, for performing visualizations, computations, and graphics-related operations accordingly.

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

import numpy as np

import matplotlib.pyplot as plt

import os

import cv2

from tqdm import tqdmYou can feel free to utilize other libraries that you might deem necessary or suitable to perform the following task. You can also add numerous options of graphics tools or visualization graphs to track your progress and ultimately help you in improving the results. Once we have successfully imported all the required libraries that we need, we can proceed to explore the data and construct our model for solving the following task.

Exploring the data:

In this step, we will load the data into a Dataset format and map the details accordingly. The pre-processing class in the Keras allows us to access the flowing of data from a particular directory so that we can access all the images stored in the particular folder. Ensure that once you extract the celeb dataset containing over 200000 images, you place the extracted directory into another directory titled "Images" or any other folder name of your choice. The dataset will flow from the following directory, and we will assign the image size of 64 x 64 for the computation of the following data.

dataset = keras.preprocessing.image_dataset_from_directory(

"Images", label_mode=None, image_size=(64, 64), batch_size=32

)

dataset = dataset.map(lambda x: x / 255.0)The successful execution of the above code cell block should show a result similar to the following statement - "Found 202599 files belonging to 1 classes." You can choose to randomly display an image to check if you have loaded the dataset precisely as planned. The code block below is one of the ways of visualizing your data.

for i in dataset:

plt.axis("off")

plt.imshow((i.numpy() * 255).astype("int32")[0])

break

With the standard exploration of data and combining them effectively into your code, you can proceed to construct the discriminator and generator blocks for the computation of the face generation. Let us cover these in the upcoming sections of the article.

Constructing the Discriminator Model:

Firstly, we will construct the discriminator model for the generative network to classify the images as real or fake. The model architecture of the discriminator is exactly as discussed in the previous section. We will use the image shape of (64, 64, 3), which is the same size as the original images. The leaky ReLU will follow after each convolutional layer of varying filter sizes. Finally, after three blocks of the following combination, we will add a flatten layer, a dropout layer, and finally add the Dense layer with the sigmoid activation function to make the predictions of 0 or 1, for distinguishing between either real or fake images.

discriminator = keras.Sequential(

[

keras.Input(shape=(64, 64, 3)),

layers.Conv2D(64, kernel_size=4, strides=2, padding="same"),

layers.LeakyReLU(alpha=0.2),

layers.Conv2D(128, kernel_size=4, strides=2, padding="same"),

layers.LeakyReLU(alpha=0.2),

layers.Conv2D(128, kernel_size=4, strides=2, padding="same"),

layers.LeakyReLU(alpha=0.2),

layers.Flatten(),

layers.Dropout(0.2),

layers.Dense(1, activation="sigmoid"),

],

name="discriminator",

)Note that if you are performing the following activities for different image sizes, especially for a higher resolution one, you will need to modify some of the parameters, such as the filter size, for a more precise result. However, note that there might be several GPU limitations, and you might encounter a resource exhausted error depending on the type of machine you use on your local system. The Gradient platform on Paperspace is a great option worth considering for higher resolution tasks.

Constructing the Generator Model:

The generator model of the DCGANs will be built, similarly as discussed in the overview section of this article. A few changes we will make are the modifications in the general activation function that are utilized in the generator model. Note that the ReLU functions suggested in the paper are replaced by the Leaky ReLU, similar to the ones that we used in the discriminator, and the final tanh function is replaced with the sigmoid activation. If you are looking at a higher quality of images to construct the project (like 512x512 or 1024x1024), ensure that you make the essential changes in the deconvolutional layer strides accordingly to suit the particular architecture.

latent_dim = 128

generator = keras.Sequential(

[

keras.Input(shape=(latent_dim,)),

layers.Dense(8 * 8 * 128),

layers.Reshape((8, 8, 128)),

layers.Conv2DTranspose(128, kernel_size=4, strides=2, padding="same"),

layers.LeakyReLU(alpha=0.2),

layers.Conv2DTranspose(256, kernel_size=4, strides=2, padding="same"),

layers.LeakyReLU(alpha=0.2),

layers.Conv2DTranspose(512, kernel_size=4, strides=2, padding="same"),

layers.LeakyReLU(alpha=0.2),

layers.Conv2D(3, kernel_size=5, padding="same", activation="sigmoid"),

],

name="generator",

)Once you have created the generator and discriminator models, we can begin the training process of the GANs. We will also generate the images at intervals to ensure that we are receiving a brief idea of the performance of our architectural build.

Training and Generating Images:

The final step of constructing our project is to train the models for a large number of epochs. Note that I have used only five epochs for the following train and saved an image at an interval of 100. The Gradient Tape function will automatically compile both the models accordingly, and the construction phase for the following project can be started. Keep training the model until you are satisfied with the results produced by the generator.

for epoch in range(5):

for index, real in enumerate(tqdm(dataset)):

batch_size = real.shape[0]

vectors = tf.random.normal(shape = (batch_size, latent_dim))

fake = generator(vectors)

if index % 100 == 0:

Image = keras.preprocessing.image.img_to_array(fake[0])

# path = (f"My_Images/images{epoch}_{index}.png")

# cv2.imwrite(path, image)

keras.preprocessing.image.save_img(f"My_Images/images{epoch}_{index}.png", Image)

with tf.GradientTape() as disc_tape:

real_loss = loss_function(tf.ones((batch_size, 1)), discriminator(real))

fake_loss = loss_function(tf.zeros(batch_size, 1), discriminator(fake))

total_disc_loss = (real_loss + fake_loss)/2

grads = disc_tape.gradient(total_disc_loss, discriminator.trainable_weights)

discriminator_optimizer.apply_gradients(zip(grads, discriminator.trainable_weights))

with tf.GradientTape() as gen_tape:

fake = generator(vectors)

generator_output = discriminator(fake)

generator_loss = loss_function(tf.ones(batch_size, 1), generator_output)

grads = gen_tape.gradient(generator_loss, generator.trainable_weights)

generator_optimizer.apply_gradients(zip(grads, generator.trainable_weights))Once your training procedure is complete, you can proceed to save the generator and the discriminator models in their respective variables either in the same directory or another directory of your creation. Note that you can also save these models during the training process of each epoch. If you have any system issues or other similar problems that might affect the complete computation of the training process, the best idea might be to save the models at the end of each iteration.

generator.save("gen/")

discriminator.save("disc/")Feel free to perform other experiments on this project. Some of the changes I would recommend trying out is to construct a custom class for the GANs and build a tracker of discriminator and generator loss accordingly. You can also create your checkpoint systems to monitor the best results and save the generator and discriminator models. Once you are comfortable with the given image sizes, it is worth trying out higher resolutions for better-interpreted results. Let us now look at some of the possible future works that we can focus on for further developments in this sector of neural networks and deep learning.

Future works:

Face generation is a continuously evolving concept. Even with all the discoveries and the new modern technologies that have been developed in the field of generative adversarial networks, there are is an enormous potential to exceed far beyond the confines of simple face generations. In this section of the article, we will focus on the type of future works that are currently happening in the world of deep learning and what your next steps to progress in the art of face generation with generative networks and deep learning methodologies must be. Let us analyze a couple of ideas that will help to innovate and elevate our generative models to even further superior levels.

Further advancements and improvements to our model:

We know that the model that we currently built is capable of generating pretty realistic training images after a good few epochs of training, which could potentially take anywhere between several hours of training to a few days, depending on the type of system that you use. However, we can notice that even though the model performs quite well in generating a large number of faces, the amount of control that we possess upon the choice of generation is quite less as the generator model randomly generates a facial structure. A few major improvements that we can make to our models is to be able to construct them in a way such that a lot of parameters are under our control, and we have the option to stabilize the received outputs.

Some of the parameters and attributes that you can make adjustable are human traits such as gender, age, type of emotion, numerous poses of the head, ethnicity, toning of the skin, color of the hair, the type of accessories worn (such as a cap or sunglasses), and so much more. By making all these characters variable with the Generative Adversarial Network you construct, a lot of variations are possible. An example of performing such action is noticeable in the Face Generator website, where you can generate almost 11,232,000+ variants of the same face with numerous combinations. We can also make such advancements in our training model to achieve superior results while having decent control over the type of facial structures we choose to generate.

Realistic Deepfakes works:

In one of the previous sections, we discussed how the Face App can change the age of a person from a specific range with the help of generative adversarial networks, namely using Cycle GANs. To take these experiments one step further would be to utilize Deepfakes in our projects. By making effective use of artificial intelligence and machine learning, namely using generative networks such as autoencoders and generative adversarial networks, it is possible to generate synthetic media (AI-generated media). This powerful technique can manipulate or generate faces, video content, or audio content, which is extremely realistic, and almost impossible to differentiate if fake or not.

With modern Deepfakes computations, you can generate a face and make the same generated face perform numerous actions such as an intriguing dialogue delivery or a facial movement that actually never existed. There is enormous scope for combining our generative neural network architecture with other similar technologies to create something absolutely fabulous and unexpected. One of the future experiments worth trying out is to construct a generative model that can generate high-quality faces with high precision and realism. Using this generated face and combining it with Deepfakes technology, a lot of accomplishments and fun projects are possible. Feel free to explore the various combinations and permutations that these topics offer the developers to work with accordingly.

Conclusion:

The generation of faces with exceptional precision and the realistic display is one of the greatest accomplishments of generative adversarial networks. From deeming the following task of a facial generation to be almost impossible a few decades ago, to generating an average facial generation in 2014, to the continuous progression of these facial structures that look so realistic and fabulous is a humungous achievement. The possibilities for these generative neural networks to take over a wide portion of deep learning and create new areas of study are enormous. Hence, it is of utmost significance for modern deep learning researchers to start experimenting and learning about these phenomenal model architectures.

In this article, we understood the significance of generative adversarial networks on achieving a complex task such as generating realistic faces of humans that have never actually existed. We covered the topic of different pre-existing techniques that are continuously deployed in the modern development phase to achieve the following task successfully. We then studied a brief overview of our previous DCGANs architecture and understood how to deal with face generation with these networks. After an extensive code review for the following, we understood the procedure of face generation with DCGANs. Finally, we explored the enormous potential that these networks have by gaining more knowledge about the future developments and improvements that we can make to these models.

In the upcoming articles, we will further explore the topic of generative adversarial networks and try to learn the different types of GANs that have been developed through the years. One of the next works that we will explore will involve the utility of super-resolution generative adversarial networks (SRGANs). Until then, enjoy researching, programming, and developing!